Seamlessly connect with leading AI and machine learning platforms. Start a free cloud trial to explore Elastic’s gen AI capabilities or try it on your machine now.

In our last post we introduced ELSER v2, discussed its zero shot relevance and the inference performance improvements we made. This blog focuses on how we reduced its retrieval costs.

It has been noted that retrieval can be slow when using scores computed from learned sparse representations, such as ELSER. Slow is a relative term and in this context we mean slow when compared to BM25 scored retrieval. There are two principle reasons for this:

- The query expansion means we're usually matching many more terms than are present in user supplied keyword searches.

- The weight distribution for BM25 is particularly well suited to query optimisation.

The first bottleneck can be tackled at train time, albeit with a relevance retrieval cost tradeoff. There is a regularizer term in the training loss which allows one to penalize using more terms in the query expansion. There are also gains to be had by performing better model selection.

Retrieval cost aware training

When training any model it is sensible to keep the best one as optimisation progresses. Typically the quality is measured using the training loss function evaluated on a hold-out, or validation, dataset. We had found this metric alone did not correlate as well as we liked with zero-shot relevance; so we were already measuring NDCG@10 on several small datasets from the BEIR suite to help decide which model to retain. This allows us to measure other aspects of retrieval behavior. In particular, we compute the retrieval cost using the number of weight multiplications performed on average to find the top-k matches for every query.

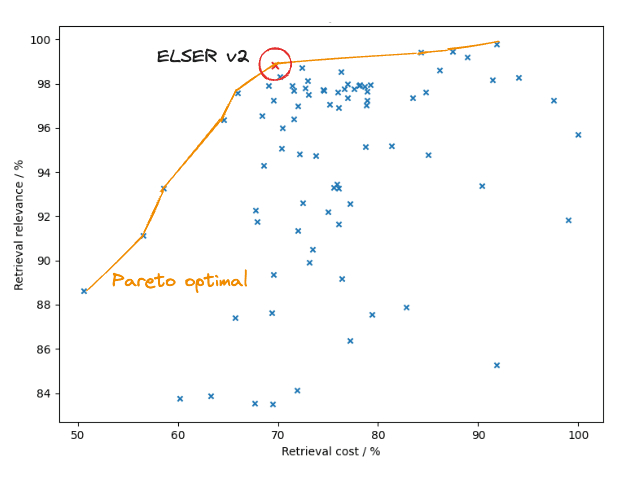

We found that there is quite significant variation between the retrieval cost for relatively small variation in retrieval quality and used this information to identify Pareto optimal models. This was done for various choices of our regularization hyperparameters at different points along their learning trajectories. The figure below shows a scatter plot of the candidate models we considered characterized by their relevance and cost, together with the choice we made for ELSER v2. In the end we sacrificed around 1% in relevance for around a 25% reduction in the retrieval cost.

Performing model selection for ELSER v2 via relevance retrieval cost multi-objective optimization

Whilst this is a nice win, the figure also shows there is only so much it is possible to achieve when making the trade off at train time. At least without significantly impacting relevance. As we discussed before, with ELSER our goal is to train a model with excellent zero-shot relevance. Therefore, if we make the tradeoff during training we make it in a global setting, without knowing anything about the specific corpus where the model will be applied. To understand how to overcome the dichotomy between relevance and retrieval cost we need to study the token statistics in a specific corpus. At the same time, it is also useful to understand why BM25 scoring is so efficient for retrieval.

Optimizing ELSER queries

The BM25 score comprises two factors, one which relates to its frequency in each document and one which relates to the frequency of each query term in the corpus. Focusing our attention on second factor, the score contribution of a term is weighted by its inverse document frequency (IDF) or . Here and and denote the matching document count and total number of documents, respectively. So is just the proportion of the documents which contain that term, modulo a small correction which is negligible for large corpuses.

It is clear that IDF is a monotonic decreasing function of the frequency. Coupled with block-max WAND, this allows retrieval to skip many non-competitive documents even if the query includes frequent terms. Specifically, in any given block one might expect some documents to contain frequent terms, but with BM25 scoring they are unlikely to be competitive with the best matches for the query.

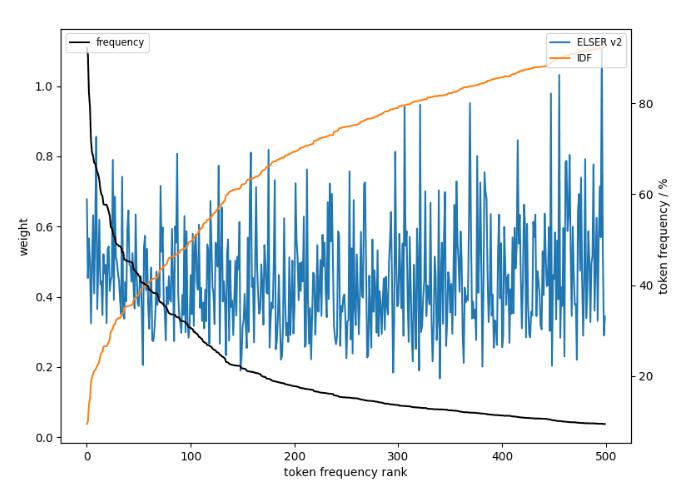

The figure below shows statistics related to the top tokens generated by ELSER v2 for the NFCorpus dataset. This is one of the datasets used to evaluate retrieval in the BEIR suite and comprises queries and documents related to nutrition. The token frequencies, expressed as a percentage of the documents which contain that token, are on the right hand axis and the corresponding IDF and the average ELSER v2 weight for the tokens are on the left hand axis. If one examines the top tokens they're what we might expect given the corpus content: things like “supplement”, “nutritional”, “diet”, etc. Queries expand to a similar set of terms. This underlines that even if tokens are well distributed in the training corpus as a whole, they can end up concentrated when we examine a specific corpus. Furthermore, we see that unlike BM25 the weight is largely independent of token frequency and this makes block-max WAND ineffective. The outcome is retrieval is significantly more expensive than BM25.

Average ELSER v2 weights and IDF for the top 500 tokens in the document expansions of NFCorpus together with the percentage of documents in which they appear

Taking a step back, this suggests we reconsider token importance in light of the corpus subject matter. In a general setting, tokens related to nutrition may be highly informative. However, for a corpus about nutrition they are less so. This in fact is the underpinning of information theoretic approaches to retrieval. Roughly speaking we have two measures of the token information content for a specific query and corpus: its assigned weight - which is the natural analogue of the term frequency term used in BM25 - and the token frequency in the corpus as a whole - which we disregard when we score matches using the product of token weights. This suggests the following simple strategy to accelerate queries with hopefully little impact on retrieval quality:

- Drop frequent tokens altogether provided they are not particularly important for the query in the retrieval phase,

- Gather slightly more matches than required, and

- Rerank using the full set of tokens.

We can calculate the expected fraction of documents a token will be present in, assuming they all occur with equal probability. This is just the ratio where is the total number of tokens in the corpus, is the number of documents in the corpus and is the vocabulary size, which is 30522. Any token that occurs in a significantly greater fraction of documents than this is frequent for the corpus.

We found that pruning tokens which are 5 times more frequent than expected was an effective relevance retrieval cost tradeoff. We fixed the count of documents reranked using the full token set to 5 times the required set, so 50 for NDCG@10. We found we achieved more consistent results setting the weight threshold for which to retain tokens as a fraction of the maximum weight of any token in the query expansion. For the results below we retain all tokens whose weight is greater than or equal to 0.4 × “max token weight for the query”. This threshold was chosen so NDCG@10 was unchanged on NFCorpus. However, the same parameterization worked for the other 13 test datasets we tested, which strongly suggests that it generalizes well.

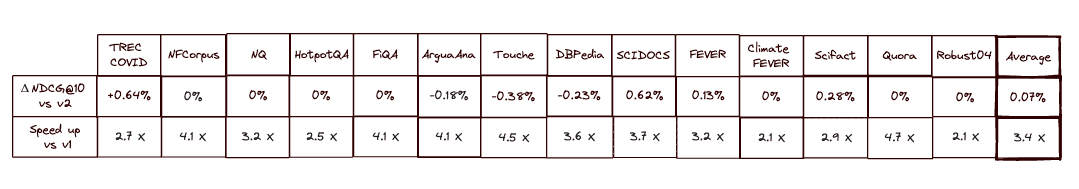

The table below shows the change in NDCG@10 relative to ELSER v2 with exact retrieval together with the retrieval cost relative to ELSER v1 with exact retrieval using this strategy. Note that the same pruning strategy can be applied to any learned sparse representation. However, we view that the key questions to answer are:

- Does the approach lead to any degradation in relevance compared to using exact scoring?

- What improvement in the retrieval latency might one expect using ELSER v2 and query optimization compared to the performance of the text_expansion query to date?

In summary, we achieved a very small improvement(!) of 0.07% in average NDCG@10 when we used the optimized query compared to the exact query and an average 3.4 times speedup. Furthermore, this speedup is measured without block-max WAND. As we expected, the optimization works particularly well together with block-max WAND. On a larger corpus (8.8M passages) we saw an 8.4 times speedup with block-max WAND enabled.

Measuring the relevance and latency impact of using token pruning followed by reranking. The relevance is measured by percentage change in NDCG@10 for exact retrieval with ELSER v2 and the speedup is measured with respect to exact retrieval with ELSER v1

An intriguing aspect of these results is that on average we see a small relevance improvement. Together with the fact that we previously showed carefully tuned combinations of ELSER v1 and BM25 scores yield very significant relevance improvements, it strongly suggests there are benefits available for relevance as well as for retrieval cost by making better use of corpus token statistics. Ideally, one would re-architect the model and train the query expansion to make use of both token weights and their frequencies. This is something we are actively researching.

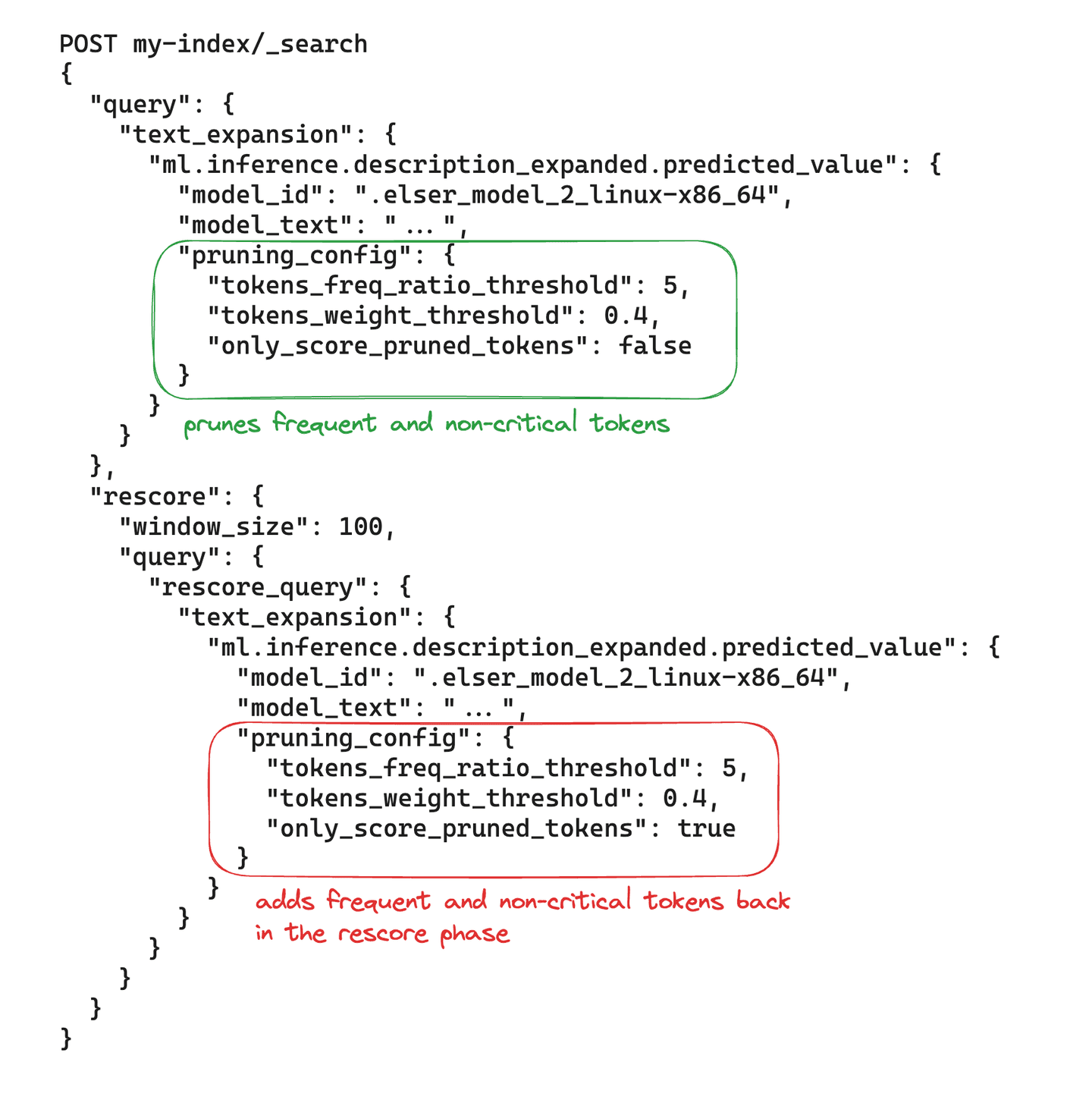

Implementing with an Elasticsearch query

As of Elasticsearch 8.13.0, we have integrated this optimization in the text_expansion query via token pruning so it is automatically applied in the retrieval phase for the text_expansion query.

For versions of Elasticsearch before 8.13.0, it is possible to achieve the same results using existing Elasticsearch query DSL given an analysis of the token frequencies and their weights.

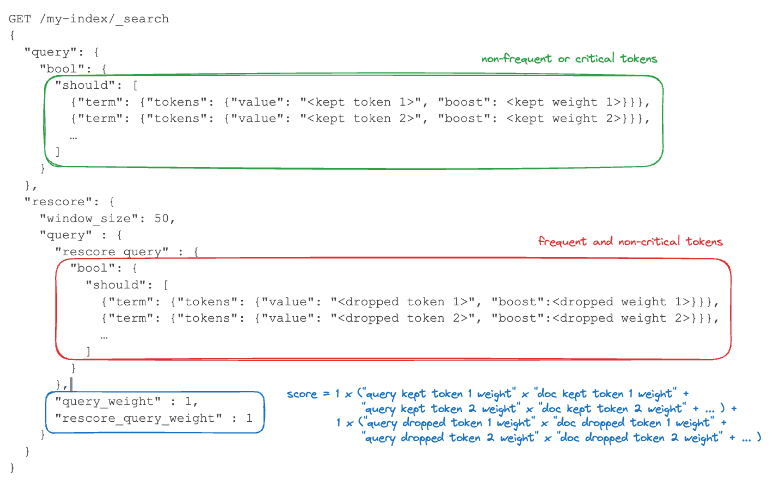

Tokens are stored in the _source field so it is possible to paginate through the documents and accumulate token frequencies to find out which tokens to exclude. Given an inference response one can partition the tokens into a “kept” and “dropped” set. The kept set is used to score the match in a should query. The dropped set is used in a rescore query on a window of the top 50 docs. Using query_weight and rescore_query_weight both equal to one simply sums the two scores so recovers the score using the full set of tokens. The query together with some explanation is shown below.

Conclusion

In these last two posts in our series we introduced the second version of the Elastic Learned Sparse EncodeR. So what benefits does it bring?

With some improvements to our training data set and regularizer we were able to obtain roughly a 2% improvement on our benchmark of zero-shot relevance. At the same time we've also made significant improvements to inference performance and retrieval latency.

We traded a small degradation (of a little less than 1%) in relevance for a large improvement (of over 25%) in the retrieval latency when performing model selection in the training loop. We also identified a simple token pruning strategy and verified it had no impact on retrieval quality. Together these sped up retrieval by between 2 and 5 times when compared to ELSER v1 on our benchmark suite. Token pruning can currently be implemented using Elasticsearch DSL, but we're also working towards performing it automatically in the text_expansion query.

To improve inference performance we prepared a quantized version of the model for x86 architecture and upgraded the libtorch backend we use. We found that these sped up inference by between 1.7 and 2.2 times depending on the text length. By using hybrid dynamic quantisation, based on an analysis of layer sensitivity to quantisation, we were able to achieve this with minimal loss in relevance.

We believe that ELSER v2 represents a step change in performance, so encourage you to give it a try!

This is an exciting time for information retrieval, which is being reshaped by rapid advances in NLP. We hope you've enjoyed this blog series in which we've tried to give a flavor of some of this field. This is not the end, rather the end of the beginning for us. We're already working on various improvements to retrieval in Elasticsearch and particularly in end-to-end optimisation of retrieval and generation pipelines. So stay tuned!

The release and timing of any features or functionality described in this post remain at Elastic's sole discretion. Any features or functionality not currently available may not be delivered on time or at all.

Elastic, Elasticsearch and associated marks are trademarks, logos or registered trademarks of Elasticsearch N.V. in the United States and other countries. All other company and product names are trademarks, logos or registered trademarks of their respective owners.