January 28, 2026

Apache Lucene 2025 wrap-up

2025 was a stellar year for Apache Lucene; here are our highlights.

December 23, 2025

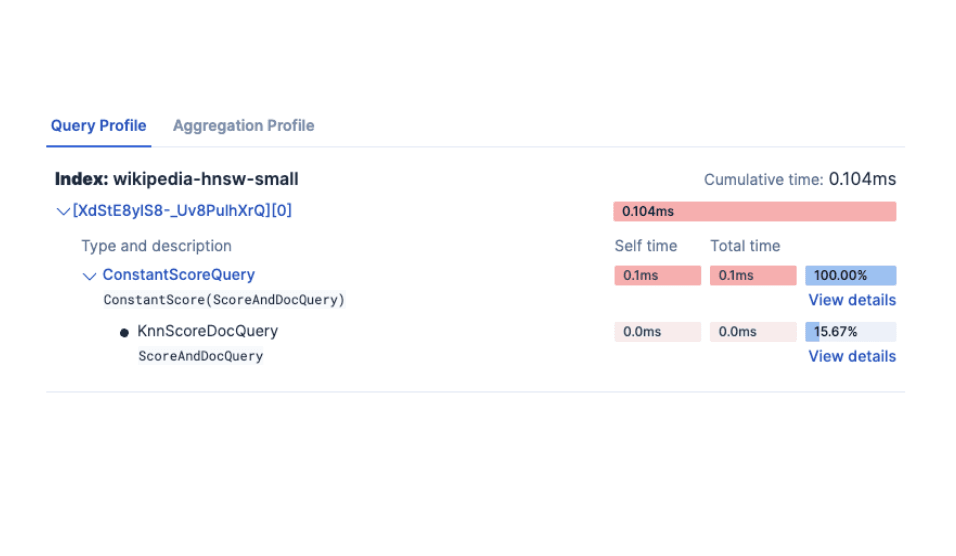

Comparing dense vector search performance with the Profile API in Elasticsearch

Learn how to use the Profile API in Elasticsearch to compare dense vector configurations and tune kNN performance with visual data from Kibana.

December 3, 2025

Up to 12x Faster Vector Indexing in Elasticsearch with NVIDIA cuVS: GPU-acceleration Chapter 2

Discover how Elasticsearch achieves nearly 12x higher indexing throughput with GPU-accelerated vector indexing and NVIDIA cuVS.

November 4, 2025

Multimodal search for mountain peaks with Elasticsearch and SigLIP-2

Learn how to implement text-to-image and image-to-image multimodal search using SigLIP-2 embeddings and Elasticsearch kNN vector search. The example project finds photos of the Ama Dablam peak taken on an Everest trek.

November 3, 2025

Improving multilingual embedding model relevancy with hybrid search reranking

Learn how to improve the relevancy of E5 multilingual embedding model search results using Cohere's reranker and hybrid search in Elasticsearch.

October 23, 2025

Low-memory benchmarking in DiskBBQ and HNSW BBQ

Benchmarking Elasticsearch latency, indexing speed, and memory usage for DiskBBQ and HNSW BBQ in low-memory environments.

October 23, 2025

Introducing a new vector storage format: DiskBBQ

Introducing DiskBBQ, an alternative to HNSW, and exploring when and why to use it.

October 22, 2025

Deploying a multilingual embedding model in Elasticsearch

Learn how to deploy an E5 multilingual embedding model for vector search and cross-lingual retrieval in Elasticsearch.

September 19, 2025

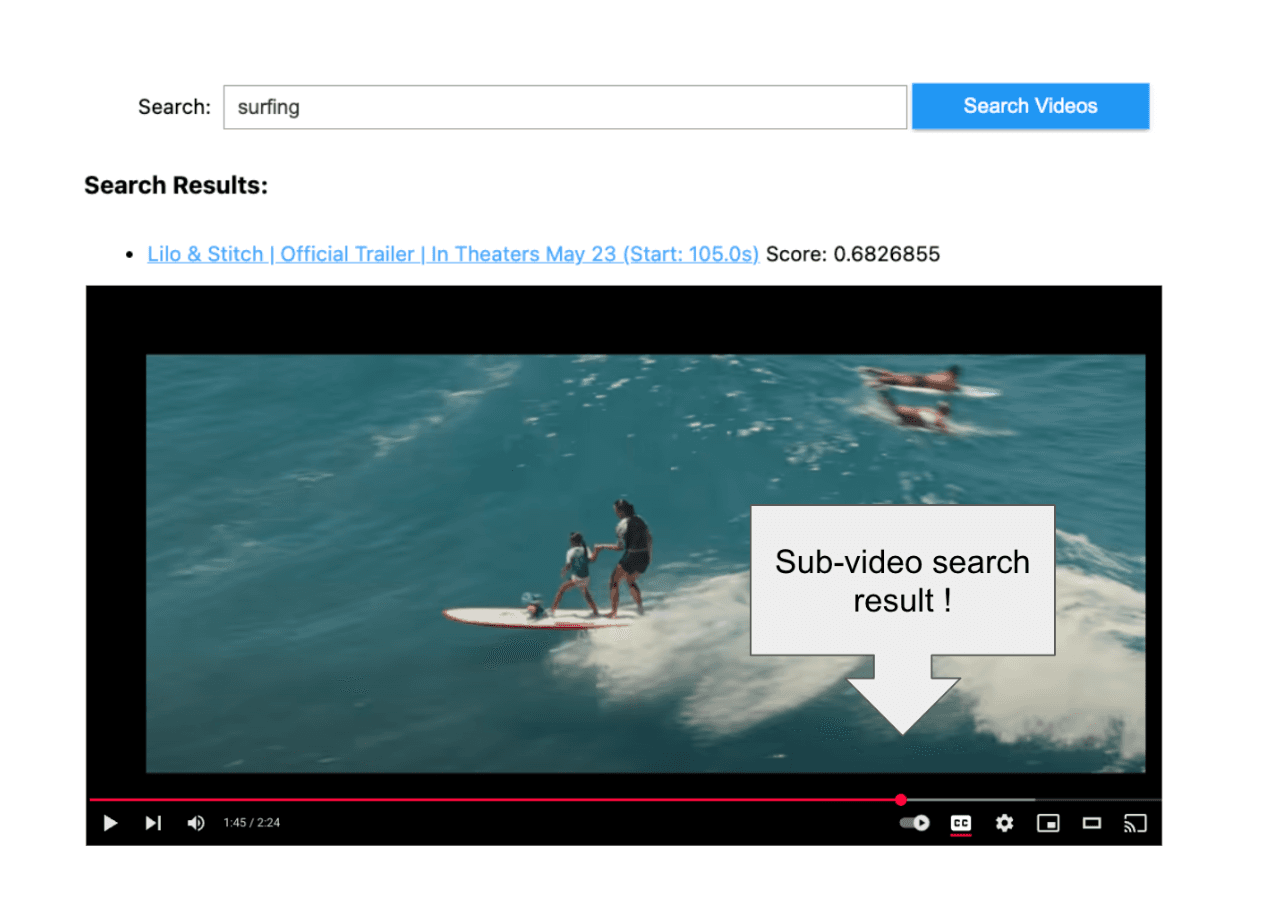

Using TwelveLabs’ Marengo video embedding model with Amazon Bedrock and Elasticsearch

Create a small app that searches video embeddings from TwelveLabs' Marengo model.