Do you keep hearing about AI agents but aren't quite sure what they are or how they connect to Elastic? Here I dive into AI agents, specifically covering:

- What is an AI agent?

- What problems can be solved using AI agents?

- An example agent for travel planning, available on GitHub, built with AI SDK, TypeScript and Elasticsearch.

What is an AI agent?

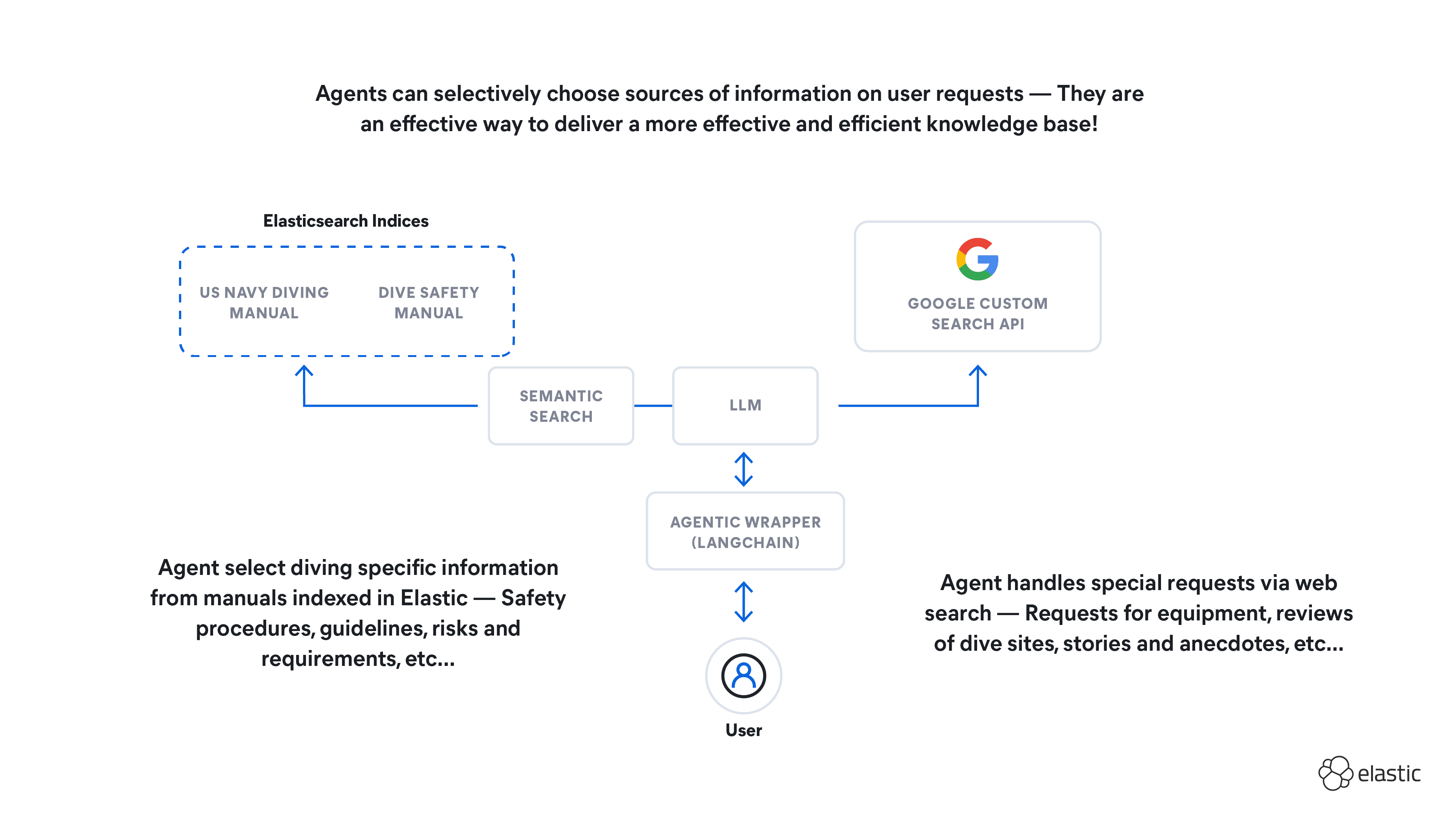

An AI agent is software that can perform tasks autonomously and take actions on behalf of a human by leveraging artificial intelligence. It achieves this by combining one or more LLMs with tools (or functions) that you define to perform particular actions. Example actions in these tools could be:

- Extracting information from databases, sensors, APIs or search engines such as Elasticsearch.

- Performing complex calculations whose results can be summarized by the LLM.

- Making key decisions based on various data inputs quickly.

- Raising necessary alerts and feedback based on the response.
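To make the idea of "an LLM plus tools" concrete, here is a minimal, dependency-free sketch. It is not the AI SDK API: the names toolRegistry, fakeModel and runAgent are hypothetical, and the "model" is a hard-coded stub standing in for a real LLM that decides which tools to call.

```typescript
// A tool is a named function with a description the model can read.
type AgentTool = {
  description: string;
  execute: (args: Record<string, string>) => string;
};

type ToolCall = { toolName: string; args: Record<string, string> };

// Hypothetical registry holding a single illustrative tool.
const toolRegistry: Record<string, AgentTool> = {
  lookupCapital: {
    description: "Returns the capital city of a country",
    execute: ({ country }) => (country === "France" ? "Paris" : "unknown"),
  },
};

// Stub standing in for an LLM: picks the tools to call for a prompt.
function fakeModel(userPrompt: string): ToolCall[] {
  return userPrompt.includes("France")
    ? [{ toolName: "lookupCapital", args: { country: "France" } }]
    : [];
}

// The agent runs each requested tool and collects the results,
// which a real agent would hand back to the LLM for the final answer.
function runAgent(userPrompt: string): string[] {
  return fakeModel(userPrompt).map((call) => {
    const tool = toolRegistry[call.toolName];
    if (!tool) throw new Error(`Unknown tool: ${call.toolName}`);
    return `${call.toolName}: ${tool.execute(call.args)}`;
  });
}
```

In a real agent the stub is replaced by a model that reads each tool's description and decides for itself whether a call is worthwhile.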

What can be done with AI agents?

AI agents could be leveraged for many different use cases in numerous domains based on the type of agent you build. Possible examples include:

- A utility-based agent that evaluates actions and makes recommendations to maximize the gain, such as suggesting films and series to watch based on a person's viewing history.

- Model-based agents that make real-time decisions based on input from sensors, such as self-driving cars or automated vacuum cleaners.

- Learning agents that combine data and machine learning to identify patterns and exceptions in cases such as fraud detection.

- Utility agents that recommend investment decisions based on a person's risk appetite and existing portfolio to maximize their return. With my former finance hat on, this could expedite such decisions if accuracy, reputational risk and regulatory factors are carefully weighed.

- Simple chatbots, as seen today, that can access our account information and answer basic questions using natural language.

AI agent example: Travel Planner

To better understand what these agents can do, and how to build one using familiar web technologies, let's walk through a simple example of a travel planner written using AI SDK, TypeScript and Elasticsearch.

Architecture

Our example comprises the following elements:

- A tool named weatherTool that pulls weather data for the location specified by the questioner from Weather API.

- A tool named fcdoTool that provides the current travel status of the destination from the GOV.UK Content API.

- A tool named flightTool that pulls the flight information from Elasticsearch using a simple query.

- The LLM gpt-4o, to which all of the above information is then passed.

Model choice

When building your first AI agent, it can be difficult to figure out which model is the right one to use. Resources such as the Hugging Face Open LLM Leaderboard are a good start, and for tool usage guidance you can also check out the Berkeley Function-Calling Leaderboard.

In our case, the AI SDK Prompt Engineering documentation specifically recommends using models with strong tool calling capabilities, such as gpt-4 or gpt-4o. Selecting the wrong model, as I found at the start of this project, can lead to the LLM not calling multiple tools in the way you expect, or even to compatibility errors.

Prerequisites

To run this example, make sure you have completed the prerequisites listed in the repository README.

Basic chat assistant

The simplest AI agent that you can create with AI SDK will generate a response from the LLM without any additional grounding context. AI SDK supports numerous JavaScript frameworks as outlined in their documentation. However, the AI SDK UI library documentation shows that support varies across React, Svelte, Vue.js and SolidJS, and many of the tutorials target Next.js. For this reason, our example is written with Next.js.

The basic anatomy of any AI SDK chatbot uses the useChat hook to send requests to the backend route, by default /api/chat:

The page.tsx file contains our client-side implementation in the Chat component, including the submission, loading and error handling capabilities exposed by the useChat hook. The loading and error handling functionality is optional, but recommended to provide an indication of the state of the request. Agents can take considerable time to respond when compared to simple REST calls, so it's important to keep the user updated on state and to prevent key mashing and repeated calls.
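One way to act on that request state is a small guard that decides whether a new submission should be accepted. The sketch below is framework-free. The ChatStatus values and the canSubmit name are assumptions for illustration, not necessarily the exact values exposed by the useChat hook.

```typescript
// Hypothetical request states; check the hook's documentation for the real ones.
type ChatStatus = "ready" | "submitted" | "streaming" | "error";

// Accept a submission only when no request is in flight and there is input.
function canSubmit(chatStatus: ChatStatus, draft: string): boolean {
  const inFlight = chatStatus === "submitted" || chatStatus === "streaming";
  return !inFlight && draft.trim().length > 0;
}
```

A submit button could then be disabled whenever canSubmit returns false, which stops repeated calls while the agent is still responding.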

Because of the client interactivity of this component, I use the "use client" directive to make sure the component is considered part of the client bundle:

The Chat component will maintain the user input via the input property exposed by the hook, and will send the request to the appropriate route on submission. I have used the default handleSubmit method, which will invoke the /api/chat POST route.

The handler for this route, located in /api/chat/route.ts, initializes the connection to the gpt-4o LLM using the OpenAI provider:

Note that the above implementation will pull the API key from the environment variable OPENAI_API_KEY by default. If you need to customize the configuration of the openai provider, use the createOpenAI method to override the provider settings.

With the above routes, a little help from Showdown to format the GPT Markdown output as HTML, and a bit of CSS magic in the globals.css file, we end up with a simple responsive UI that will generate an itinerary based on the user prompt:

Adding tools

Adding tools to an AI agent means creating custom functions that the LLM can use to enhance the response it generates. At this stage I shall add 3 new tools that the LLM can choose to use when generating an itinerary, as shown in the diagram below:

Weather tool

While the generated itinerary is a great start, we may want to add information that the LLM was not trained on, such as weather. This leads us to write our first tool, whose output can be used not only as input to the LLM, but also as additional data that allows us to adapt the UI.

The created weather tool, for which the full code is shown below, takes a single parameter location that the LLM will pull from the user input. The schema attribute accepts the input and output schemas using the TypeScript schema validation library Zod and ensures that the correct parameter types are passed. The description attribute allows you to define what the tool does to help the LLM decide if it wants to invoke the tool.

You may have guessed that the execute attribute is where we define an asynchronous function with our desired tool logic. Specifically, the location to send to the weather API is passed to our tool function. The response is then transformed into a single JSON object that can be shown on the UI, and also used to generate the itinerary.
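The anatomy described above (a description, a validated location parameter, and an asynchronous execute function that flattens the API response) can be sketched without any dependencies. This is a stand-in rather than the project's actual tool: parseWeatherInput replaces the Zod schema, the RawWeather payload shape is an assumption, and the fetcher is injected so that no real weather API is called.

```typescript
// Hypothetical raw payload; a real weather API response will differ.
type RawWeather = {
  location: { name: string };
  current: { temp_c: number; condition: { text: string } };
};

// Flattened summary handed to both the UI and the LLM (assumed fields).
type WeatherSummary = { location: string; condition: string; temperatureC: number };

// Stand-in for schema validation; the article uses Zod for this step.
function parseWeatherInput(input: unknown): { location: string } {
  const value = (input as { location?: unknown } | null)?.location;
  if (typeof value !== "string" || value.trim() === "") {
    throw new Error("location must be a non-empty string");
  }
  return { location: value };
}

// Builds a tool-like object; the fetcher is injected so it can be stubbed.
function createWeatherTool(fetchWeather: (place: string) => Promise<RawWeather>) {
  return {
    description: "Get the current weather for a location",
    execute: async (input: unknown): Promise<WeatherSummary> => {
      const { location } = parseWeatherInput(input);
      const raw = await fetchWeather(location);
      // Collapse the nested response into a single flat object.
      return {
        location: raw.location.name,
        condition: raw.current.condition.text,
        temperatureC: raw.current.temp_c,
      };
    },
  };
}
```

Injecting the fetcher keeps the transformation logic testable on its own, separate from the network call.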

Given we are only running a single tool at this stage, we don't need to consider sequential or parallel flows. It's simply a case of adding the tools property to the streamText method that handles the LLM output in the original /api/chat route:

The tool output is provided alongside the messages, which allows us to provide a more complete experience for the user. Each message contains a parts attribute that contains type and state properties. Where these properties are of value tool-invocation and result respectively, we can pull the returned results from the toolInvocation attribute and show them as we wish.
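As a rough sketch of that filtering step, the function below collects finished results for a named tool from a list of message parts. The part types are simplified local stand-ins that I have assumed for illustration, and completedToolResults is a hypothetical helper. The SDK's own types carry more fields.

```typescript
// Simplified local stand-ins for message part shapes (assumed, not the SDK types).
type TextPart = { type: "text"; text: string };
type ToolInvocationPart = {
  type: "tool-invocation";
  toolInvocation: {
    toolName: string;
    state: "partial-call" | "call" | "result";
    result?: unknown;
  };
};
type MessagePart = TextPart | ToolInvocationPart;

// Collect finished results for one tool, skipping calls still in flight.
function completedToolResults(parts: MessagePart[], toolName: string): unknown[] {
  return parts
    .filter((part): part is ToolInvocationPart => part.type === "tool-invocation")
    .filter(
      (part) =>
        part.toolInvocation.state === "result" &&
        part.toolInvocation.toolName === toolName
    )
    .map((part) => part.toolInvocation.result);
}
```

The UI can then render each returned result, for example as a weather summary card, next to the generated text.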

The page.tsx source is changed to show the weather summary alongside the generated itinerary:

The above will provide the following output to the user:

FCDO tool

The power of AI agents is that the LLM can choose to trigger multiple tools to source relevant information when generating the response. Let's say we want to check the travel guidance for the destination country. A new tool fcdoGuidance, as per the below code, can trigger an API call to the GOV.UK Content API:

You will notice that the format is very similar to the weather tool discussed previously. Indeed, to include the tool in the LLM output it's just a case of adding it to the tools property and amending the prompt in the /api/chat route:

Once the components showing the output for the tool are added to the page, the output for a country where travel is not advised should look something like this:

LLMs that support tool calling can choose not to call a tool unless they judge it necessary. With gpt-4o both of our tools are being called in parallel. However, prior attempts using llama3.1 would result in a single tool being called depending on the input.

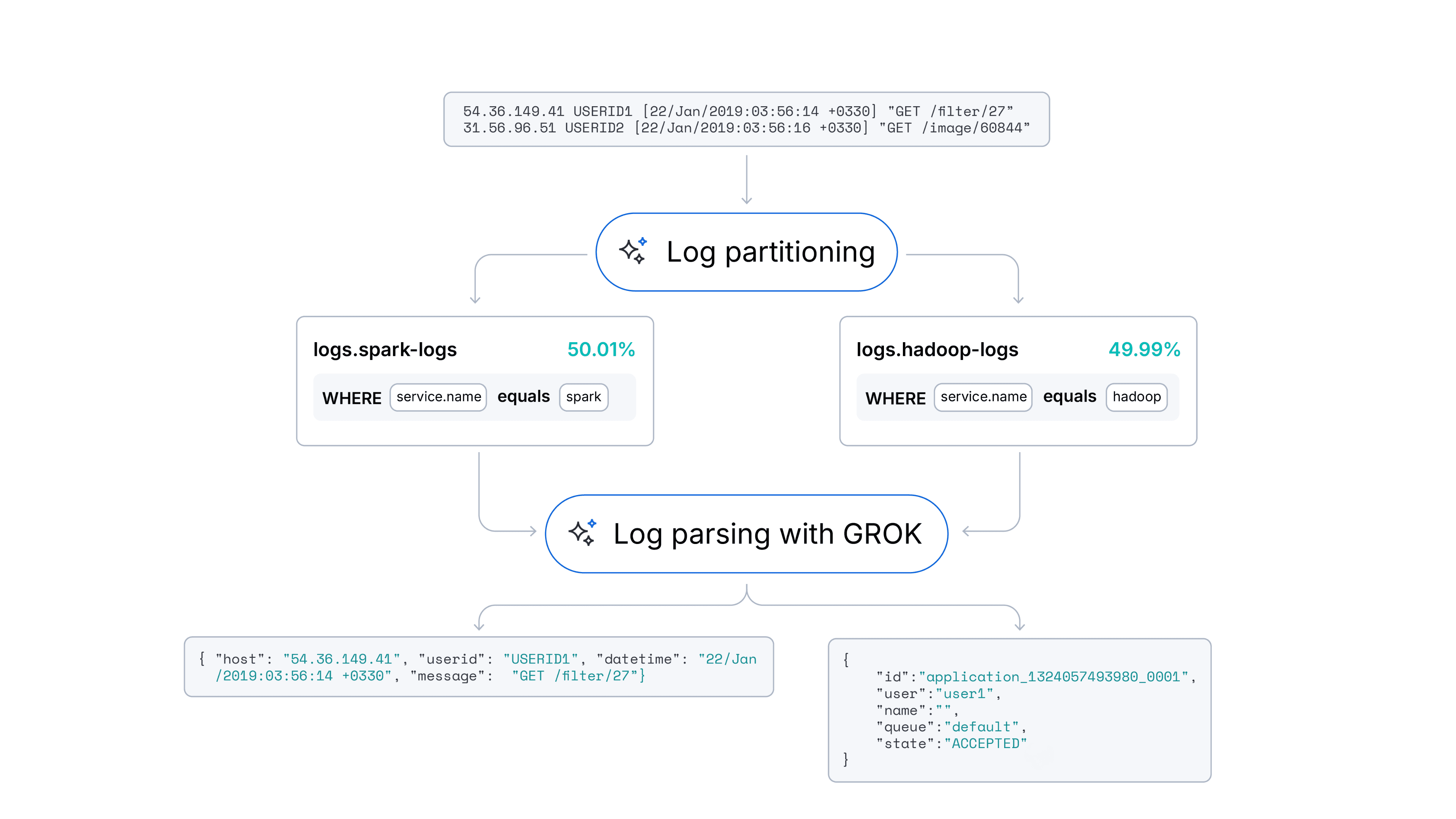

Flight information tool

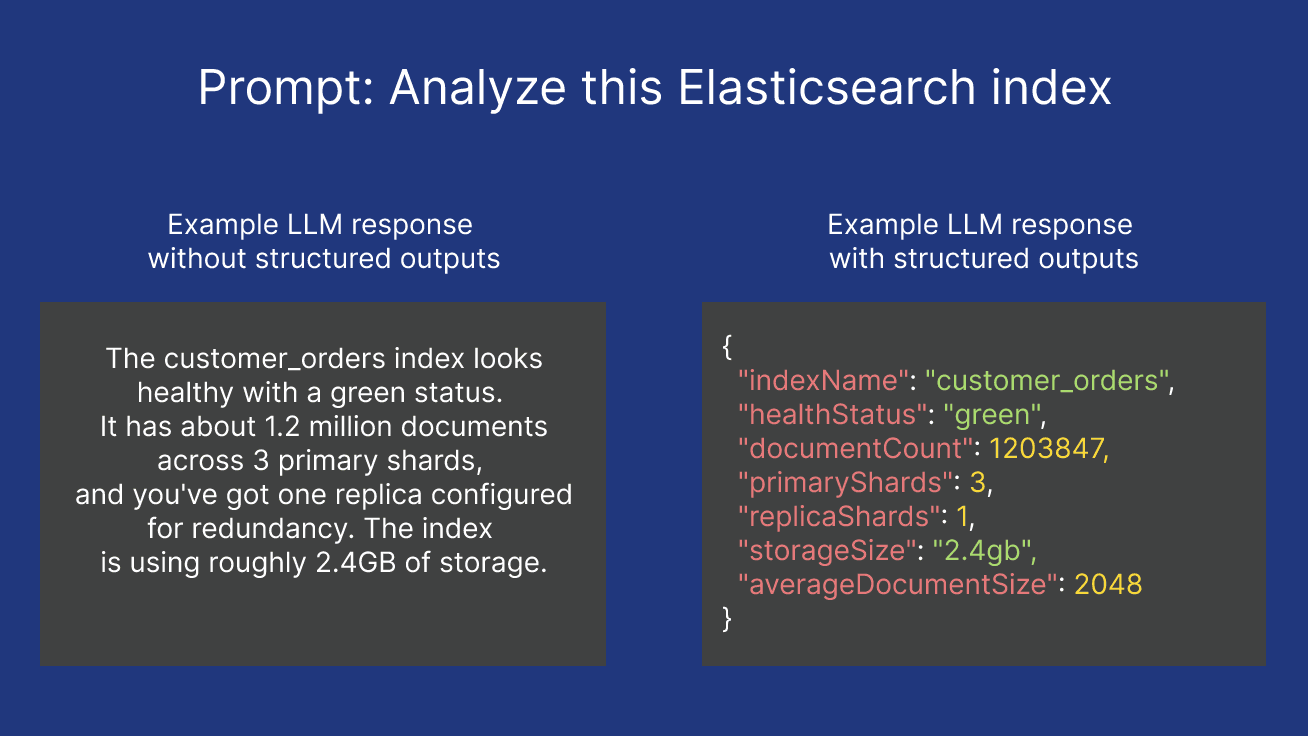

RAG, or Retrieval Augmented Generation, refers to software architectures where documents from a search engine or database are passed as context to the LLM to ground the response in the provided set of documents. This architecture allows the LLM to generate a more accurate response based on data it has not been trained on. While Agentic RAG processes the documents using a defined set of tools, or alongside vector or hybrid search, it's also possible to use RAG as part of a complex flow with traditional lexical search, as we do here.
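The grounding step can be illustrated with a small, self-contained sketch that turns retrieved documents into context for the model. The FlightDoc fields and the buildGroundedPrompt helper are hypothetical. In the travel planner the tool output reaches the LLM through the SDK rather than through hand-built prompt text.

```typescript
// Hypothetical shape for a retrieved flight document.
type FlightDoc = {
  flightNumber: string;
  origin: string;
  destination: string;
  priceGbp: number;
};

// Put the retrieved documents ahead of the question so the model
// answers from them rather than from its training data alone.
function buildGroundedPrompt(question: string, docs: FlightDoc[]): string {
  const context = docs
    .map((d, i) => `[${i + 1}] ${d.flightNumber} ${d.origin} -> ${d.destination}, GBP ${d.priceGbp}`)
    .join("\n");
  return [
    "Answer using only the flight documents below.",
    "If they do not contain the answer, say so.",
    "",
    "Documents:",
    context || "(none)",
    "",
    `Question: ${question}`,
  ].join("\n");
}
```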

To pass the flight information alongside the other tools to the LLM, a final tool flightTool pulls outbound and inbound flights using the provided source and destination from Elasticsearch using the Elasticsearch JavaScript client:

This example makes use of the Multi search API to pull the outbound and inbound flights in separate searches, before pulling out the documents using the extractFlights utility method.
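To show the shape of such a request, here is a hedged sketch of building the two searches and reading the documents back out. The index name, field names and match queries are assumptions rather than the project's actual mapping, and extractFlightDocs is my own stand-in for the extractFlights utility, whose implementation is not shown here.

```typescript
// Hypothetical index and field names; adjust to your own flight mapping.
const FLIGHTS_INDEX = "flights";

type FlightSource = { origin: string; destination: string; flight_number: string };

// Multi search takes alternating header and body entries, one pair per search.
function buildFlightSearches(origin: string, destination: string): object[] {
  const leg = (from: string, to: string) => ({
    query: {
      bool: {
        must: [{ match: { origin: from } }, { match: { destination: to } }],
      },
    },
  });
  return [
    { index: FLIGHTS_INDEX },
    leg(origin, destination), // outbound
    { index: FLIGHTS_INDEX },
    leg(destination, origin), // inbound
  ];
}

// Minimal view of a single item in the multi search response.
type SearchResponseItem = { hits?: { hits: Array<{ _source?: FlightSource }> } };

// Pull the stored documents out of one response, tolerating failed searches.
function extractFlightDocs(item: SearchResponseItem): FlightSource[] {
  return (item.hits?.hits ?? [])
    .map((hit) => hit._source)
    .filter((doc): doc is FlightSource => doc !== undefined);
}
```

With the Elasticsearch JavaScript client, my understanding is that this array would be passed as the searches option of the msearch call, with the outbound and inbound results read from the first and second entries of the returned responses array.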

To use the tool output, we need to amend our prompt and tool collection once more in /api/chat/route.ts:

With the final prompt, all 3 tools will be called to generate an itinerary including flight options:

Summary

If you weren't 100% confident about what AI agents are, now you are! We've covered them with a simple travel planner example built with AI SDK, TypeScript and Elasticsearch. It would be possible to extend our planner to add other sources, allow the user to book the trip along with tours, or even generate image banners based on the location (for which support in AI SDK is currently experimental).

If you haven't dived into the code yet, check it out here!
