Operational Logging at Lyft: Migrating from Splunk Cloud to Amazon ES to Self-Managed Elasticsearch

Looking for an alternative to Splunk? Learn how migrating to Elastic from Splunk can help you unify your observability and security data into a single platform, while decreasing your overall costs and admin overhead.

This post is a recap of a user talk given at Elastic{ON} 2018. Interested in seeing more talks like this? Check out the conference archive or find out when the Elastic{ON} Tour is coming to a city near you.

Want to learn more about the differences between the Amazon Elasticsearch Service and our official Elasticsearch Service? Visit our AWS Elasticsearch comparison page.

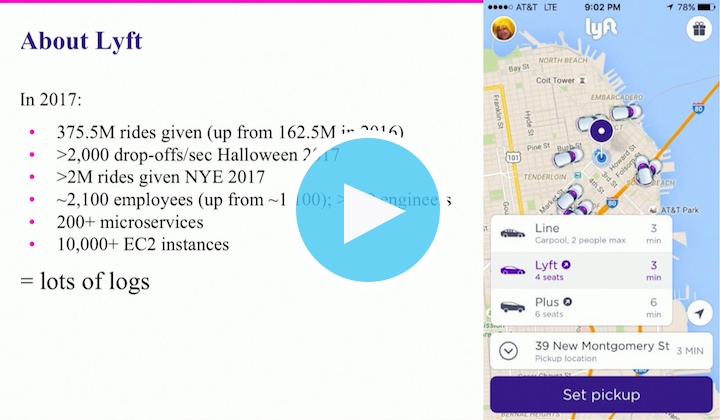

Lyft needs a powerful technological infrastructure to handle hundreds of millions of rides globally each year (more than 375 million in 2017 alone) and to make sure all of their customers get where they need to go. In 2017, that meant 200+ microservices across 10,000+ EC2 instances — numbers that would increase for surge times like New Year’s Eve (>2 million rides in one day) and Halloween (2,000+ rides per minute at peak). To keep all those systems and rides rolling along, it’s important that Lyft engineers have access to all of the operational logs. For Michael Goldsby and his Observability team, that means keeping their log pipeline service flowing.

In Lyft’s early days of log analytics, they used Splunk Cloud as their service provider. But the retention limits of their contract, ingest backups of up to 30 minutes during busy periods, and the costs associated with scaling led Lyft to switch to Elasticsearch.

In late 2016, a team of two engineers was able to transition Lyft off of Splunk and onto Amazon Elasticsearch Service on AWS in about a month. Getting onto Elasticsearch was great, but there were some parts of the AWS offering that weren’t perfect. For one, Goldsby’s team wanted to upgrade to Elasticsearch 5.x, but AWS only offered version 2.3 at that time. Also, new instances were limited to Amazon EBS for storage, which had suboptimal performance and reliability at Lyft’s scale. And not all of the Elasticsearch APIs were exposed on AWS. But these AWS limitations weren’t enough to outweigh the benefits of the transition off of Splunk Cloud.

With ingest limits removed (or at least increased dramatically), the Observability team decided to open the floodgates and adopt a new philosophy: “send us all your logs.” Ingest rates quickly jumped from 100K events per minute to 1.5M events per minute. Things were great under this new approach for about four months. When Goldsby’s team experienced ingest timeouts, retention shrinkage, or slow Kibana dashboards, all they had to do was scale up. And when they hit the AWS node limit of 20, they were given a special exception allowing them to bump up to 40 nodes.

But even with 40 nodes, there were problems. Red cluster problems.

Red clusters were a problem that Goldsby’s team knew how to fix (restart, replace nodes, etc.). The real problem was that they didn’t have direct access to their clusters. Instead, they had to open a ticket with Amazon, sometimes waiting hours for support during peak business periods. The ticket would then need to be escalated, because the first line of support could not access the clusters. Eventually Lyft learned that they could expedite the escalation by going directly to their Technical Account Manager (TAM), who would handle getting the clusters back to green.
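
For readers unfamiliar with the term, "red" is one of the three values (green, yellow, red) that Elasticsearch reports in the `status` field of its `_cluster/health` API. The snippet below is a minimal sketch of how a team with direct cluster access might check that status. It is not Lyft's tooling; the `ES_URL` address and all function names are hypothetical, chosen only for illustration.

```python
import json
import urllib.request

# Hypothetical address; replace with your own cluster's endpoint.
ES_URL = "http://localhost:9200"


def parse_status(body: str) -> str:
    """Extract the 'status' field from a _cluster/health JSON response."""
    return json.loads(body)["status"]


def needs_attention(status: str) -> bool:
    """Return True for a red cluster (at least one primary shard unassigned)."""
    return status == "red"


def fetch_cluster_status(base_url: str = ES_URL, timeout: float = 5.0) -> str:
    """Call GET /_cluster/health on the given cluster and return its status."""
    url = f"{base_url}/_cluster/health"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return parse_status(resp.read().decode("utf-8"))
```

Calling `needs_attention(fetch_cluster_status())` against a reachable cluster would tell an operator whether intervention is needed. On a managed service that hides parts of the API or the nodes themselves, the remediation steps that follow such a check are the ones that required a support ticket.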

Between the unavoidable support interventions, the 2.3 version limit, the EBS requirement, and other AWS-imposed limitations (round-robin load balancing, 60-second timeouts, API obfuscation, etc.), the Observability team decided to transition again — this time to a self-managed Elasticsearch deployment. “[On AWS] you don’t get all the features. You can’t press all the buttons,” said Goldsby. “We felt we had enough operational experience with Elasticsearch in-house…[that] we felt we could do it.”

With a slightly larger team than they used for the Splunk migration, Lyft was able to migrate from Amazon Elasticsearch Service to self-managed Elasticsearch in just two weeks. Now they have their own deployment, as well as all the features that were previously gated in AWS. This also means that they’re in charge of all operational aspects of the Elastic Stack, including keeping their clusters green and their users (co-workers) happy.

Learn how Lyft implemented their self-managed Elasticsearch deployment by watching the talk “Lyft's Wild Ride from Amazon ES to Self-Managed Elasticsearch for Operational Log Analytics” from Elastic{ON} 2018. You’ll hear all about hardware choices, index lifecycle management decisions, and more, including why “log everything ≠ log anything” when it comes to quality of service.