Herding Llama 3.1 with Elastic and LM Studio

The latest LM Studio 0.3 update makes it easier and faster to run Elastic’s AI Assistant for Security with an LM Studio-hosted model. In this blog, the Elastic and LM Studio teams show you how to get started in minutes. You no longer need to set up a proxy if LM Studio runs locally on your machine or on the same network.

About Elastic AI Assistant

Over a year ago, we put the Elastic AI Assistant in the hands of our users, empowering them to harness generative AI to resolve problems and incidents faster. Learn more about the efficiency gains of Elastic AI Assistant.

About LM Studio

LM Studio is an explorer/IDE for local large language models (LLMs), focused on making local AI valuable and accessible while providing developers a platform on which to build.

Users consider local LLMs for several reasons, but chief among them is the ability to leverage AI without giving up sovereignty over their own or their company's data. Other reasons include:

Enhancing data privacy and security

Reducing latency in threat detection

Gaining operational benefits

Securing your organization in the modern threat landscape

LM Studio provides a unified interface for discovering, downloading, and running leading open source models like Llama 3.1, Phi-3, and Gemma locally. With models hosted locally in LM Studio, the SecOps team can use Elastic AI Assistant for context-aware guidance on alert triage, incident response, and more, all without requiring the organization to connect to third-party model hosting services.

How to set up LM Studio

Download and install the latest version of LM Studio.

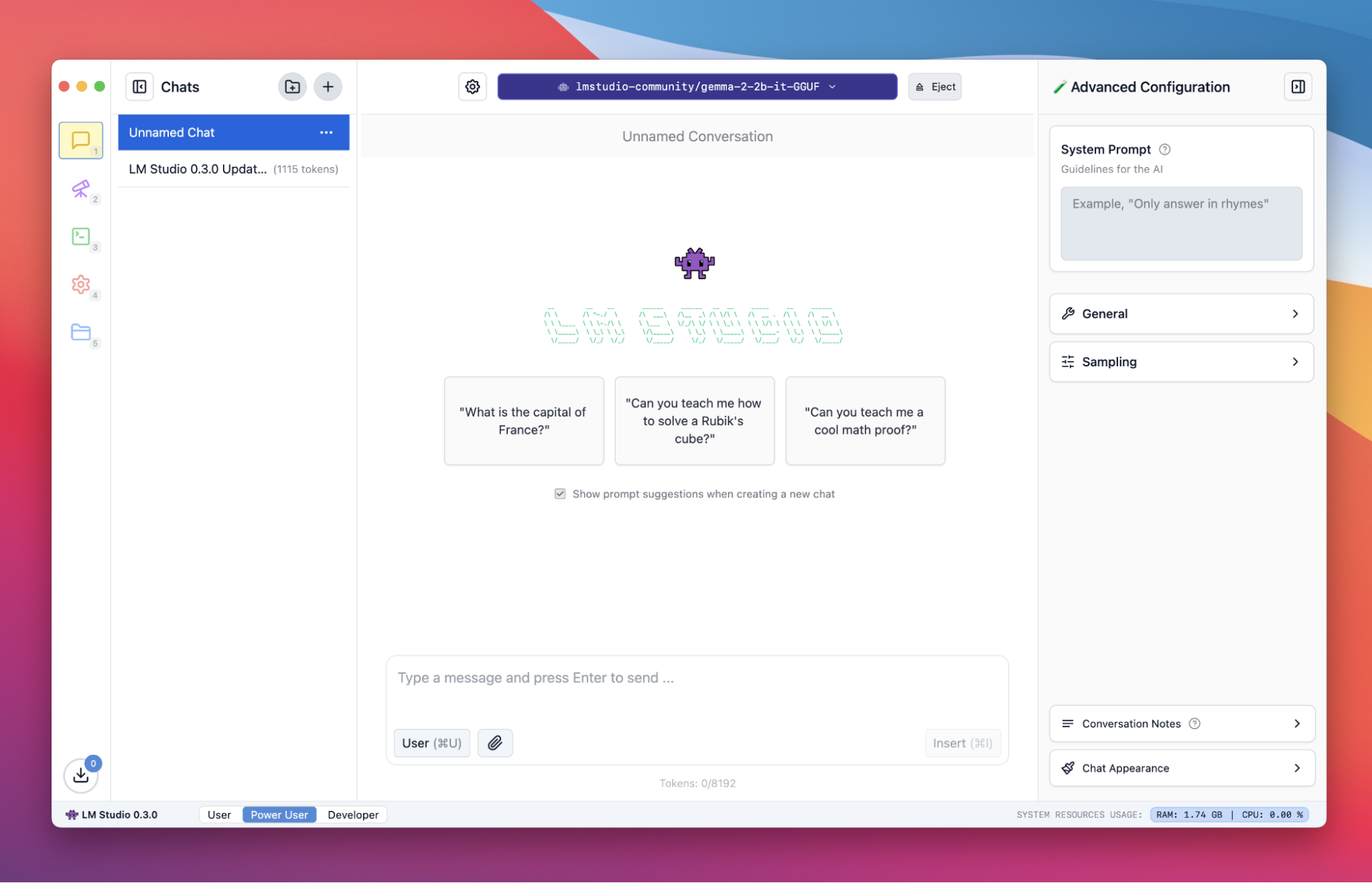

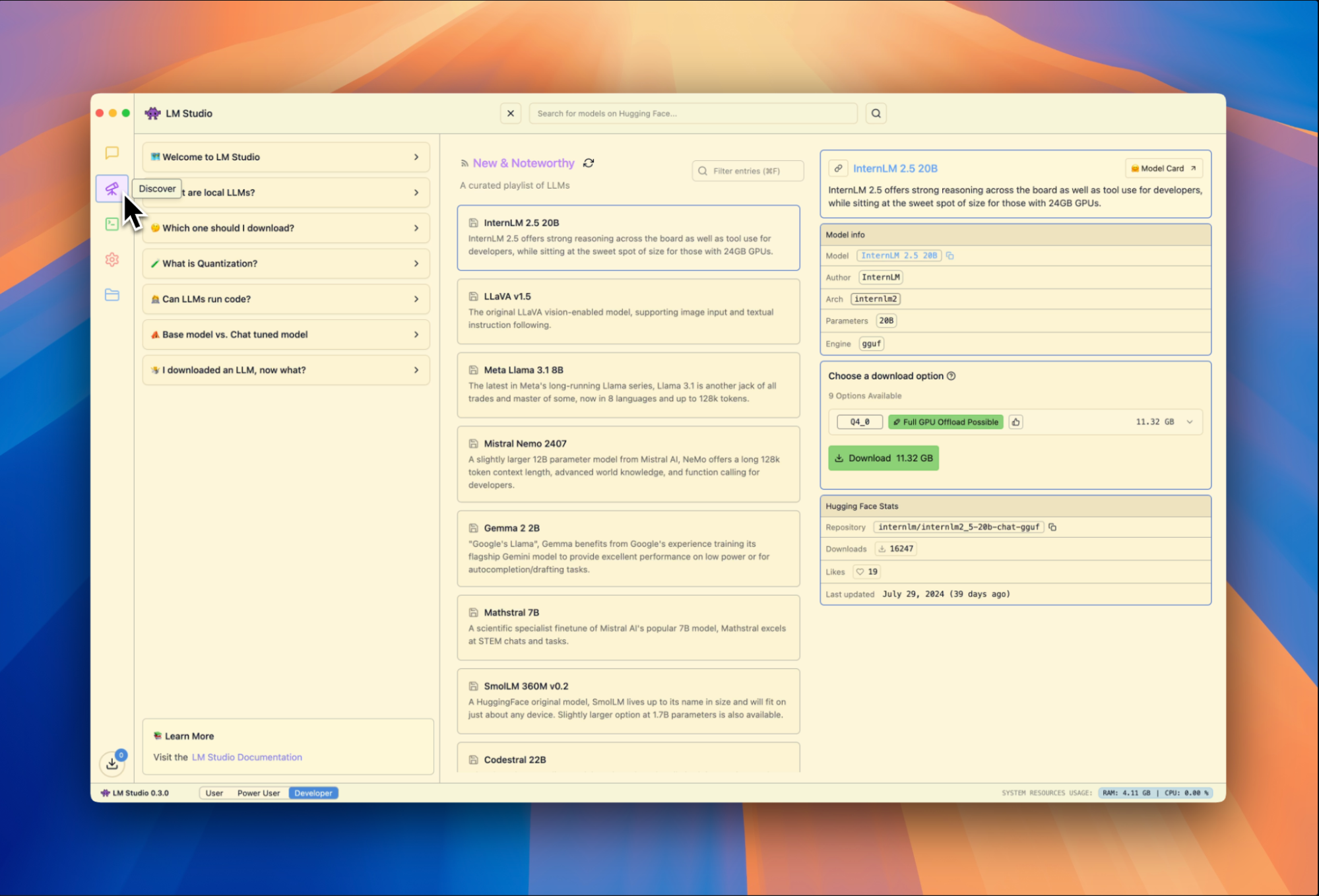

Once installed, download your first model by navigating to the Discover tab. Here, you can search for any LLM from Hugging Face and see a list of popular models curated by LM Studio. We will be setting up the Meta Llama 3.1 8B model for Elastic AI Assistant to leverage.

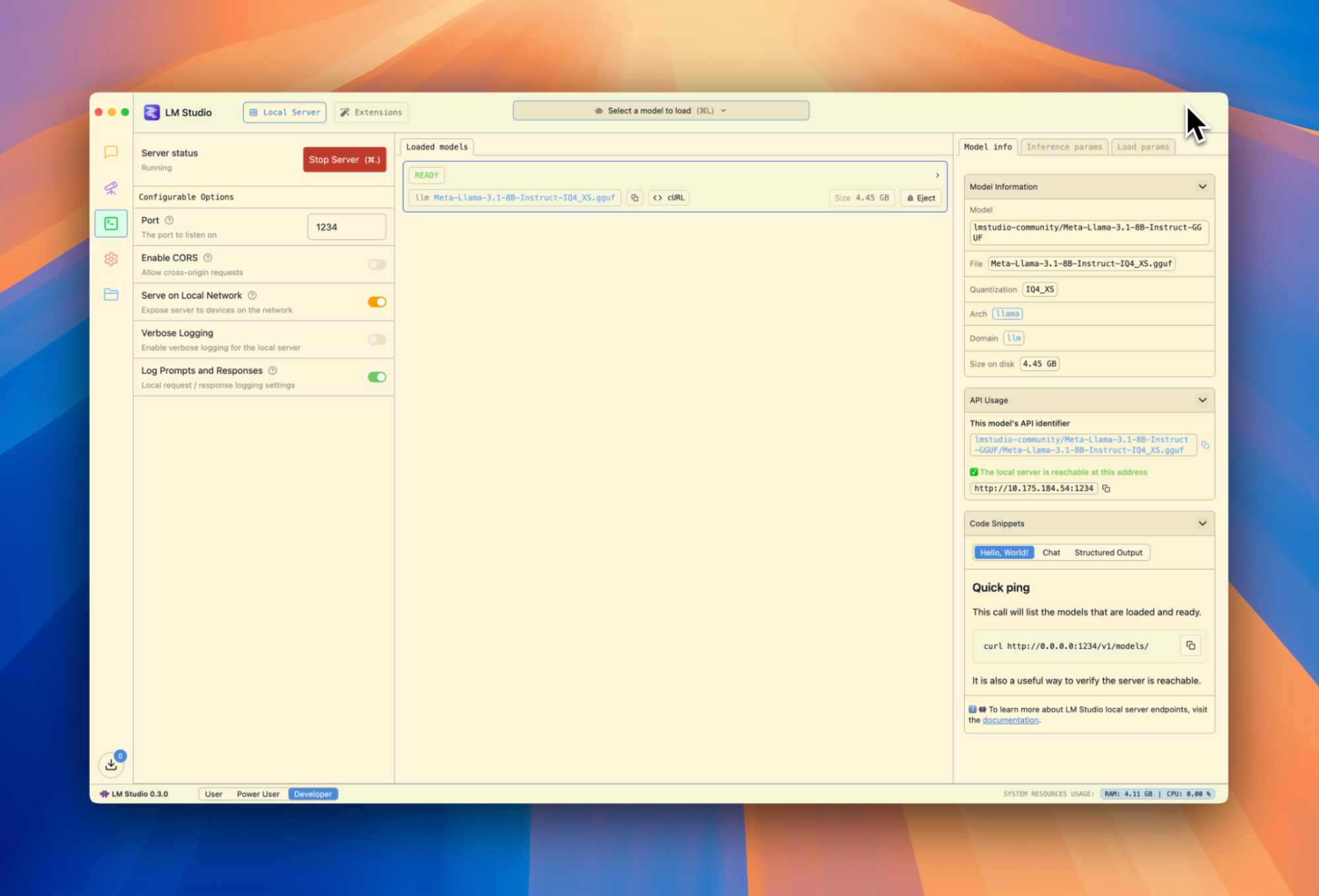

After you’ve picked your model, head to the Developer tab. Here you’ll start a Local Server for the Elastic AI Assistant to connect to. This allows applications or devices on your local network to make API calls to whatever model you run. Requests and responses follow OpenAI's API format.
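Because requests and responses follow OpenAI's API format, a call to the local server can be sketched with nothing but the Python standard library. The snippet below is a minimal sketch, not an official client. It assumes the server listens on `http://localhost:1234` and that the loaded model is identified as `meta-llama-3.1-8b-instruct`; both values, along with the helper names `build_chat_request` and `ask`, are assumptions for illustration, so use whatever the Developer tab shows for your setup.

```python
import json
import urllib.request

# Assumed values: adjust them to match what LM Studio's Developer tab shows.
BASE_URL = "http://localhost:1234/v1"
MODEL_ID = "meta-llama-3.1-8b-instruct"  # hypothetical model identifier


def build_chat_request(prompt, base_url=BASE_URL, model=MODEL_ID):
    """Build an OpenAI-style chat completions request without sending it."""
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful security assistant."},
            {"role": "user", "content": prompt},
        ],
        "stream": False,  # ask for a single JSON response rather than a stream
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, timeout=60):
    """Send the request to the local server and return the reply text."""
    request = build_chat_request(prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    # OpenAI-format responses carry the reply in choices[0].message.content.
    return body["choices"][0]["message"]["content"]


# Example (requires the local server to be running):
# print(ask("What does a brute-force login alert usually indicate?"))
```

Keeping request construction separate from sending makes it easy to inspect the exact JSON before anything goes over the wire.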

Click Start Server, then select Llama 3.1 8B from the dropdown menu. Make sure the Port is set to 1234 (this should already be the default). Finally, turn on Serve on Local Network. This allows any device on your network to connect to LM Studio and call whatever model you run.
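Before moving on to Elastic, it can help to confirm from another machine that the server is reachable on port 1234. The following is a minimal sketch that queries the OpenAI-style model listing endpoint. The IP address `192.168.1.50` in the usage comment is a placeholder, and the helper names are assumptions made for this example.

```python
import json
import urllib.request


def models_url(host, port=1234):
    """Return the OpenAI-style model listing URL for an LM Studio host."""
    return f"http://{host}:{port}/v1/models"


def parse_model_ids(body):
    """Extract model ids from an OpenAI-format model list response."""
    return [model["id"] for model in body.get("data", [])]


def list_models(host, port=1234, timeout=5):
    """Return the model ids the server reports, or None if it is unreachable."""
    try:
        with urllib.request.urlopen(models_url(host, port), timeout=timeout) as resp:
            body = json.load(resp)
    except (OSError, ValueError):
        # OSError covers refused connections and timeouts;
        # ValueError covers a response body that is not valid JSON.
        return None
    return parse_model_ids(body)


# Example (replace the placeholder address with your LM Studio host's IP):
# print(list_models("192.168.1.50"))
```

If `list_models` returns `None` from another device but works on the LM Studio machine itself, the Serve on Local Network toggle or a host firewall is a likely place to look.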

How to set up Elastic AI Assistant

For this setup, we will run Elastic in Docker. We’ll reference the article “The Elastic Container Project for Security Research” from Elastic Security Labs. Instructions are also available on GitHub.

Elastic Docker installation

Install the prerequisites.

Change the default passwords.

Start the Elastic container.

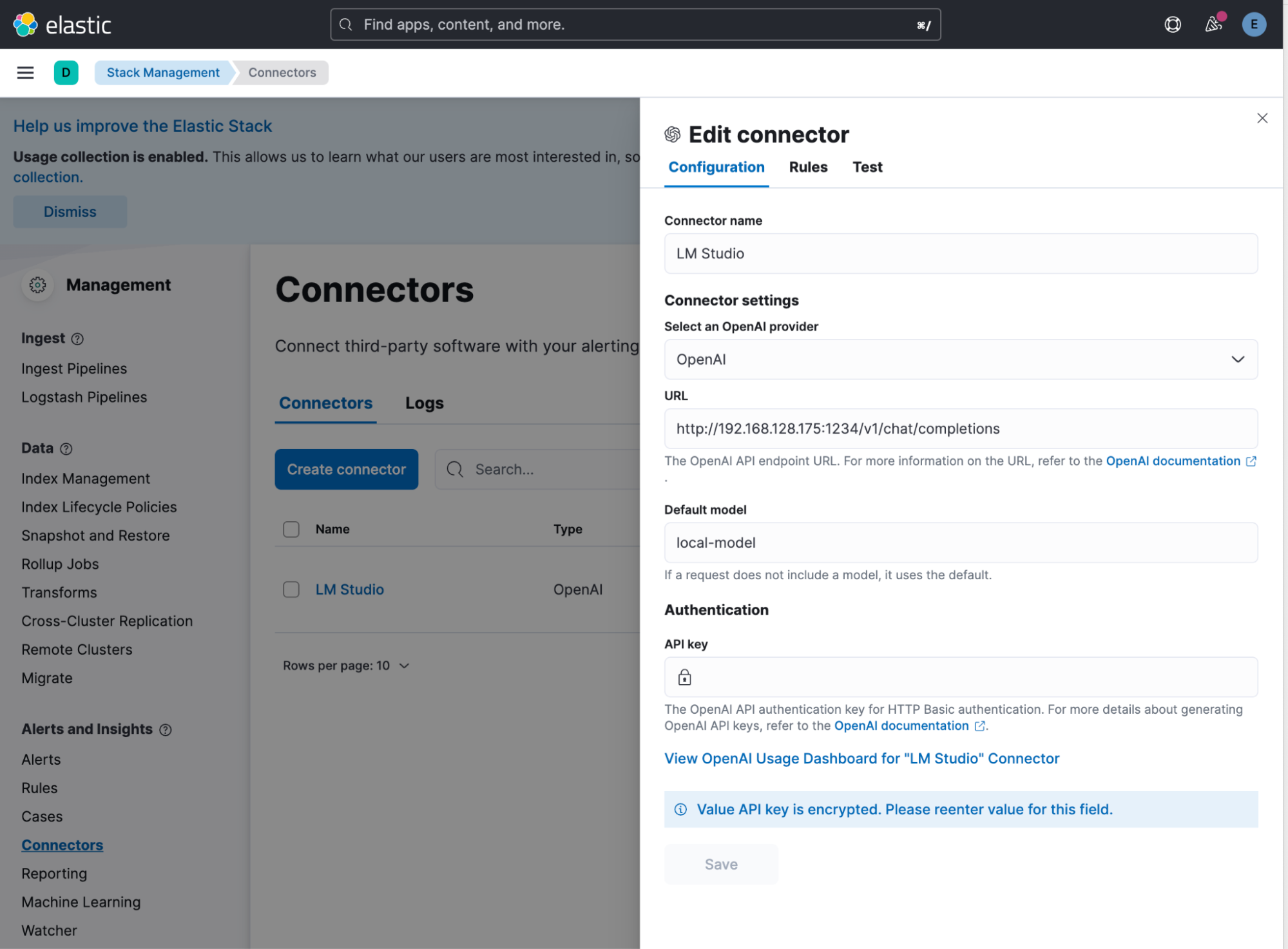

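Once Elastic is up and running, navigate to Stack Management > Connectors and create a connector. Update the URL value to use the IP address of the machine hosting LM Studio; a 127.* address will not route, because inside the Docker container a loopback address refers to the container itself. The API key field can contain any value.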
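The loopback restriction is easy to trip over, so a small check on the URL before pasting it into the connector form may help. This is a sketch under stated assumptions: it presumes the connector targets the OpenAI-style `/v1/chat/completions` path on port 1234, and the function names and the example address `192.168.1.50` are hypothetical.

```python
import ipaddress
from urllib.parse import urlsplit


def is_loopback_url(url):
    """Return True if the URL's host is localhost or a 127.* style address."""
    host = urlsplit(url).hostname
    if host is None:
        return False
    if host == "localhost":
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        # The host is a DNS name rather than an IP literal.
        return False


def connector_url(host_ip, port=1234):
    """Build the connector URL, rejecting hosts the container cannot reach."""
    url = f"http://{host_ip}:{port}/v1/chat/completions"
    if is_loopback_url(url):
        raise ValueError(
            f"{host_ip} is a loopback address; use the host's network IP instead"
        )
    return url


# Example with a placeholder LAN address:
# connector_url("192.168.1.50")
```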

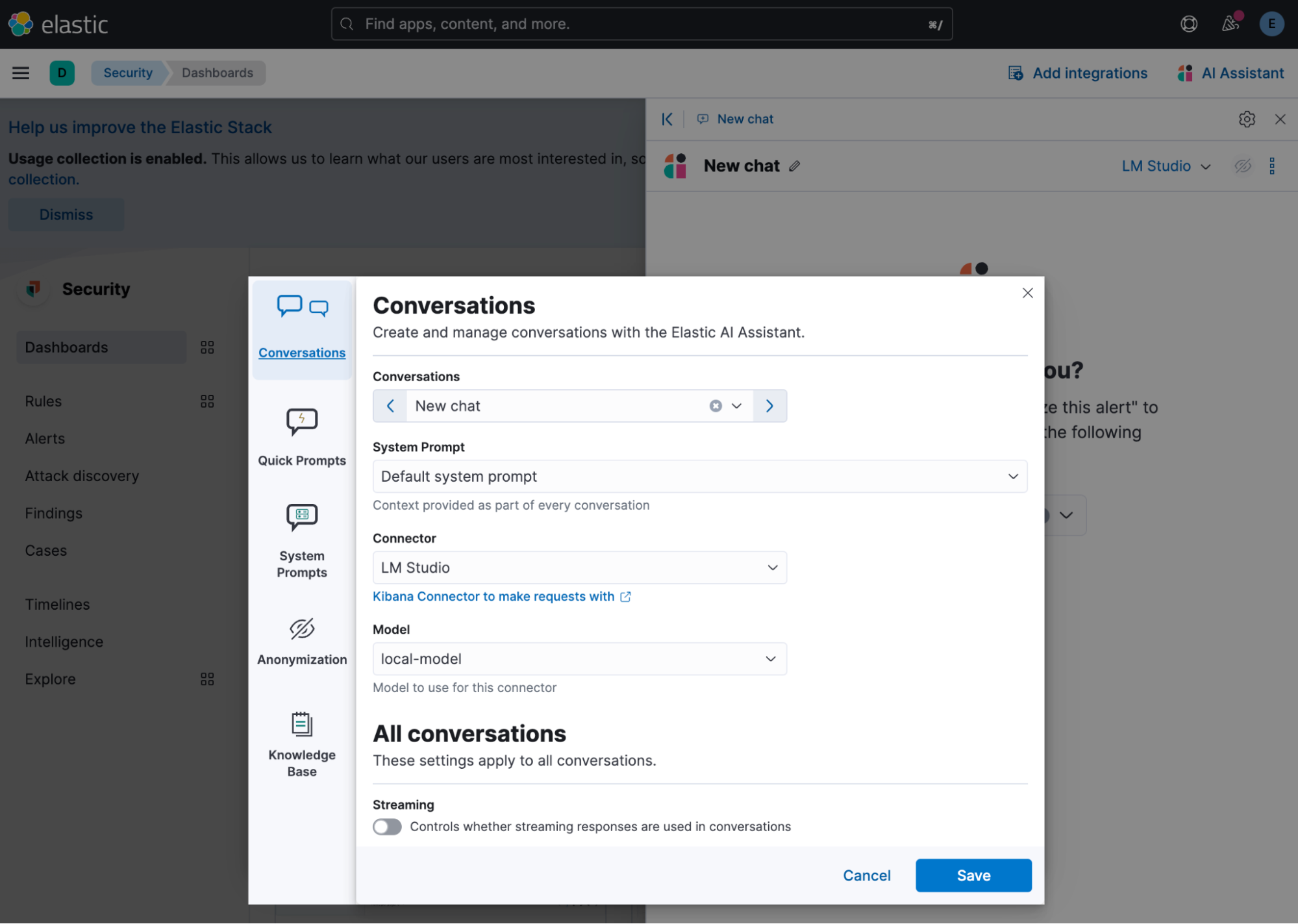

Now open any page in the Security section and launch the Elastic AI Assistant. Select the gear icon at the top right, and under the Conversation tab, turn streaming off.

Voilà! You can now use the model you previously loaded in LM Studio to power the Elastic AI Assistant and accomplish great things!

Elevate your security operations

This blog outlined how to configure Elastic AI Assistant with a locally hosted Llama 3.1 model using LM Studio. The setup augments security analysts' expertise with AI-powered guidance through triage, investigation, and response, improving productivity.

To learn more, check out our recent addition to Elastic Security documentation on how to set up Elastic and LM Studio.

The release and timing of any features or functionality described in this post remain at Elastic's sole discretion. Any features or functionality not currently available may not be delivered on time or at all.

In this blog post, we may have used or referred to third party generative AI tools, which are owned and operated by their respective owners. Elastic does not have any control over the third party tools and we have no responsibility or liability for their content, operation or use, nor for any loss or damage that may arise from your use of such tools. Please exercise caution when using AI tools with personal, sensitive or confidential information. Any data you submit may be used for AI training or other purposes. There is no guarantee that information you provide will be kept secure or confidential. You should familiarize yourself with the privacy practices and terms of use of any generative AI tools prior to use.

Elastic, Elasticsearch, ESRE, Elasticsearch Relevance Engine and associated marks are trademarks, logos or registered trademarks of Elasticsearch N.V. in the United States and other countries. All other company and product names are trademarks, logos or registered trademarks of their respective owners.