Elasticsearch vs. OpenSearch: Unraveling the performance gap

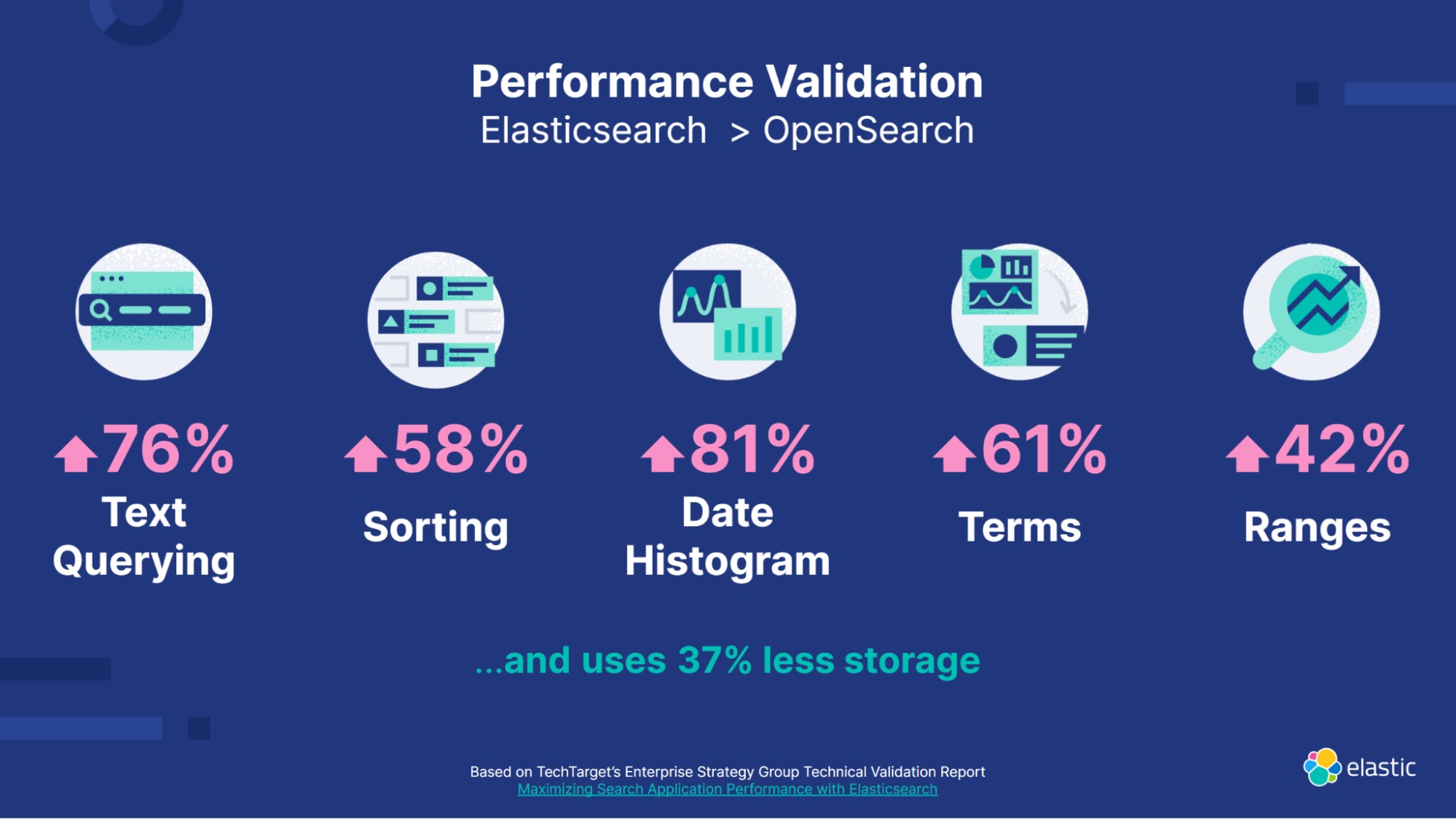

A powerful, fast, and efficient search engine is a crucial element for any organization that relies on searching data quickly and accurately. For developers and architects, choosing the right search platform can greatly influence your organization's capacity to provide fast and relevant results. In our thorough performance testing, Elasticsearch emerges as the clear choice. Elasticsearch is 40%–140% faster than OpenSearch while using fewer compute resources.

In this article, we'll carry out a performance comparison between Elasticsearch 8.7 and OpenSearch 2.7 (the latest versions of both at the time of testing) across six major areas: text querying, sorting, date histogram, range, terms, and resource utilization. Our aim is to provide fair, practical, technical insights that can assist you in making informed decisions, whether you're optimizing an existing system or designing a new one. This comparison is also intended to clearly highlight the differences between Elasticsearch performance and OpenSearch performance, showing that the two are not equivalent.

We will first go over the results of the performance comparison followed by our testing methodology and testing environment.

Results

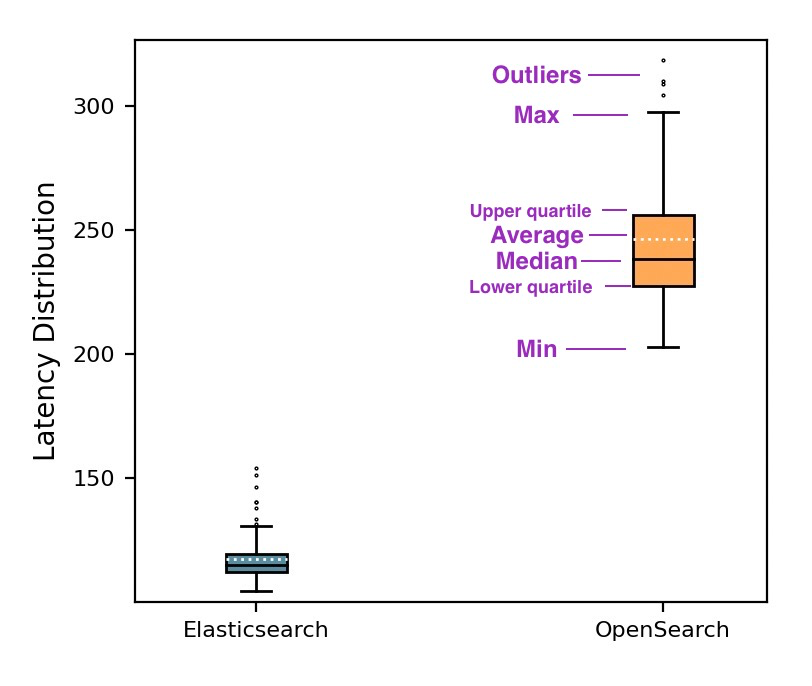

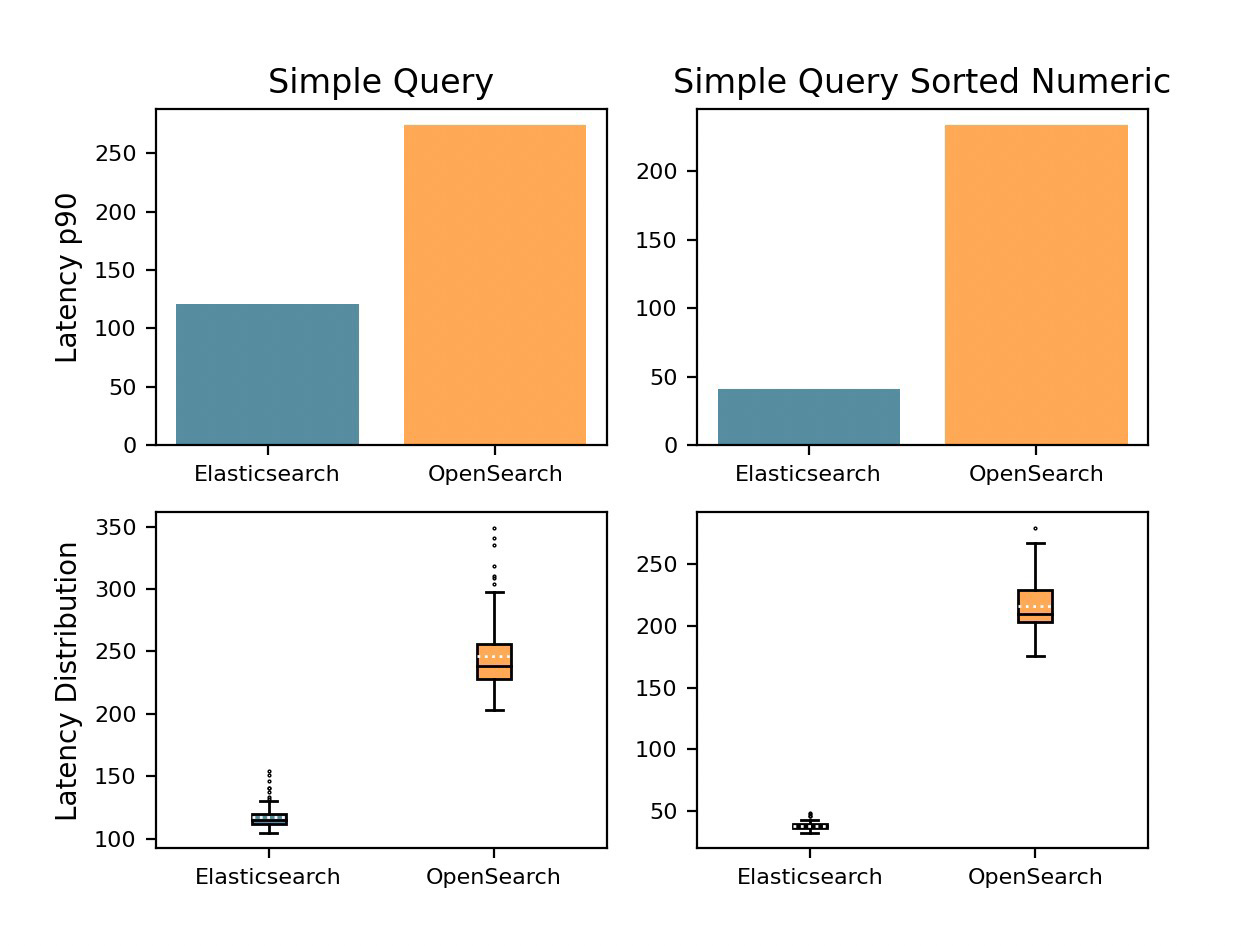

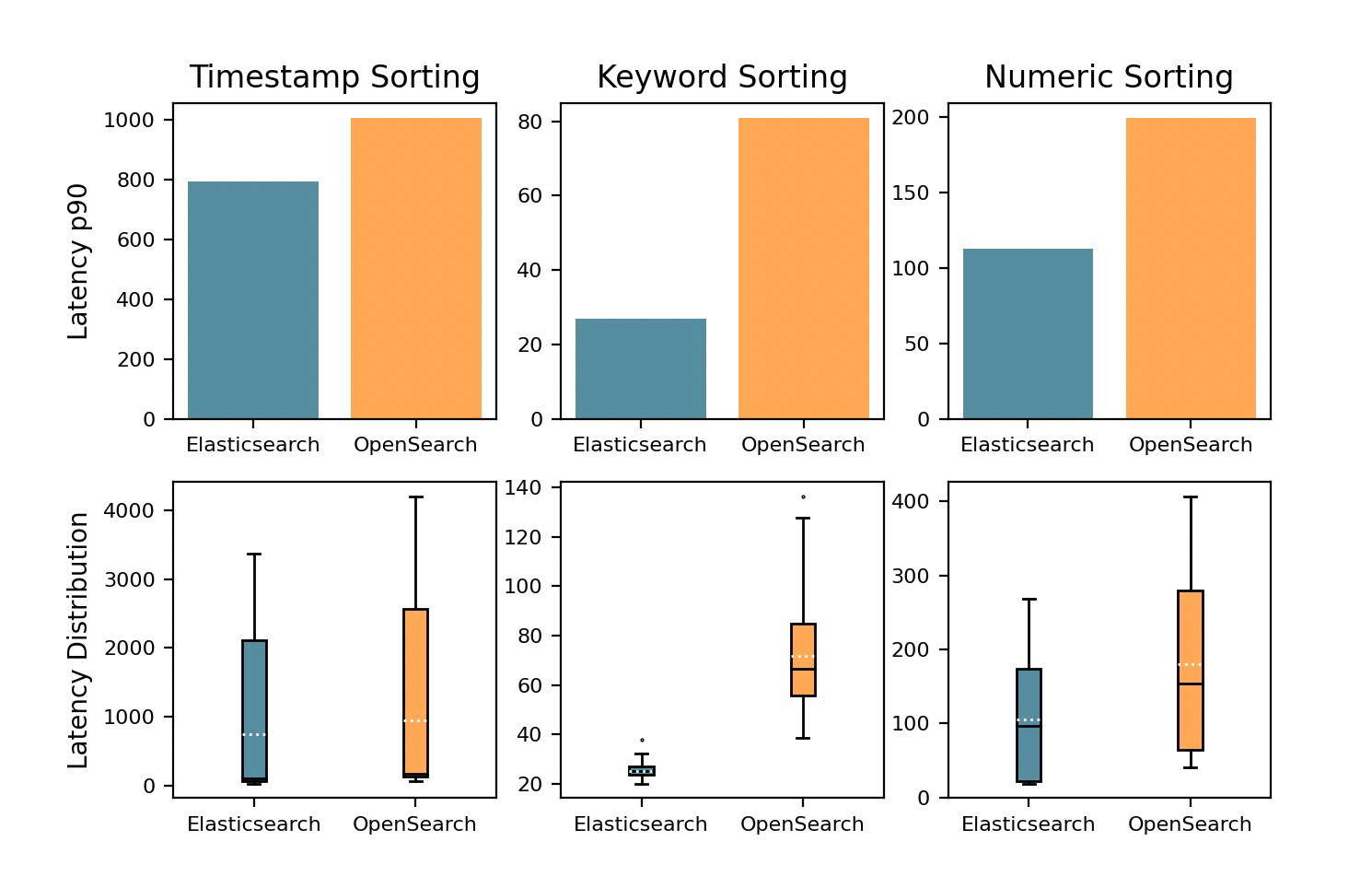

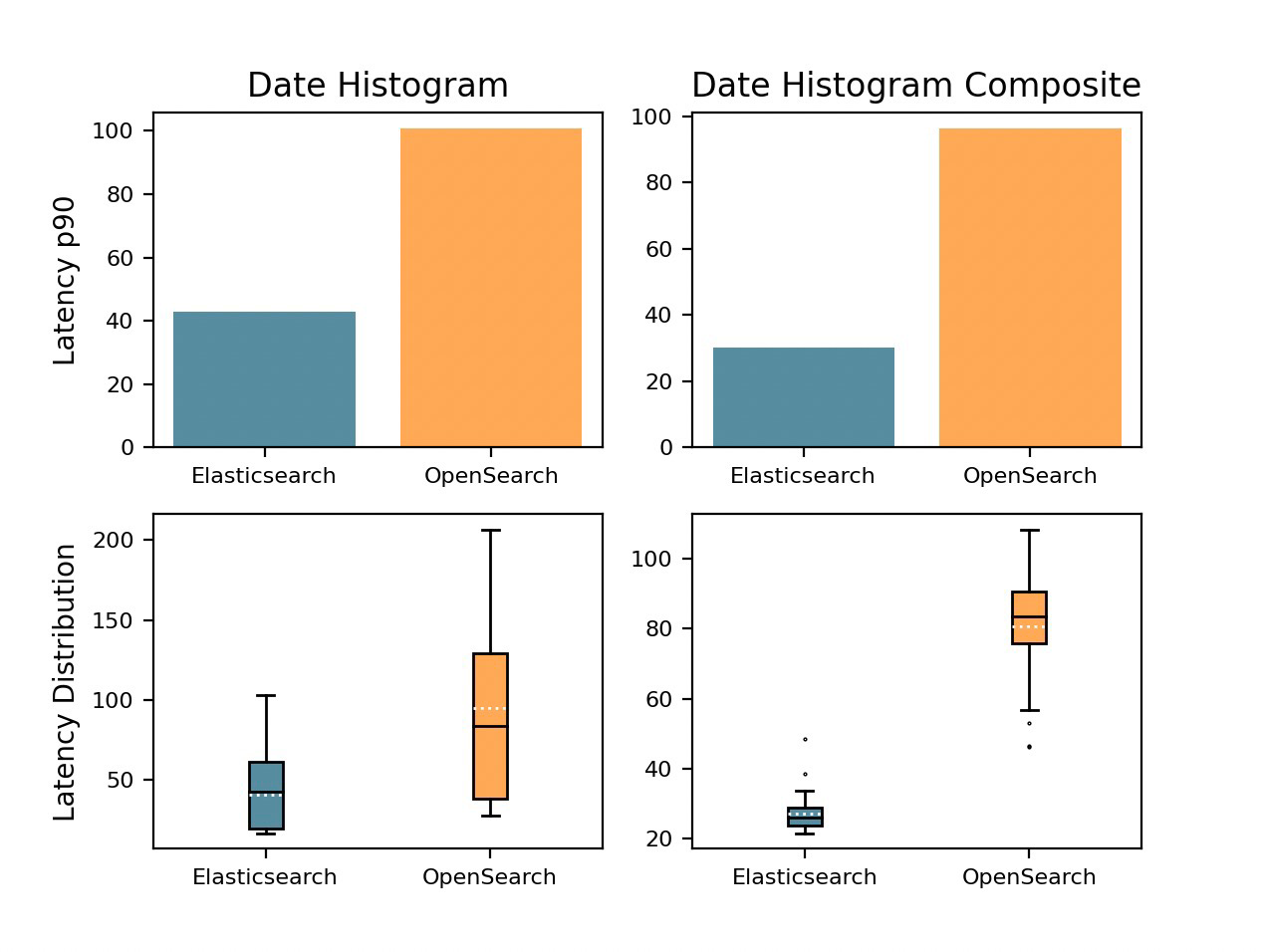

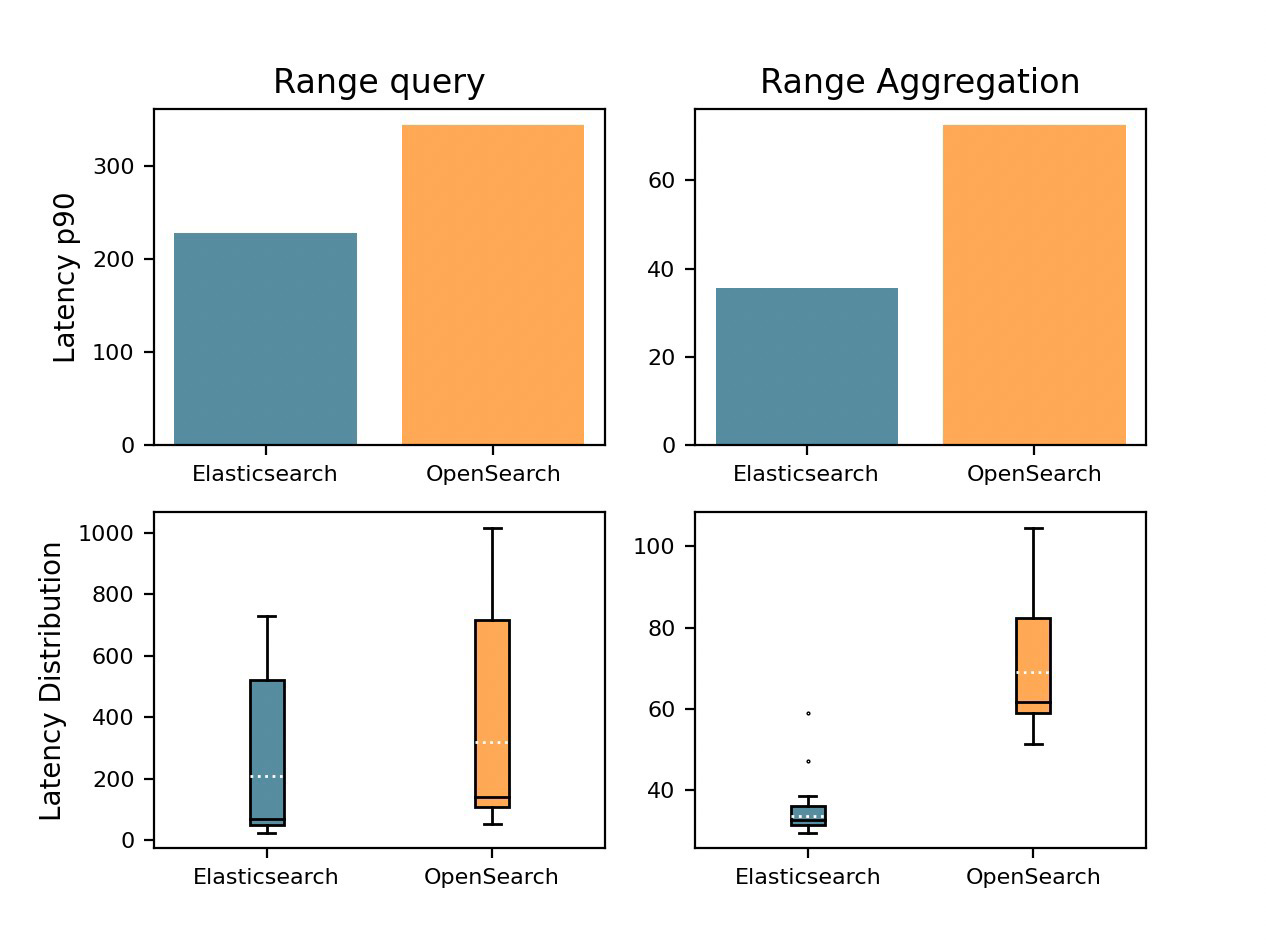

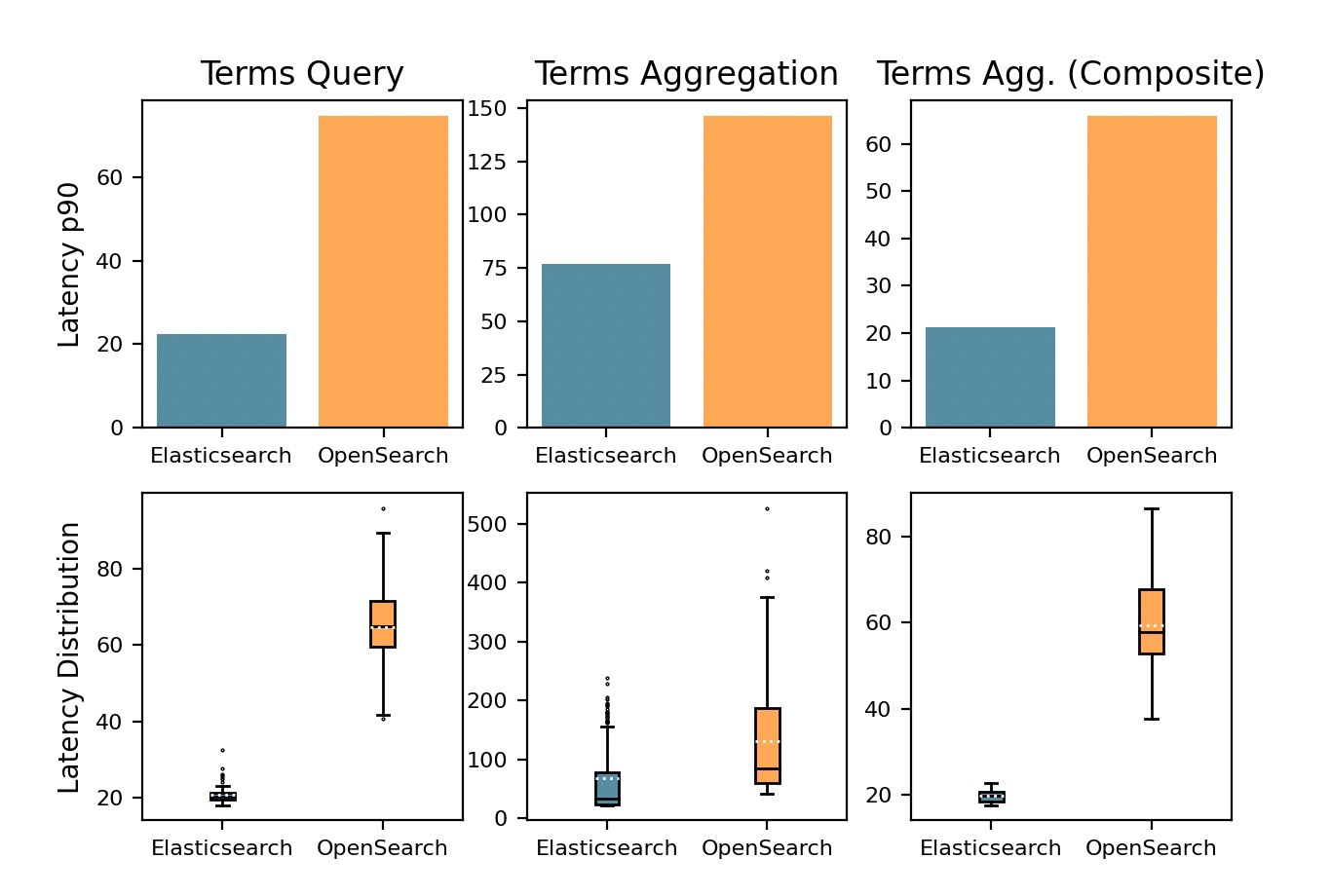

The performance comparison focuses on the p90 (90th percentile) latency of the requests, and the results were cross-validated using a t-test to confirm that the latency differences between the two solutions are statistically significant. The relative change, expressed as a percentage, was calculated for each query type. We also show the latency distribution of 100% of the requests using box plots, which display the minimum, maximum, median, average, and outliers. The box itself spans the lower and upper quartiles, below which 25% and 75% of the observations fall, respectively. This gives a sense of how the values are actually distributed.
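To make these statistics concrete, here is a minimal Python sketch of a p90, a relative change, and a Welch's t statistic. The latency values are made up, the function names are hypothetical, and the formula for "percent faster" (the slower engine's extra latency as a share of the faster engine's latency) is an assumption, since this article does not spell out the exact calculation.

```python
import math
import statistics


def p90(latencies):
    """Return the 90th percentile of a list of latencies (ms)."""
    # quantiles(n=10) returns the 9 decile cut points; the last one is p90.
    return statistics.quantiles(latencies, n=10, method="inclusive")[-1]


def relative_change_pct(baseline, candidate):
    """Extra latency of `candidate` over `baseline`, as a percentage of baseline."""
    return (candidate - baseline) / baseline * 100.0


def welch_t_statistic(a, b):
    """Welch's t statistic for two samples with possibly unequal variances."""
    var_a, var_b = statistics.variance(a), statistics.variance(b)
    standard_error = math.sqrt(var_a / len(a) + var_b / len(b))
    return (statistics.mean(a) - statistics.mean(b)) / standard_error


# Made-up latencies in milliseconds, for illustration only.
engine_a = [10.0, 11.0, 12.0, 11.5, 10.5, 11.0, 12.5, 10.0, 11.0, 13.0]
engine_b = [18.0, 19.5, 20.0, 21.0, 18.5, 19.0, 22.0, 18.0, 20.5, 23.0]

print("p90:", p90(engine_a), p90(engine_b))
print("relative change (%):", relative_change_pct(p90(engine_a), p90(engine_b)))
print("Welch t statistic:", welch_t_statistic(engine_a, engine_b))
```

A t statistic far from zero suggests the difference in means is unlikely to be noise; turning it into a p-value requires the t distribution, which a statistics library would normally provide.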

Text querying

"Show me all data that has [email protected]."

Elasticsearch demonstrated a remarkable lead, being 76% faster in executing text queries than OpenSearch.

Text querying is foundational and crucial for full-text search, which is the primary feature of Elasticsearch. Text field queries allow users to search for specific phrases, individual words, or even parts of words in the text data. Being able to run complex searches over text data enhances the overall search experience and supports a wide range of applications and solutions.
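As a rough illustration of this query type, the sketch below builds a `match` query body for the `_search` API. It is not one of the benchmark queries (those are in the repository); the helper name and the search text are hypothetical, and `message` is simply the free-text field from the data set described later in this article.

```python
import json


def match_query(field, text, size=10):
    """Build a full-text `match` query body for the _search API."""
    return {
        "size": size,
        "query": {"match": {field: {"query": text}}},
    }


# Hypothetical search text against the free-text `message` field.
body = match_query("message", "systemd started")
print(json.dumps(body, indent=2))
```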

Sorting

"Show me the most expensive products first."

When sorting the results of simple text queries, Elasticsearch outperformed OpenSearch by a staggering 140%. Additionally, Elasticsearch was 24%, 97%, and 53% faster for timestamp, keyword, and numeric sort queries, respectively.

Sorting is the process of arranging data in a particular order, such as alphabetical, numerical, or chronological. Sorting is useful for ordering search results by specific criteria, ensuring that the most relevant results are presented to customers first. It is a vital feature that enhances the user experience and improves the overall effectiveness of the search process.
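A sorted search can be sketched by adding a `sort` clause to the request body. The helper below is hypothetical and the chosen field is only an example taken from the data set described later; the actual benchmark queries are in the repository.

```python
import json


def sorted_search(query, sort_field, order="desc", size=10):
    """Build a _search body that sorts hits by `sort_field`."""
    return {
        "size": size,
        "query": query,
        "sort": [{sort_field: {"order": order}}],
    }


# Example: numeric sort on `metrics.size`, largest values first.
body = sorted_search({"match": {"message": "systemd"}}, "metrics.size")
print(json.dumps(body, indent=2))
```

Swapping the sort field for `@timestamp` or a keyword field such as `cloud.region` gives the timestamp and keyword variants.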

Date histogram

"Show me a bar chart ordered in time for all the data."

For date histogram aggregations, Elasticsearch showcased its prowess by being 81% faster than OpenSearch. This acceleration in processing time is beneficial for generating ordered bar charts based on time-series data.

The date histogram aggregation is useful for aggregating and analyzing time-based data by dividing it into intervals or buckets. This capability allows users to visualize and better understand trends, patterns, and anomalies over time.
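For illustration, a date histogram request can be sketched as follows. The helper name, the aggregation name, and the one-hour interval are assumptions for this example rather than the settings used in the benchmark.

```python
import json


def date_histogram_agg(field, interval, name="events_over_time"):
    """Build a _search body that buckets documents into fixed time intervals."""
    return {
        "size": 0,  # return only the aggregation, not the matching documents
        "aggs": {
            name: {
                "date_histogram": {"field": field, "fixed_interval": interval}
            }
        },
    }


# One bucket per hour over the `@timestamp` field.
body = date_histogram_agg("@timestamp", "1h")
print(json.dumps(body, indent=2))
```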

Range

"Show me just the price of your products between 0–25."

Elasticsearch was 40% faster in range queries and 68% faster in range aggregations.

Running range queries on text or keyword fields is another core measure of performance and scalability. The range query is useful for filtering search results based on a specific range of values in a given field. This capability allows users to narrow down their search results and find more relevant information quickly.

Faster facet creation is critical because it involves categorizing data into groups (facets) based on specific attributes and then performing summary operations within each group. This process enables easier analysis, filtering, and visualization by providing a structured view of the data that is often used in ecommerce applications.
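The two operations in this section can be sketched as request bodies: a `range` query that filters documents, and a `range` aggregation that groups them into facets. The helper names, field, and bucket boundaries are hypothetical examples and not the benchmark's own queries.

```python
import json


def range_query(field, gte, lte):
    """Filter documents whose `field` value lies within [gte, lte]."""
    return {"query": {"range": {field: {"gte": gte, "lte": lte}}}}


def range_agg(field, boundaries, name="buckets"):
    """Group documents into consecutive buckets between the given boundaries."""
    # Each bucket includes its `from` value and excludes its `to` value.
    ranges = [
        {"from": low, "to": high}
        for low, high in zip(boundaries, boundaries[1:])
    ]
    return {"size": 0, "aggs": {name: {"range": {"field": field, "ranges": ranges}}}}


query_body = range_query("metrics.size", 0, 25)
agg_body = range_agg("metrics.size", [0, 25, 50, 100])
print(json.dumps(query_body, indent=2))
print(json.dumps(agg_body, indent=2))
```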

Terms

"Group the data by what products were bought together."

Elasticsearch demonstrated its superiority by being 108% faster in term queries and 103% faster in composite terms aggregations than OpenSearch. These advantages make Elasticsearch a more compelling choice for tasks involving grouping and filtering data.
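As a sketch of what these two request types look like, the snippet below builds a `term` query and a `composite` aggregation with `terms` sources. The helper names, fields, and page size are hypothetical and chosen only for illustration; the benchmark's own queries are in the repository.

```python
import json


def term_query(field, value):
    """Match documents whose `field` contains exactly `value`."""
    return {"query": {"term": {field: {"value": value}}}}


def composite_terms_agg(fields, page_size=100, after_key=None):
    """Group documents by every combination of values in `fields`, page by page."""
    sources = [
        {field.replace(".", "_"): {"terms": {"field": field}}} for field in fields
    ]
    composite = {"size": page_size, "sources": sources}
    if after_key is not None:
        # Resume from the last bucket key returned by the previous page.
        composite["after"] = after_key
    return {"size": 0, "aggs": {"groups": {"composite": composite}}}


query_body = term_query("process.name", "systemd")
agg_body = composite_terms_agg(["cloud.region", "process.name"])
print(json.dumps(query_body, indent=2))
print(json.dumps(agg_body, indent=2))
```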

The "Significant Terms" aggregation in Elasticsearch automatically excludes common or uninteresting terms, such as stopwords ("and", "the", "a") or terms that appear frequently in the index from the results. This is based on a statistical analysis of the term frequency and distribution in the indexed data.

Resource utilization

Not only did Elasticsearch outperform OpenSearch in various search-related tasks, but it also proved to be more resource-efficient. OpenSearch by default uses the best_speed codec for data streams (which prioritizes query speed over storage efficiency), while Elasticsearch uses best_compression. With their default out-of-the-box settings, Elasticsearch used 37% less disk space, and when using best_compression on both (the codec used for this benchmark), Elasticsearch was still 13% more space efficient.
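The codec is controlled by the `index.codec` index setting. As a minimal sketch, a composable index template that applies `best_compression` to a data stream could look like the following; the helper name and the index pattern are hypothetical, and the exact templates used in the benchmark are in the repository.

```python
import json


def data_stream_template(index_pattern, codec="best_compression"):
    """Build an index template body that sets the codec for matching data streams."""
    return {
        "index_patterns": [index_pattern],
        "data_stream": {},
        "template": {"settings": {"index.codec": codec}},
    }


# Hypothetical pattern; it would match a data stream such as logs-benchmarks-day3.
body = data_stream_template("logs-benchmarks-*")
print(json.dumps(body, indent=2))
```

Because `index.codec` is a static setting, it takes effect for indices created after the template is in place rather than for data already written.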

Time-Series Datastream (TSDS)

We went one step further and reindexed the data into a Time-Series Datastream which compressed the data even further — the average document size dropped from 218 kb to 124 kb, a reduction of 54.8%, as you can see in the table below.

| | Average Document Size | Difference from OpenSearch |
| --- | --- | --- |
| OpenSearch Datastream | 249 kb | - |
| Elasticsearch Datastream | 218 kb | 13% |
| Elasticsearch TSDS | 124 kb | 54.8% |
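For readers unfamiliar with TSDS in Elasticsearch, the sketch below shows the general shape of an index template that enables time-series mode. The helper name is hypothetical, and the choice of dimension and metric fields is purely an assumption for illustration; this article does not state which fields were used.

```python
import json


def tsds_template(index_pattern, dimension_fields, metric_fields):
    """Build an index template body for an Elasticsearch time-series data stream."""
    properties = {"@timestamp": {"type": "date"}}
    for field in dimension_fields:
        # Dimensions identify a time series and are used for routing.
        properties[field] = {"type": "keyword", "time_series_dimension": True}
    for field in metric_fields:
        properties[field] = {"type": "long", "time_series_metric": "gauge"}
    return {
        "index_patterns": [index_pattern],
        "data_stream": {},
        "template": {
            "settings": {
                "index.mode": "time_series",
                "index.routing_path": list(dimension_fields),
            },
            "mappings": {"properties": properties},
        },
    }


# Hypothetical field choices, not the ones used in the benchmark.
body = tsds_template(
    "logs-benchmarks-tsds-*",
    dimension_fields=["agent.id", "cloud.region"],
    metric_fields=["metrics.size", "metrics.tmin"],
)
print(json.dumps(body, indent=2))
```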

Third-party validation

Our performance testing methodology and results have been independently validated by TechTarget's Enterprise Strategy Group (ESG), a respected third-party vendor. ESG's validation lends added credibility and impartiality to our findings, assuring that the testing methodologies and the subsequent results maintain the highest standards of accuracy and integrity. Their validation reaffirms the robustness and reliability of our comparison, enabling you to make a well-informed decision based on the results of our benchmark testing.

Testing methodology

How we came to these results

In the spirit of a fair and precise comparison between Elasticsearch and OpenSearch, we created two equivalent 5-node clusters, each equipped with 32GB memory, 8 CPU cores, and 300GB disk per node. For each product, we ingested the same randomly generated 1TB log file, which contained 22 fields (more details below).

Testing was done within separate Kubernetes node pools, ensuring that each product had dedicated resources. We adhered to best practices for Elasticsearch and OpenSearch, including force merging indices before initiating queries and using strategies to prevent influence from cached requests, thus ensuring the integrity of our test results.
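As an illustration of these two practices, the sketch below builds the URL for a force merge call and for a search that bypasses the shard request cache. The helper names, base URL, index name, and the choice of merging down to a single segment are assumptions; disabling the request cache per request is only one possible cache-avoidance strategy and may not be the one used in this benchmark.

```python
from urllib.parse import urlencode


def force_merge_url(base_url, index, max_num_segments=1):
    """URL for a POST request that merges the index down to N segments per shard."""
    params = urlencode({"max_num_segments": max_num_segments})
    return f"{base_url}/{index}/_forcemerge?{params}"


def uncached_search_url(base_url, index):
    """URL for a search request that skips the shard request cache."""
    params = urlencode({"request_cache": "false"})
    return f"{base_url}/{index}/_search?{params}"


# Hypothetical cluster address and data stream name.
print(force_merge_url("http://localhost:9200", "logs-benchmarks-day3"))
print(uncached_search_url("http://localhost:9200", "logs-benchmarks-day3"))
```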

In our commitment to transparency in the comparison between Elasticsearch and OpenSearch, we have made the complete benchmarking process available as an open-source project. The repository, which is accessible here, includes Terraform configurations for provisioning the Kubernetes cluster and the Kubernetes manifests for creating both Elasticsearch and OpenSearch clusters. Additionally, the queries used in the benchmark are provided in the repository.

Not only can you run the tests yourself, but you can also use this repository for your own investigation and to improve the performance of your Elasticsearch projects.

What we tested

Our testing of Elasticsearch and OpenSearch was conducted across critical usage areas, including:

- Search — Ecommerce use case with typical search bar

- Observability — Large volumes of system telemetry data, such as logs, metrics, and application traces

- Security — Real-time analysis of security events

The comparison provides an in-depth analysis of how each platform performs in these areas, including text querying, sorting, date histogram, range, and terms.

Data set and ingestion

A 1TB data set was generated using this open-source tool, and it was then uploaded to a GCP bucket. Logstash® was utilized to ingest the data set from the GCP bucket into both Elasticsearch and OpenSearch. Instructions for generating a similar dataset are also included in the repository, in case you want to replicate the benchmark.

Every log event is composed of the fields shown in the table below. The values are randomized across all events with the exception of @timestamp, which is sequential and unique per event.

| Field | Value |
| --- | --- |
| @timestamp | Jan 3, 2023 @ 18:59:58.000 |
| agent.id | baac7358-a449-4c36-bf0f-befb211f1d38 |
| agent.name | fernswisher |
| agent.type | filebeat |
| agent.version | 8.8.0 |
| aws.cloudwatch.ingestion_time | 2023-05-01T20:49:30.820Z |
| aws.cloudwatch.log_group | /var/log/messages |
| aws.cloudwatch.log_stream | northcurtain |
| cloud.region | ap-southeast-3 |
| data_stream.dataset | benchmarks |
| data_stream.namespace | day3 |
| data_stream.type | logs |
| event.dataset | generic |
| event.id | gravecrane |
| input.type | aws-cloudwatch |
| log.file.path | /var/log/messages/northcurtain |
| message | 2023-05-01T20:49:30.820Z May 01 20:49:30 ip-106... |
| meta.file | 2023-01-03/1682974095-gotext.ndjson.gz |
| metrics.size | 408 |
| metrics.tmin | 238 |
| process.name | systemd |
| tags | preserve_original_event |

Benchmarks

A total of 35 query types in the five key areas were considered, making a grand total of 387,000 requests. Each query type was executed 100 times, following 100 warm-up queries, and the process was repeated 50 times per query.

Rally is an open-source tool developed by Elastic® for benchmarking and performance testing of Elasticsearch and other components of the Elastic Stack. It allows users to simulate various types of workloads, such as indexing and searching, against Elasticsearch clusters and measure their performance in a reproducible manner. While Rally is developed by Elastic and primarily designed for benchmarking Elasticsearch, it is a flexible tool that can be adapted to work with OpenSearch.
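Rally takes care of scheduling warm-up and measured iterations in the actual benchmark. Purely to illustrate the warm-up-then-measure pattern described above, here is a minimal Python sketch with a stand-in query function; the function names are hypothetical and this is not how Rally is implemented.

```python
import time


def run_query_benchmark(query_fn, warmup_iterations=100, measured_iterations=100):
    """Run `query_fn` for warm-up, then return per-request latencies in ms."""
    # Warm-up requests are executed but their timings are discarded.
    for _ in range(warmup_iterations):
        query_fn()

    latencies_ms = []
    for _ in range(measured_iterations):
        start = time.perf_counter()
        query_fn()
        latencies_ms.append((time.perf_counter() - start) * 1000.0)
    return latencies_ms


def fake_query():
    """Stand-in for a real search request; does a little CPU work."""
    return sum(range(1000))


latencies = run_query_benchmark(fake_query)
print(f"measured {len(latencies)} requests, slowest took {max(latencies):.3f} ms")
```

In a real run, `query_fn` would send one search request to the cluster, and the returned latencies would feed the percentile and significance calculations.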

Elastic runs nightly benchmarks to ensure any new code in Elasticsearch performs as well as it did yesterday or better. We also use our own ML to identify anomalies in performance or inefficiencies in resource utilization. We present performance and size tests in a transparent and public way to benefit everyone using our products. It is important to note that others don't provide this kind of public reporting, which helps users monitor the changes they're interested in over time.

Conclusion: Elasticsearch — The clear victor

Taking into account the results from various tests, it's clear that Elasticsearch consistently outperforms OpenSearch. Whether it's handling simple queries, sorting data, generating histograms, processing terms or range queries, or even resource optimization, Elasticsearch leads the way.

When choosing a search engine platform, businesses should prioritize speed, efficiency, and low resource utilization, all attributes where Elasticsearch excels. This makes it a compelling choice for organizations that rely on swift and accurate search results. Whether you're an ecommerce platform sorting search results, a security analyst identifying threats, or simply someone who needs to observe critical applications efficiently, Elasticsearch emerges as the clear leader in this comparison.

Ready to test Elasticsearch for yourself?

Get started with a free 14-day trial of Elastic Cloud to see how Elasticsearch performance can help you on your projects. Additionally, watch our on-demand webinar and get started with Elasticsearch.

The release and timing of any features or functionality described in this post remain at Elastic's sole discretion. Any features or functionality not currently available may not be delivered on time or at all.