Build and buy: Why a durable enterprise architecture delivers business impact at scale

Why adopt a “build and buy” AI strategy

As a technology leader at Elastic, I lead a team that builds AI experiences on a centralized architecture, enabling our sales and customer support teams to scale their impact and take advantage of AI faster. The dilemma we faced, and one that every technology team will face, is no longer whether to build or buy AI applications. Instead, it's how to design a durable enterprise architecture that uses our existing SaaS investments while building complex, business-tailored AI applications for maximum impact.

Many SaaS applications have embedded AI features and experiences that allow users to accelerate workflows. However, these AI features are fragmented across applications and disconnected from relevant enterprise context and systems. According to a recent MIT study, 95% of enterprise AI investments are failing and getting zero return. This isn't a funding problem; it's an architectural issue that shows up as low adoption rates. When AI fails to deliver the correct answer or action, users stop engaging, and the expected business impact is lost.

Instead of choosing between “build or buy,” technology teams need to build a durable enterprise architecture. Doing so allows organizations to create custom AI experiences that connect context from all relevant, distributed source systems and SaaS applications to deliver accurate and trusted outputs. SaaS applications still serve a purpose: they bring use-case-specific knowledge, provide a workflow engine, and act as a system of record.

By building a context retrieval mechanism that pulls knowledge from SaaS applications within an enterprise architecture, technology teams can build context-aware AI applications. By layering in performance monitoring, they can also track user engagement, accuracy, and impact.

Components of a durable enterprise AI architecture

A durable AI architecture is what enables the “build and buy” strategy to function. It allows teams to move from simple, low-complexity AI use cases to highly complex agentic workflows. The architecture has two main areas of focus: context retrieval and performance monitoring.

Components of context retrieval

The key to high-value AI is ensuring that AI models have deep, accurate, and secure context about your business by providing access to high-quality data. Context retrieval mechanisms are built using context engineering, a collection of practices and capabilities that enable large language models (LLMs) to access the right information to complete a desired task.

Enterprise knowledge from SaaS applications: Ingest, unify, and store unstructured, semi-structured, and structured business data at scale from SaaS applications and enterprise systems via integrations and connectors within an open vector database. This includes additional information, such as standard operating procedures, company policies, and business processes, that enable AI responses to be grounded in business logic and proprietary data.

Information security: Implement and enforce data security on enterprise knowledge using document- and field-level security and role-based access controls (RBAC) so that only the right users have access to relevant information.

Search retrieval: For LLMs to pull the most relevant context, teams need access to advanced search techniques that can match user query intent with relevant context from source systems. This includes various search and ranking techniques — semantic search with machine learning (ML), hybrid retrieval, advanced relevance tuning, and reranking — to power AI applications that deliver accurate results.

AI/ML integrations: With a platform approach, enterprise IT teams can quickly access preferred LLMs and AI development frameworks to speed up development. Using ML models, teams can further tune relevance to pass the most relevant context to LLMs, improving accuracy, user experience, and trust in outputs.
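To make the security and search retrieval components more concrete, here is a minimal in-memory sketch of permission-aware hybrid retrieval. It assumes a hypothetical `Doc` record that carries its allowed roles (document-level security) and per-field roles (field-level security), uses a toy keyword score for lexical search and cosine similarity over precomputed embeddings for semantic search, and combines the two with reciprocal rank fusion (RRF). RRF is one common fusion method, chosen here for illustration; the post does not say which fusion or reranking method Elastic uses, and a production system would delegate all of this to the search engine rather than Python loops.

```python
from dataclasses import dataclass, field
from math import sqrt


@dataclass
class Doc:
    # Hypothetical in-memory document; a real system would store this in a search index.
    doc_id: str
    text: str
    embedding: list[float]
    allowed_roles: set[str]  # document-level security: roles that may see the document
    field_roles: dict[str, set[str]] = field(default_factory=dict)  # field-level security
    fields: dict[str, str] = field(default_factory=dict)


def redact(doc: Doc, roles: set[str]) -> dict[str, str]:
    # Drop any restricted field whose allowed roles do not intersect the user's roles.
    return {name: value for name, value in doc.fields.items()
            if name not in doc.field_roles or doc.field_roles[name] & roles}


def lexical_rank(query: str, docs: list[Doc]) -> list[str]:
    # Toy keyword scoring: number of query terms present in the document text.
    terms = set(query.lower().split())
    scored = [(len(terms & set(d.text.lower().split())), d.doc_id) for d in docs]
    return [doc_id for score, doc_id in sorted(scored, key=lambda s: -s[0]) if score > 0]


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = sqrt(sum(x * x for x in a)) * sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def vector_rank(query_vec: list[float], docs: list[Doc]) -> list[str]:
    # Semantic ranking by cosine similarity to a precomputed query embedding.
    return [d.doc_id for d in sorted(docs, key=lambda d: -cosine(query_vec, d.embedding))]


def rrf(rankings: list[list[str]], k: int = 60) -> list[str]:
    # Reciprocal rank fusion: each ranking contributes 1 / (k + rank) per document.
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: -scores[doc_id])


def retrieve(query: str, query_vec: list[float], docs: list[Doc],
             roles: set[str], top_k: int = 3) -> list[tuple[str, dict[str, str]]]:
    # Apply document-level security BEFORE ranking so restricted content never
    # reaches the LLM prompt, then fuse the lexical and vector rankings.
    allowed = [d for d in docs if d.allowed_roles & roles]
    by_id = {d.doc_id: d for d in allowed}
    fused = rrf([lexical_rank(query, allowed), vector_rank(query_vec, allowed)])
    return [(doc_id, redact(by_id[doc_id], roles)) for doc_id in fused[:top_k]]


# Hypothetical sample data: two-dimensional embeddings keep the example readable.
SAMPLE_DOCS = [
    Doc("kb-1", "reset password for cloud deployment", [0.9, 0.1], {"support", "sales"}),
    Doc("kb-2", "renewal pricing for enterprise accounts", [0.2, 0.8], {"sales"},
        {"discount": {"sales_manager"}}, {"discount": "15%", "region": "EMEA"}),
    Doc("case-3", "customer cannot reset password after upgrade", [0.8, 0.3], {"support"}),
]

if __name__ == "__main__":
    print(retrieve("reset password", [1.0, 0.0], SAMPLE_DOCS, roles={"support"}))
```

Running it prints `[('kb-1', {}), ('case-3', {})]`: the support user never sees the sales-only `kb-2`, and a sales user who lacks the hypothetical `sales_manager` role gets `kb-2` back with its `discount` field removed. Filtering before ranking, rather than after, is the design choice that matters here, because it keeps unauthorized text out of the context passed to the LLM.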

The more context that's provided, the more accurate the responses and actions become. Depending on use-case complexity, adding context increases both the context engineering effort and the architectural complexity. However, the upfront investment in an architecture that pulls distributed data and context from source systems reduces the technical burden as more use cases are built on it.

Components of technology performance monitoring

Without AI performance monitoring, IT teams have no visibility into how AI experiences perform, so they can't trust them or drive their adoption. Layering observability into your architecture is a crucial pillar of the “build and buy” strategy.

Application performance monitoring (APM): APM provides critical insights, including response times, error rates, and resource utilization, to help track the performance of the services and infrastructure supporting the AI application. By tracking bottlenecks and anomalies, teams can quickly isolate issues and resolve potential incidents before they impact customer experience.

LLM observability: LLM observability provides critical business insights into AI model performance, context accuracy, and usage patterns, such as user engagement, response accuracy, conversation quality, and user sentiment. Grouping these conversations and interactions by use case provides additional insight into how users are engaging. These insights are essential signals for developers, enabling them to quickly fine-tune experiences, expand successful use cases, and refine solutions to match real user needs, which improves user satisfaction and accelerates adoption.

Cloud monitoring: Most AI applications are hosted in the cloud because workflows are resource-intensive and dynamic. This allows for easier access to enterprise-ready LLMs and AI frameworks, autoscaling infrastructure, application availability based on SLAs/SLOs, and cost management. With cloud monitoring, teams can correlate the performance and cost of cloud infrastructure, enabling them to quickly diagnose infrastructure-related bottlenecks and optimize resource usage.
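The idea of grouping interactions by use case can be sketched as a small aggregation over interaction logs. This is an illustration only: the `Interaction` schema, its field names, and the use-case labels are hypothetical, and in practice these signals would come from an observability platform rather than a hand-written loop. The sketch computes a few of the signals mentioned above per use case: volume, error rate, 95th-percentile latency (an APM-style signal), and explicit user feedback.

```python
from collections import defaultdict
from dataclasses import dataclass
from math import ceil
from typing import Optional


@dataclass
class Interaction:
    # Hypothetical log record for one AI interaction; field names are illustrative.
    use_case: str            # e.g. "support-assistant" or "rfp-automation"
    latency_ms: float        # end-to-end response time
    error: bool              # True if the request or model call failed
    feedback: Optional[int]  # +1 thumbs up, -1 thumbs down, None if unrated


def p95(values: list[float]) -> float:
    # Nearest-rank 95th percentile.
    ordered = sorted(values)
    return ordered[max(0, ceil(0.95 * len(ordered)) - 1)]


def summarize(interactions: list[Interaction]) -> dict[str, dict]:
    # Group interactions by use case, then compute per-group health and engagement signals.
    groups: dict[str, list[Interaction]] = defaultdict(list)
    for item in interactions:
        groups[item.use_case].append(item)

    report = {}
    for use_case, items in groups.items():
        rated = [i.feedback for i in items if i.feedback is not None]
        report[use_case] = {
            "interactions": len(items),
            "error_rate": sum(i.error for i in items) / len(items),
            "p95_latency_ms": p95([i.latency_ms for i in items]),
            # Share of rated answers that were positive; None when nobody rated.
            "positive_feedback_rate": sum(f > 0 for f in rated) / len(rated) if rated else None,
            "rated_share": len(rated) / len(items),
        }
    return report


# Hypothetical sample logs, not real measurements.
SAMPLE_LOGS = [
    Interaction("support-assistant", 800, False, 1),
    Interaction("support-assistant", 1200, False, -1),
    Interaction("support-assistant", 3000, True, None),
    Interaction("support-assistant", 900, False, 1),
    Interaction("rfp-automation", 5000, False, None),
]

if __name__ == "__main__":
    for name, stats in summarize(SAMPLE_LOGS).items():
        print(name, stats)
```

Tracking `rated_share` alongside `positive_feedback_rate` helps separate "users dislike the answers" from "users are not engaging enough to rate them," which is the low-adoption failure mode described earlier.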

With observability tools, development teams can easily track the performance and accuracy of AI applications.

Note: SaaS applications typically do not offer insights into AI user engagement or response accuracy. Given this limited visibility, it's critical to invest in a durable enterprise architecture for AI that enables organizations to build custom AI solutions and monitor them with observability tooling to gain insights into AI performance.

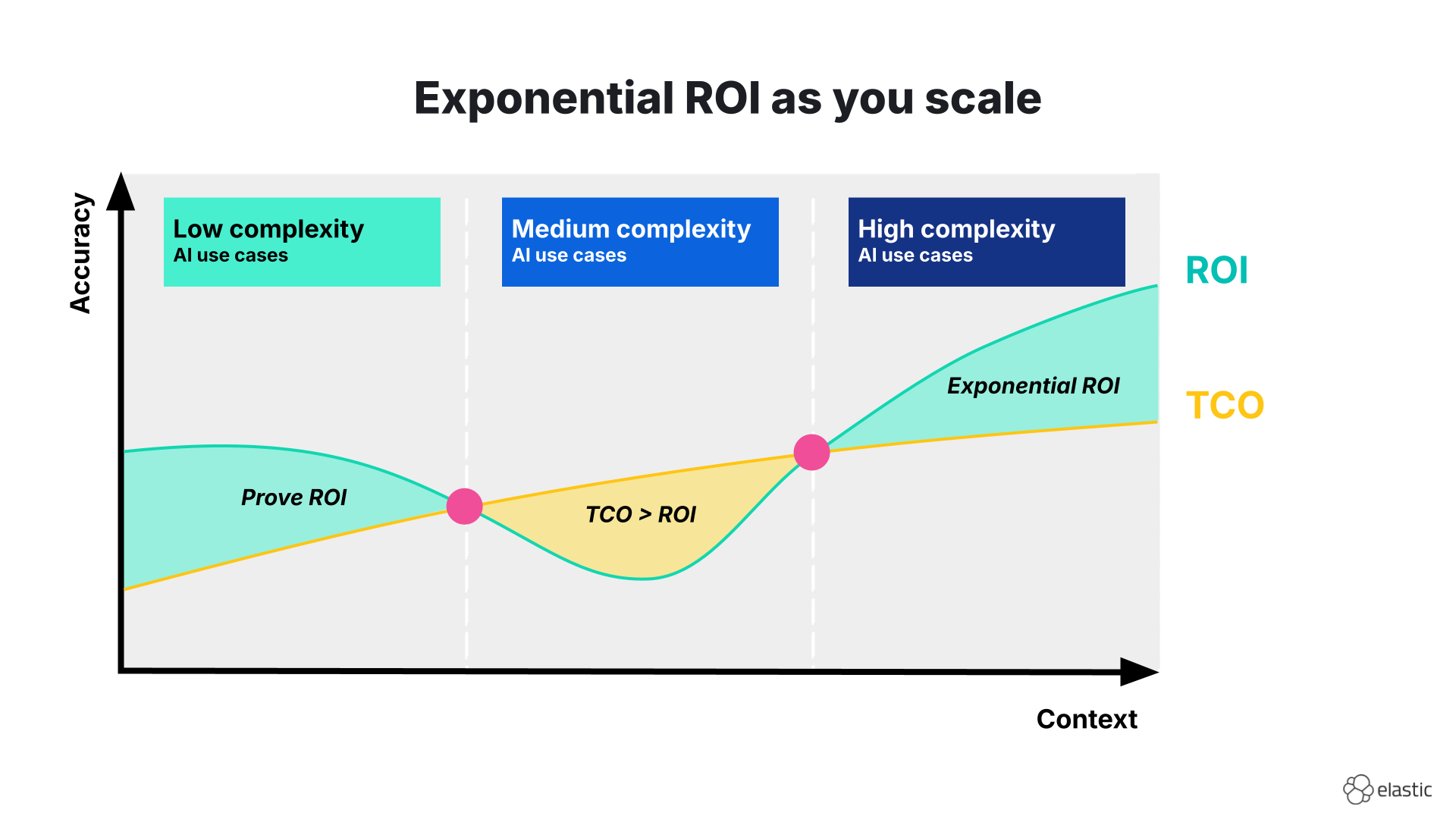

Balance architectural complexity with return on investment

The goal of a durable architecture is not to build the most complex AI use cases quickly but to build with intention and drive return on investment (ROI) over time. Initial investments in simple AI use cases might show business impact quickly, because they may require only a small selection of context from source systems.

However, moving to medium- and high-complexity use cases requires development teams to invest additional effort in their context retrieval mechanisms and monitoring layer, which increases the total cost of ownership (TCO). Once the architecture is in place, though, every subsequent AI use case built on the same context is faster and cheaper to deploy, so ROI grows with each use case teams add.
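The amortization argument above can be made explicit with a back-of-the-envelope model. It assumes a one-time shared architecture cost, a fixed marginal build cost per use case, and the same benefit from every use case; all inputs are hypothetical units chosen for illustration, not Elastic's figures, and real use cases will vary widely in both cost and benefit.

```python
def total_cost(platform_cost: float, marginal_cost: float, n_use_cases: int) -> float:
    # One-time shared architecture cost plus a per-use-case build cost.
    return platform_cost + marginal_cost * n_use_cases


def cost_per_use_case(platform_cost: float, marginal_cost: float, n_use_cases: int) -> float:
    # Average cost per use case: the shared cost is spread across every use case.
    if n_use_cases < 1:
        raise ValueError("need at least one use case")
    return total_cost(platform_cost, marginal_cost, n_use_cases) / n_use_cases


def roi(benefit_per_use_case: float, platform_cost: float,
        marginal_cost: float, n_use_cases: int) -> float:
    # (benefit - cost) / cost, assuming every use case delivers the same benefit.
    cost = total_cost(platform_cost, marginal_cost, n_use_cases)
    return (benefit_per_use_case * n_use_cases - cost) / cost


if __name__ == "__main__":
    # Hypothetical units: platform = 100, each use case costs 10 to build and returns 40.
    for n in (1, 2, 5, 10):
        print(n, cost_per_use_case(100, 10, n), round(roi(40, 100, 10, n), 2))
```

With these made-up inputs, the average cost per use case falls from 110 to 20, and ROI moves from about -0.64 with one use case to 1.0 with ten. Under this simple model, ROI keeps rising toward (benefit - marginal cost) / marginal cost as the shared cost is amortized, which is why the first use cases on a new architecture can look expensive on their own.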

At Elastic, we saw this with our AI use cases. By building a unified, context-aware architecture with monitoring using Elastic Observability, our internal Support Assistant achieved an estimated $1.7 million in cost avoidance from case deflections in the first year. Additionally, 30% of support is delivered digitally through the Support Assistant. This shows that when you focus on unified context and technology performance, ROI can scale rapidly. We're now using the same architecture to deploy sales use cases, such as account-based insights, RFP automation, and AI account plans.

Elastic enables durable AI architectures

The “build and buy” approach is necessary to shift from short-term project thinking to a long-term AI strategy. Existing SaaS investments can and should be used to provide AI experiences with all the relevant context needed to produce accurate outputs. By prioritizing an architecture-first approach, your organization can move beyond fragmented AI features and build toward a scalable AI solution.

At Elastic, we build our durable enterprise AI architecture using our Search AI Platform. We use Elasticsearch to build context-retrieval mechanisms via context engineering, providing LLMs with the most relevant data and tools so that our AI experiences deliver accurate responses and actions. And with direct access to Elastic Observability within the platform, we can monitor technology performance to deliver reliable AI applications that drive business impact.

The release and timing of any features or functionality described in this post remain at Elastic's sole discretion. Any features or functionality not currently available may not be delivered on time or at all.

In this blog post, we may have used or referred to third party generative AI tools, which are owned and operated by their respective owners. Elastic does not have any control over the third party tools and we have no responsibility or liability for their content, operation or use, nor for any loss or damage that may arise from your use of such tools. Please exercise caution when using AI tools with personal, sensitive or confidential information. Any data you submit may be used for AI training or other purposes. There is no guarantee that information you provide will be kept secure or confidential. You should familiarize yourself with the privacy practices and terms of use of any generative AI tools prior to use.

Elastic, Elasticsearch, and associated marks are trademarks, logos or registered trademarks of Elasticsearch B.V. in the United States and other countries. All other company and product names are trademarks, logos or registered trademarks of their respective owners.