In part 1 of this series, we saw that writing integration tests against a real Elasticsearch instance isn't rocket science. This post demonstrates various techniques for data initialization and performance improvements.

Different purposes, different characteristics

Once the testing infrastructure is set up, and the project is already using an integration test framework for at least one test (like we use Testcontainers in our demo project), adding more tests becomes easy because it doesn't require mocking. For example, if you need to verify that the number of books fetched for the year 1776 is correct, all you have to do is add a test like this:

    @Test
    void shouldFetchTheNumberOfBooksPublishedInGivenYear() {
        var systemUnderTest = new BookSearcher(client);
        int books = systemUnderTest.numberOfBooksPublishedInYear(1776);
        Assertions.assertEquals(2, books, "there were 2 books published in 1776 in the dataset");
    }

This is all that's needed, provided the dataset used to initialize Elasticsearch already contains the relevant data. The cost of creating such tests is low, and maintaining them is nearly effortless (as it mostly involves updating the Docker image version).

No software exists in a vacuum

Today, every piece of software we write is connected to other systems. While tests using mocks are excellent for verifying the behavior of the system we're building, integration tests give us confidence that the entire solution works as expected and will continue to do so. This can make it tempting to add more and more integration tests.

Integration tests have their costs

However, integration tests aren't free. Due to their nature — going beyond in-memory-only setups — they tend to be slower, costing us execution time.

It's crucial to balance the benefits (confidence from integration tests) with the costs (test execution time and billing, often translating directly to an invoice from cloud vendors). Instead of limiting the number of tests because they are slow, we can make them run faster. This way, we can maintain the same execution time while adding more tests. The rest of this post will focus on how to achieve that.

Let's revisit the example we've been using so far, as it is painfully slow and needs optimization. For this and subsequent experiments, I assume the Elasticsearch Docker image is already pulled, so it won't impact the time. Also, note that this is not a proper benchmark but more of a general guideline.

Tests with Elasticsearch can also benefit from performance improvements

Elasticsearch is often chosen as a search solution because it performs well. Developers usually take great care to ensure production code is optimized, especially in critical paths. However, tests are often seen as less critical, leading to slow tests that few people want to run. But it doesn't have to be this way. With some simple technical tweaks and a shift in approach, integration tests can run much faster.

Let's start with the current integration test suite. It functions as intended, but with only three tests, it takes five and a half minutes (roughly 110 seconds per test) when you run time ./mvnw test '-Dtest=*IntTest*'. Please note that your results may vary depending on your hardware, internet speed, etc.

Batch me if you can

In integration test suites, many performance issues stem from inefficient data initialization. While certain processes may be natural or acceptable in a production flow (such as data arriving as users enter it), these processes may not be optimal for tests, where we need to import data quickly and in bulk.

The dataset in our example is about 50 MiB and contains nearly 81,000 valid records. If we process and index each record individually, we end up making 81,000 requests just to prepare data for each test.

Instead of a naive loop that indexes documents one by one (as in the main branch):

    boolean hasNext = false;
    do {
        try {
            Book book = it.nextValue();
            client.index(i -> i.index("books").document(book));
            hasNext = it.hasNextValue();
        } catch (JsonParseException | InvalidFormatException e) {
            // ignore malformed data
        }
    } while (hasNext);

We should use a batch approach, such as with BulkIngester. This allows concurrent indexing requests, with each request sending multiple documents, significantly reducing the number of requests:

    try (BulkIngester<?> ingester = BulkIngester.of(bi -> bi
            .client(client)
            .maxConcurrentRequests(20)
            .maxOperations(5000))) {
        boolean hasNext = true;
        while (hasNext) {
            try {
                Book book = it.nextValue();
                ingester.add(BulkOperation.of(b -> b
                        .index(i -> i
                                .index("books")
                                .document(book))));
                hasNext = it.hasNextValue();
            } catch (JsonParseException | InvalidFormatException e) {
                // ignore malformed data
            }
        }
    }

This simple change reduced the overall testing time to around 3 minutes and 40 seconds, or roughly 73 seconds per test. While this is a nice improvement, we can push further.
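To get a feel for why batching helps, the number of HTTP requests can be estimated with simple ceiling division. The sketch below is only a back-of-the-envelope model: the class and method names are made up for illustration, and it assumes the ingester flushes only when the operation limit is reached. Size-based or time-based flushes would add more requests in practice.

```java
// Rough estimate of how many indexing requests each strategy needs.
// Assumption: a batch is sent only when it holds batchSize documents
// (plus one final, possibly smaller batch); other flush triggers are ignored.
final class BulkRequestEstimate {

    /** Number of requests needed to send totalDocs in batches of batchSize. */
    static long requestsNeeded(long totalDocs, int batchSize) {
        if (totalDocs < 0 || batchSize <= 0) {
            throw new IllegalArgumentException("totalDocs must be >= 0 and batchSize > 0");
        }
        return (totalDocs + batchSize - 1) / batchSize; // ceiling division
    }

    public static void main(String[] args) {
        long docs = 81_000; // approximate number of valid records in the dataset
        System.out.println("one by one: " + requestsNeeded(docs, 1));
        System.out.println("bulk, 5000 per request: " + requestsNeeded(docs, 5_000));
    }
}
```

Under these assumptions, roughly 81,000 single-document requests shrink to 17 bulk requests, which can additionally run concurrently.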

Stay local

We reduced test duration in the previous step by limiting network round-trips. Could there be more network calls we can eliminate without altering the tests themselves?

Let's review the current situation:

- Before each test, we fetch test data from a remote location repeatedly.

- While the data is fetched, we send it to the Elasticsearch container in bulk.

We can improve performance by keeping the data as close to the Elasticsearch container as possible. And what's closer than inside the container itself?

One way to import data into Elasticsearch en masse is the _bulk REST API, which we can call using curl. This method allows us to send a large payload (e.g., from a file) written in newline-delimited JSON format. The format looks like this:

    action_and_meta_data\n
    optional_source\n
    action_and_meta_data\n
    optional_source\n
    ....
    action_and_meta_data\n
    optional_source\n

Make sure the last line is empty, that is, the payload must end with a newline character.

In our case, the file might look like this:

    {"index":{"_index":"books"}}
    {"title":"...","description":"...","year":...,"publisher":"...","ratings":...}
    {"index":{"_index":"books"}}
    {"title":"Whispers of the Wicked Saints","description":"Julia ...","author":"Veronica Haddon","year":2005,"publisher":"iUniverse","ratings":3.72}

Ideally, we can store this test data in a file and include it in the repository, for example, in src/test/resources/. If that's not feasible, we can generate the file from the original data using a simple script or program. For an example, take a look at CSV2JSONConverter.java in the demo repository.
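As a rough illustration of what generating such a file involves, here is a minimal sketch that interleaves action lines with already-serialized documents. It is not the converter from the demo repository: the class and method names are hypothetical, and it assumes each document is already a single-line JSON string and that the index name needs no escaping.

```java
import java.util.List;

// Hypothetical helper that assembles a newline-delimited _bulk payload.
final class BulkNdjson {

    /** Builds a _bulk payload: one action line plus one source line per document. */
    static String toBulkPayload(String index, List<String> jsonDocuments) {
        // Assumption: the index name is a plain name that needs no JSON escaping.
        String action = "{\"index\":{\"_index\":\"" + index + "\"}}";
        StringBuilder payload = new StringBuilder();
        for (String doc : jsonDocuments) {
            if (doc.contains("\n")) {
                throw new IllegalArgumentException("each document must be a single line");
            }
            payload.append(action).append('\n');
            payload.append(doc).append('\n'); // every line, including the last, ends with \n
        }
        return payload.toString();
    }

    public static void main(String[] args) {
        String payload = toBulkPayload("books", List.of(
                "{\"title\":\"A\",\"year\":1776}",
                "{\"title\":\"B\",\"year\":2005}"));
        System.out.print(payload);
    }
}
```

The resulting string could then be written once to src/test/resources/books.ndjson, for example with Files.writeString, and committed alongside the tests.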

Once we have such a file locally (so we have eliminated network calls to obtain the data), we can tackle the other point: copying the file from the machine where the tests are running into the container running Elasticsearch. This is easy to do with a single method call, withCopyToContainer, when defining the container. After the change, the definition looks like this:

    @Container
    ElasticsearchContainer elasticsearch =
            new ElasticsearchContainer(ELASTICSEARCH_IMAGE)
                    .withCopyToContainer(MountableFile.forHostPath("src/test/resources/books.ndjson"), "/tmp/books.ndjson");

The final step is making a request from within the container to send the data to Elasticsearch. We can do this with curl and the _bulk endpoint by running curl inside the container. While this could be done in the CLI with docker exec, in our @BeforeEach, it becomes elasticsearch.execInContainer, as shown here:

    ExecResult result = elasticsearch.execInContainer(
            "curl", "https://localhost:9200/_bulk?refresh=true", "-u", "elastic:changeme",
            "--cacert", "/usr/share/elasticsearch/config/certs/http_ca.crt",
            "-X", "POST",
            "-H", "Content-Type: application/x-ndjson",
            "--data-binary", "@/tmp/books.ndjson"
    );
    assert result.getExitCode() == 0;

Reading from the top: we make a POST request to the _bulk endpoint (and wait for the refresh to complete), authenticate as the user elastic with the default password, and trust the auto-generated, self-signed certificate (which means we don't have to disable SSL/TLS). The payload is the content of the /tmp/books.ndjson file, which was copied into the container when it was starting. This way, we reduce the need for frequent network calls. Assuming the books.ndjson file is already present on the machine running the tests, the overall duration is reduced to 58 seconds.
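One caveat about relying on the exit code alone: curl exits with 0 for most HTTP error responses unless --fail is passed, and the _bulk API can report per-document failures through an "errors" flag in an otherwise successful response. A stricter check could inspect the response body as well. The helper below is a hypothetical sketch, not part of the demo project, and it uses a crude textual match rather than a real JSON parser.

```java
import java.util.regex.Pattern;

// Hypothetical helper that inspects the raw body returned by the _bulk endpoint.
final class BulkResponseCheck {

    // Looks for an "errors": false flag anywhere in the body.
    // This is a crude textual check; a JSON parser would be more robust.
    private static final Pattern NO_ERRORS = Pattern.compile("\"errors\"\\s*:\\s*false");

    /** Returns true if the response body reports that no bulk item failed. */
    static boolean indexedWithoutErrors(String responseBody) {
        return responseBody != null && NO_ERRORS.matcher(responseBody).find();
    }

    public static void main(String[] args) {
        System.out.println(indexedWithoutErrors("{\"errors\":false,\"took\":30,\"items\":[]}"));
        System.out.println(indexedWithoutErrors("{\"errors\":true,\"took\":30,\"items\":[]}"));
    }
}
```

In the test setup, this could be combined with the existing assertion, for example by passing result.getStdout() (the Testcontainers accessor for the command's standard output) to indexedWithoutErrors.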

Less (often) is more

In the previous step, we reduced network-related delays in our tests. Now, let's address CPU usage.

There's nothing wrong with relying on @Testcontainers and @Container annotations. The key, though, is to understand how they work: when you annotate an instance field with @Container, Testcontainers will start a fresh container for each test. Since container startup isn't free (it takes time and resources), we pay this cost for every test.

Starting a fresh container for each test is necessary in some scenarios (e.g., when testing system start behavior), but not in our case. Instead of starting a new container for each test, we can keep the same container and Elasticsearch instance for all tests, as long as we properly reset the container's state before each test.
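The difference between the two lifecycles can be sketched with a simple cost model. The figures used here (15 seconds of container startup, 3 seconds per test including data reset) are illustrative assumptions loosely in line with the numbers reported later in this post, not measurements, and the class is made up for this example.

```java
// Back-of-the-envelope model of the total duration of a test suite.
final class ContainerLifecycleCost {

    /** Total seconds when every test starts its own container. */
    static int perTestContainer(int tests, int startupSeconds, int testSeconds) {
        return tests * (startupSeconds + testSeconds);
    }

    /** Total seconds when one container is started once and shared by all tests. */
    static int sharedContainer(int tests, int startupSeconds, int testSeconds) {
        return startupSeconds + tests * testSeconds;
    }

    public static void main(String[] args) {
        int startup = 15; // assumed container startup time, in seconds
        int perTest = 3;  // assumed time per test including data reset, in seconds
        for (int tests : new int[] {3, 6, 12}) {
            System.out.printf("%d tests: %d s with per-test containers, %d s shared%n",
                    tests,
                    perTestContainer(tests, startup, perTest),
                    sharedContainer(tests, startup, perTest));
        }
    }
}
```

With a shared container, the startup cost is paid once, so each additional test adds only its own execution time.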

First, make the container a static field. Next, before creating the books index (by defining the mapping) and populating it with documents, delete the existing index if it exists from a previous test.

For this reason, setupDataInContainer() should start with something like:

    ExecResult result = elasticsearch.execInContainer(
            "curl", "https://localhost:9200/books", "-u", "elastic:changeme",
            "--cacert", "/usr/share/elasticsearch/config/certs/http_ca.crt",
            "-X", "DELETE"
    );
    // we don't check the result, because the index might not have existed

    // now we create the index and give it a precise mapping, just like for production
    result = elasticsearch.execInContainer(
            "curl", "https://localhost:9200/books", "-u", "elastic:changeme",
            "--cacert", "/usr/share/elasticsearch/config/certs/http_ca.crt",
            "-X", "PUT",
            "-H", "Content-Type: application/json",
            "-d", """
                {
                  "mappings": {
                    "properties": {
                      "title": { "type": "text" },
                      "description": { "type": "text" },
                      "author": { "type": "text" },
                      "year": { "type": "short" },
                      "publisher": { "type": "text" },
                      "ratings": { "type": "half_float" }
                    }
                  }
                }
                """
    );
    assert result.getExitCode() == 0;

As you can see, we can use curl to execute almost any command from within the container. This approach offers two significant advantages:

- Speed: If the payload (like books.ndjson) is already inside the container, we eliminate the need to repeatedly copy the same data, drastically improving execution time.

- Language independence: Since curl commands aren't tied to the programming language of the tests, they are easier to understand and maintain, even for those who may be more familiar with other tech stacks.
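Since the curl invocations in this post repeat the same base URL, credentials, and certificate path, a small helper can assemble the argument list. This is only a sketch with hypothetical names, not code from the demo project; the constants simply mirror the values used in the calls shown earlier.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper that builds the curl argument list for in-container calls.
final class CurlCommand {

    // Values mirror the calls shown in this post; adjust them to your container setup.
    private static final String BASE_URL = "https://localhost:9200";
    private static final String CREDENTIALS = "elastic:changeme";
    private static final String CA_CERT = "/usr/share/elasticsearch/config/certs/http_ca.crt";

    /** Builds the curl arguments for the given HTTP method and path, plus optional extras. */
    static String[] build(String method, String path, String... extraArgs) {
        List<String> command = new ArrayList<>(List.of(
                "curl", BASE_URL + path, "-u", CREDENTIALS,
                "--cacert", CA_CERT,
                "-X", method));
        command.addAll(List.of(extraArgs)); // e.g. headers or a request body
        return command.toArray(new String[0]);
    }

    public static void main(String[] args) {
        System.out.println(String.join(" ", build("DELETE", "/books")));
    }
}
```

Because execInContainer accepts a variable number of strings, the returned array could be passed to it directly, for example elasticsearch.execInContainer(CurlCommand.build("DELETE", "/books")).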

While using raw curl calls isn't ideal for production code, it's an effective solution for test setup. Especially when combined with a single container startup, this method reduced my tests' execution time to around 30 seconds.

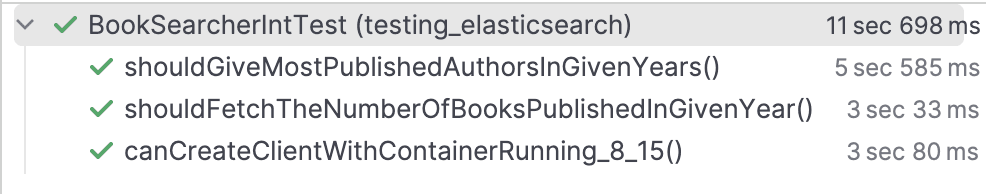

It's also worth noting that in the demo project (branch data-init), which currently has only three integration tests, roughly half of the total duration is spent on container startup. After the initial warm-up, individual tests take about 3 seconds each. Consequently, adding three more tests won't double the overall time to another 30 seconds, but will only increase it by roughly 9-10 seconds. The test execution times, including data initialization, can be observed in the IDE:

Summary

In this post, I demonstrated several improvements for integration tests using Elasticsearch:

- Integration tests can run faster without changing the tests themselves — just by rethinking data initialization and container lifecycle management.

- Elasticsearch should be started only once, rather than for every test.

- Data initialization is most efficient when the data is as close to Elasticsearch as possible and transmitted efficiently.

- Although reducing the test dataset size is an obvious optimization (and not covered here), it's sometimes impractical. Therefore, we focused on demonstrating technical methods instead.

Overall, we significantly reduced the test suite's duration — from 5.5 minutes to around 30 seconds — lowering costs and speeding up the feedback loop.

In the next post, we'll explore more advanced techniques to further reduce execution time in Elasticsearch integration tests.

Let us know on our Discuss forums or the community Slack channel if you use any of the techniques described above, or if you have questions.
