Functionbeat overview


Functionbeat is an Elastic Beat that you deploy as a function in your serverless environment to collect data from cloud services and ship it to the Elastic Stack.

Version 8.1.3 supports deploying Functionbeat as an AWS Lambda service. It responds to triggers defined for the following event sources:

- CloudWatch Logs

- Amazon Simple Queue Service (SQS)

- Amazon Kinesis

Functionbeat is an Elastic Beat. It’s based on the libbeat framework. For more information, see the Beats Platform Reference.

The following sections explore some common use cases for Functionbeat:

Want to ship logs from Google Cloud? Use our Google Cloud Dataflow templates to ship Google Pub/Sub and Google Cloud Storage logs directly from the Google Cloud Console. To learn more, refer to GCP Dataflow templates.

Monitor cloud deployments

You can deploy Functionbeat in your serverless environment to collect logs and metrics generated by cloud services and stream the data to the Elastic Stack for centralized analytics.

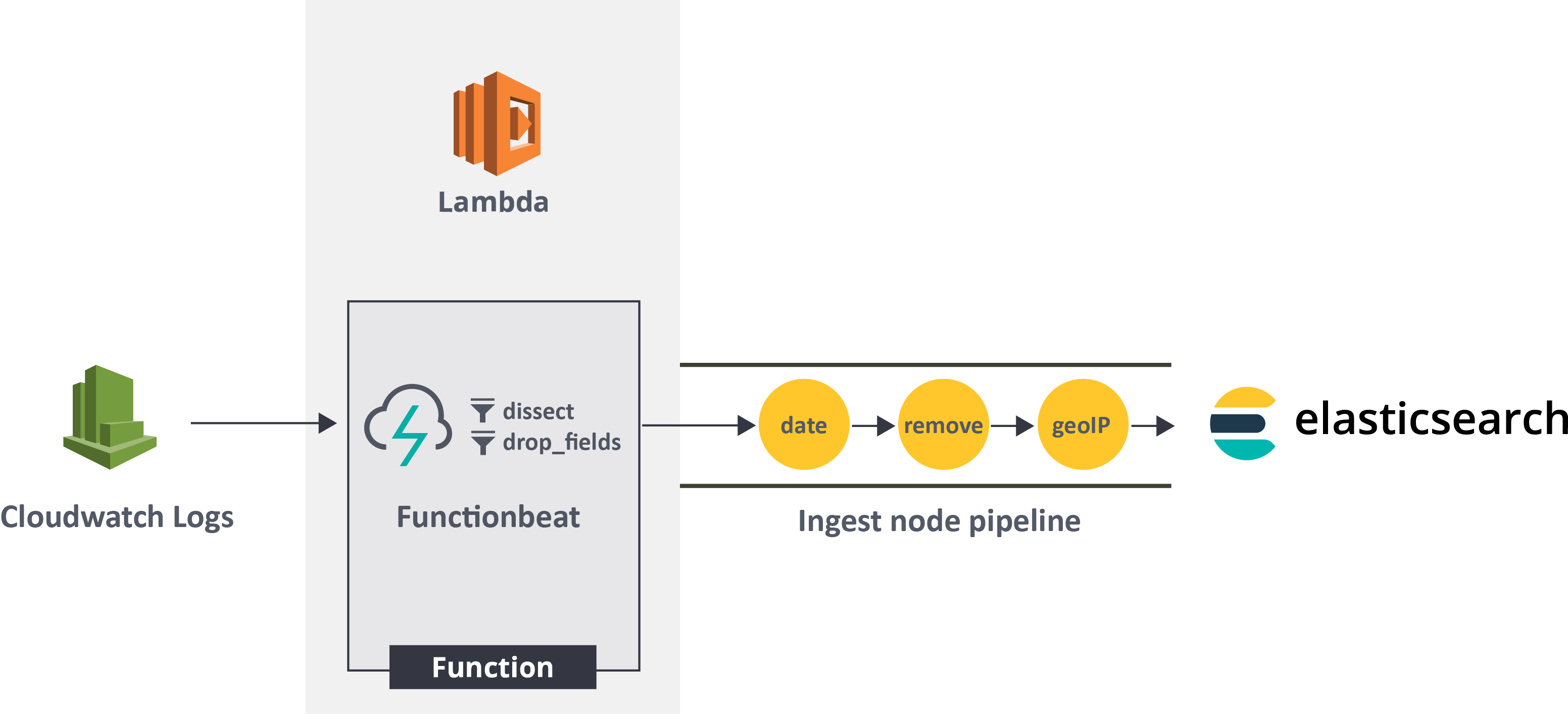

Monitor AWS services with CloudWatch Logs

You can deploy Functionbeat as a Lambda function on AWS to receive events from a CloudWatch log group, extract and structure the relevant fields, and then stream the events to Elasticsearch.

The processing pipeline for this use case typically looks like this:

- Functionbeat runs as a Lambda function on AWS and reads the data stream from a CloudWatch log group.

- Beats processors, such as dissect and drop_fields, filter and structure the events.

- Optional ingest pipelines in Elasticsearch further enhance the data.

- The structured events are indexed into an Elasticsearch cluster.
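To make the first two stages of this pipeline more concrete, the following Python sketch mimics them outside of Functionbeat. It is an illustration only: Functionbeat is written in Go and configured through functionbeat.yml, so none of this code is part of it. The sketch assumes the standard CloudWatch Logs subscription payload that AWS delivers to Lambda (base64-encoded, gzip-compressed JSON under `event["awslogs"]["data"]`). The `dissect` and `drop_fields` functions are hypothetical, heavily simplified stand-ins for the Beats processors of the same name, and the field names (`level`, `service`, `text`, `log_group`, `log_stream`) are illustrative assumptions.

```python
import base64
import gzip
import json


def decode_cloudwatch_payload(event: dict) -> list[dict]:
    """Unpack a CloudWatch Logs subscription event delivered to Lambda.

    AWS sends the log batch as base64-encoded, gzip-compressed JSON
    under event["awslogs"]["data"].
    """
    raw = gzip.decompress(base64.b64decode(event["awslogs"]["data"]))
    batch = json.loads(raw)
    # Flatten the batch into one event per log line, keeping some metadata.
    return [
        {
            "message": log_event["message"],
            "timestamp": log_event["timestamp"],
            "log_group": batch["logGroup"],
            "log_stream": batch["logStream"],
        }
        for log_event in batch["logEvents"]
    ]


def dissect(message: str, keys: list[str]) -> dict:
    """Hypothetical stand-in for the dissect processor.

    Splits on single spaces; the last key receives the rest of the line,
    roughly like the tokenizer "%{level} %{service} %{text}".
    """
    parts = message.split(" ", len(keys) - 1)
    return dict(zip(keys, parts))


def drop_fields(event: dict, fields: list[str]) -> dict:
    """Hypothetical stand-in for drop_fields: copy the event without the listed fields."""
    return {k: v for k, v in event.items() if k not in fields}


def process(event: dict) -> list[dict]:
    """Decode the payload, structure each message, and drop an unneeded field."""
    structured = []
    for e in decode_cloudwatch_payload(event):
        e.update(dissect(e["message"], ["level", "service", "text"]))
        structured.append(drop_fields(e, ["log_stream"]))
    return structured


if __name__ == "__main__":
    # Build a sample payload the same way AWS encodes it.
    batch = {
        "logGroup": "/aws/lambda/demo",
        "logStream": "2022/01/01/abc",
        "logEvents": [
            {"id": "1", "timestamp": 1640995200000, "message": "ERROR checkout payment declined"}
        ],
    }
    data = base64.b64encode(gzip.compress(json.dumps(batch).encode())).decode()
    print(process({"awslogs": {"data": data}}))
```

In a real deployment the equivalent structuring is declared as processors in the Functionbeat configuration, and the resulting events can still pass through an ingest pipeline before indexing.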

Perform event-driven processing

You can use Functionbeat to implement event-driven processing workflows with cloud messaging queues and the Elastic Stack. Functionbeat responds to event triggers from Amazon Kinesis and Amazon SQS.

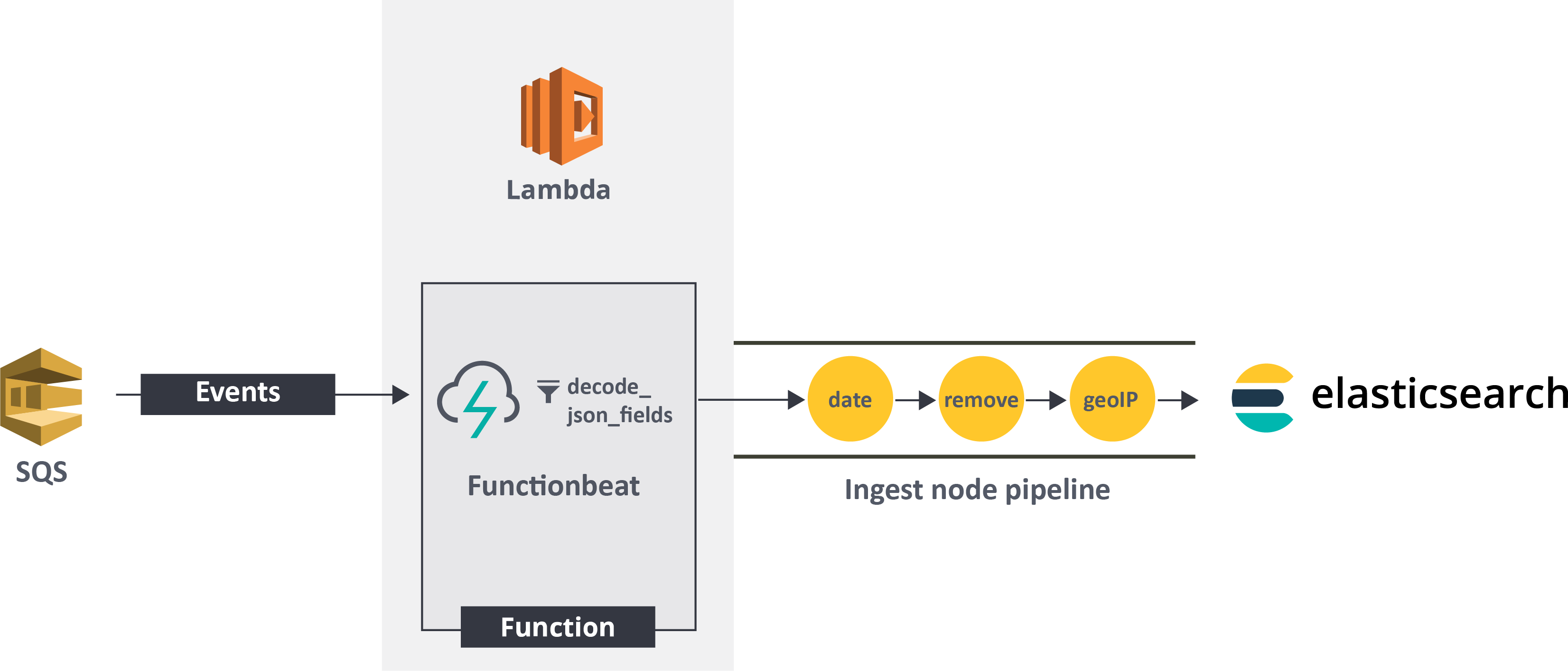
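Kinesis and SQS triggers deliver their payloads to a Lambda function in different shapes. Functionbeat takes care of this decoding itself; the minimal Python sketch below only illustrates the difference, assuming the standard Lambda event formats: SQS records carry the message as a plain string in `body`, while Kinesis records carry it base64-encoded in `kinesis.data`. The function name `extract_messages` is hypothetical.

```python
import base64


def extract_messages(event: dict) -> list[str]:
    """Return the raw message strings carried by a Lambda event from SQS or Kinesis."""
    messages = []
    for record in event.get("Records", []):
        source = record.get("eventSource")
        if source == "aws:sqs":
            # SQS delivers the message body as a plain string.
            messages.append(record["body"])
        elif source == "aws:kinesis":
            # Kinesis delivers the record payload base64-encoded.
            messages.append(base64.b64decode(record["kinesis"]["data"]).decode("utf-8"))
        else:
            raise ValueError(f"unsupported event source: {source!r}")
    return messages


if __name__ == "__main__":
    sqs_event = {"Records": [{"eventSource": "aws:sqs", "body": "hello from sqs"}]}
    kinesis_event = {
        "Records": [
            {
                "eventSource": "aws:kinesis",
                "kinesis": {"data": base64.b64encode(b"hello from kinesis").decode()},
            }
        ]
    }
    print(extract_messages(sqs_event))      # ['hello from sqs']
    print(extract_messages(kinesis_event))  # ['hello from kinesis']
```

Dispatching on `eventSource` keeps one handler usable for both trigger types; rejecting unknown sources makes a misconfigured trigger fail loudly instead of silently dropping data.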

Analyze application data from SQS

For applications that send JSON-encoded events to an SQS queue, Functionbeat can listen for, ingest, and decode JSON events before shipping them to Elasticsearch, where you can analyze the streaming data.

The processing pipeline for this use case typically looks like this:

- Functionbeat runs as a serverless shipper and listens to an SQS queue for application events.

- The Beats decode_json_fields processor decodes fields that contain JSON strings and replaces the strings with valid JSON objects.

- Optional ingest pipelines in Elasticsearch further enhance the data.

- The events are indexed into an Elasticsearch cluster.
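The decoding step in this pipeline can be sketched in Python as follows. This is a simplified, hypothetical stand-in for the decode_json_fields processor, not its implementation: it replaces each listed field that holds a JSON string with the decoded object and leaves the field untouched if it is missing, not a string, or not valid JSON. Storing the SQS message body in a field named `message` is an assumption made for the example.

```python
import json


def decode_json_fields(event: dict, fields: list[str]) -> dict:
    """Simplified stand-in for the decode_json_fields processor.

    For each listed field holding a JSON string, replace the string with the
    decoded object. Fields that are missing, not strings, or not valid JSON
    are left unchanged.
    """
    out = dict(event)
    for name in fields:
        value = out.get(name)
        if not isinstance(value, str):
            continue
        try:
            out[name] = json.loads(value)
        except json.JSONDecodeError:
            pass  # keep the original string if it is not valid JSON
    return out


def handle_sqs(event: dict) -> list[dict]:
    """Turn each SQS record into an event whose 'message' field is decoded."""
    return [
        decode_json_fields({"message": record["body"]}, ["message"])
        for record in event.get("Records", [])
    ]


if __name__ == "__main__":
    sqs_event = {
        "Records": [
            {"body": '{"user": "alice", "action": "login"}'},
            {"body": "not json"},
        ]
    }
    print(handle_sqs(sqs_event))
    # [{'message': {'user': 'alice', 'action': 'login'}}, {'message': 'not json'}]
```

Leaving undecodable strings in place, rather than dropping the event, means malformed application messages still reach Elasticsearch, where they can be inspected.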