Kafka module

The kafka module collects and parses the logs created by Kafka.

When you run the module, it performs a few tasks under the hood:

- Sets the default paths to the log files (but don’t worry, you can override the defaults)

- Makes sure each multiline log event gets sent as a single event

- Uses ingest node to parse and process the log lines, shaping the data into a structure suitable for visualizing in Kibana

- Deploys dashboards for visualizing the log data

Read the quick start to learn how to set up and run modules.

Compatibility

The kafka module was tested with logs from versions 0.9, 1.1.0 and 2.0.0.

Configure the module

You can further refine the behavior of the kafka module by specifying variable settings in the modules.d/kafka.yml file, or overriding settings at the command line.

The following example shows how to set paths in the modules.d/kafka.yml file to override the default paths for logs:

- module: kafka
  log:
    enabled: true
    var.paths:
      - "/path/to/logs/controller.log*"
      - "/path/to/logs/server.log*"
      - "/path/to/logs/state-change.log*"
      - "/path/to/logs/kafka-*.log*"

To specify the same settings at the command line, you use:

-M "kafka.log.var.paths=[/path/to/logs/controller.log*, /path/to/logs/server.log*, /path/to/logs/state-change.log*, /path/to/logs/kafka-*.log*]"

Variable settings

Each fileset has separate variable settings for configuring the behavior of the module. If you don’t specify variable settings, the kafka module uses the defaults.

For more information, see Configure variable settings. Also see Override input settings.

When you specify a setting at the command line, remember to prefix the setting with the module name, for example, kafka.log.var.paths instead of log.var.paths.

log fileset settings

var.kafka_home

The path to your Kafka installation. The default is /opt. For example:

- module: kafka
  log:
    enabled: true
    var.kafka_home: /usr/share/kafka_2.12-2.4.0
    ...

var.paths

An array of glob-based paths that specify where to look for the log files. All patterns supported by Go Glob are also supported here. For example, you can use wildcards to fetch all files from a predefined level of subdirectories: /path/to/log/*/*.log. This fetches all .log files from the subfolders of /path/to/log. It does not fetch log files from the /path/to/log folder itself. If this setting is left empty, Filebeat will choose log paths based on your operating system.
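
To illustrate the wildcard pattern described for var.paths, here is a minimal sketch of a modules.d/kafka.yml entry that uses it. The /path/to/log directory is the placeholder from the text, not a real default, so substitute your own log location.

```yaml
# Sketch only: /path/to/log is a placeholder directory.
- module: kafka
  log:
    enabled: true
    var.paths:
      # Matches .log files exactly one directory level below /path/to/log.
      # Files directly inside /path/to/log are not matched.
      - "/path/to/log/*/*.log"
```

Because var.paths is an array, you can list the parent folder as a second entry (for example "/path/to/log/*.log") if you also need the files it contains.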

Timezone support

This module parses logs that don’t contain timezone information. For these logs, Filebeat reads the local timezone and uses it when parsing to convert the timestamp to UTC. The timezone to be used for parsing is included in the event in the event.timezone field.

To disable this conversion, the event.timezone field can be removed with the drop_fields processor.
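
As a rough sketch of that approach, the snippet below removes event.timezone with the drop_fields processor. It assumes the processor is declared in a top-level processors section of filebeat.yml; your configuration may place processors elsewhere.

```yaml
# Assumption: top-level processors section in filebeat.yml.
processors:
  - drop_fields:
      # Remove the timezone hint so timestamps are not shifted from local time.
      fields: ["event.timezone"]
      # Do not raise an error for events that lack the field.
      ignore_missing: true
```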

If the logs originate from systems or applications in a different timezone than the local one, the event.timezone field can be overwritten with the original timezone using the add_fields processor.
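
One possible way to do this is sketched below. It sets target to event so that the timezone key is written as event.timezone. The value Europe/Amsterdam is a hypothetical placeholder, and the top-level placement in filebeat.yml is an assumption; use the timezone of the system that produced the logs.

```yaml
# Assumption: top-level processors section in filebeat.yml.
processors:
  - add_fields:
      # Write the field under "event", producing event.timezone.
      target: event
      fields:
        # Hypothetical value: replace with the source system's timezone.
        timezone: Europe/Amsterdam
```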

See Processors for information about specifying processors in your config.

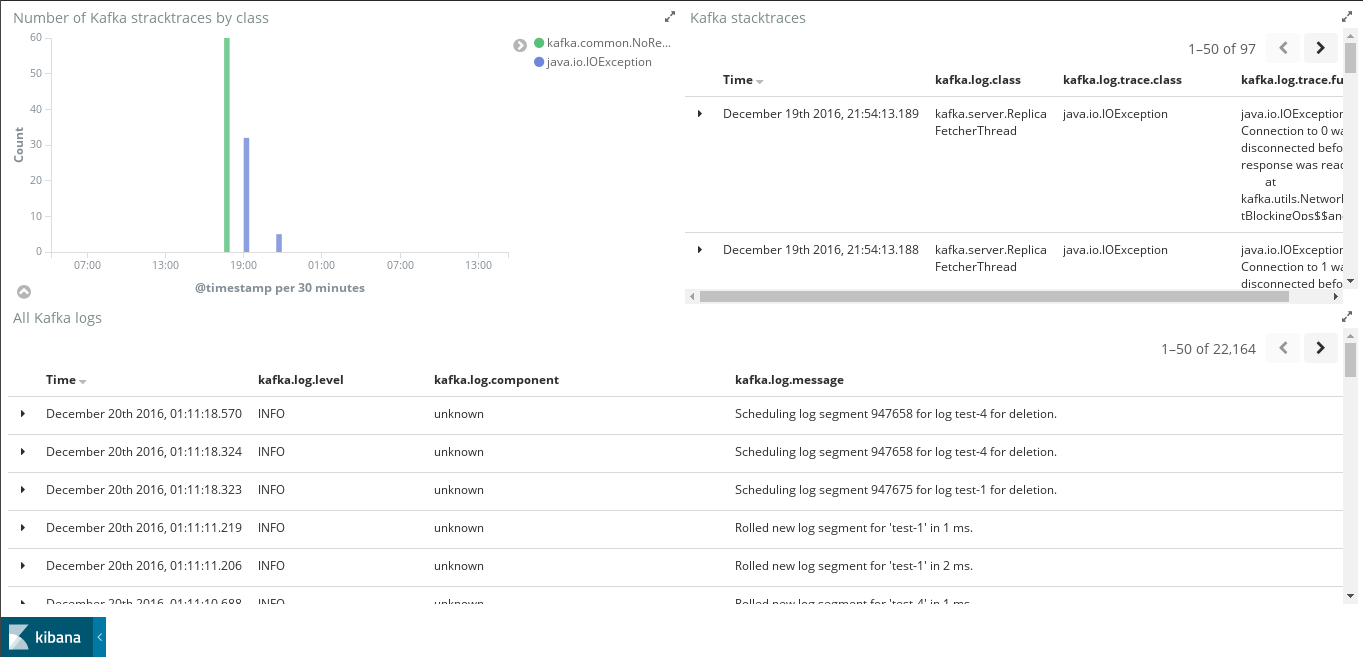

Example dashboard

This module comes with a sample dashboard to see Kafka logs and stack traces.

Fields

For a description of each field in the module, see the exported fields section.