How Orca leverages Search AI to help users gain visibility, achieve compliance, and prioritize risks

As organizations continue deploying more applications to the cloud, it becomes critical to manage their cloud security posture. Security technology providers such as Orca Security are leading the pack by offering organizations better ways of protecting their environments and prioritizing their greatest cloud risks. However, finding the most relevant data gets harder for security teams as their ecosystems grow more complex and adversary attacks grow more sophisticated. This is especially true for organizations that use multiple cloud providers: analysts have to account for the different taxonomy of each provider, which makes retrieving key information more difficult.

Orca evaluated a “vector embeddings first” database product but found that, without proper keyword search alongside the embeddings, the results were lacking. That is why Orca turned to Elasticsearch, integrating its advanced search capabilities to create a smarter, AI-driven search engine for its security solution. This strategic choice transformed Orca’s platform, enabling its users to perform complex, domain-specific searches with ease and accuracy.

Search at the center of attention

Orca Security needed a tool to stay ahead of the curve and keep pace with the demands of cybersecurity teams (as well as developers, DevOps, cloud architects, risk governance, and compliance teams) who need to easily and intuitively understand exactly what’s in their cloud environments. Orca wanted teams across the organization, regardless of their skill level, to quickly respond to zero-day risks, perform audits, optimize cloud assets, and understand their exposure to threats so they can make data-driven decisions.

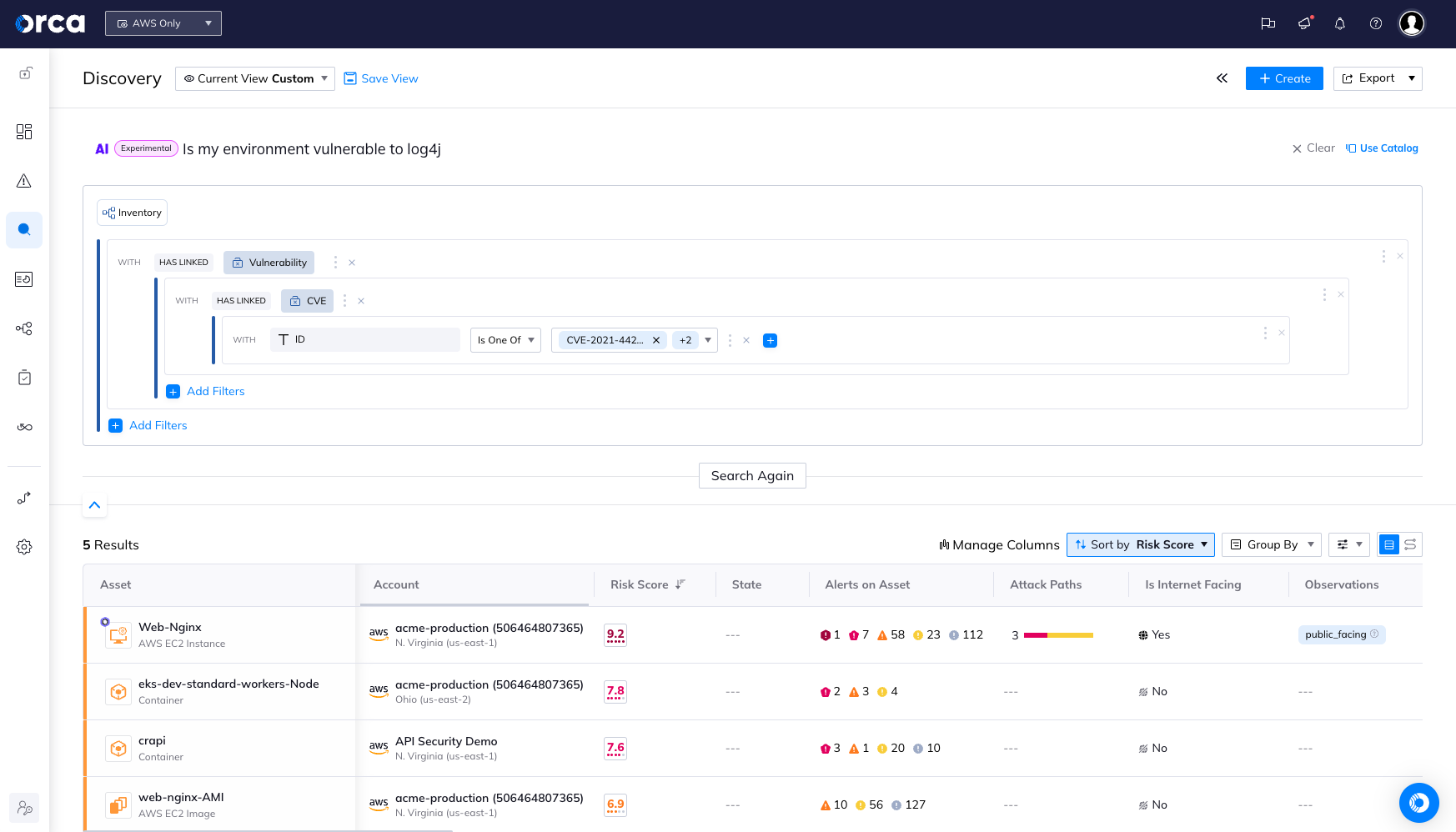

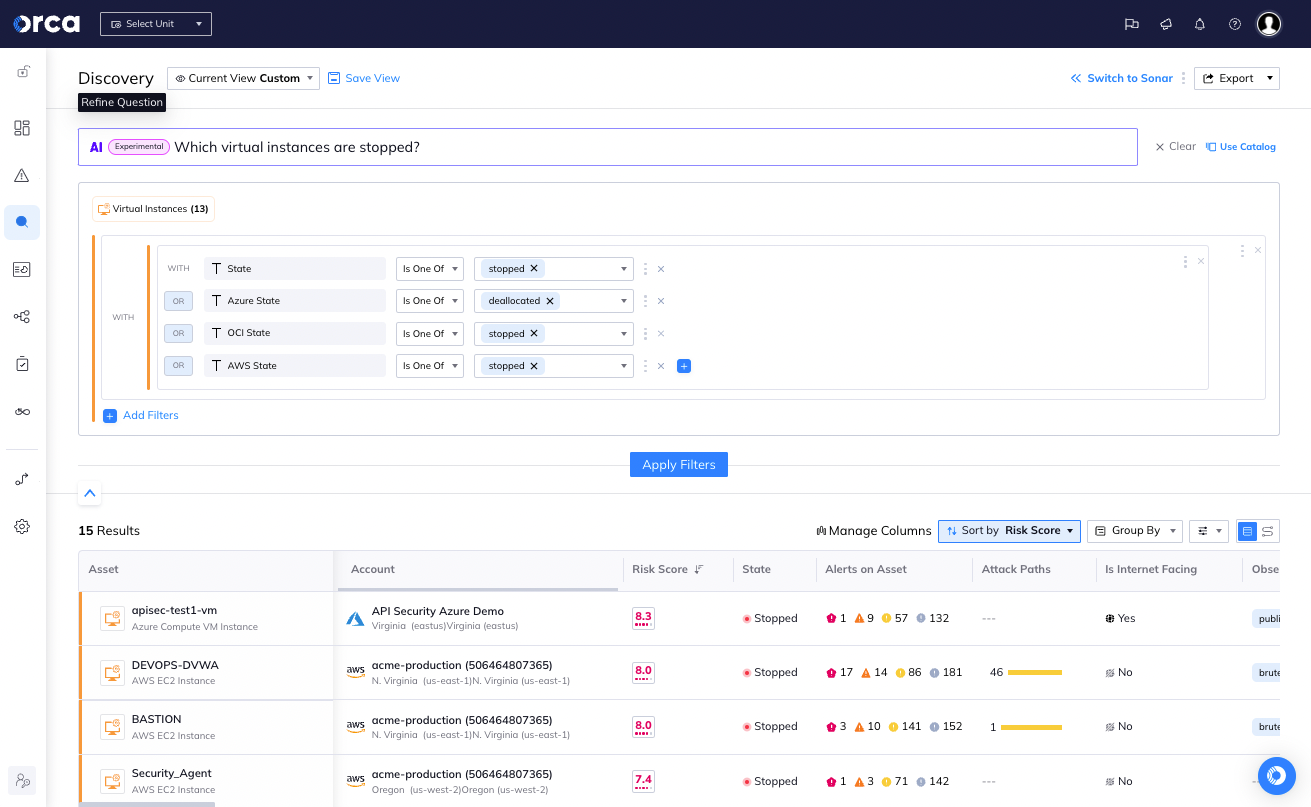

Orca realized that its users needed a smarter, more intuitive in-app way to search for domain-specific queries, ask complex questions in simple language, and get accurate results instantly. For example, a customer might ask, “Which internet-exposed VMs contain personal health information?” Such queries require understanding complex subjects, attributes, and relationships within the data. Orca needed a search engine that could interpret these questions and automatically generate the appropriate filters.

So the team at Orca sought to implement a search engine powered by AI that could make those complex tasks easier, and Elasticsearch was the perfect fit. Elasticsearch brought several significant advantages, contributing to the overall promise of Orca Security's AI-driven search engine. Below are some of the key advantages the team at Orca saw in Elasticsearch:

High-performance search capabilities

Elasticsearch delivers a hybrid search setup that combines keyword matching with vector search, providing precise and relevant results even for complex queries involving domain-specific terms and attributes. Its powerful filtering capabilities are essential, especially when working with schemas like the Orca Schema. For instance, if the query's subject is determined to be a VM and the AI is searching for an attribute like “Has PII,” Elasticsearch scopes and filters the search to only include attributes related to VMs. This excludes irrelevant attributes from other models, such as PII on a database, ensuring both accuracy and the creation of valid queries.
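The scoping described above can be sketched as a search request body that applies the same subject filter to both the keyword query and the kNN clause. This is a minimal illustration assuming an Elasticsearch 8.x style search body; the field names (`subject`, `name`, `description`, `embedding`) and the helper function are hypothetical and are not taken from Orca's schema.

```python
from __future__ import annotations

from typing import Any


def build_scoped_hybrid_query(
    text: str,
    query_vector: list[float],
    subject: str,
    k: int = 5,
) -> dict[str, Any]:
    """Build a hybrid (keyword + kNN) search body scoped to one subject."""
    # Hypothetical field names; a real index would use its own mapping.
    subject_filter = {"term": {"subject": subject}}
    return {
        # Keyword side: match the text, but only on attributes of the subject.
        "query": {
            "bool": {
                "must": [
                    {"multi_match": {"query": text, "fields": ["name", "description"]}}
                ],
                "filter": [subject_filter],
            }
        },
        # Vector side: the same filter keeps other subjects out of the kNN hits.
        "knn": {
            "field": "embedding",
            "query_vector": query_vector,
            "k": k,
            "num_candidates": 10 * k,
            "filter": subject_filter,
        },
        "size": k,
    }


# Toy three-dimensional vector; a real embedding would be much larger.
body = build_scoped_hybrid_query("Has PII", [0.1, 0.2, 0.3], subject="VM")
```

Because the filter is applied on both sides, an attribute such as PII on a database never reaches the candidate list when the subject is a VM.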

Flexibility and customization

Elasticsearch's ability to handle custom boostings and multi-match fields enhances the search quality. For instance, boosting the weight of names and descriptions differently ensures a balanced search result. Orca leveraged these features to fine-tune search parameters, providing a tailored experience for its users.
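As a rough illustration of per-field boosting, the sketch below builds a `multi_match` query in which each field carries its own weight using the `field^boost` syntax. The field names and weights are made up for the example; the values Orca actually tuned are not described in this post.

```python
from __future__ import annotations

from typing import Any


def build_boosted_multi_match(text: str, boosts: dict[str, float]) -> dict[str, Any]:
    """Build a multi_match query where each field has its own boost."""
    # "name^3" means a match on "name" counts three times as much.
    fields = [f"{field}^{weight}" for field, weight in boosts.items()]
    return {"query": {"multi_match": {"query": text, "fields": fields}}}


# Hypothetical weights: names matter more than descriptions.
body = build_boosted_multi_match("unencrypted ssh keys", {"name": 3, "description": 1})
```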

Cost and performance efficiency

Elasticsearch enables significant cost savings for GenAI use cases by reducing the load on large language models (LLMs), which can be expensive and slow, especially when processing large volumes of data. Its filtering and retrieval capabilities make searches faster and more cost-effective. Selecting only the most relevant examples for each query and passing them to the model, a technique known as retrieval augmented generation (RAG), significantly reduces the cost of LLM operations.

Foundation model LLMs, trained on generic data, often do not inherently understand Orca's query language (DSL) or the constantly evolving cybersecurity data graph with thousands of unique asset types and attributes. Explaining the DSL rules alone consumed around 2,000 tokens, and providing examples of transformations added even more. Given the LLMs' limited context windows (8K tokens at the time), every additional token increased latency and cost. By using Elasticsearch, we could select the three to six most relevant examples from hundreds, ensuring only the necessary data was sent to the LLM. This approach not only saved costs but also improved accuracy and reduced latency.
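To make the token arithmetic concrete, here is a small sketch of selecting ranked examples under a context budget. The 8K window and the roughly 2,000 tokens for DSL rules come from the figures above; the per-example token counts and the space reserved for the model's answer are assumptions chosen only for illustration.

```python
from __future__ import annotations


def select_examples(
    ranked: list[tuple[str, int]],
    context_window: int = 8000,   # "8K tokens", approximated
    rules_tokens: int = 2000,     # DSL rules, per the post
    reserved_for_answer: int = 1000,  # assumption, not from the post
    max_examples: int = 6,
) -> list[str]:
    """Pick examples in relevance order until the token budget runs out."""
    budget = context_window - rules_tokens - reserved_for_answer
    chosen: list[str] = []
    for example_id, tokens in ranked:
        if len(chosen) == max_examples:
            break
        if tokens > budget:
            continue  # skip examples that no longer fit
        chosen.append(example_id)
        budget -= tokens
    return chosen


# Eight hypothetical examples of 900 tokens each, already ranked by relevance.
ranked = [(f"ex{i}", 900) for i in range(1, 9)]
chosen = select_examples(ranked)
```

With these assumed sizes, 5,000 tokens remain for examples, so five of them fit, which is in line with the three to six examples mentioned above.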

Load on LLMs

While we can't disclose specific data figures, the key takeaway is this: Elasticsearch enabled us to drastically reduce the amount of data sent to the LLM. By pre-filtering and curating only the most relevant examples (three to six instead of potentially hundreds), we minimized the LLM’s workload. This directly translates to faster response times, lower cost, and a more efficient search experience overall.

The AI search is among the most loved features of the platform. Users have run queries covering thousands of different cybersecurity concepts and permutations, in dozens of different languages (more on language support to come in a future post).

Search AI powers a supercharged cloud security experience

By combining the power of Elasticsearch with its deep commitment to AI innovation, the Orca team was able to greatly improve the user journey. The new search experience lowers skill thresholds, simplifies tasks, accelerates remediation, and improves understanding of the cloud environment. This is how it works:

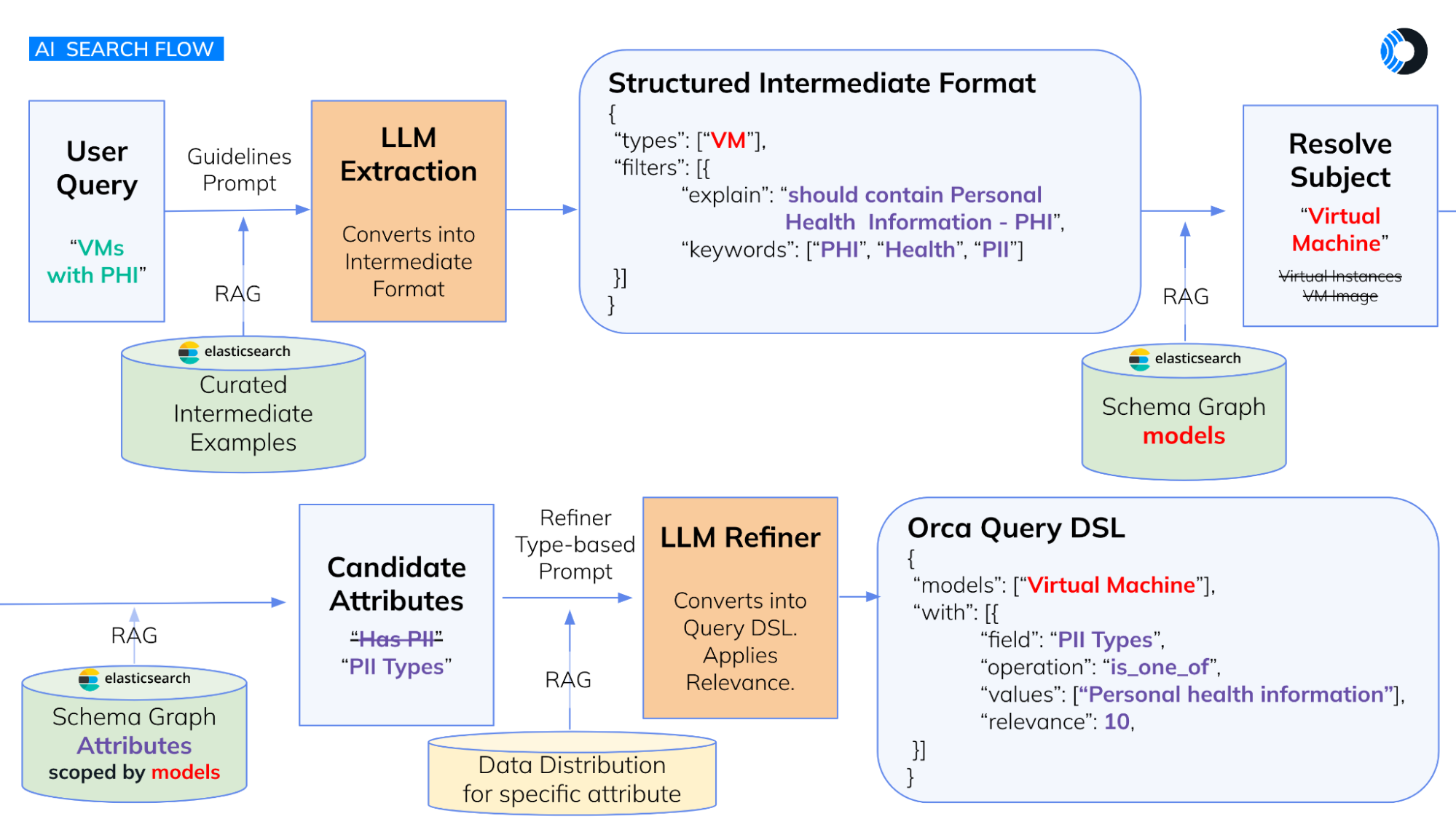

Step 1: User query processing

The investigation starts with the user inputting a query into a search box. Orca Security uses an LLM to convert the user's question into an intermediate format. This format includes the subject (e.g., virtual machine) and the required attributes (e.g., personal health information).
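One way to picture the intermediate format is as a small typed structure parsed from the LLM's JSON reply. The post only says the format includes a subject and the required attributes, so the exact keys (`subject`, `attributes`) and the class name below are hypothetical.

```python
from __future__ import annotations

import json
from dataclasses import dataclass, field


@dataclass
class IntermediateQuery:
    """Hypothetical shape of the intermediate format: a subject plus attributes."""
    subject: str
    attributes: list[str] = field(default_factory=list)


def parse_intermediate(raw: str) -> IntermediateQuery:
    """Parse and validate the JSON text returned by the LLM."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    subject = data.get("subject")
    if not isinstance(subject, str) or not subject:
        raise ValueError("missing or invalid 'subject'")
    attributes = data.get("attributes", [])
    if not isinstance(attributes, list) or not all(isinstance(a, str) for a in attributes):
        raise ValueError("'attributes' must be a list of strings")
    return IntermediateQuery(subject=subject, attributes=list(attributes))


parsed = parse_intermediate(
    '{"subject": "virtual machine", "attributes": ["personal health information"]}'
)
```

Validating the reply before using it means a malformed answer fails early instead of producing an invalid query later in the pipeline.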

Step 2: Data transformation and RAG

In the context of Orca, RAG involves curating examples that transform user queries into an intermediate format. When a user inputs a query, Elasticsearch selects the most relevant examples by combining keyword matching and embedding search.

For instance, if the query is “Assets with PII,” Elasticsearch finds the closest curated examples, such as:

“Do we have any PII outside of Europe?”

“VMs with credit cards & PCI that have unencrypted SSH keys”

“Abandoned assets and Resources”

Each example comes with its curated expected JSON output and reasoning. This process ensures the LLM has sufficient context to accurately transform the query into a structured format, improving the overall search experience and ensuring valid query creation.

In Step 2, RAG using Elasticsearch is critical for translating user queries into Orca’s internal representation. Here’s how it works:

- Curated examples: We’ve created hundreds of examples demonstrating how to transform natural language queries into Orca’s structured format.

- Elasticsearch’s role: For each new user query, Elasticsearch identifies the most relevant examples from our curated set. It does this by combining keyword matching (finding exact terms) and embedding search (understanding semantic similarity).

- Example: If a user asks, “Show me all internet-facing servers with vulnerabilities,” Elasticsearch might retrieve examples like “Find assets exposed to the internet,” “List all servers with critical CVEs,” and “Show me resources missing security patches.”

- LLM’s task: These relevant examples, along with the user’s original query, are sent to the LLM. The LLM then uses this context to accurately transform the user’s request into Orca’s structured query language.
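The retrieval step in this flow can be approximated in plain Python to show the idea of blending a keyword score with an embedding score and then assembling a few-shot prompt. This is a toy stand-in, not Elasticsearch's scoring: the Jaccard overlap, the two-dimensional vectors, the `alpha` weight, and the example outputs are all assumptions made for illustration.

```python
from __future__ import annotations

import math


def keyword_score(query: str, text: str) -> float:
    """Jaccard overlap of lowercase tokens (a crude keyword signal)."""
    q, t = set(query.lower().split()), set(text.lower().split())
    return len(q & t) / len(q | t) if q | t else 0.0


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_examples(query: str, query_vec: list[float], examples: list[dict],
                 k: int = 3, alpha: float = 0.5) -> list[dict]:
    """Rank curated examples by a weighted blend of both signals."""
    def score(ex: dict) -> float:
        return (alpha * keyword_score(query, ex["question"])
                + (1 - alpha) * cosine(query_vec, ex["vector"]))
    return sorted(examples, key=score, reverse=True)[:k]


def build_prompt(query: str, examples: list[dict]) -> str:
    """Place the selected examples before the user's question."""
    shots = "\n\n".join(f"Q: {ex['question']}\nA: {ex['output']}" for ex in examples)
    return f"{shots}\n\nQ: {query}\nA:"


# Hypothetical curated set with toy vectors and placeholder outputs.
examples = [
    {"question": "Find assets exposed to the internet",
     "vector": [0.9, 0.1], "output": '{"subject": "Asset"}'},
    {"question": "List all servers with critical CVEs",
     "vector": [0.8, 0.2], "output": '{"subject": "Server"}'},
    {"question": "Abandoned assets and Resources",
     "vector": [0.0, 1.0], "output": '{"subject": "Asset"}'},
]
query = "Show me all internet-facing servers with vulnerabilities"
picked = top_examples(query, [1.0, 0.0], examples, k=2)
prompt = build_prompt(query, picked)
```

Only the selected examples and the original question end up in the prompt, which is what keeps the request to the LLM small.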

We also evaluated a “vector embeddings first” database but found that, without proper keyword search alongside the embeddings, the results were lacking.

Step 3: Schema modeling and attribute matching

Orca Security has modeled its entire schema within Elasticsearch, including hundreds of subjects and thousands of attributes. Elasticsearch’s accurate matching capabilities help translate user queries into the correct terms used in Orca’s database. For example, a user might refer to a “VM,” but the system needs to understand various related terms like “virtual machine” or “virtual instances.”
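The term-matching problem can be illustrated with a simple alias lookup that maps what the user typed to a canonical subject name. This is only a sketch with a hypothetical synonym table; in Elasticsearch, this kind of equivalence is commonly handled at the analysis level (for example with a synonym token filter), and the post does not say which mechanism Orca uses.

```python
from __future__ import annotations

# Hypothetical synonym table: canonical subject -> aliases users might type.
SUBJECT_SYNONYMS = {
    "VM": ["vm", "vms", "virtual machine", "virtual machines",
           "virtual instance", "virtual instances"],
}


def build_lookup(synonyms: dict[str, list[str]]) -> dict[str, str]:
    """Invert the table so each alias points at its canonical subject."""
    return {
        alias.lower(): canonical
        for canonical, aliases in synonyms.items()
        for alias in aliases
    }


def canonical_subject(term: str, lookup: dict[str, str]) -> str | None:
    """Normalize case and whitespace, then resolve the alias if known."""
    normalized = " ".join(term.lower().split())
    return lookup.get(normalized)


lookup = build_lookup(SUBJECT_SYNONYMS)
subject = canonical_subject("Virtual  Machine", lookup)
```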

Step 4: Enhancing relevance with keywords

To improve the relevance of search results, the LLM generates keywords from the user's query. These keywords boost the search attributes' relevance, ensuring the system retrieves the most pertinent data. The LLM also converts the query into Orca Security's domain-specific language, making it executable on the front end.
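A possible shape for this keyword boosting is a `bool` query in which each LLM-generated keyword becomes a boosted `should` clause, so attributes matching more keywords rank higher. The field names, the boost value, and the helper function are hypothetical; the post does not show Orca's actual query.

```python
from __future__ import annotations

from typing import Any


def build_keyword_boosted_query(
    subject: str, keywords: list[str], boost: float = 2.0
) -> dict[str, Any]:
    """Build a bool query that ranks attributes by boosted keyword matches."""
    return {
        "query": {
            "bool": {
                # Stay within the subject chosen earlier (hypothetical field).
                "filter": [{"term": {"subject": subject}}],
                # Each keyword that matches adds to the relevance score.
                "should": [
                    {"match": {"name": {"query": kw, "boost": boost}}}
                    for kw in keywords
                ],
                # Require at least one keyword match when keywords exist.
                "minimum_should_match": 1 if keywords else 0,
            }
        }
    }


body = build_keyword_boosted_query("VM", ["pii", "health"])
```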

What’s next for Orca and AI

Orca Security's vision goes beyond improving search capabilities: the goal is to keep making advanced data analysis accessible to everyone, regardless of their technical expertise. By leveraging Elasticsearch and AI, Orca Security aims to transform how users interact with and interpret data. Through this integration, Orca Security has not only enhanced its service offering but also set a new standard for AI-driven search in the cybersecurity industry. The future looks promising as Orca Security continues to push the boundaries of what's possible with AI and Elasticsearch.

Learn more about Elastic and Orca Security:

- Check out Orca Security's website to learn more about its offering.

- Read about what we’re working on now at Elastic Search Labs.

- See more use cases of innovative AI search experiences with Elastic.

The release and timing of any features or functionality described in this post remain at Elastic's sole discretion. Any features or functionality not currently available may not be delivered on time or at all.

In this blog post, we may have used or referred to third party generative AI tools, which are owned and operated by their respective owners. Elastic does not have any control over the third party tools and we have no responsibility or liability for their content, operation or use, nor for any loss or damage that may arise from your use of such tools. Please exercise caution when using AI tools with personal, sensitive or confidential information. Any data you submit may be used for AI training or other purposes. There is no guarantee that information you provide will be kept secure or confidential. You should familiarize yourself with the privacy practices and terms of use of any generative AI tools prior to use.

Elastic, Elasticsearch, ESRE, Elasticsearch Relevance Engine and associated marks are trademarks, logos or registered trademarks of Elasticsearch N.V. in the United States and other countries. All other company and product names are trademarks, logos or registered trademarks of their respective owners.