Elasticsearch: The context engine for grounding and orchestration in Microsoft Azure AI Foundry Agent Service


The need for enterprise grounding in the agentic era

The rise of large language models (LLMs) and agentic applications promises to transform enterprise workflows. Yet, the core challenge remains: How do we ensure these powerful agents generate accurate, relevant, and trustworthy responses based on proprietary enterprise data rather than relying solely on their generic training knowledge? The answer lies in grounding — connecting the LLM to verified, trusted, and up-to-date information.

For software developers and AI search architects building next-generation applications, integrating the vast, context-rich data residing in Elasticsearch with the cutting-edge Microsoft Azure AI Foundry Agent Service is key to unlocking this promise. This native integration ensures that your AI agents are always grounded in your enterprise truth, delivering verifiable and relevant outcomes without using your data to train public LLMs.

Microsoft AI Foundry: The home for enterprise agentic AI

Microsoft Azure AI Foundry Agent Service, built on the next-generation Microsoft Agent Framework that integrates Semantic Kernel and AutoGen concepts, is the unified, enterprise-grade platform for building, running, and governing sophisticated multi-agent systems.

To operate effectively, agents in the AI Foundry Agent Service use a powerful set of building blocks:

Knowledge bases: Collections of trusted, proprietary data, such as documents, emails, and meeting notes, that help agents provide smarter, more accurate answers. Data stored in and retrieved from Elasticsearch is ideal for this purpose. Together with an associated embedding model and chat completion interface, it forms the basis for grounding.

Tools: Functions or services, sourced from public and private servers (including servers exposed over the Model Context Protocol, or MCP), that the agent can call. Tools enable agents to perform actions.

Agent-to-Agent (A2A) protocol: A cornerstone of the Microsoft Agent Framework, A2A allows agents to collaborate across different runtimes and frameworks using structured, protocol-driven messaging, facilitating complex, multistep tasks.

This dual-protocol architecture is where the Elasticsearch Platform shines, providing a comprehensive integration story through both the MCP and A2A protocols.

Elastic's dual role: Tool integration via MCP and agent collaboration via A2A

Elasticsearch's integration with the Microsoft ecosystem offers a flexible strategy: It can serve as a potent retrieval tool/knowledge base for any Microsoft Agent, and an Elastic Agent (built with Elastic Agent Builder) can become a collaborative peer in a multi-agent workflow via A2A.

1. Tool integration and grounding via MCP

For grounding agents in enterprise data, Elasticsearch is natively supported as a source via MCP.

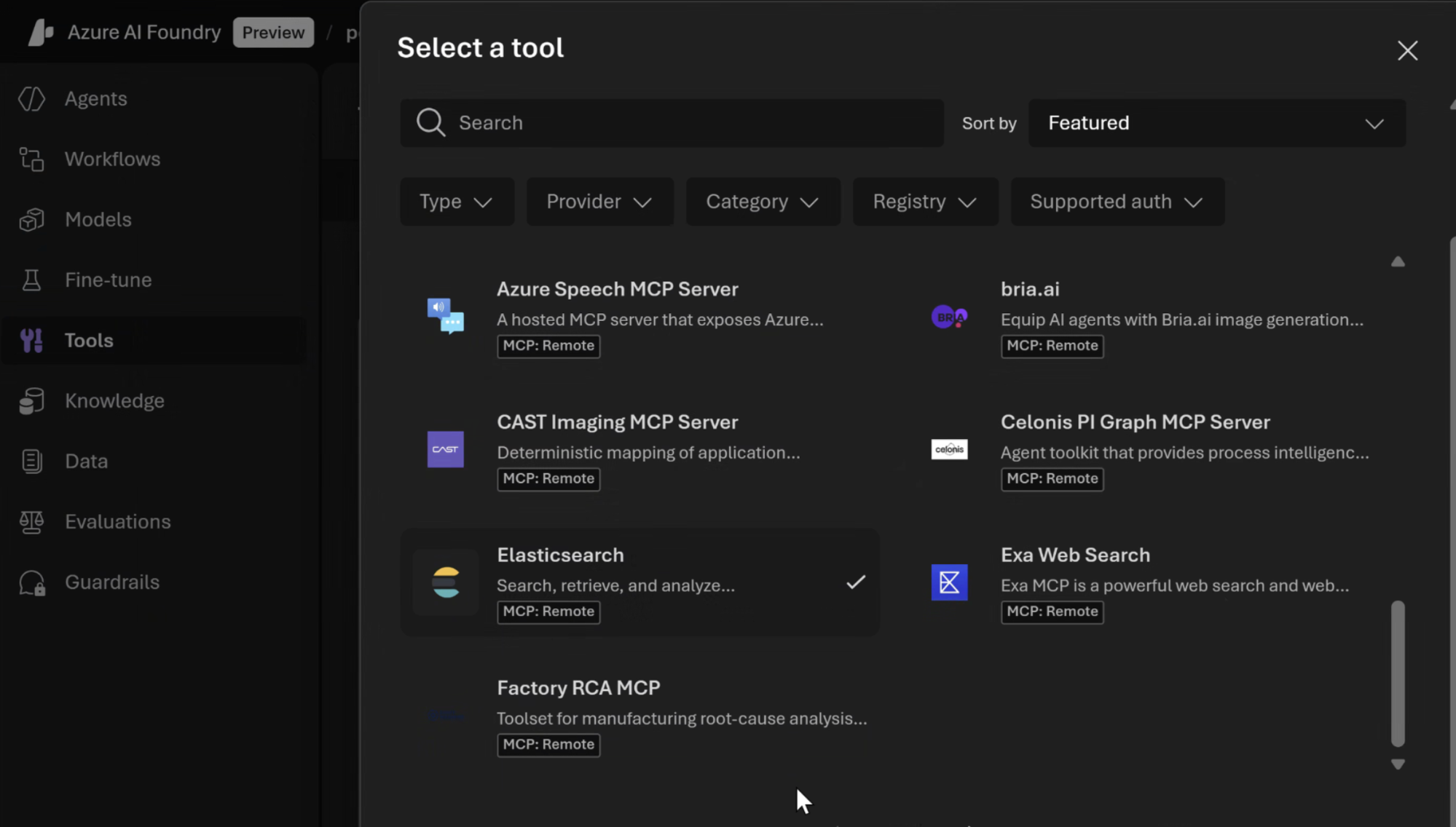

- Discoverable tool: Elasticsearch is registered as a remote MCP server and listed in the AI Foundry tool catalog, which is sourced from https://mcp.azure.com.

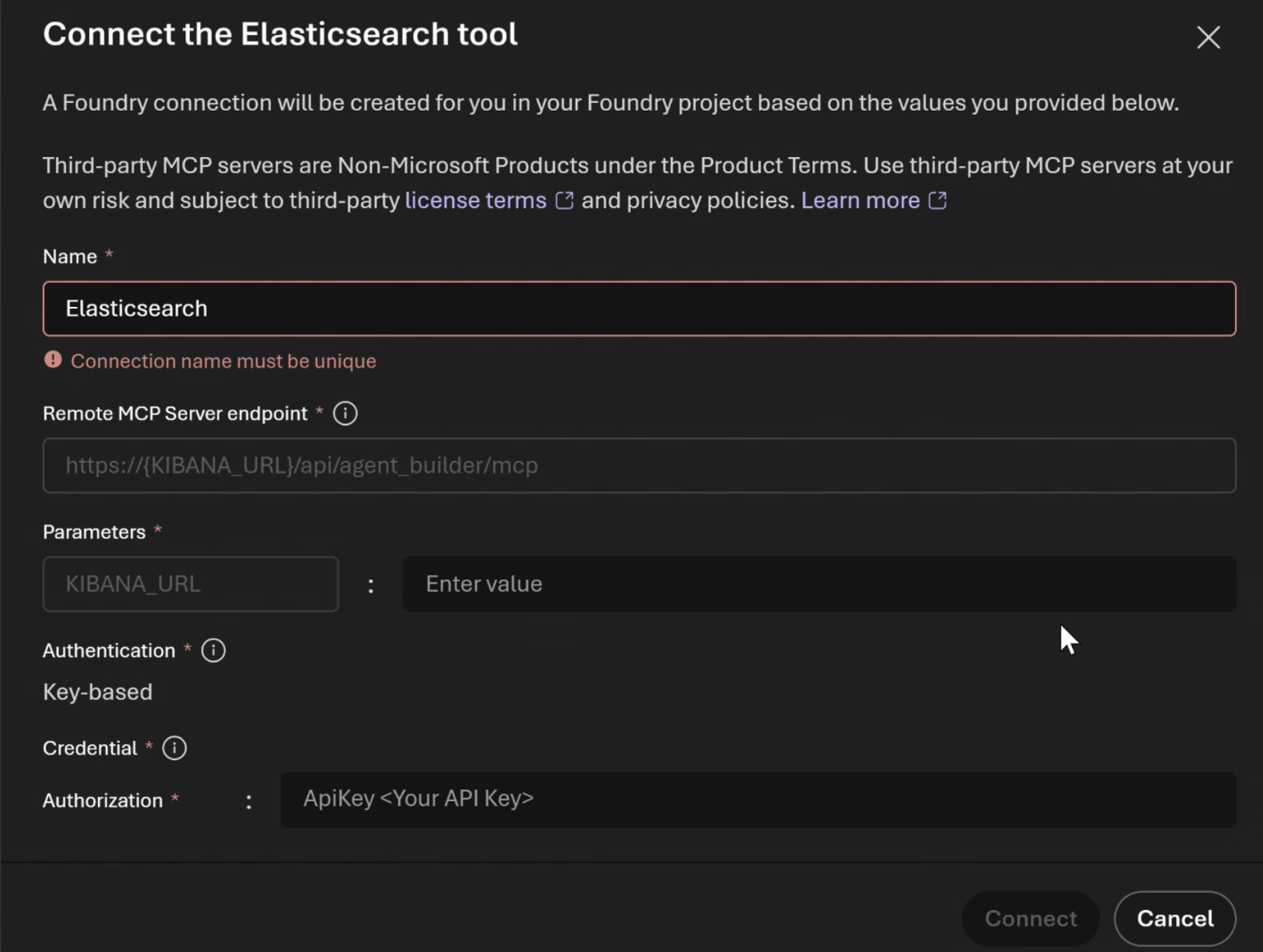

- Simplified connection: You create a dedicated Foundry Connection in your AI Foundry project. It points to Elastic Agent Builder's remote MCP endpoint, https://{KIBANA_URL}/api/agent_builder/mcp, and is secured with an associated API key.

- Customizable retrieval augmented generation (RAG): This connection allows the AI Foundry Agent Service to execute unified RAG calls. Crucially, it uses Elasticsearch Search Templates to push down sophisticated retrieval logic (e.g., hybrid search with the Elasticsearch Relevance Engine, or ESRE) to your Elastic cluster, giving you full control over the context provided for grounding.
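Foundry speaks the protocol for you once the connection exists, but it can help to see what actually travels over it. The sketch below builds, without sending, the JSON-RPC 2.0 requests an MCP client would POST to the Agent Builder endpoint, using only the Python standard library. It assumes the `ApiKey` authorization scheme used by Elastic API keys and omits the `initialize` handshake that a real MCP client performs first. The Kibana host, API key, and tool name are hypothetical placeholders.

```python
import json
import urllib.request
from typing import Optional

# Hypothetical placeholders: substitute your own Kibana host and API key.
KIBANA_URL = "my-deployment.kb.example.com"
API_KEY = "<encoded-api-key>"


def build_mcp_request(kibana_url: str, api_key: str, method: str,
                      params: Optional[dict] = None,
                      request_id: int = 1) -> urllib.request.Request:
    """Build (but do not send) a JSON-RPC 2.0 request for the Agent Builder MCP endpoint."""
    payload = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        payload["params"] = params
    return urllib.request.Request(
        url=f"https://{kibana_url}/api/agent_builder/mcp",
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={
            "Content-Type": "application/json",
            # MCP's Streamable HTTP transport expects clients to accept JSON and SSE.
            "Accept": "application/json, text/event-stream",
            # Assumption: the Elastic API key is sent with the "ApiKey" scheme.
            "Authorization": f"ApiKey {api_key}",
        },
    )


if __name__ == "__main__":
    # Discover the tools the Elastic MCP server exposes.
    list_tools = build_mcp_request(KIBANA_URL, API_KEY, "tools/list")
    # Invoke one of them; "example_search_tool" is a hypothetical tool name.
    call_tool = build_mcp_request(
        KIBANA_URL, API_KEY, "tools/call",
        params={"name": "example_search_tool",
                "arguments": {"query": "latest travel policy"}},
        request_id=2,
    )
    print(list_tools.full_url, list_tools.data.decode("utf-8"))
    print(call_tool.data.decode("utf-8"))
    # Sending is left to you (e.g. urllib.request.urlopen), after the MCP
    # initialize handshake; in practice an MCP SDK or Foundry handles this.
```

`tools/list` and `tools/call` are the MCP methods for discovering and invoking tools; the Foundry Connection ultimately issues calls of this shape on the agent's behalf.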
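To illustrate pushing retrieval logic down into the cluster, here is a minimal sketch of a mustache search template that combines a lexical `match` with a `semantic` query, plus the parameters-only request that invokes it. The index field names (`body`, `body_semantic`, the latter assumed to be a `semantic_text` field) and the template id are hypothetical, and this is one simple hybrid pattern rather than the exact template the integration uses.

```python
import json

# Hypothetical names (assumptions): replace with your own fields and template id.
TEMPLATE_ID = "grounding-hybrid"
TEXT_FIELD = "body"               # a regular text field
SEMANTIC_FIELD = "body_semantic"  # assumed to be a semantic_text field


def hybrid_template_body(text_field: str, semantic_field: str) -> dict:
    """Body for `PUT _scripts/<template id>`: a mustache search template that
    runs a lexical match and a semantic query on the same user text."""
    return {
        "script": {
            "lang": "mustache",
            "source": {
                "size": "{{size}}",
                "query": {
                    "bool": {
                        "should": [
                            # Lexical (BM25) relevance on the raw text.
                            {"match": {text_field: "{{query_string}}"}},
                            # Vector relevance via the semantic_text field.
                            {"semantic": {"field": semantic_field,
                                          "query": "{{query_string}}"}},
                        ]
                    }
                },
            },
        }
    }


def search_template_request(template_id: str, query: str, size: int = 5) -> dict:
    """Body for `POST <index>/_search/template`: the caller only supplies parameters."""
    return {"id": template_id, "params": {"query_string": query, "size": size}}


if __name__ == "__main__":
    print(json.dumps(hybrid_template_body(TEXT_FIELD, SEMANTIC_FIELD), indent=2))
    print(json.dumps(search_template_request(TEMPLATE_ID, "travel reimbursement policy"), indent=2))
```

Because the template lives in the cluster, you can tune retrieval without changing the agent: the caller only ever sends `query_string` and `size`. Note that a `bool` `should` adds BM25 and semantic scores, which sit on different scales; a reciprocal rank fusion (`rrf`) retriever is a common alternative when rank-based blending is preferred. With the official Python client, these bodies map to `put_script` and `search_template`.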

2. Agent-to-Agent (A2A) collaboration

Elastic Agent Builder supports the A2A protocol, which enables a powerful collaboration model between the platforms.

The A2A protocol allows an Elastic Agent, which is already grounded in your private Elasticsearch data and can execute specific domain-centric tasks, to communicate and collaborate directly with a Microsoft Agent running in AI Foundry. This enables sophisticated workflows. For example, an Elastic Agent retrieves and summarizes data from financial indices and passes the structured context to a Microsoft Agent via A2A; the Microsoft Agent then performs a cross-system analysis or carries on a conversational interaction with the user.
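To make the financial-summary hand-off concrete, here is a minimal sketch of an A2A JSON-RPC `message/send` payload that carries the Elastic Agent's summary as a text part and its structured context as a data part. It assumes a recent A2A specification revision in which message parts are tagged with `kind`. The field values are hypothetical placeholders, and the receiving agent's endpoint URL, which A2A agents advertise in their Agent Card, is intentionally not shown.

```python
import json
import uuid
from typing import Any, Optional


def build_a2a_message_send(summary: str, context: dict[str, Any],
                           request_id: int = 1,
                           message_id: Optional[str] = None) -> dict[str, Any]:
    """Build an A2A JSON-RPC 2.0 'message/send' payload with a text part
    (human-readable summary) and a data part (structured context)."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "message/send",
        "params": {
            "message": {
                "kind": "message",
                # The sending agent acts as the A2A client, so its role is "user".
                "role": "user",
                # Each message needs a unique id; generate one if none is given.
                "messageId": message_id or str(uuid.uuid4()),
                "parts": [
                    {"kind": "text", "text": summary},
                    {"kind": "data", "data": context},
                ],
            }
        },
    }


if __name__ == "__main__":
    # Hypothetical values standing in for an Elastic Agent's output.
    payload = build_a2a_message_send(
        "<summary produced by the Elastic Agent>",
        {"source_indices": ["finance-*"], "period": "<reporting period>"},
        message_id="msg-001",
    )
    # POST this JSON to the receiving agent's A2A endpoint from its Agent Card.
    print(json.dumps(payload, indent=2))
```

Sending the context as a separate data part, rather than embedding it in prose, lets the receiving agent consume the fields directly instead of re-parsing text.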

This capability moves beyond simple data retrieval and allows for true orchestration where expert agents from different domains or data sources coordinate at the protocol level, regardless of their underlying framework.

Trust, accuracy, and speed for the enterprise

By using the native integration points of MCP for grounding and A2A for collaboration, Elasticsearch and Microsoft Azure AI Foundry Agent Service deliver a unified, enterprise-grade solution:

Trust and verifiability: Agent responses are grounded in your private, trusted data, which substantially reduces the risk of LLM hallucinations.

Comprehensive agent strategy: You can implement both simple RAG tasks via MCP and complex, cooperative workflows via A2A, maximizing the utility of both platforms.

Development velocity: The use of open standards like MCP and A2A streamlines agent development and deployment, allowing you to move faster from experimentation to scale.

Start building intelligent agents today that access the organizational context they need directly from Elasticsearch, and collaborate seamlessly with other agents in the Microsoft Azure AI Foundry ecosystem to serve your enterprise with unparalleled accuracy and confidence.

Can’t wait? Begin a 7-day free trial of Elasticsearch, Elastic Observability, or Elastic Security on Microsoft Azure now!

The release and timing of any features or functionality described in this post remain at Elastic's sole discretion. Any features or functionality not currently available may not be delivered on time or at all.

In this blog post, we may have used or referred to third party generative AI tools, which are owned and operated by their respective owners. Elastic does not have any control over the third party tools and we have no responsibility or liability for their content, operation or use, nor for any loss or damage that may arise from your use of such tools. Please exercise caution when using AI tools with personal, sensitive or confidential information. Any data you submit may be used for AI training or other purposes. There is no guarantee that information you provide will be kept secure or confidential. You should familiarize yourself with the privacy practices and terms of use of any generative AI tools prior to use.

Elastic, Elasticsearch, and associated marks are trademarks, logos or registered trademarks of Elasticsearch B.V. in the United States and other countries. All other company and product names are trademarks, logos or registered trademarks of their respective owners.