A comprehensive guide for workload migration to the cloud with the Elasticsearch Platform, ElastiFlow, and Kyndryl

The transition from on-premises infrastructure to cloud environments is no longer just an option, but a necessity for businesses seeking to stay competitive in a rapidly evolving digital world. A well-executed cloud migration strategy can deliver compelling benefits like enhanced flexibility, scalability, efficiency, and cost-effectiveness. However, the journey to the cloud is often complex and presents several challenges, making it crucial for organizations to understand the entire migration process and prepare adequately.

Cloud migration typically follows a distinct set of phases, each with its unique objectives and potential hurdles. These include:

1. Assess:

- Planning: This initial phase involves a comprehensive evaluation of the existing IT infrastructure, applications, and data to identify which elements are suitable for cloud migration and in what order. The planning stage also involves selecting the appropriate cloud service model (IaaS, PaaS, or SaaS) and provider.

- Business case development and cost calculation: This stage assesses the economic feasibility of the migration project. It involves calculating the total cost of ownership (TCO) for both the current on-premises setup and the proposed cloud environment. This analysis helps justify the migration project to stakeholders and plan for the financial commitment involved.

2. Mobilize: The Assess phase includes a thorough analysis of the applications, workloads, and data to be migrated, identifying any potential compatibility issues or integration difficulties. Building on that analysis, the Mobilize phase prepares the organization and the IT environment for the upcoming migration.

3. Migrate and Modernize: This is the execution phase, where the actual migration takes place. Applications and data are moved to the cloud, often in a phased approach to minimize disruption. Post-migration, applications are often modernized to leverage the full potential of the cloud environment.

4. Steady State: After successful migration and modernization, continuous optimization is necessary to ensure that the cloud environment is delivering the expected benefits and cost savings. This might involve performance tuning, cost optimization, or adopting new cloud-native approaches.

As straightforward as these phases might seem, cloud migration is often accompanied by an array of challenges at every stage, and even post-migration. Common challenges include compatibility and integration issues, data security and privacy concerns, potential downtime and service disruptions, cost overruns, technical expertise gaps, vendor lock-in risks, compliance and legal requirements, organizational change management, performance and latency issues, and data migration complexities.

How Elastic with ElastiFlow and Kyndryl can help

The Elasticsearch platform, coupled with the network flow data analytics power of ElastiFlow and the expert services from Kyndryl, offers a holistic solution to navigate the complexities of cloud migration efficiently.

The Elastic advantage

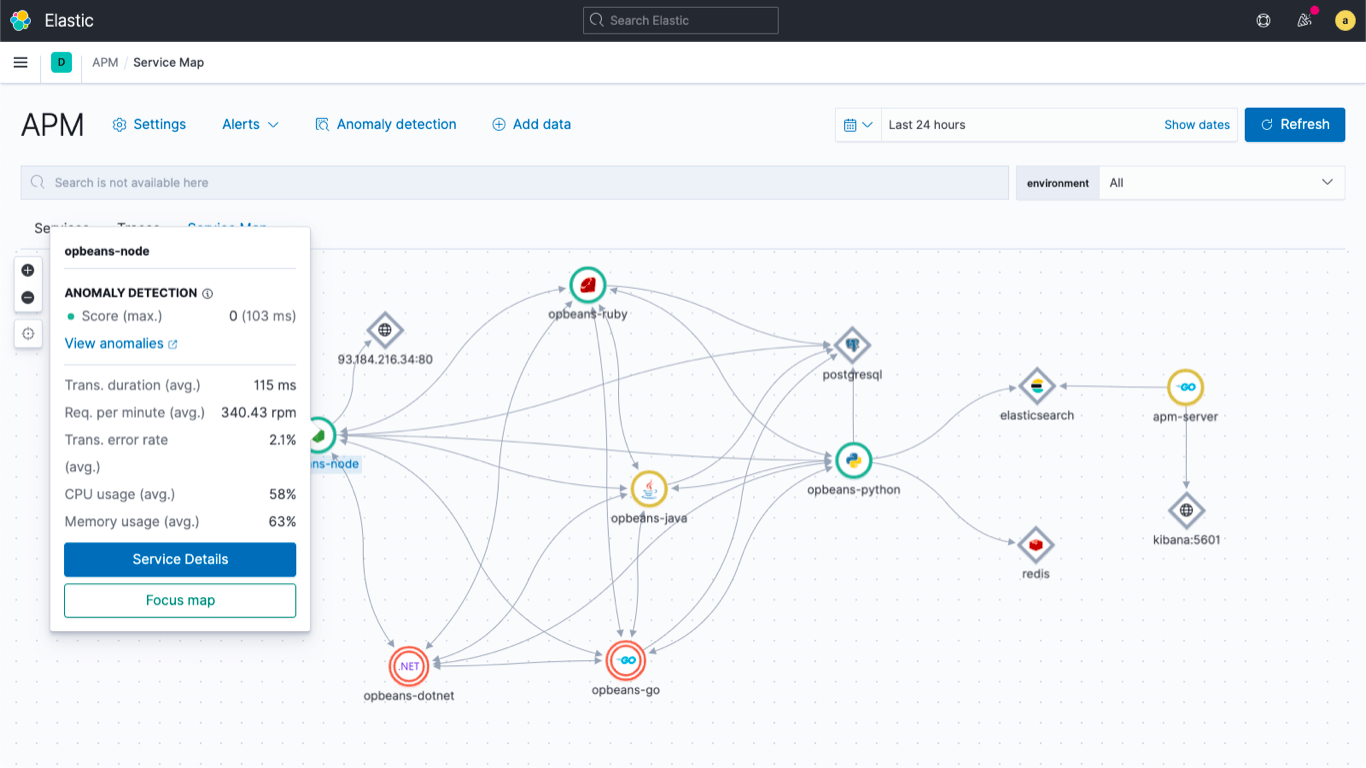

The Elasticsearch platform provides real-time insights and analytics capabilities, supporting informed decision-making at every phase of the migration process. During the planning and business case development stages, Elastic's Observability features offer valuable insights into the current IT infrastructure and application performance. These insights help identify which applications and data to migrate and support cost estimation.

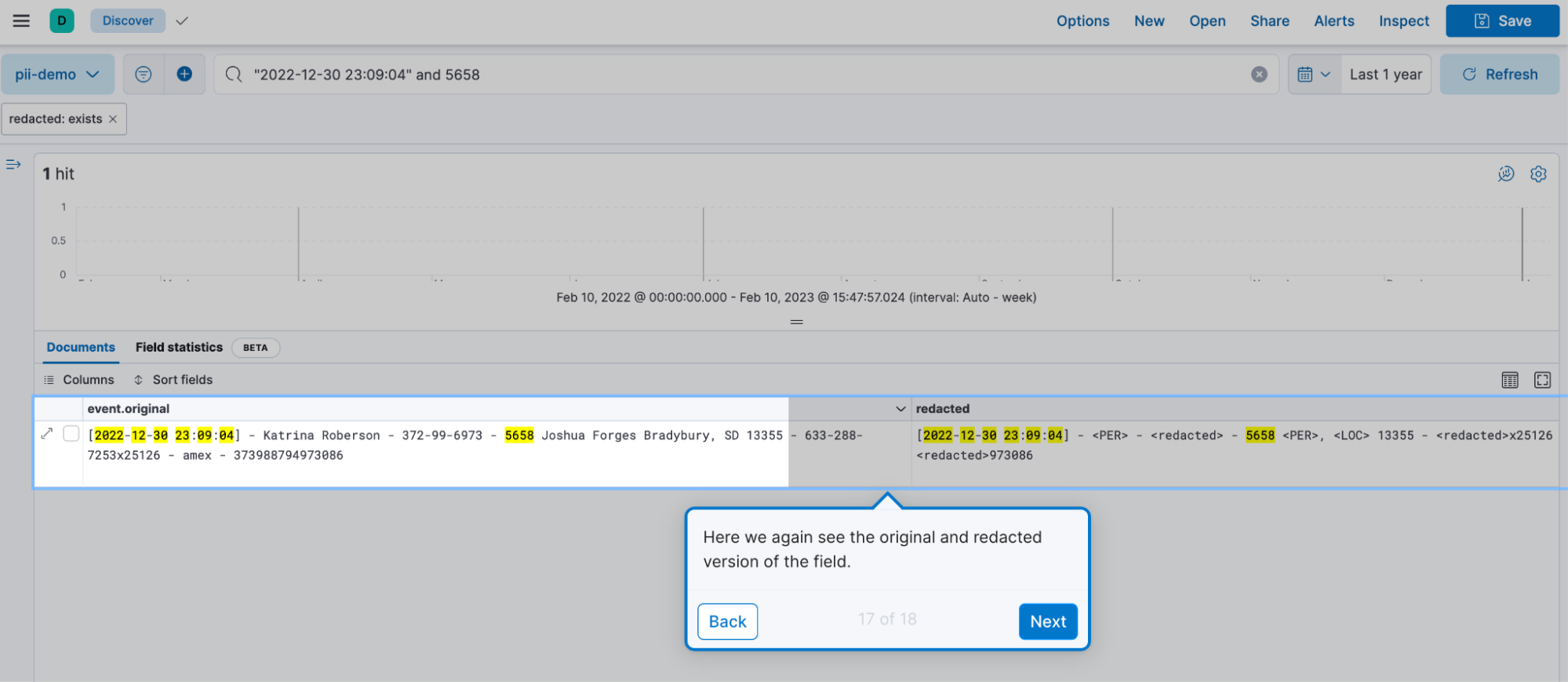

During the Assess and Mobilize phases, Elastic's search and analytics capabilities, including AI and natural language processing (NLP), can help identify potential compatibility issues and plan for integration with cloud services. In the Migrate and Modernize phase, real-time monitoring from Elastic helps minimize disruption and supports modernization efforts. In the Steady State phase, Elastic's continuous monitoring and analysis features support optimization efforts, ensuring that the cloud environment delivers on its promise.

The ElastiFlow edge

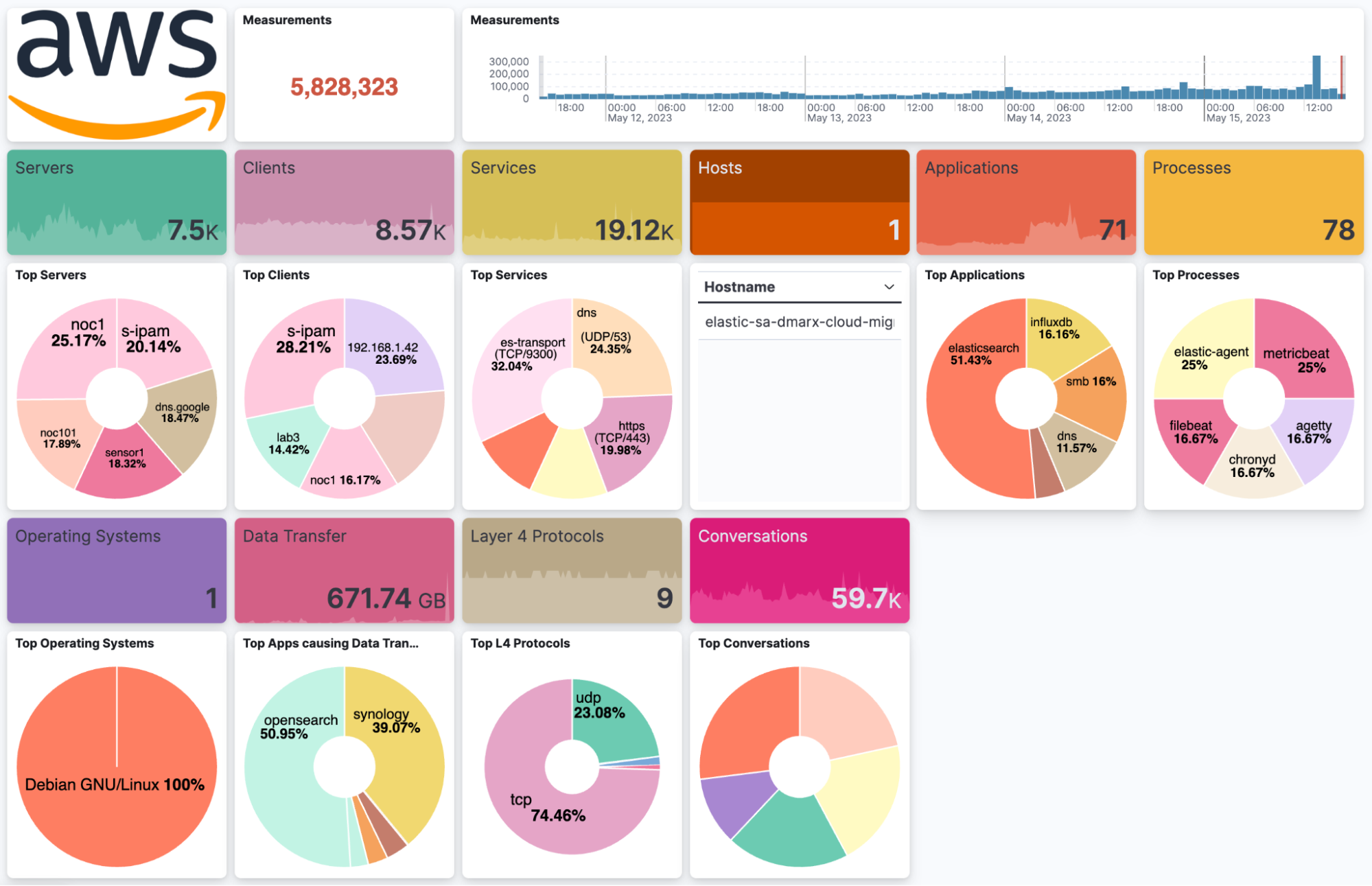

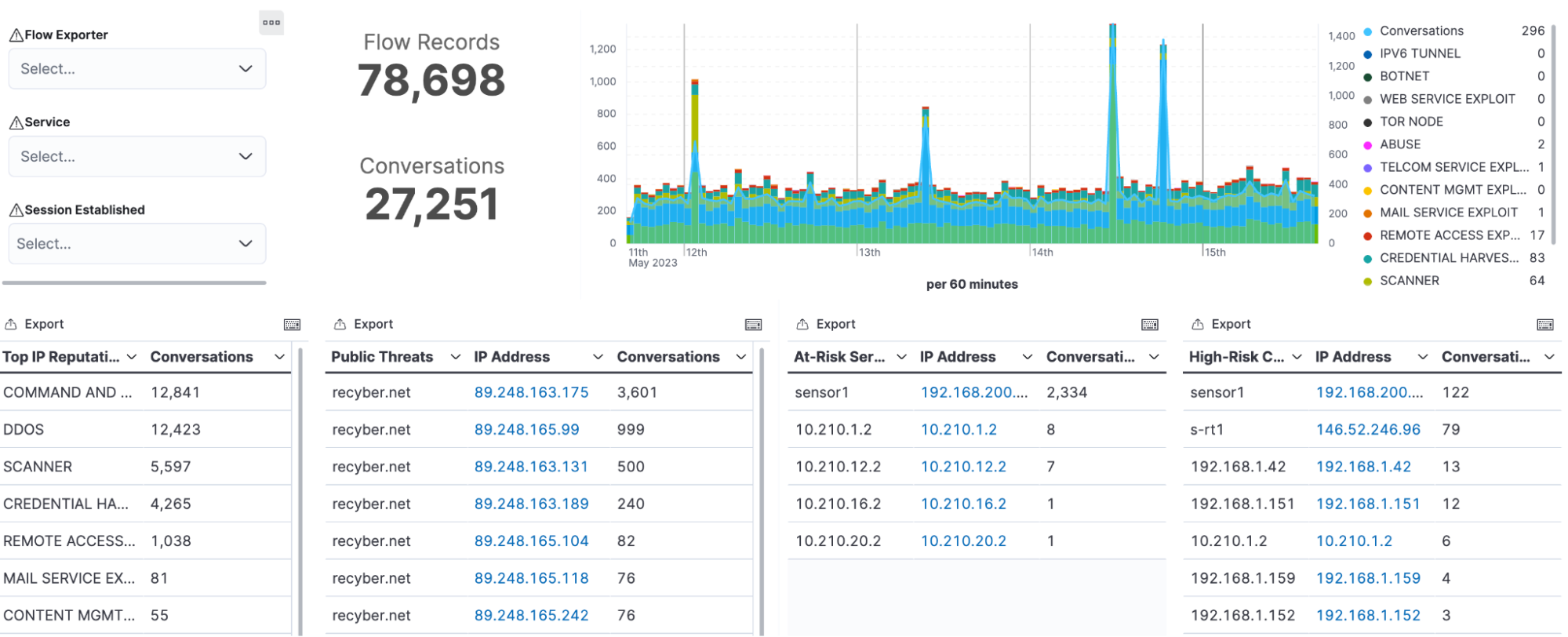

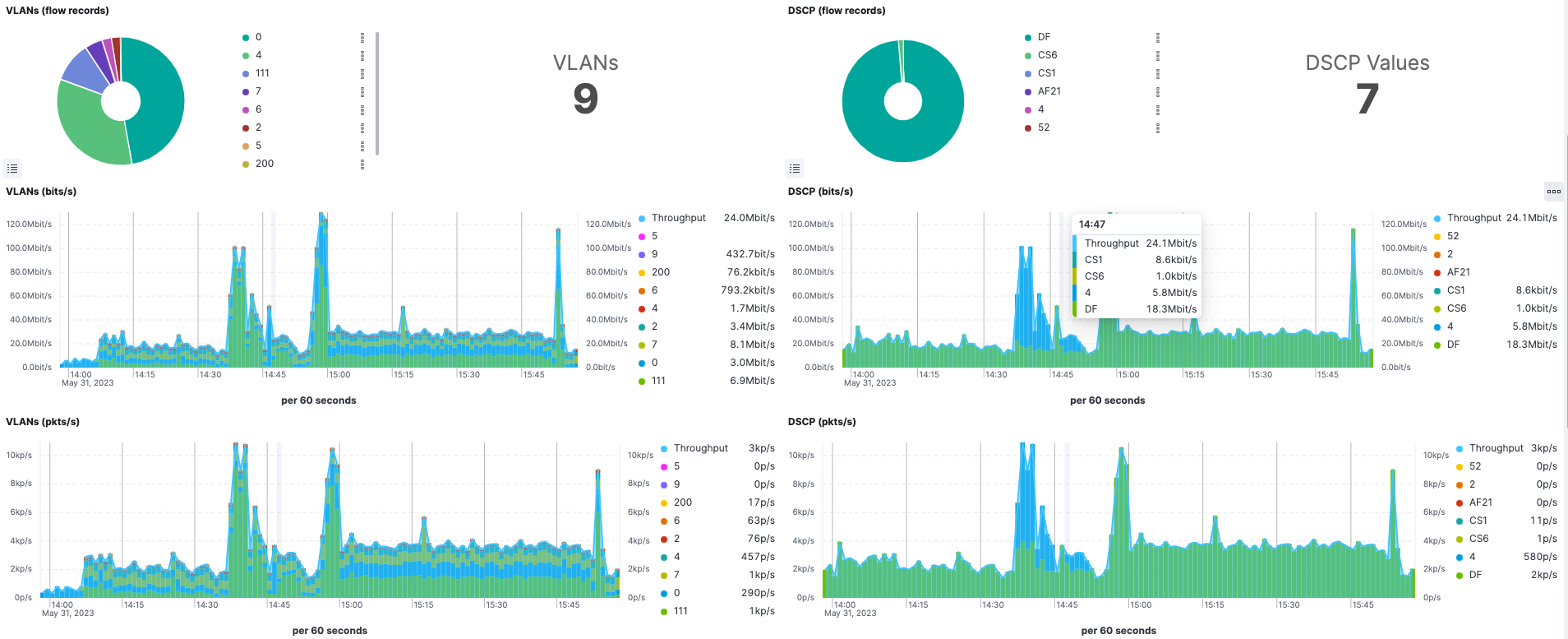

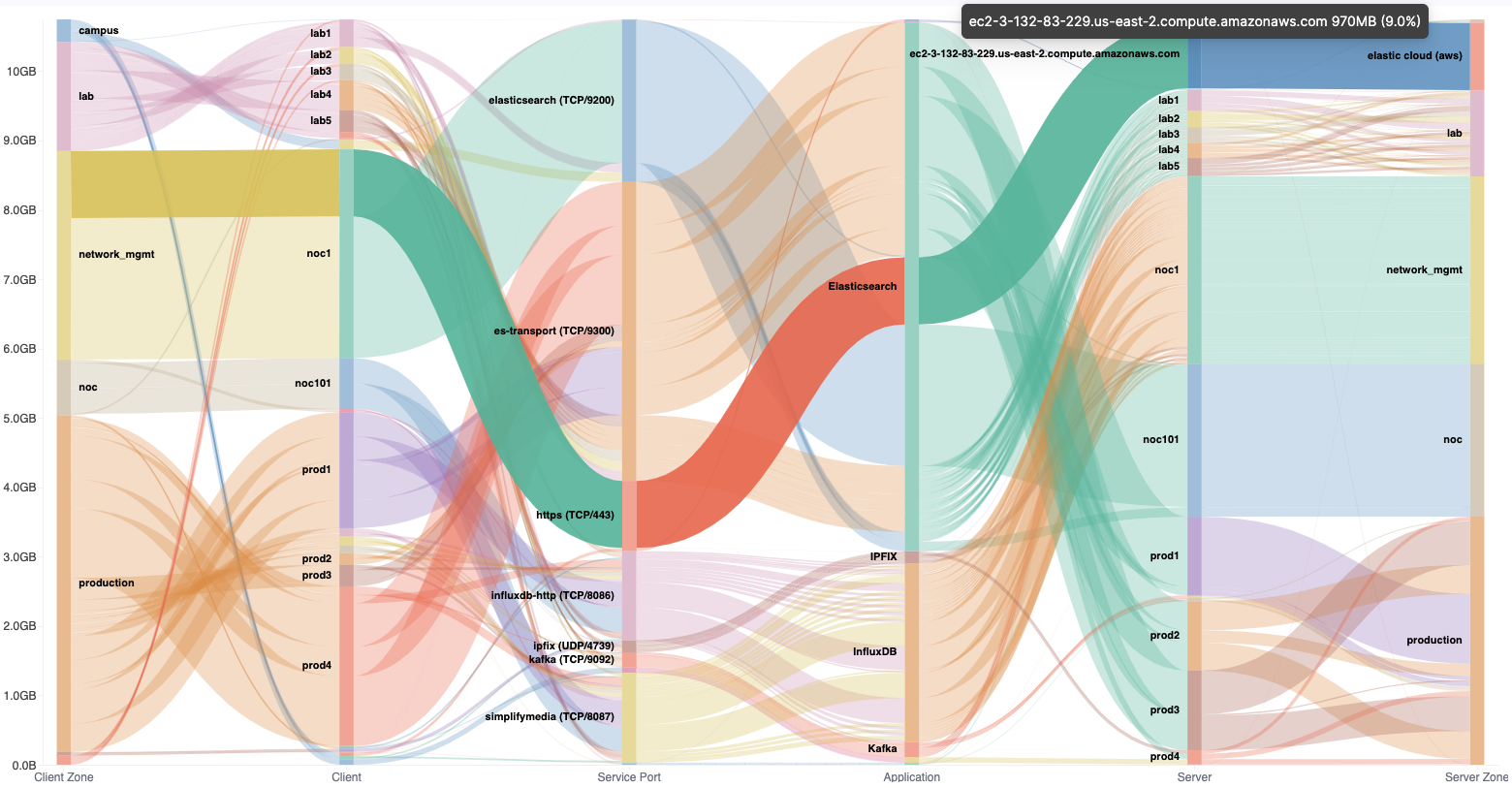

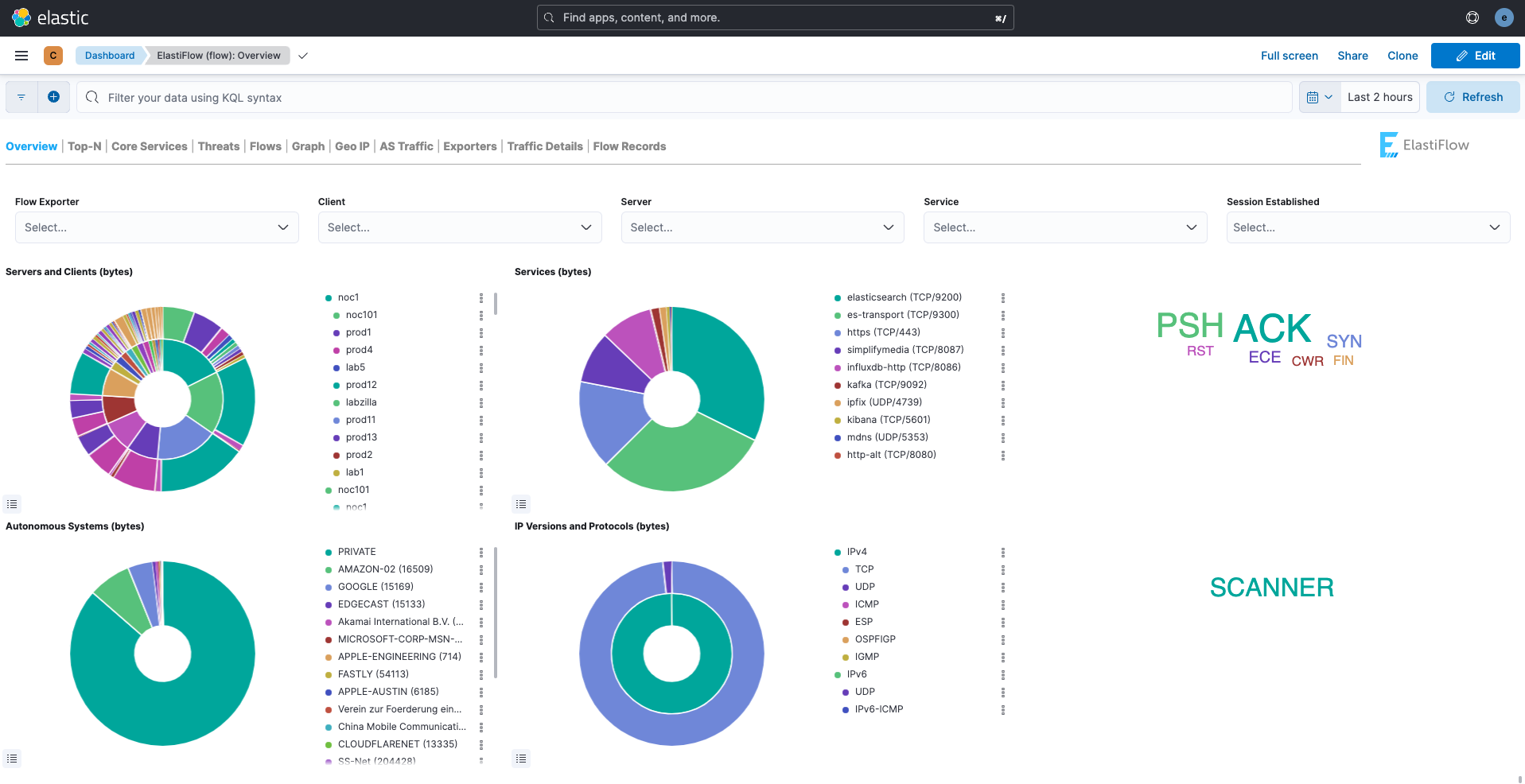

While Elastic provides a robust platform for search, security, and analytics, ElastiFlow extends it with granular visibility into network traffic. This data can be instrumental in understanding traffic patterns, dependencies, and protocols during the assessment phase, identifying potential issues during the migration, and optimizing network performance after it.

The Kyndryl partnership

Kyndryl’s expert services can complement the technical capabilities of Elastic and ElastiFlow. From providing specialized expertise in cloud migration to assisting with potential challenges, Kyndryl can ensure a smooth and efficient migration process. It can even help secure assessment and/or migration funds from hyperscalers, further aiding in cost management efforts.

Together, Elastic, ElastiFlow, and Kyndryl can provide a comprehensive solution for cloud migration, addressing the various challenges that might arise during each phase of the process. With these tools and services at their disposal, organizations can confidently navigate their journey to the cloud, ensuring a successful migration and exploiting the full benefits of their new cloud environment.

Migration phases detail

Assess Phase

Planning

The planning phase is essential to establish the objectives and framework for the migration. Organizations face several challenges during this phase:

- Understanding the existing environment: Organizations often lack visibility into their current IT infrastructure, making it challenging to create a comprehensive migration plan.

Solution: The Elastic platform offers valuable insights into your current IT environment. ElastiFlow can provide a granular view of network traffic, helping in understanding network dependencies.

- Choosing the right migration strategy and cloud provider: Organizations must decide on a suitable migration strategy and choose a cloud provider that aligns with their business needs.

Solution: Kyndryl can provide expert guidance on selecting the right cloud provider and migration strategy. Insights from the Elastic platform and ElastiFlow can aid in this decision-making process.

Business case and cost calculation

In this phase, organizations struggle with:

- Cost estimation: It is difficult to predict the costs associated with cloud migration and the potential savings post-migration.

Solution: The Elastic platform can facilitate the business case development by providing detailed insights into your current IT infrastructure’s cost and performance. ElastiFlow can help identify potential cost savings from network optimization.

Mobilize Phase

Key challenges in this phase include:

- Compatibility and integration: Organizations may face compatibility issues between their existing on-premises applications and the cloud environment.

Solution: The Elastic platform can help analyze your applications, data, and workloads for potential compatibility issues. ElastiFlow can provide detailed visibility into network dependencies, aiding in the assessment of integration challenges.

- Data security and privacy: Ensuring the security and privacy of data during and after migration is a critical concern.

Solution: Implement strong security measures such as encryption, access control, and multi-factor authentication. Use insights from Elastic and ElastiFlow to monitor network traffic for unusual patterns that may indicate security threats.

- Technical expertise: Cloud migration requires specialized skills and expertise that may not be readily available within an organization.

Solution: Kyndryl’s team can support the mobilization phase, providing expert guidance and coordination.

Migrate and Modernize Phase

Key challenges during this phase include:

- Downtime and disruption: Minimizing downtime and service disruption during the migration process is crucial to maintaining business continuity.

Solution: The Elastic platform is a game changer during the migration phase, providing real-time monitoring of your applications and workloads as they move to the cloud. With data from the ElastiFlow collectors, you can verify that your network is performing optimally throughout the migration, reducing the risk of downtime.

- Compliance and legal requirements: Organizations must ensure that their cloud-based IT services and applications adhere to relevant compliance and legal requirements.

Solution: The Elastic platform’s AI and NLP capabilities can help identify patterns and anomalies related to compliance and regulatory requirements.

- Change management: Managing organizational and cultural changes effectively is crucial to ensure a smooth transition and minimize resistance from employees.

Solution: Develop a well-defined change management plan, with Kyndryl providing resources for communication, training, and employee support.

Steady State Phase

The last phase brings its own set of challenges:

- Performance and latency: Network latency and performance issues can arise after migration. Ensuring optimal performance and user experience in the cloud requires careful planning and optimization.

Solution: Use insights from the Elastic platform and ElastiFlow to monitor network performance, identify bottlenecks, and optimize traffic flows to minimize latency and maintain a high-quality user experience.

- Cost management: Migrating to the cloud can involve unforeseen costs. Organizations must accurately estimate and manage these costs to ensure a cost-effective migration.

Solution: Develop a comprehensive cost model for cloud migration, including data transfer, storage, and licensing fees. Use ElastiFlow to monitor network usage and identify cost-saving opportunities.

- Continuous monitoring and observability: Post-migration, it’s essential to continuously monitor your applications, workloads, and network performance to maintain operational efficiency, security, and compliance. It’s here that the need for a comprehensive observability solution becomes apparent.

Solution: The Elasticsearch platform, along with ElastiFlow, provides a complete observability solution, offering real-time insights into your cloud environment’s performance. By monitoring logs, metrics, traces, and cloud workloads across your stack, you can quickly identify and respond to performance issues, security threats, and other anomalies. Additionally, Kyndryl’s expertise can augment your in-house capabilities, helping you leverage Elastic’s Observability features to their full extent.

In conclusion, the combination of the Elasticsearch platform, ElastiFlow, and Kyndryl helps overcome these migration challenges. Together, they provide a comprehensive, effective, and efficient cloud migration solution that can guide organizations through each phase of the process.

Interlocking with the AWS Migration Portfolio Assessment (MPA) portal

One of the keys to success in any cloud migration is a TCO analysis that highlights the financial impact and benefits of the project. The quality of this analysis depends on thorough discovery, assessment, and analysis. AWS provides a comprehensive tool for TCO analysis, MPA (Migration Portfolio Assessment), which calculates TCO from captured metrics data.

You have already seen the value that Elastic and ElastiFlow can bring using NetFlow data. If you further enhance this by deploying the Elastic Agent and gathering metrics from your hosts, all the required data to generate an accurate, comprehensive TCO report can be captured.

The steps to visualize and export this data are covered later in this blog.

Getting started

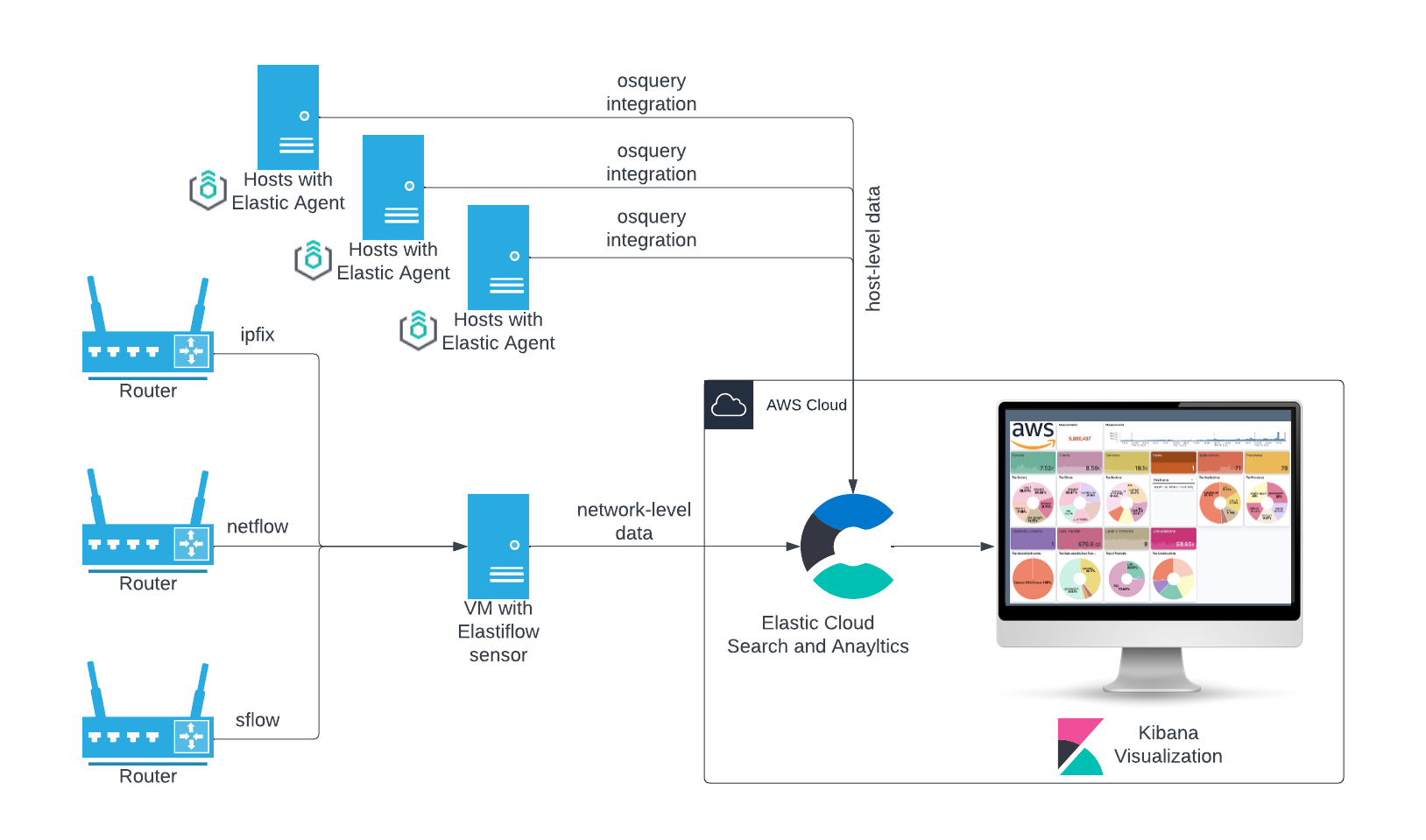

Architecture

In this section, you will learn how to install and configure the ElastiFlow Unified Flow Collector, Elastic Cloud, and Elastic Agent. With this simple yet powerful architecture, you will be able to access real-time insights for the Assess, Mobilize, and Migrate and Modernize phases. This setup is easily extendable.

Deploying the ElastiFlow Unified Flow Collector

The ElastiFlow Unified Flow Collector can be installed natively on Linux. Docker containers are also available. The following example explains the installation process on Debian/Ubuntu Linux.

Downloading the .deb package

The package can be easily downloaded using wget or curl:

wget https://elastiflow-packages.s3.amazonaws.com/flow-collector/flow-collector_6.2.2_linux_amd64.deb

curl https://elastiflow-packages.s3.amazonaws.com/flow-collector/flow-collector_6.2.2_linux_amd64.deb --output flow-collector_6.2.2_linux_amd64.deb

Installing the package

The collector requires that libpcap-dev also be installed. This dependency will be installed automatically when using apt.

sudo apt install ./flow-collector_6.2.2_linux_amd64.deb

Configuration

The Unified Flow Collector will be installed to run as a daemon managed by systemd. Configuration of the collector is provided via environment variables and, depending on the enabled options, via various configuration files that by default are located within /etc/elastiflow.

To configure the environment variables, edit the file /etc/systemd/system/flowcoll.service.d/flowcoll.conf. For details on all of the configuration options, please refer to the Configuration Reference.
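The Environment= lines shown in the numbered steps below all end up in this one drop-in file. As a minimal sketch, assuming the default path above and placeholder values, the finished file could be written as follows. systemd only honors Environment= assignments that appear under a [Service] section header, so keep that line at the top. The function name write_flowcoll_dropin is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: write the flowcoll systemd drop-in with the settings from this guide.
# Values in CAPS are placeholders. Environment= lines must sit under [Service].
write_flowcoll_dropin() {
  # Target directory; defaults to the drop-in path used in this guide.
  local dir="${1:-/etc/systemd/system/flowcoll.service.d}"
  mkdir -p "$dir"
  cat > "$dir/flowcoll.conf" <<'EOF'
[Service]
Environment="EF_LICENSE_ACCEPTED=true"
Environment="EF_ACCOUNT_ID=FROM_THE_EMAIL"
Environment="EF_FLOW_LICENSE_KEY=FROM_THE_EMAIL"
Environment="EF_OUTPUT_ELASTICSEARCH_ENABLE=true"
Environment="EF_OUTPUT_ELASTICSEARCH_ECS_ENABLE=true"
Environment="EF_OUTPUT_ELASTICSEARCH_INDEX_TEMPLATE_SHARDS=1"
Environment="EF_OUTPUT_ELASTICSEARCH_INDEX_TEMPLATE_REPLICAS=0"
Environment="EF_OUTPUT_ELASTICSEARCH_INDEX_PERIOD=rollover"
Environment="EF_OUTPUT_ELASTICSEARCH_CLOUD_ID=YOUR_CLOUD_ID"
Environment="EF_OUTPUT_ELASTICSEARCH_USERNAME=elastic"
Environment="EF_OUTPUT_ELASTICSEARCH_PASSWORD=YOUR_PWD"
Environment="EF_OUTPUT_ELASTICSEARCH_TLS_ENABLE=true"
EOF
  # For a self-managed cluster with a private CA, also add
  # EF_OUTPUT_ELASTICSEARCH_TLS_CA_CERT_FILEPATH (see step 7).
}
```

Run it as root (for example, from a root shell) and follow it with systemctl daemon-reload so systemd picks up the change.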

1. Request a Basic or Trial license

The ElastiFlow Basic license is available at no cost and supports standard information elements. A license can be requested on the ElastiFlow website. Alternatively, a 30-day Premium trial may be requested, which increases the scalability of the collector and enables all supported vendor and standard information elements.

License keys are generated per account. EF_ACCOUNT_ID must contain the Account ID for the License Key specified in EF_FLOW_LICENSE_KEY. The number of licensed units will be 1 for a Basic license and up to 64 for a 30-day trial. The ElastiFlow EULA must also be accepted (EF_LICENSE_ACCEPTED) to use the software.

Environment="EF_LICENSE_ACCEPTED=true"

Environment="EF_ACCOUNT_ID=FROM_THE_EMAIL"

Environment="EF_FLOW_LICENSE_KEY=FROM_THE_EMAIL"

2. Enable the Elasticsearch output

Set EF_OUTPUT_ELASTICSEARCH_ENABLE to true to enable the Elasticsearch output.

Environment="EF_OUTPUT_ELASTICSEARCH_ENABLE=true"

3. Specify a schema

By default, the Unified Flow Collector outputs data using ElastiFlow's CODEX schema. To output data in Elastic Common Schema (ECS), set EF_OUTPUT_ELASTICSEARCH_ECS_ENABLE to true.

Environment="EF_OUTPUT_ELASTICSEARCH_ECS_ENABLE=true"

4. Index shards and replicas

For a small single node install, set the number of shards to 1 and replicas to 0.

Environment="EF_OUTPUT_ELASTICSEARCH_INDEX_TEMPLATE_SHARDS=1"

Environment="EF_OUTPUT_ELASTICSEARCH_INDEX_TEMPLATE_REPLICAS=0"

The optimum value for these settings depends on a number of factors. The number of shards should be at least 1 for each Elasticsearch data node in a cluster. Larger nodes (16+ CPU cores) and higher ingest rates can benefit from 2 shards per node. In a multi-node cluster, 1 or more replicas may be specified for redundancy.
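The sizing guidance above can be expressed as a small helper. This is only a sketch of the rule of thumb: it ignores ingest rate, which the text also mentions, and the function names are hypothetical. Replicas are 0 on a single node because Elasticsearch never allocates a replica on the same node as its primary.

```bash
#!/usr/bin/env bash
# Primary shards: at least 1 per data node, 2 per node for large (16+ core) nodes.
ef_shard_count() {
  local data_nodes="$1" cores_per_node="$2"
  local per_node=1
  if [ "$cores_per_node" -ge 16 ]; then
    per_node=2
  fi
  echo $(( data_nodes * per_node ))
}

# Replicas: 0 on a single node (nowhere to place a copy), 1 for redundancy otherwise.
ef_replica_count() {
  local data_nodes="$1"
  if [ "$data_nodes" -gt 1 ]; then echo 1; else echo 0; fi
}
```

For example, three 32-core data nodes give ef_shard_count 3 32, which prints 6, and ef_replica_count 3, which prints 1.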

5. Index lifecycle management

Index lifecycle management (ILM) can be used to roll over the indices that store the ElastiFlow data, preventing issues that can occur when shards become too large. Enable rollover by setting EF_OUTPUT_ELASTICSEARCH_INDEX_PERIOD to rollover. When enabled, the collector will automatically bootstrap the initial index and write alias.

Environment="EF_OUTPUT_ELASTICSEARCH_INDEX_PERIOD=rollover"

The default ILM policy is elastiflow. If this policy doesn't exist, a basic policy will be added that deletes data after 7 days. This policy can be edited later via Kibana or the Elasticsearch ILM API.
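If 7 days of retention is too short, the elastiflow policy can be replaced through the Elasticsearch ILM API. The sketch below assumes ES_URL and ES_API_KEY environment variables. The rollover thresholds and the 30-day default are illustrative values, not ElastiFlow's defaults, and the function names are hypothetical. Note that the delete phase's min_age is measured from rollover.

```bash
#!/usr/bin/env bash
# Build an ILM policy body: roll over in the hot phase, delete N days after rollover.
elastiflow_ilm_body() {
  local retention_days="${1:-30}"
  cat <<EOF
{
  "policy": {
    "phases": {
      "hot": {
        "actions": {
          "rollover": { "max_primary_shard_size": "50gb", "max_age": "1d" }
        }
      },
      "delete": {
        "min_age": "${retention_days}d",
        "actions": { "delete": {} }
      }
    }
  }
}
EOF
}

# Create or update the "elastiflow" policy (ES_URL and ES_API_KEY are assumed).
put_elastiflow_ilm() {
  elastiflow_ilm_body "$1" | curl --fail -sS -X PUT "${ES_URL}/_ilm/policy/elastiflow" \
    -H "Authorization: ApiKey ${ES_API_KEY}" \
    -H "Content-Type: application/json" \
    --data-binary @-
}
```

For example, put_elastiflow_ilm 30 keeps roughly a month of flow data after each rollover.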

6. Elasticsearch server and credentials

Define the Elasticsearch deployment the collector should connect to and the credentials it should use. For the elastic user, this is the password defined during the Elasticsearch installation (or shown when an Elastic Cloud deployment is created). When sending the data to Elastic Cloud, use the Cloud ID for your deployment.

Environment="EF_OUTPUT_ELASTICSEARCH_USERNAME=elastic"

Environment="EF_OUTPUT_ELASTICSEARCH_PASSWORD=YOUR_PWD"

Environment="EF_OUTPUT_ELASTICSEARCH_CLOUD_ID=YOUR_CLOUD_ID"

Instead of specifying a username and password, API keys are also supported.

7. Encrypted communications with TLS

Enable TLS and either specify the path to the CA certificate or disable TLS verification.

Environment="EF_OUTPUT_ELASTICSEARCH_TLS_ENABLE=true"

To verify the server certificate, specify the CA certificate:

Environment="EF_OUTPUT_ELASTICSEARCH_TLS_CA_CERT_FILEPATH=/etc/elastiflow/ca/ca.crt"

Alternatively, disable certificate verification (not recommended outside of testing):

Environment="EF_OUTPUT_ELASTICSEARCH_TLS_SKIP_VERIFICATION=true"

Then reload systemd, and enable and start the collector:

sudo systemctl daemon-reload && \
sudo systemctl enable flowcoll && \
sudo systemctl start flowcoll

Confirm the service started successfully by executing:

sudo systemctl status flowcoll

The collector is now ready to receive flow records from the network infrastructure.
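Beyond systemctl status, two quick checks can confirm that flow records are actually arriving: the collector's recent log output and the document counts of its indices. A sketch, assuming ES_URL and ES_API_KEY environment variables and an elastiflow-* index prefix (an assumption; check the index names in your deployment). The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Post-start checks for the ElastiFlow collector (sketch).
check_flowcoll() {
  # Recent collector log lines: look for license or connection errors.
  sudo journalctl -u flowcoll --since "10 min ago" --no-pager | tail -n 20
  # ElastiFlow indices with document counts; the elastiflow-* prefix is assumed.
  curl --fail -sS -H "Authorization: ApiKey ${ES_API_KEY}" \
    "${ES_URL}/_cat/indices/elastiflow-*?v&h=index,docs.count,store.size"
}
```

A growing docs.count between two runs indicates that flow records are being indexed.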

Import the Kibana Dashboards

Download the latest Kibana dashboards for ElastiFlow from the documentation page here. You will need the dashboards for the ECS schema.

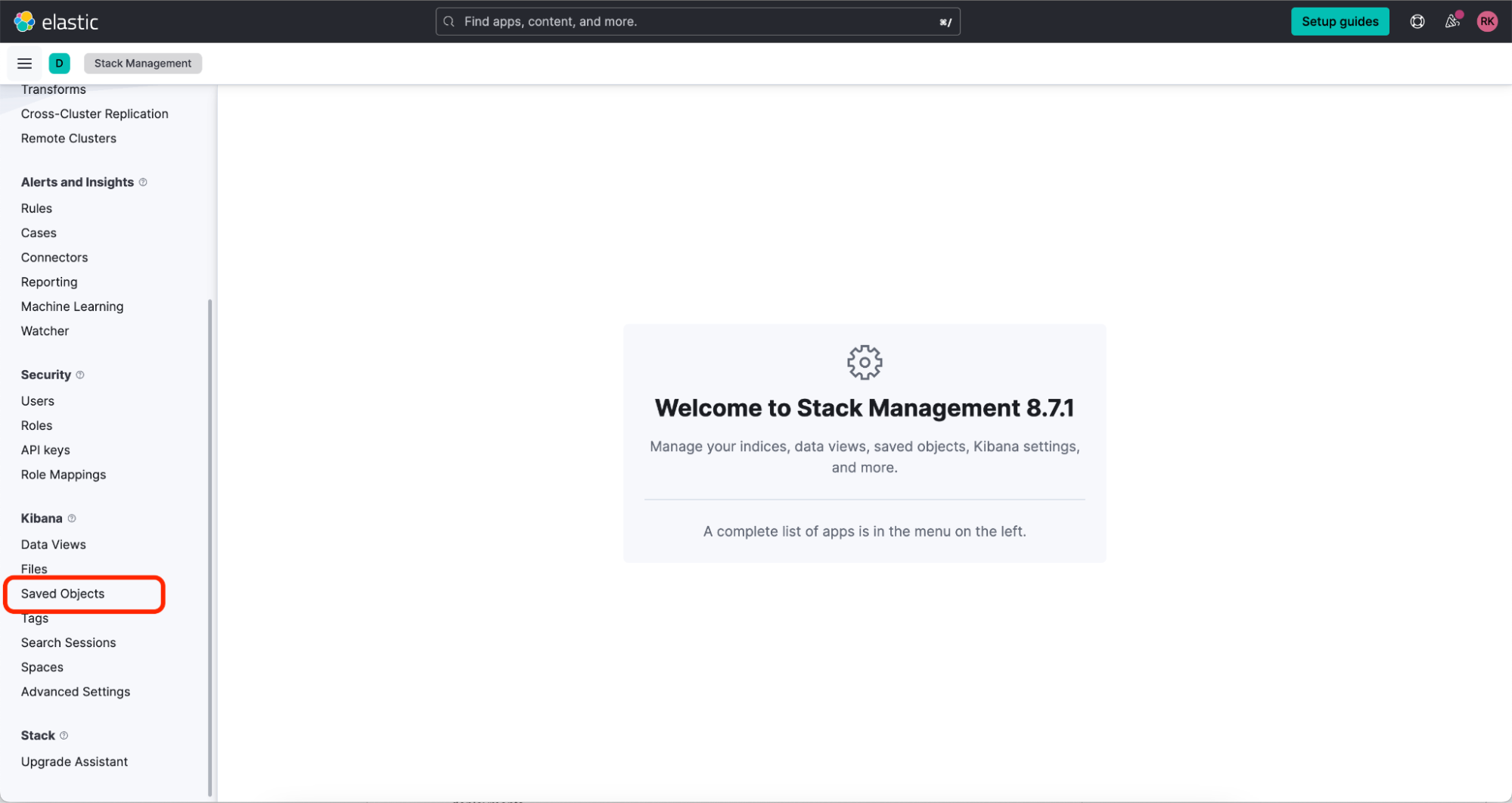

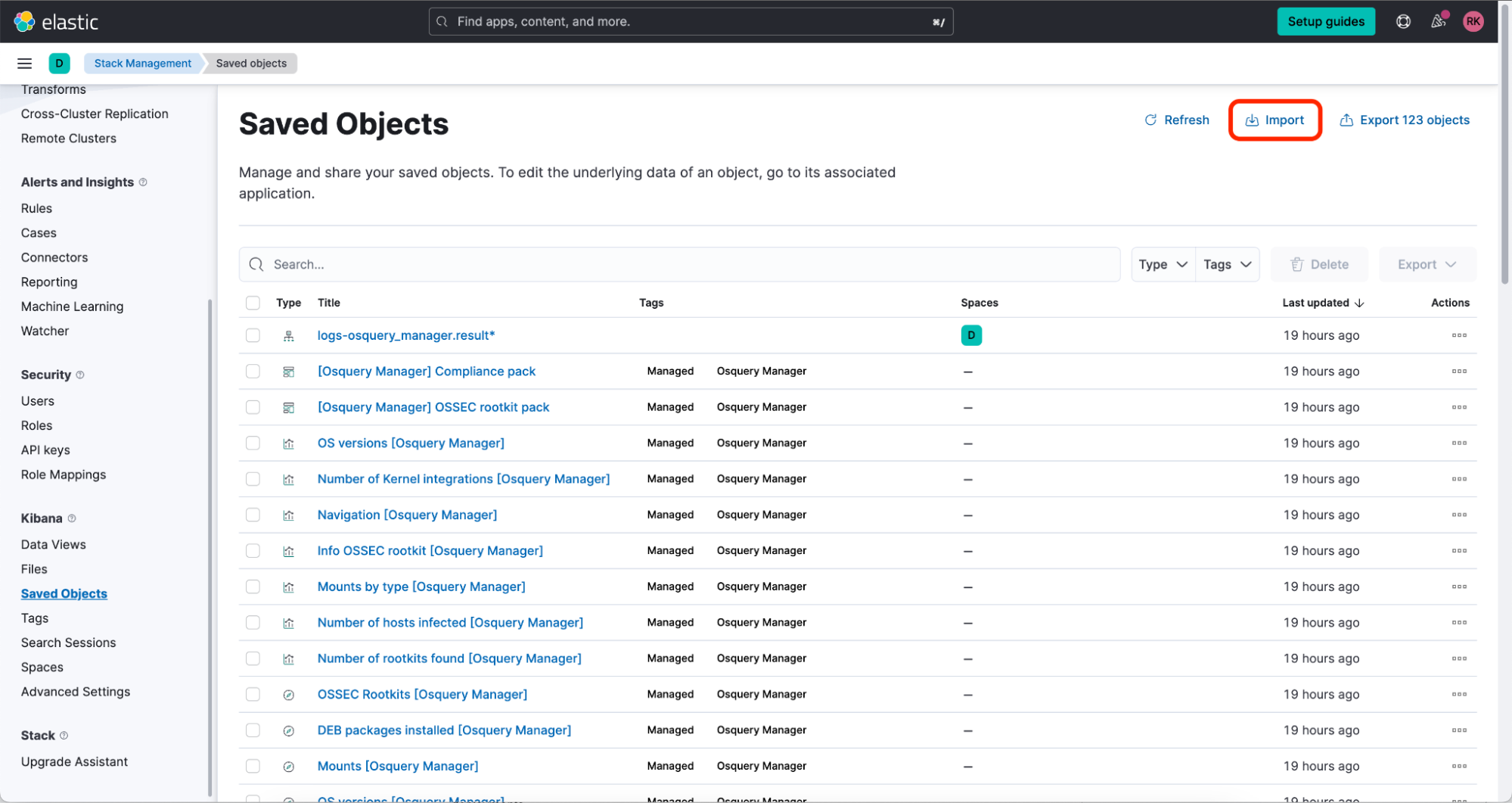

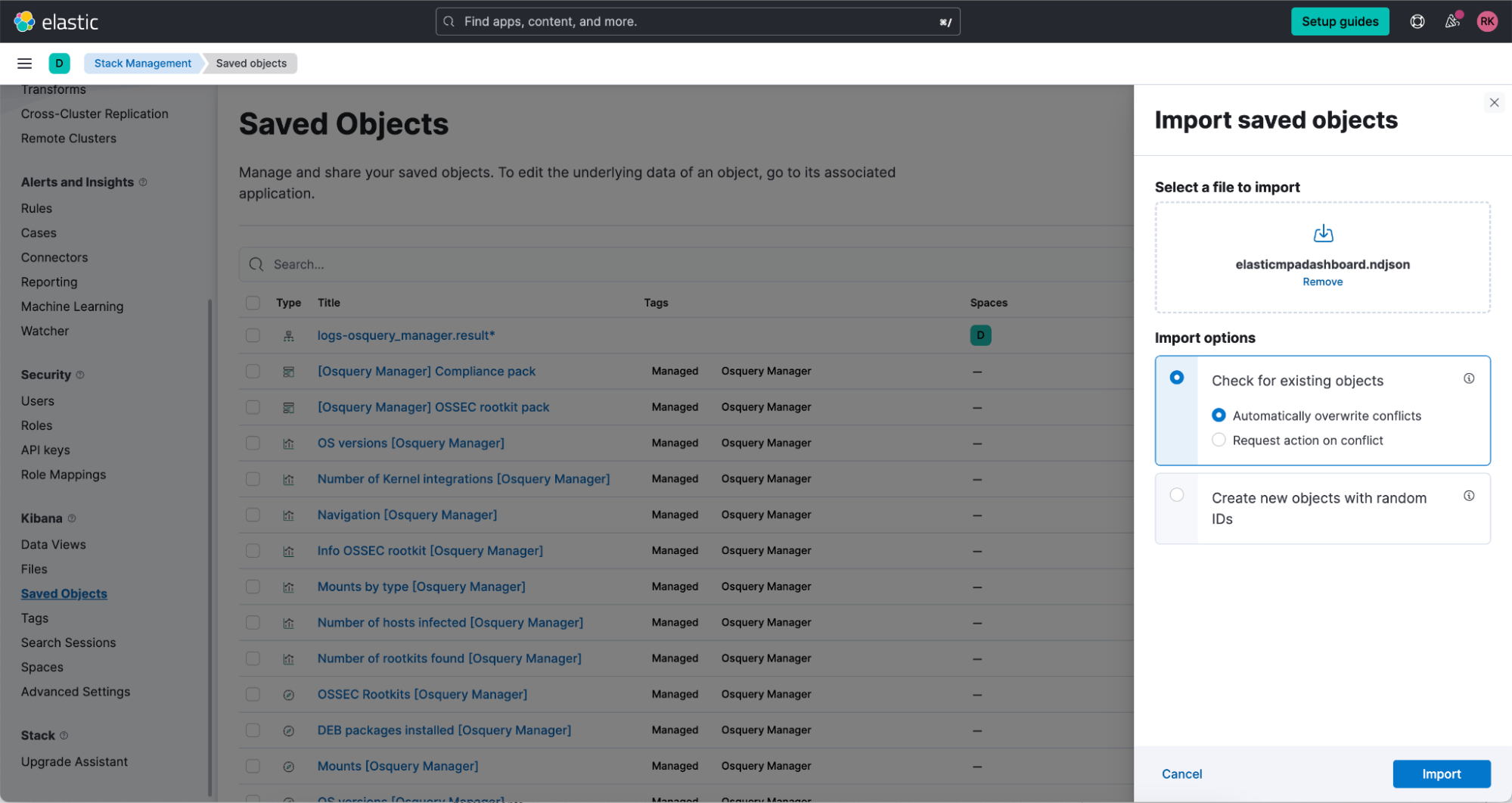

To import the dashboards, in Kibana go to Stack Management → Saved Objects and click Import in the upper right corner.

NOTE: For the best experience, it is recommended that you enable filters:pinnedByDefault under Stack Management → Kibana → Advanced Settings. Pinning a filter allows it to persist when navigating between dashboards. This is very useful when drilling down into something of interest and you want to change dashboards for a different perspective of the same data.
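Both steps can also be scripted against Kibana's HTTP APIs, which helps when setting up several deployments. A sketch, assuming KIBANA_URL and ES_API_KEY environment variables and the default Kibana space. The settings endpoint is the one the Kibana UI itself uses and is not part of the documented public API, so treat it as subject to change; the function names are hypothetical.

```bash
#!/usr/bin/env bash
# Import a saved-objects .ndjson file (dashboards, Lens objects) into Kibana.
kibana_import_ndjson() {
  local file="$1"
  curl --fail -sS -X POST "${KIBANA_URL}/api/saved_objects/_import?overwrite=true" \
    -H "kbn-xsrf: true" \
    -H "Authorization: ApiKey ${ES_API_KEY}" \
    --form "file=@${file}"
}

# Same effect as enabling filters:pinnedByDefault in Advanced Settings.
kibana_pin_filters_by_default() {
  curl --fail -sS -X POST "${KIBANA_URL}/api/kibana/settings" \
    -H "kbn-xsrf: true" \
    -H "Authorization: ApiKey ${ES_API_KEY}" \
    -H "Content-Type: application/json" \
    -d '{"changes":{"filters:pinnedByDefault":true}}'
}
```

For example, kibana_import_ndjson elastiflow-ecs-dashboards.ndjson (a hypothetical file name) imports the downloaded dashboards and overwrites older copies.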

As soon as flow records are received from your network devices, the dashboards will allow you to begin to investigate and analyze your network traffic.

Deploying an Elastic Agent with the Osquery integration

1. Create a policy

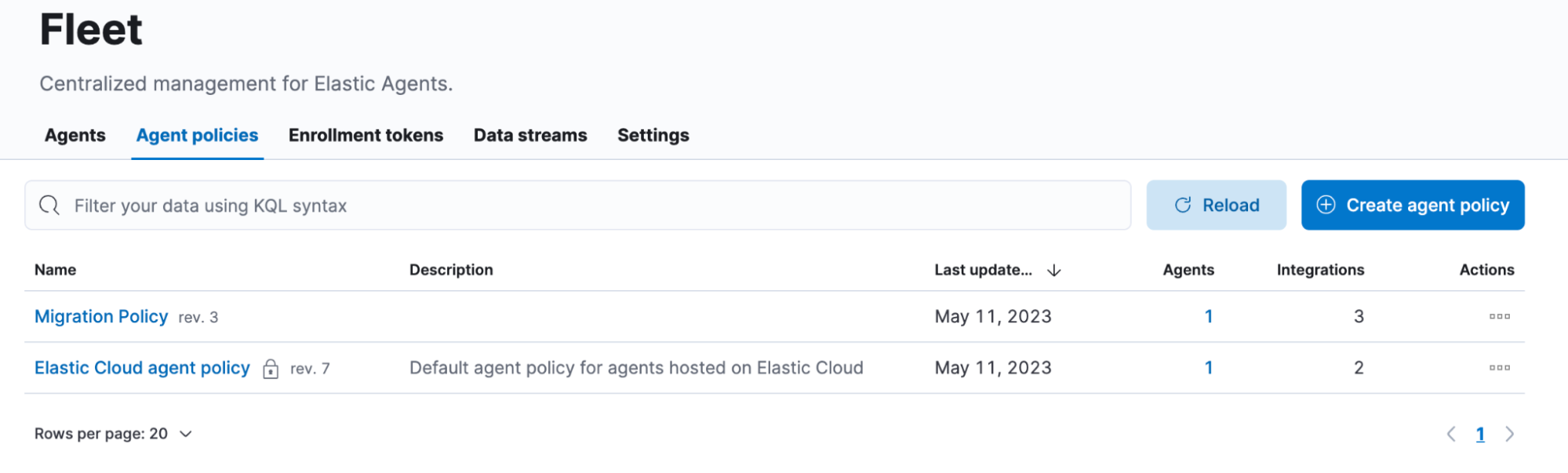

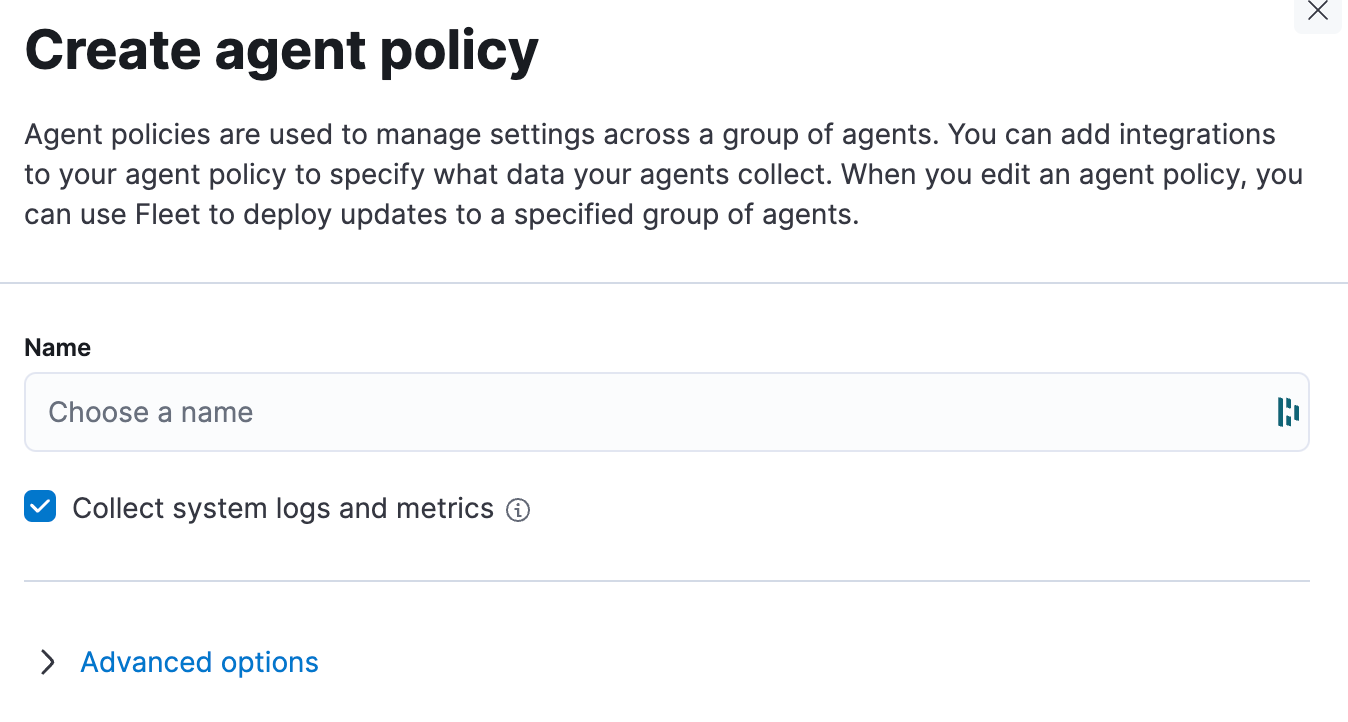

First, create an agent policy. A policy defines which integrations an Elastic Agent runs, and one policy can be assigned to many agents, so you can manage their integrations in one place.

To create a policy, go to Fleet in Kibana and click Create agent policy.

Give it a name (e.g., Migration Policy).

2. Add Osquery integration

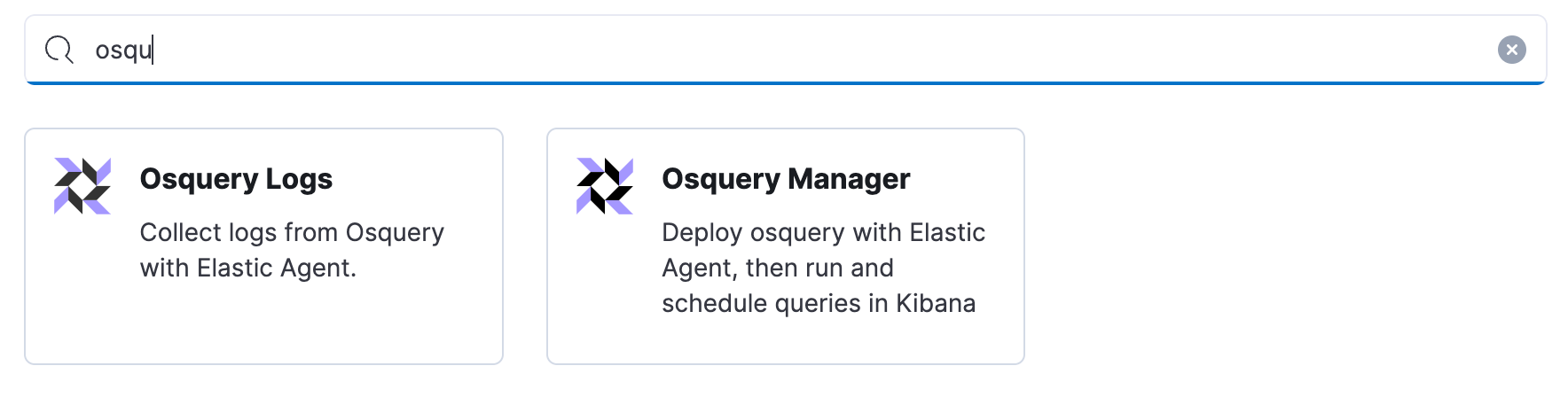

At the time of writing, Elastic offers more than 300 integrations, two of which are based on Osquery. Osquery lets you query an operating system as if it were a database, so you can enumerate the processes running on the OS, memory usage, available storage, and much more. The data is persisted in Elastic and can be used in our dashboards. This is one of the ways to retrieve host-level insights.

To add an integration, click on the Add Integration button.

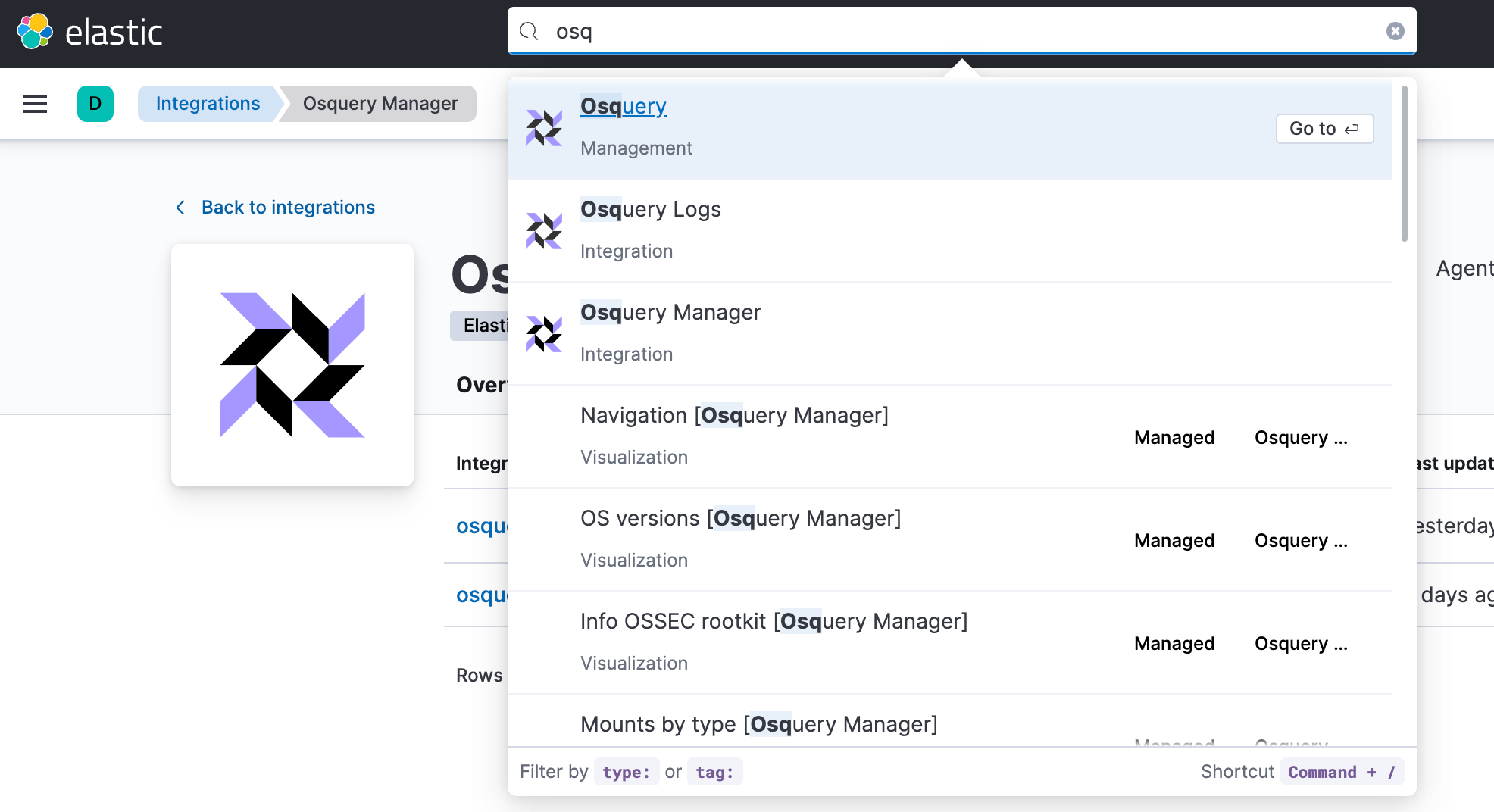

Search for Osquery:

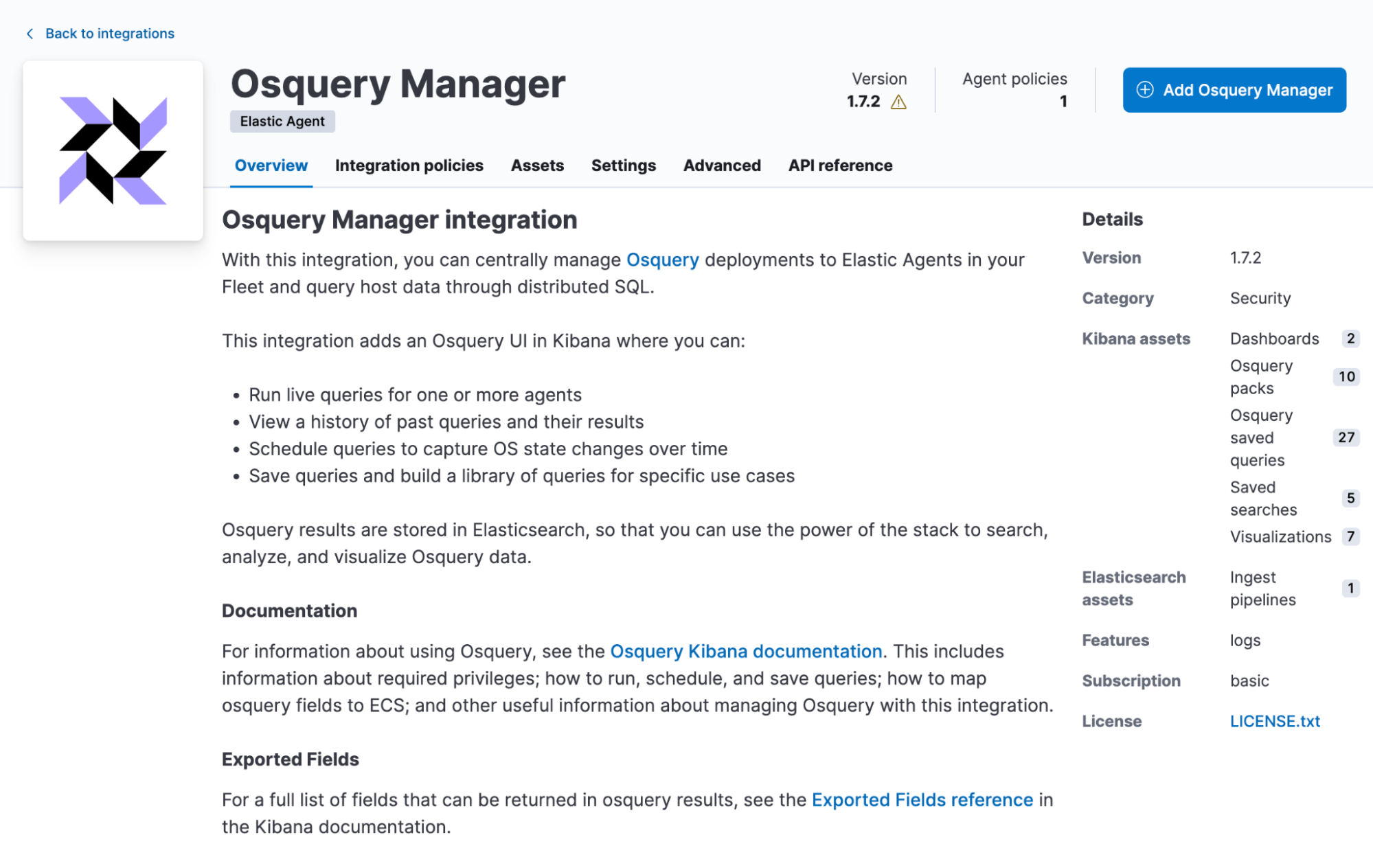

Select Osquery Manager. It allows you to schedule queries.

Click on Add Osquery Manager.

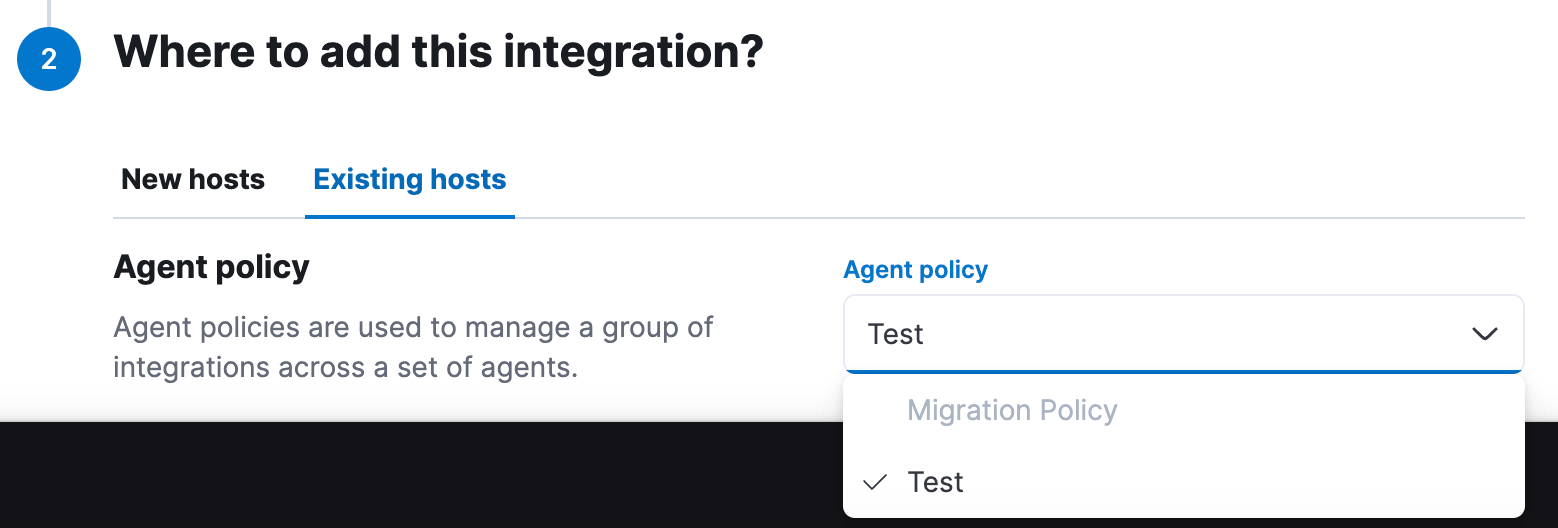

Give the integration a name and, at the bottom, select Existing hosts and choose the policy you created.

Click on Save and continue.

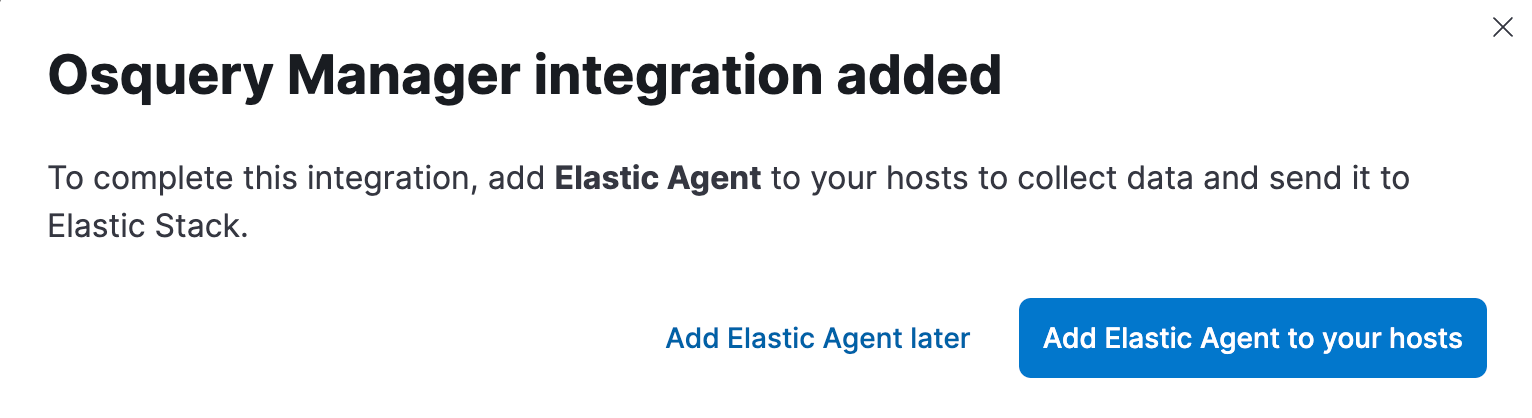

3. Add Elastic Agent to your hosts

Because no Elastic Agents are deployed yet, Kibana displays this prompt.

Click Add Elastic Agent to your hosts.

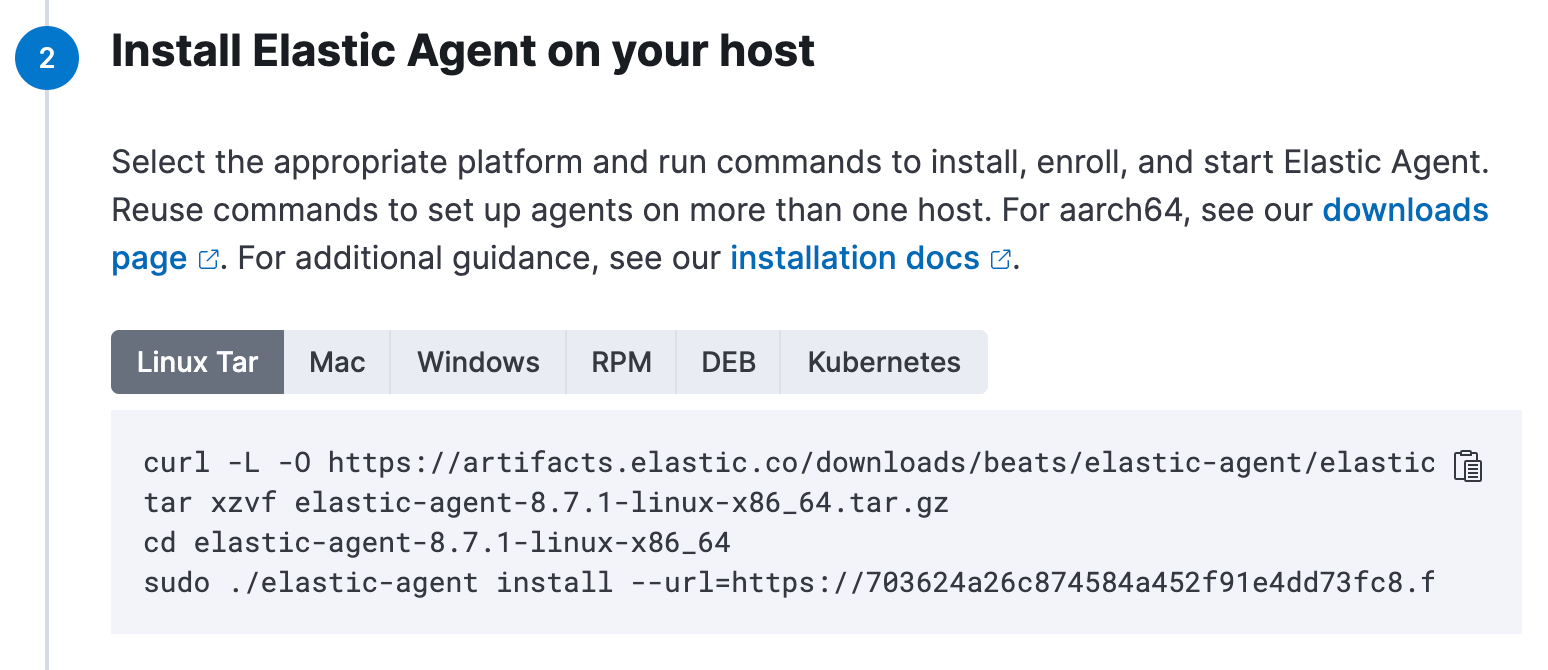

Follow the instructions there and deploy an Agent on your target hosts. All major operating systems are supported. Kubernetes as a deployment option is supported as well.

After a while, you should be able to see a confirmation of Agent enrollment in step 3.

If you have more hosts to be monitored, be sure to install the agent on them as well.
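On Linux, the instructions Kibana generates boil down to downloading the agent archive, extracting it, and running elastic-agent install with a Fleet Server URL and an enrollment token. The sketch below wraps those steps so they can be repeated on each host. The version, URL, and token are placeholders to copy from Kibana, and the function names are hypothetical.

```bash
#!/usr/bin/env bash
# Download, extract, install, and enroll Elastic Agent on a Linux x86_64 host.
install_elastic_agent() {
  local version="$1"    # agent version matching your stack, as shown in Kibana
  local fleet_url="$2"  # Fleet Server URL from Kibana
  local token="$3"      # enrollment token for the Migration Policy
  local pkg="elastic-agent-${version}-linux-x86_64"
  curl --fail -sSLO "https://artifacts.elastic.co/downloads/beats/elastic-agent/${pkg}.tar.gz" || return 1
  tar xzf "${pkg}.tar.gz" || return 1
  # Installs the agent as a service and enrolls it in Fleet.
  (cd "$pkg" && sudo ./elastic-agent install --url="$fleet_url" --enrollment-token="$token")
}

# Report the installed agent's health.
agent_status() {
  sudo elastic-agent status
}
```

Running the same function on every target host enrolls them all in the Migration Policy.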

4. Query hosts

In the search box, search for Osquery. Select “Osquery Management.”

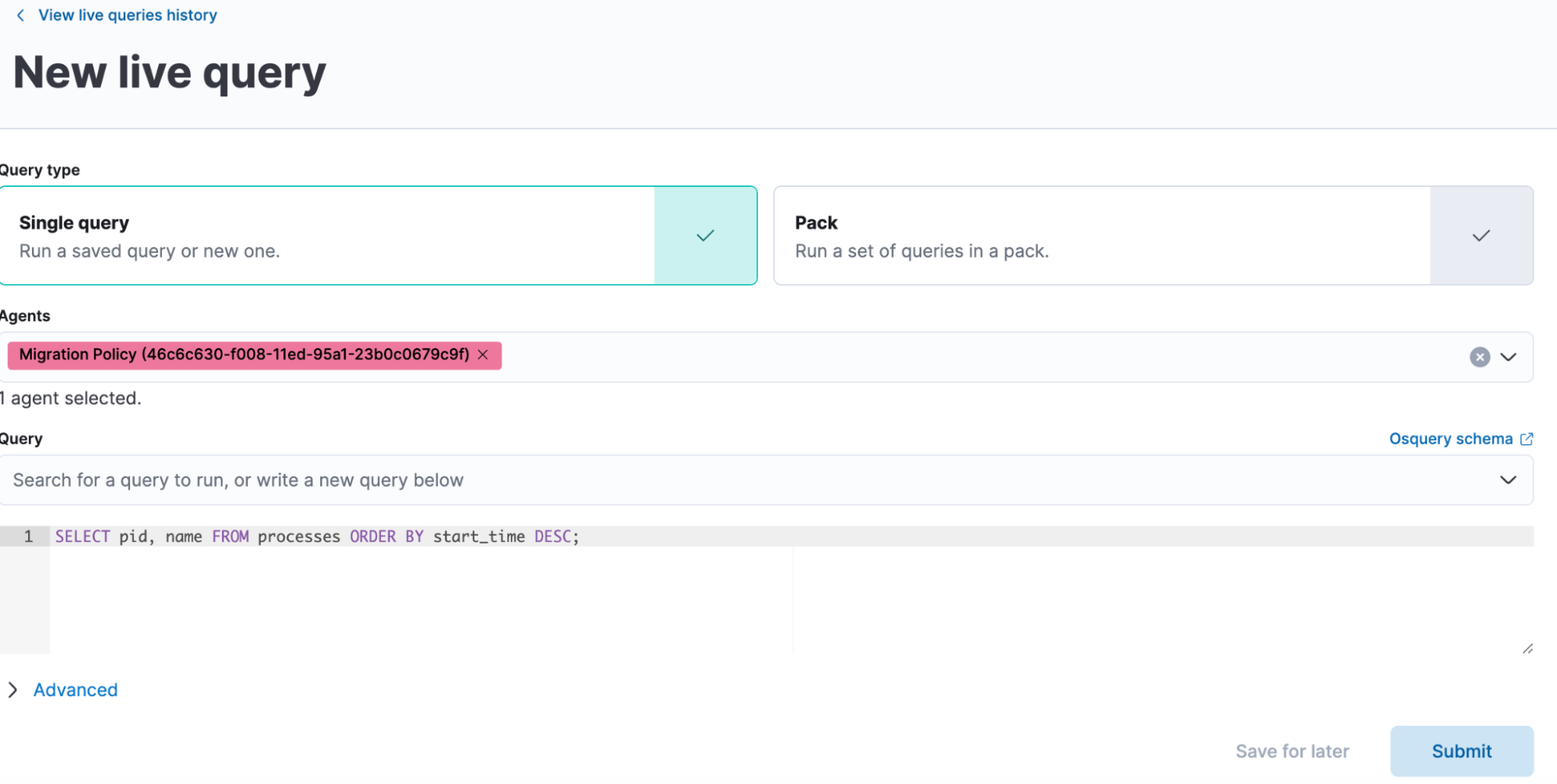

Click on New live query.

Select Single query, then choose the agents or policies to run the query on.

Enter this query:

SELECT pid, name FROM processes ORDER BY start_time DESC;

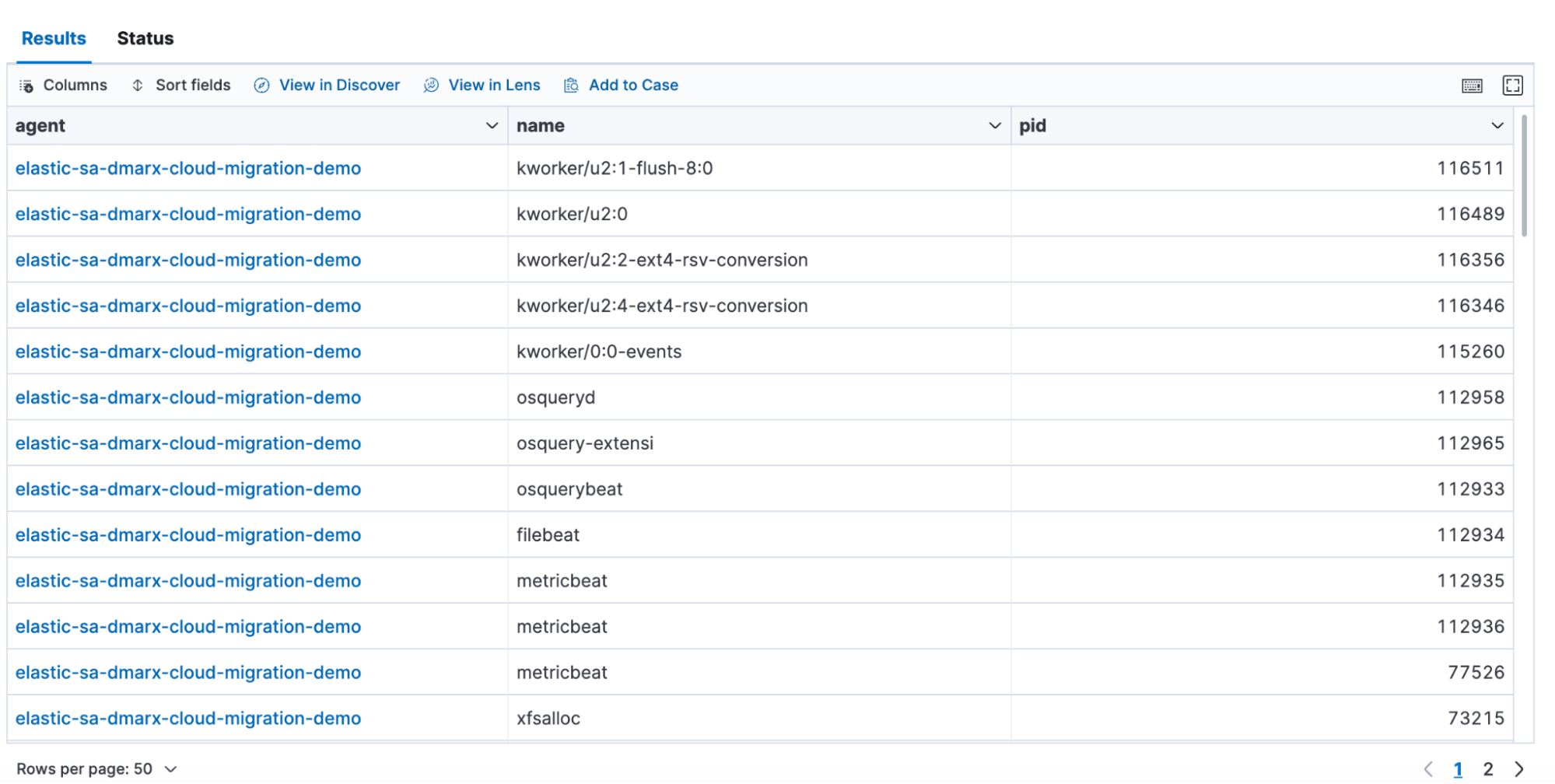

After a while, you should be able to see results that will be persisted in an index.
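For the Assess phase, a query that returns host sizing (CPU, memory, OS) is often more useful than a process list. A sketch, assuming the standard osquery system_info and os_version tables; the variable and function names are hypothetical. Paste the SQL into the live query editor, or run it locally where osqueryi is installed.

```bash
#!/usr/bin/env bash
# Host sizing query: logical CPUs, memory in MiB, and OS name/version.
# system_info and os_version each return a single row, so the join yields one row.
HOST_SIZING_SQL='SELECT s.hostname,
       s.cpu_logical_cores,
       s.physical_memory / 1048576 AS memory_mib,
       o.name AS os_name,
       o.version AS os_version
FROM system_info AS s, os_version AS o;'

# Run the same query locally with the interactive osquery shell, as JSON.
run_host_sizing_locally() {
  osqueryi --json "$HOST_SIZING_SQL"
}
```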

5. Import Kibana Dashboards

Import the overview dashboard from this repository.

TCO report pipeline requirement

In order to capture the correct metrics for a TCO analysis, you will need to capture storage IOPS. By default, Elastic Agent does not create this field, but it can easily be calculated during ingestion using an ingest pipeline. The pipeline can be created by following these steps.

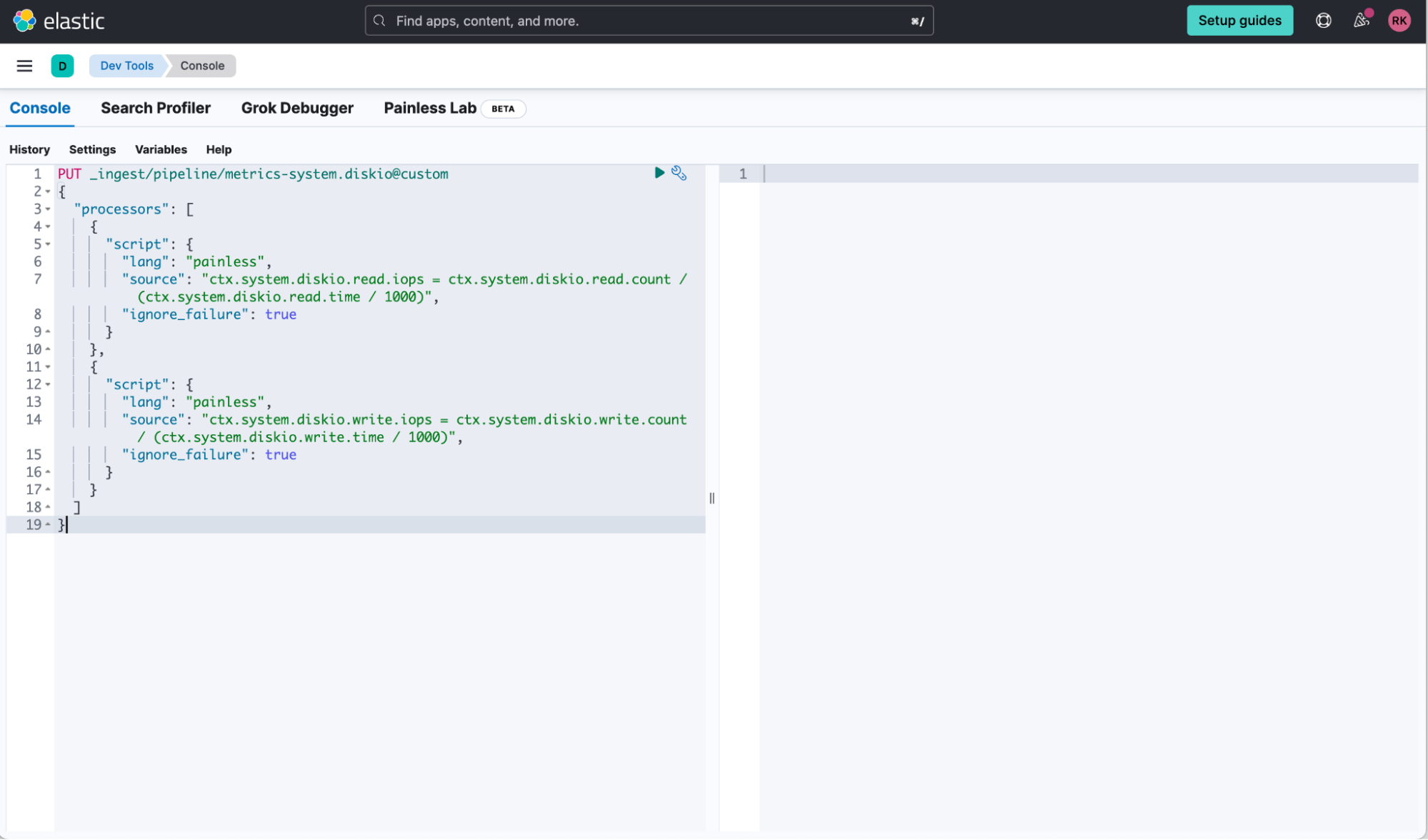

1. Copy the pipeline script

The script to create the pipeline can be found here. Copy the contents of IOPSfieldpipeline.json.

2. Run the script to create the pipeline

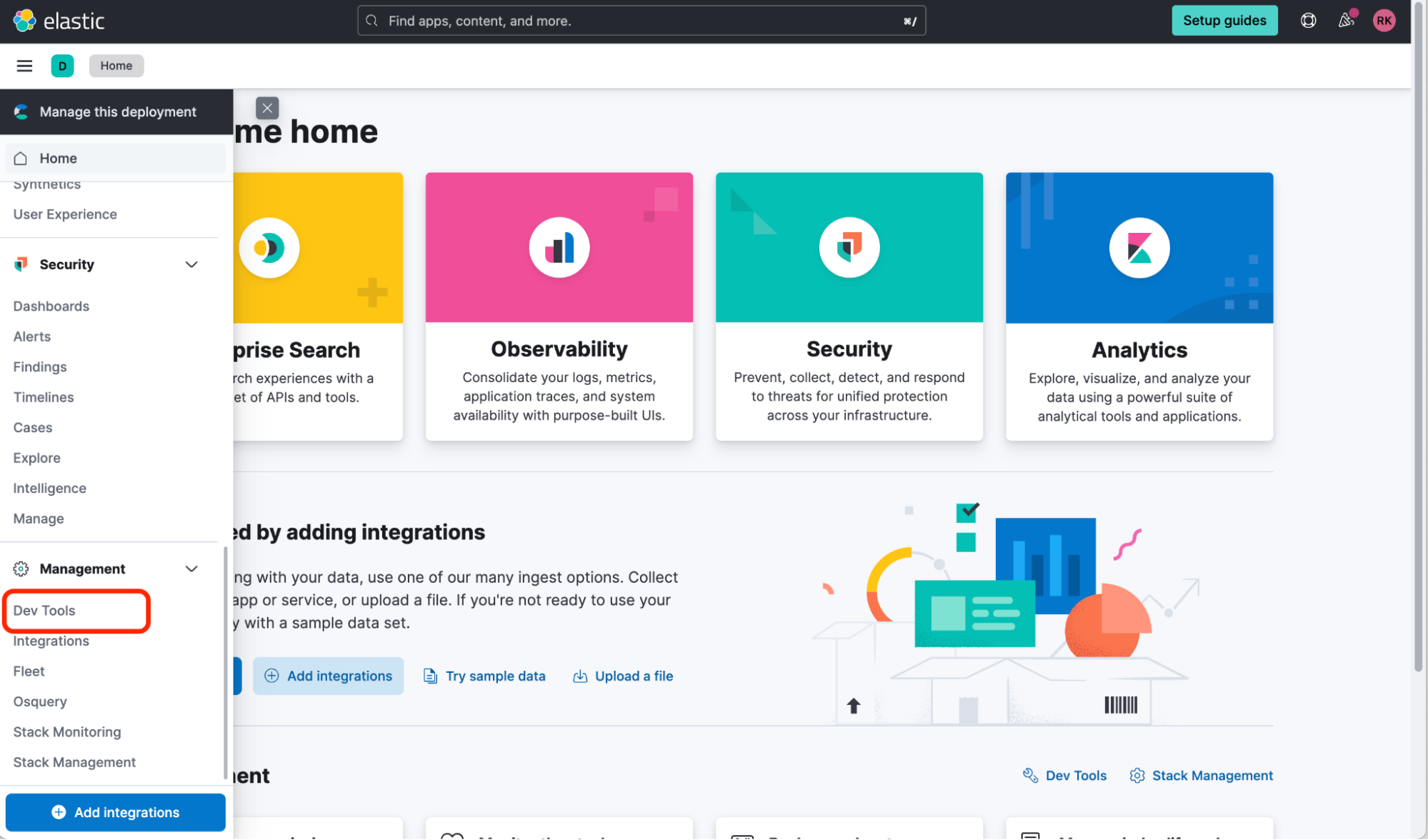

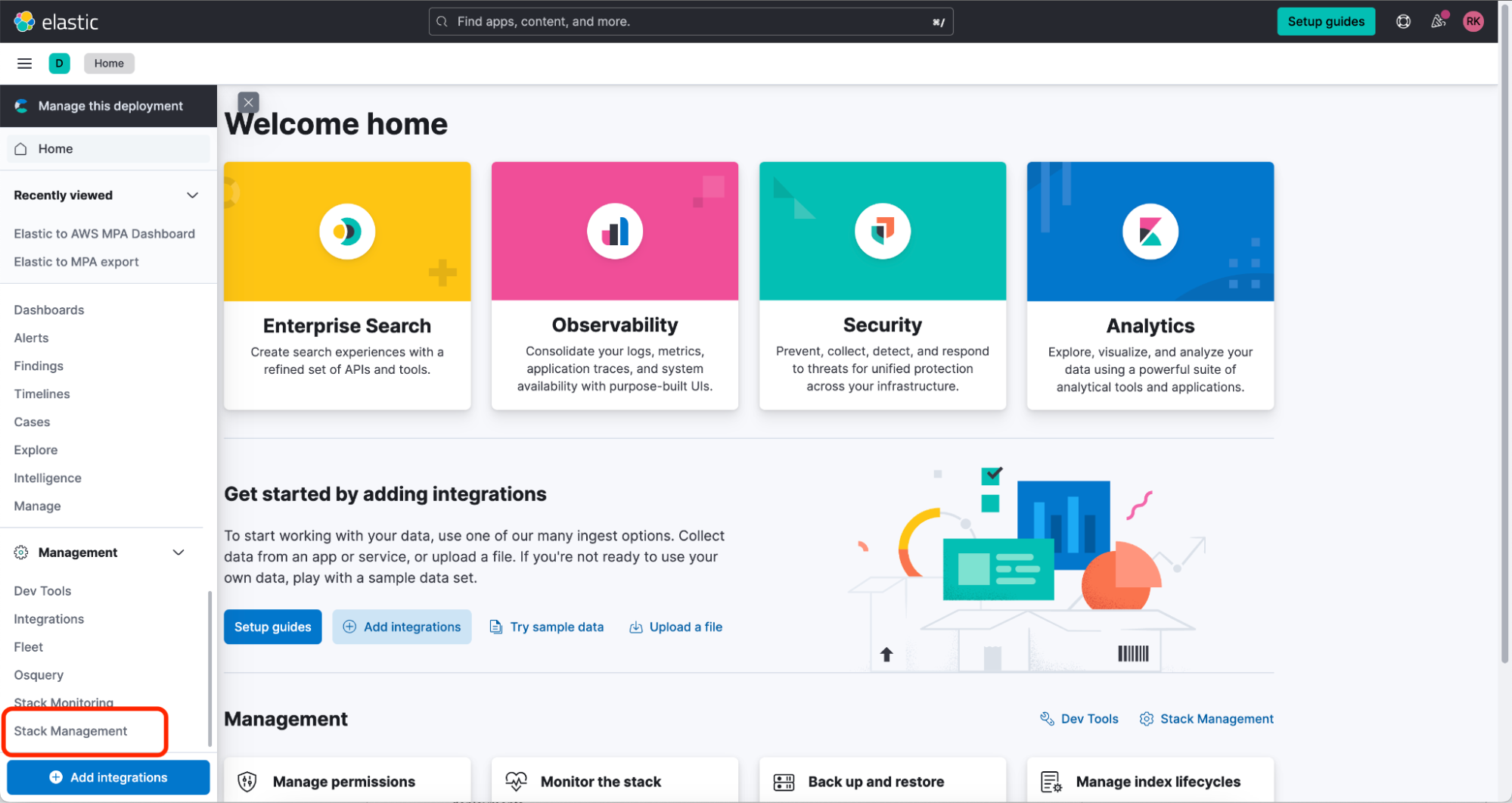

From the homepage of Kibana, select the burger menu on the top left corner of the screen and scroll down to select Management>Dev Tools.

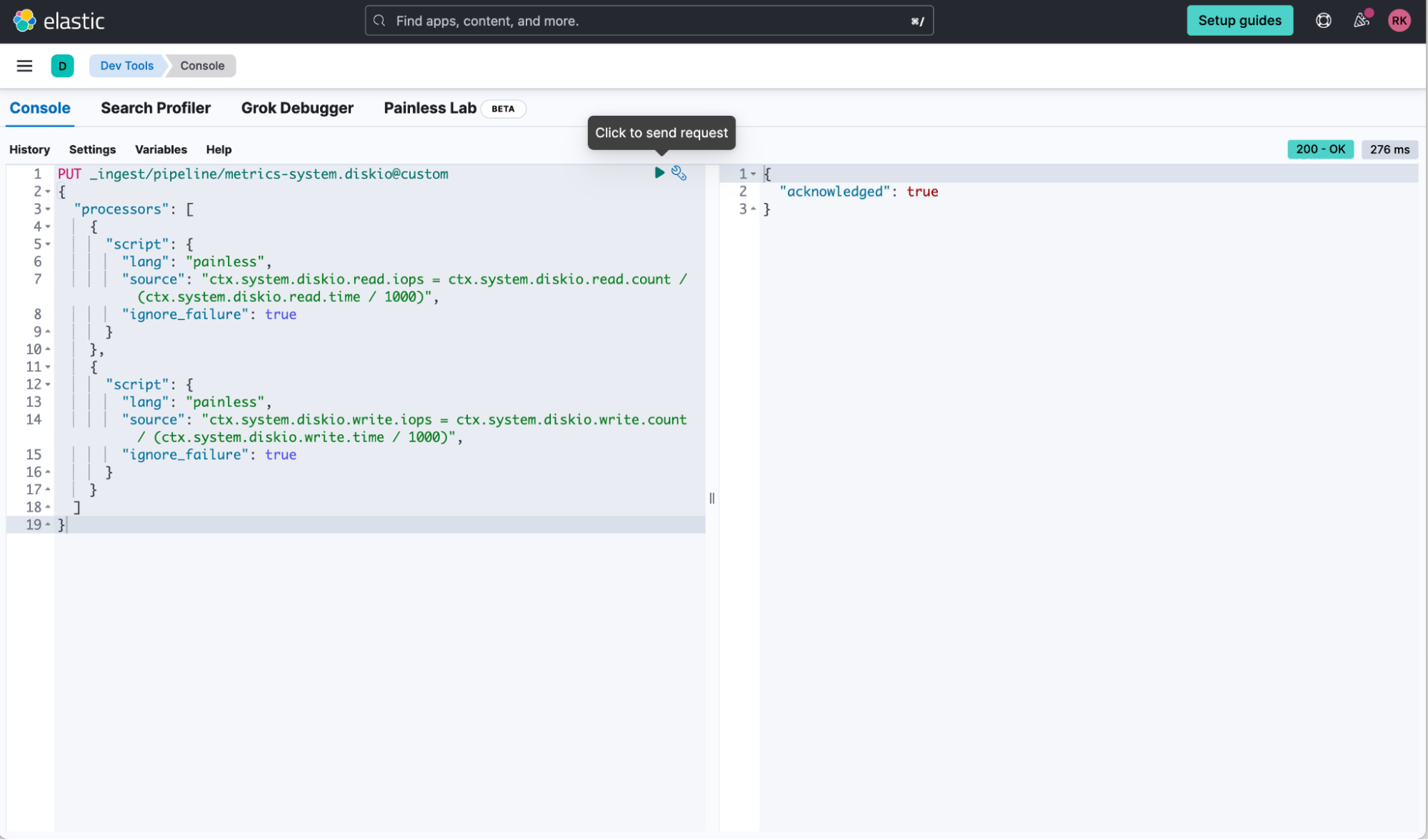

Now paste the contents of the script and run it. NOTE: The pipeline and field names must not be changed.

You should then receive a positive response:

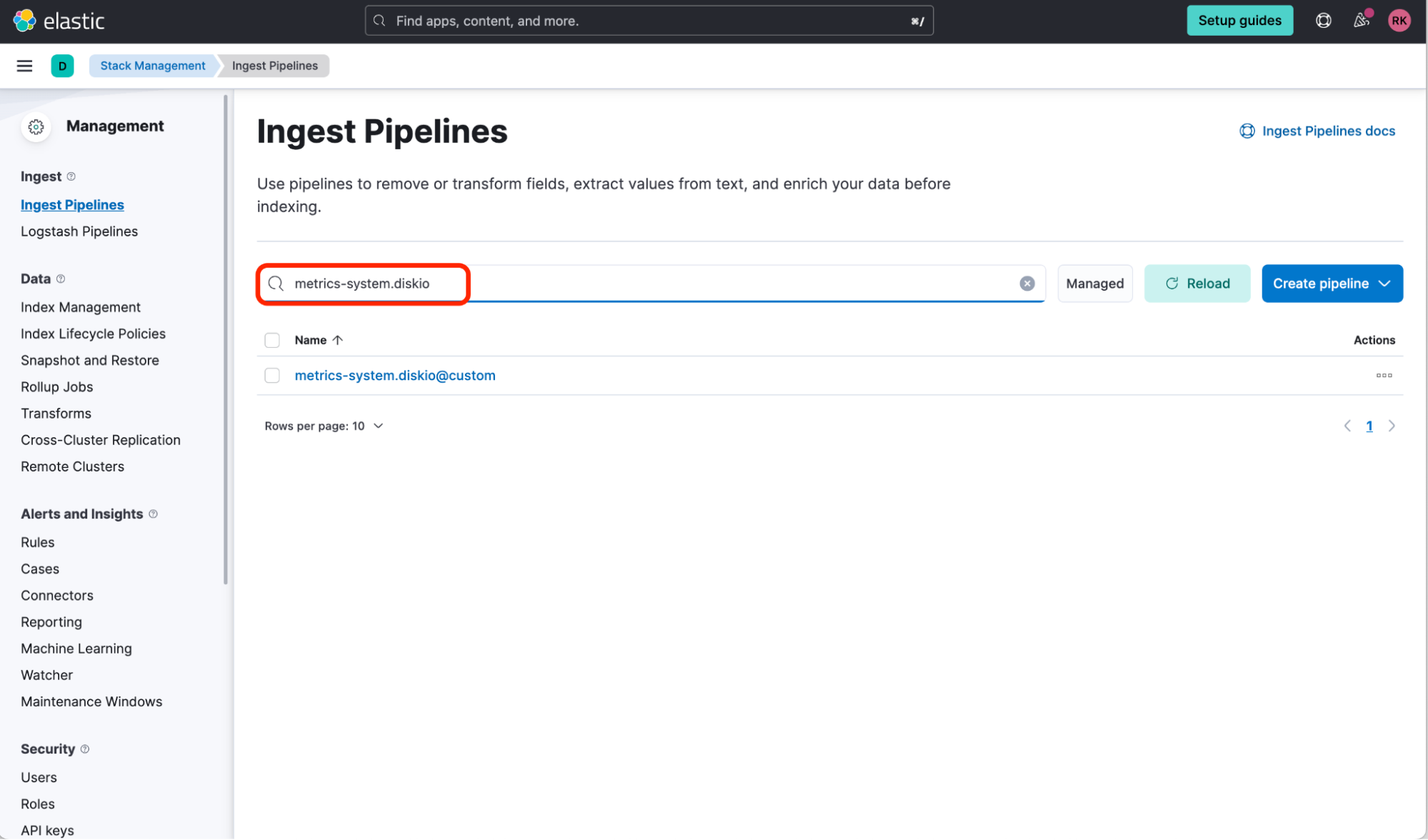

You can now use the burger menu in the top left corner of the screen and select Management>Stack Management and then select Ingest>Ingest Pipelines. Now search for “metrics-system.diskio” and you should see the pipeline we have created.
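The same check can be done from the command line with the ingest pipeline API. A sketch, assuming ES_URL and ES_API_KEY environment variables; the wildcard may also match pipelines installed by the System integration itself, and the function name is hypothetical.

```bash
#!/usr/bin/env bash
# List ingest pipelines whose ID starts with metrics-system.diskio.
list_diskio_pipelines() {
  curl --fail -sS -H "Authorization: ApiKey ${ES_API_KEY}" \
    "${ES_URL}/_ingest/pipeline/metrics-system.diskio*?pretty"
}
```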

Elastic will now create the extra fields we need for our TCO analysis when ingesting data from Elastic Agent.
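To illustrate what an IOPS field represents: the System integration reports cumulative per-disk counters (system.diskio.read.count and system.diskio.write.count), and IOPS is the change in completed reads plus writes divided by the seconds between two samples. The helper below is a worked example of that formula only, not the contents of IOPSfieldpipeline.json, which may compute the field differently. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# IOPS = ((cur_reads - prev_reads) + (cur_writes - prev_writes)) / elapsed_seconds
iops() {
  local prev_reads="$1" prev_writes="$2" cur_reads="$3" cur_writes="$4" seconds="$5"
  awk -v pr="$prev_reads" -v pw="$prev_writes" \
      -v cr="$cur_reads" -v cw="$cur_writes" -v s="$seconds" \
      'BEGIN { if (s <= 0) exit 1; printf "%.2f\n", ((cr - pr) + (cw - pw)) / s }'
}
```

For example, 600 additional reads and 400 additional writes over 10 seconds, iops 1000 500 1600 900 10, prints 100.00.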

Generating the AWS TCO report

1. Import the required Kibana Objects

In order to visualize your data, you will first need to import the required Lens and Dashboard objects into Kibana.

The required .ndjson files can be found here.

Once downloaded, you will need to import the .ndjson files into Kibana.

Once logged in to Kibana, select the burger menu in the top left corner, then scroll down and select Management>Stack Management.

Now select Kibana>Saved Objects.

You will need to select the Import option toward the top right of the Saved Objects screen.

You can now import the .ndjson files.

Once you have finished importing the files, you can visualize your data.

2. Visualize and download data

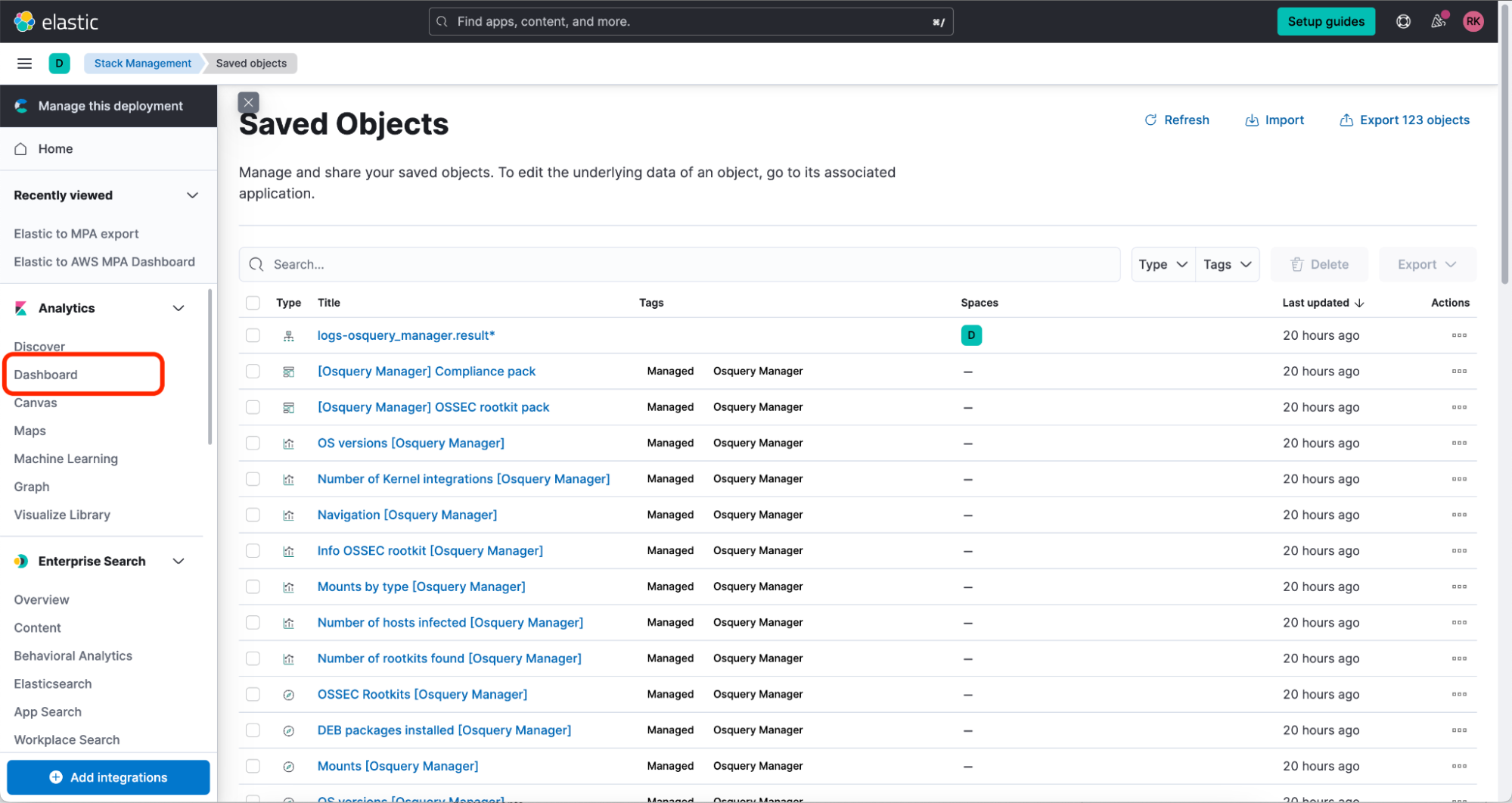

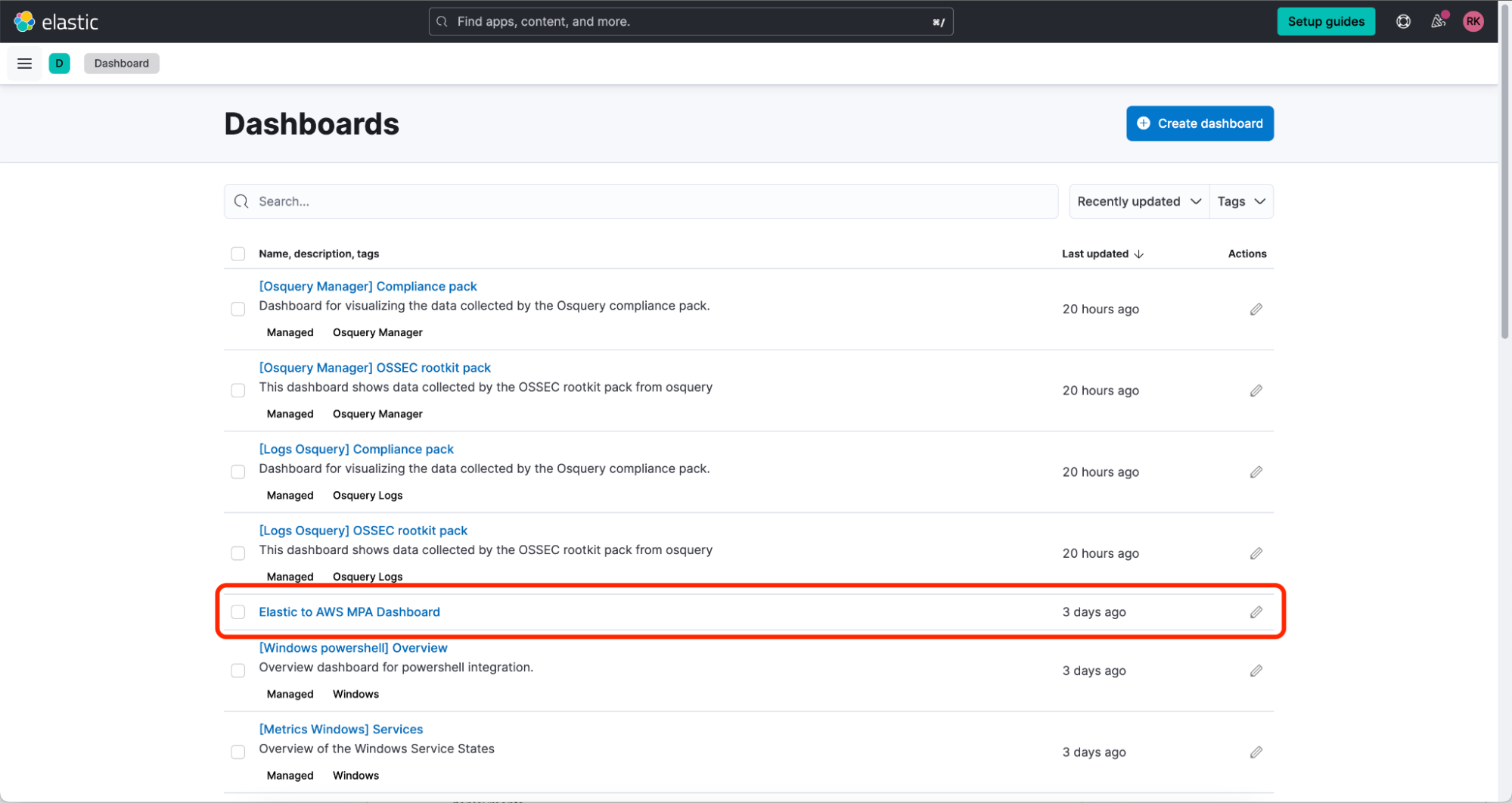

Use the burger menu in the top left of Kibana and select Analytics>Dashboard.

Now browse and find Elastic to MPA Dashboard.

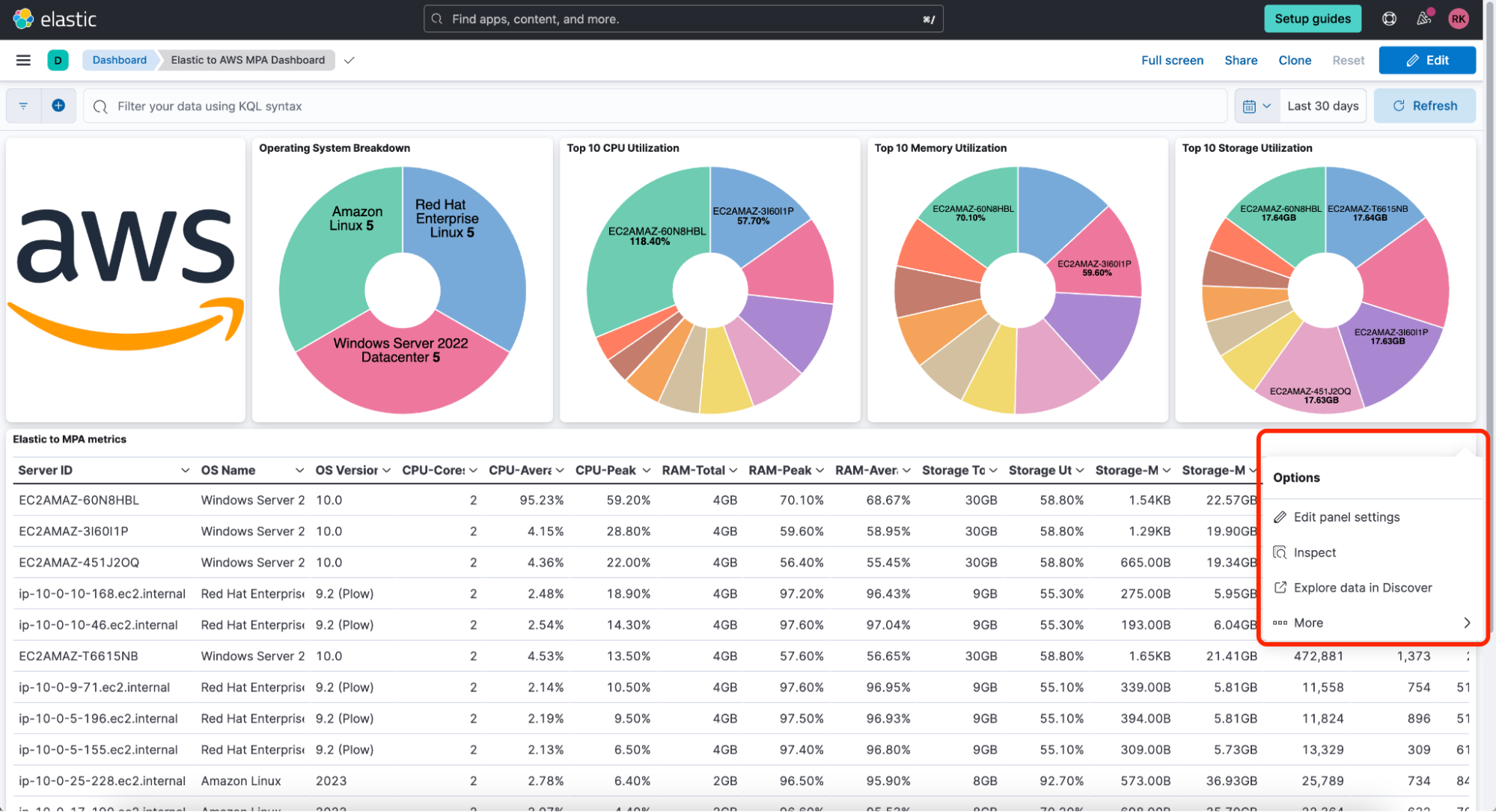

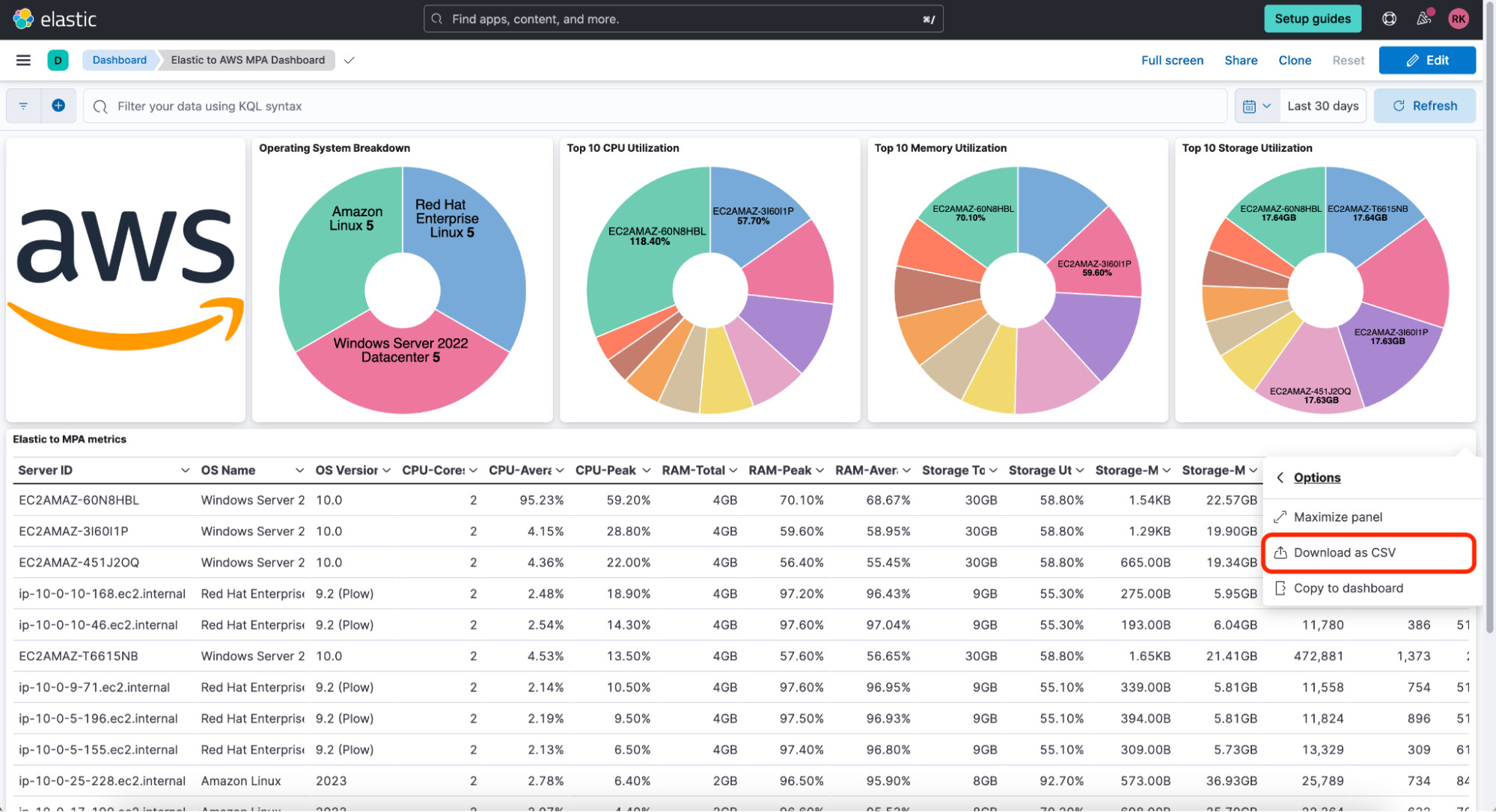

Use the dashboard to visualize your data. Then open the Options menu of the Elastic to MPA export panel (the three dots in its top right corner), select More, and download the table contents as a .csv file.

We can then use this file to import the data into the Migration Portfolio Assessment portal for TCO calculation.
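Before uploading, it can help to confirm that the export contains the expected columns and one row per server. A sketch that assumes a simple comma-separated file without line breaks inside quoted fields; the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Print each header column on its own line, then the number of data rows.
csv_summary() {
  local file="$1"
  # Strip any CR from CRLF line endings before splitting the header on commas.
  head -n 1 "$file" | tr -d '\r' | tr ',' '\n'
  # NR counts the last line even if the file lacks a trailing newline.
  echo "rows: $(awk 'END { print NR - 1 }' "$file")"
}
```

The row count should match the number of hosts running Elastic Agent.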

3. Import data and calculate TCO

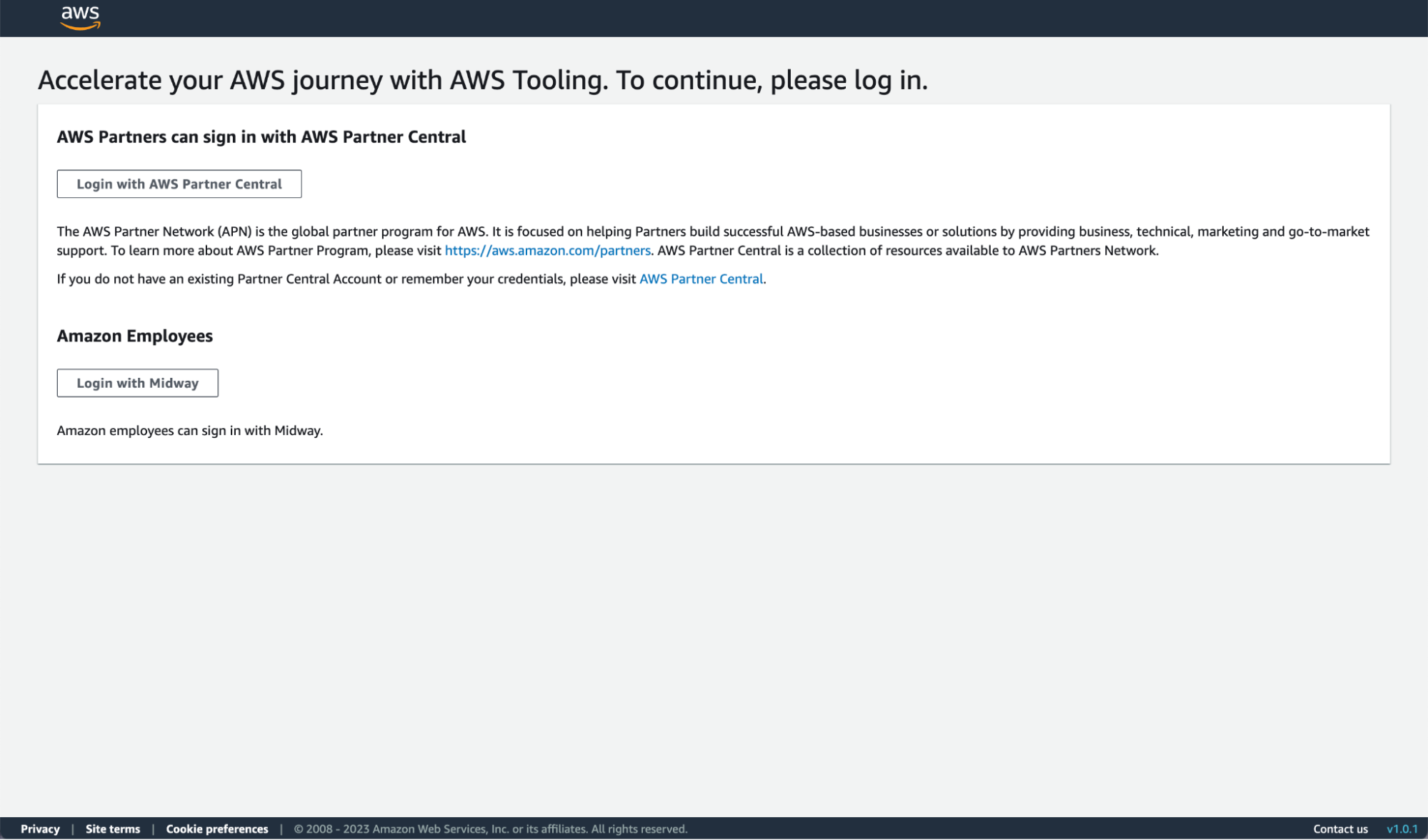

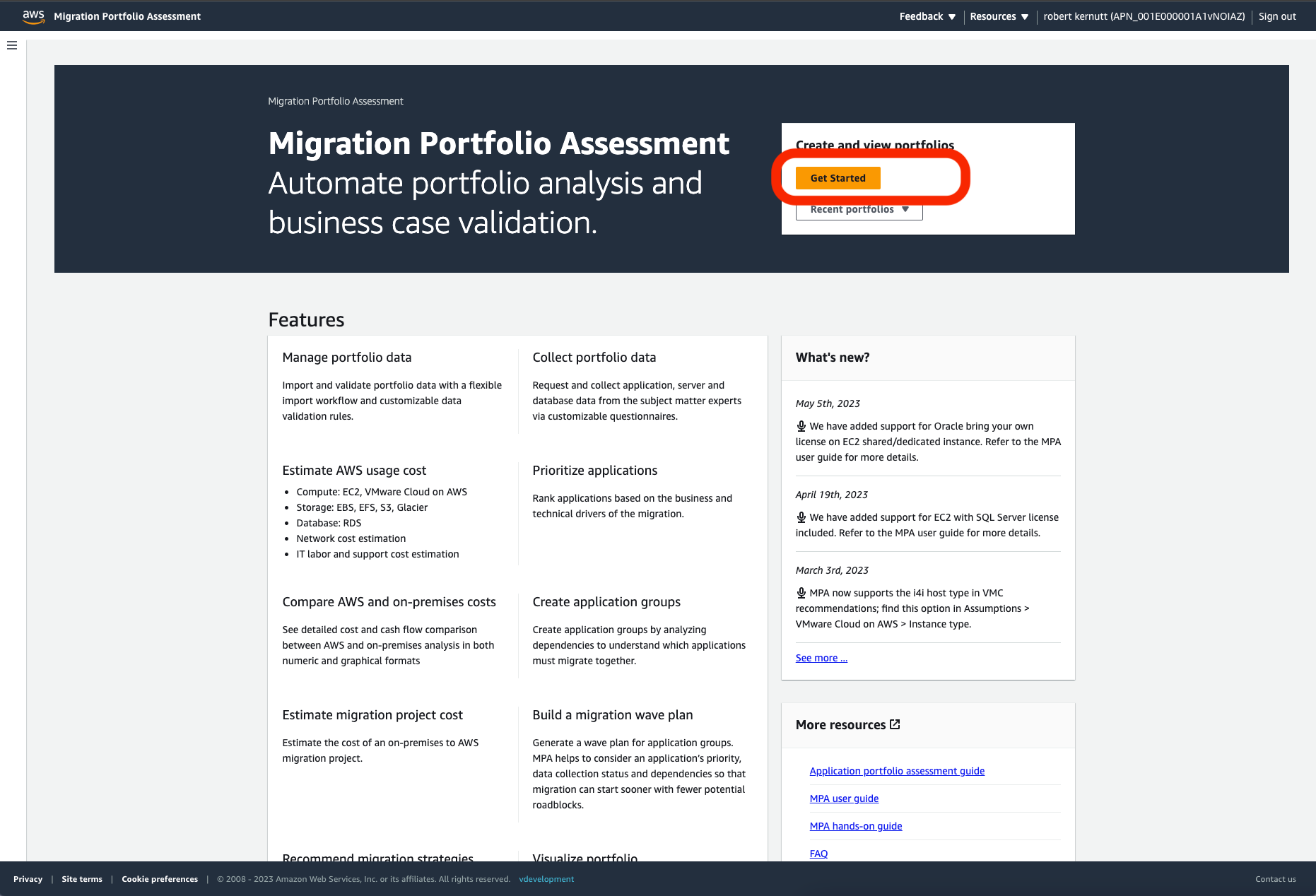

NOTE: To perform a TCO analysis, you will need to be an Amazon employee or be an AWS partner and have an AWS Partner Central account.

The TCO analysis is performed using the MPA portal from AWS. We will create a portfolio and upload the metrics data we have collected in Elastic. To do this, log in to the site using your Midway or APN credentials.

Select Get Started.

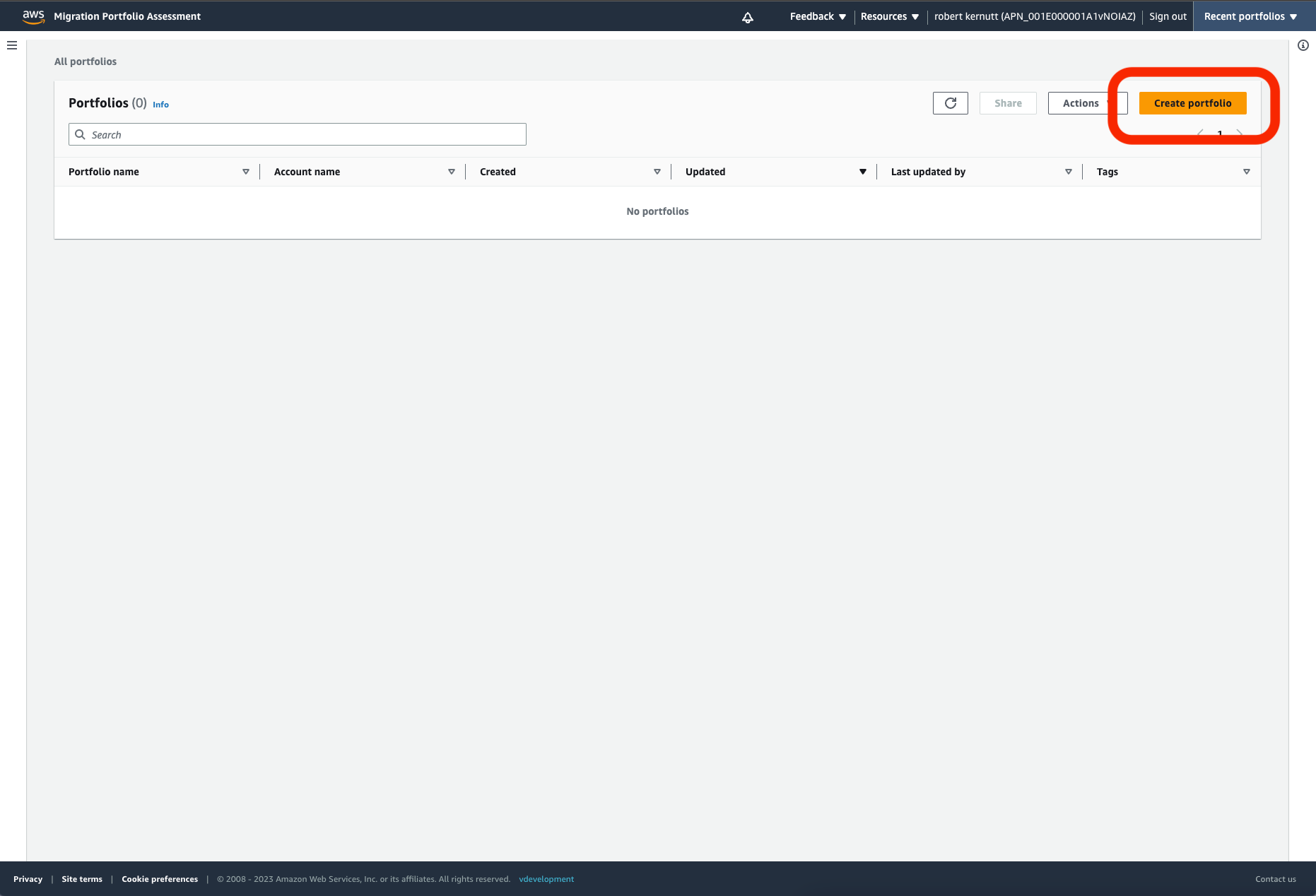

Then select Create Portfolio.

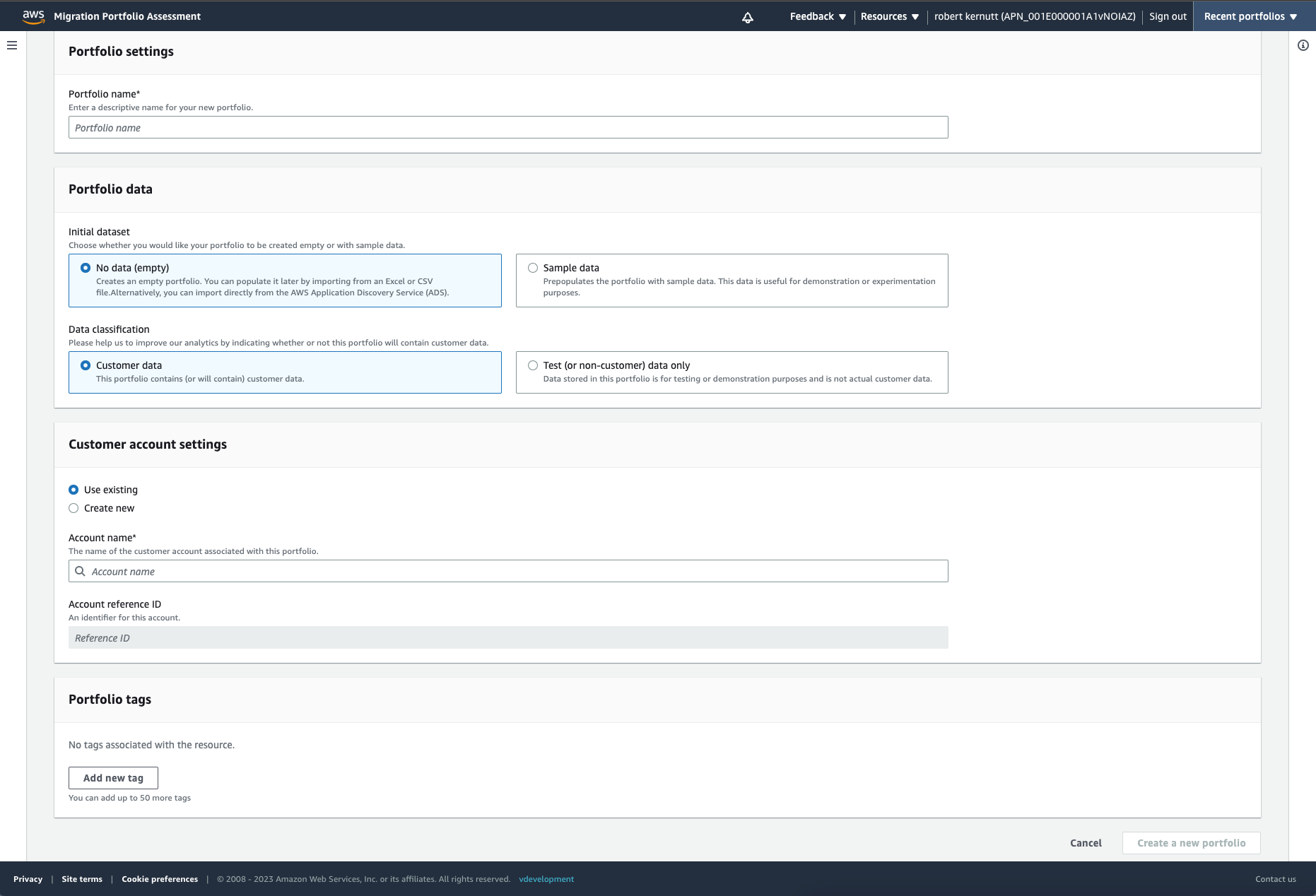

Now complete the details required and select Create a new portfolio.

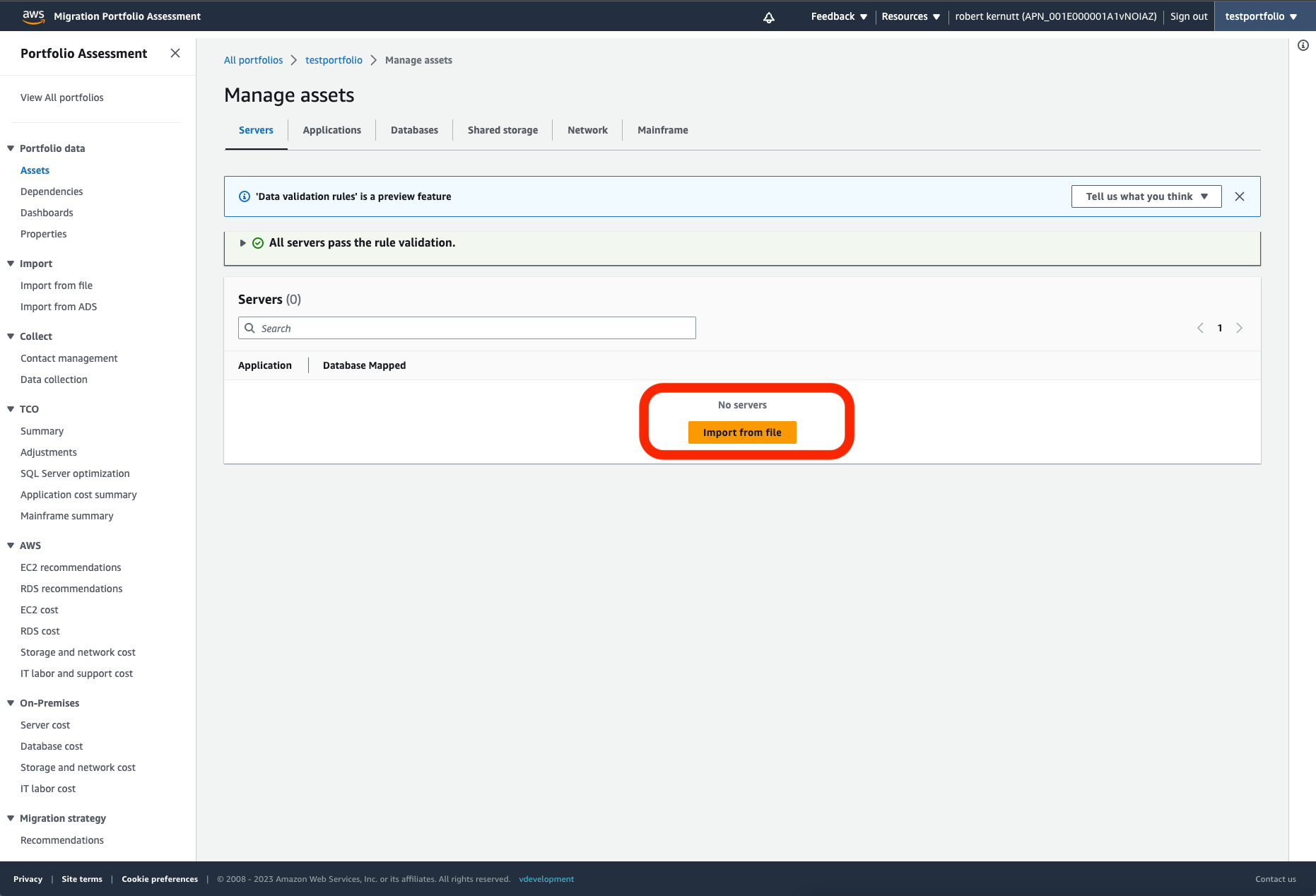

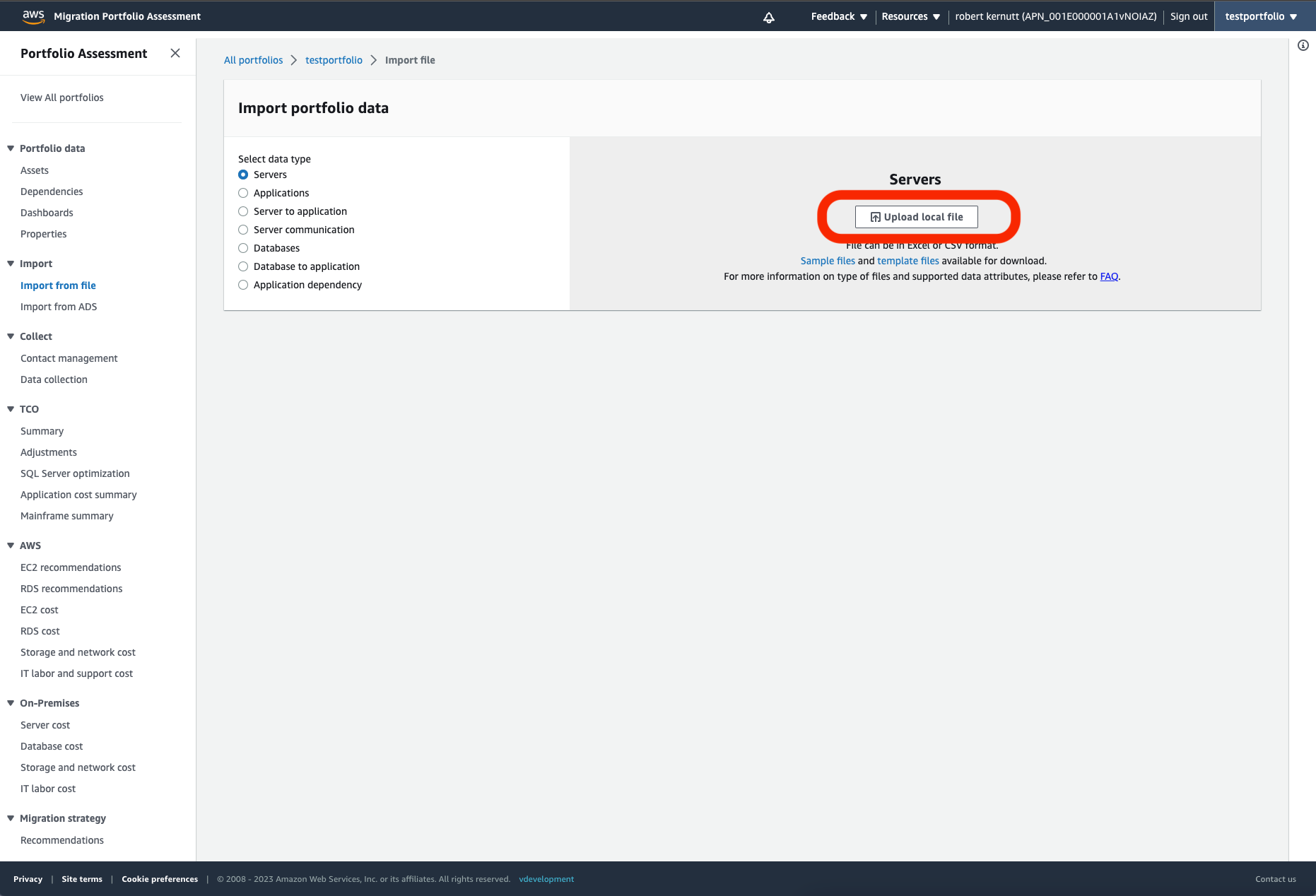

Before you can do any analysis, you will need to import your data. Select Import from file.

Leave the data type as Servers and upload the .csv file you downloaded from Kibana earlier.

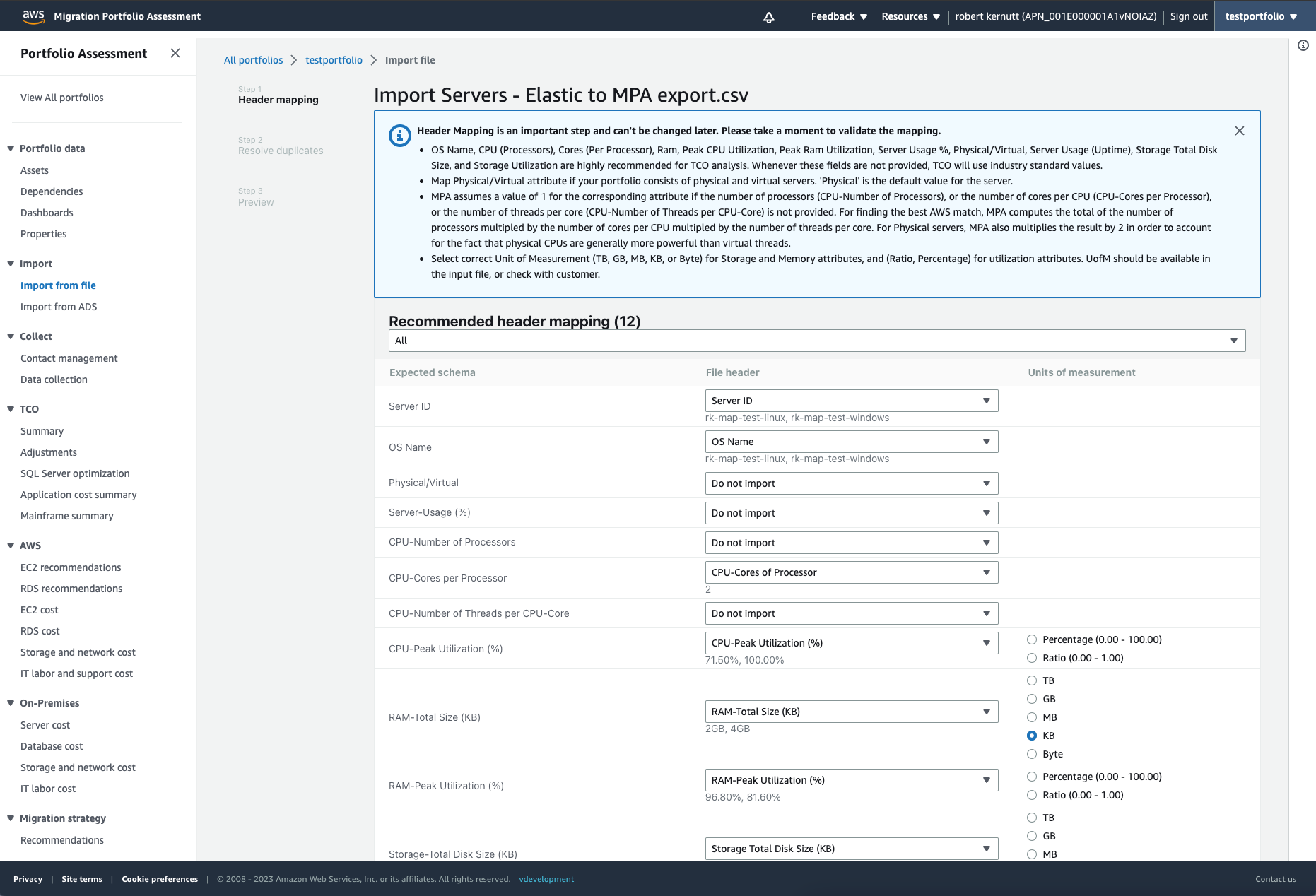

Go through the list of mappings and make sure you select the correct unit of measurement. To keep this simple, the field names in the Elastic export match the field names in MPA.
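When choosing units, check what each exported column actually contains. If a column holds raw bytes, note that GB (10^9 bytes) and GiB (2^30 bytes) differ by about 7%, so pick the matching unit or convert first. The helpers below are a sketch for spot-checking values; which unit each column uses depends on the imported Lens objects, and the function names are hypothetical.

```bash
#!/usr/bin/env bash
# Convert a byte count to decimal gigabytes (10^9 bytes).
bytes_to_gb()  { awk -v b="$1" 'BEGIN { printf "%.2f\n", b / 1000000000 }'; }
# Convert a byte count to binary gibibytes (2^30 bytes).
bytes_to_gib() { awk -v b="$1" 'BEGIN { printf "%.2f\n", b / 1073741824 }'; }
```

For example, 17179869184 bytes is 16.00 GiB but 17.18 GB.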

Scroll to the bottom of the list and then select Next.

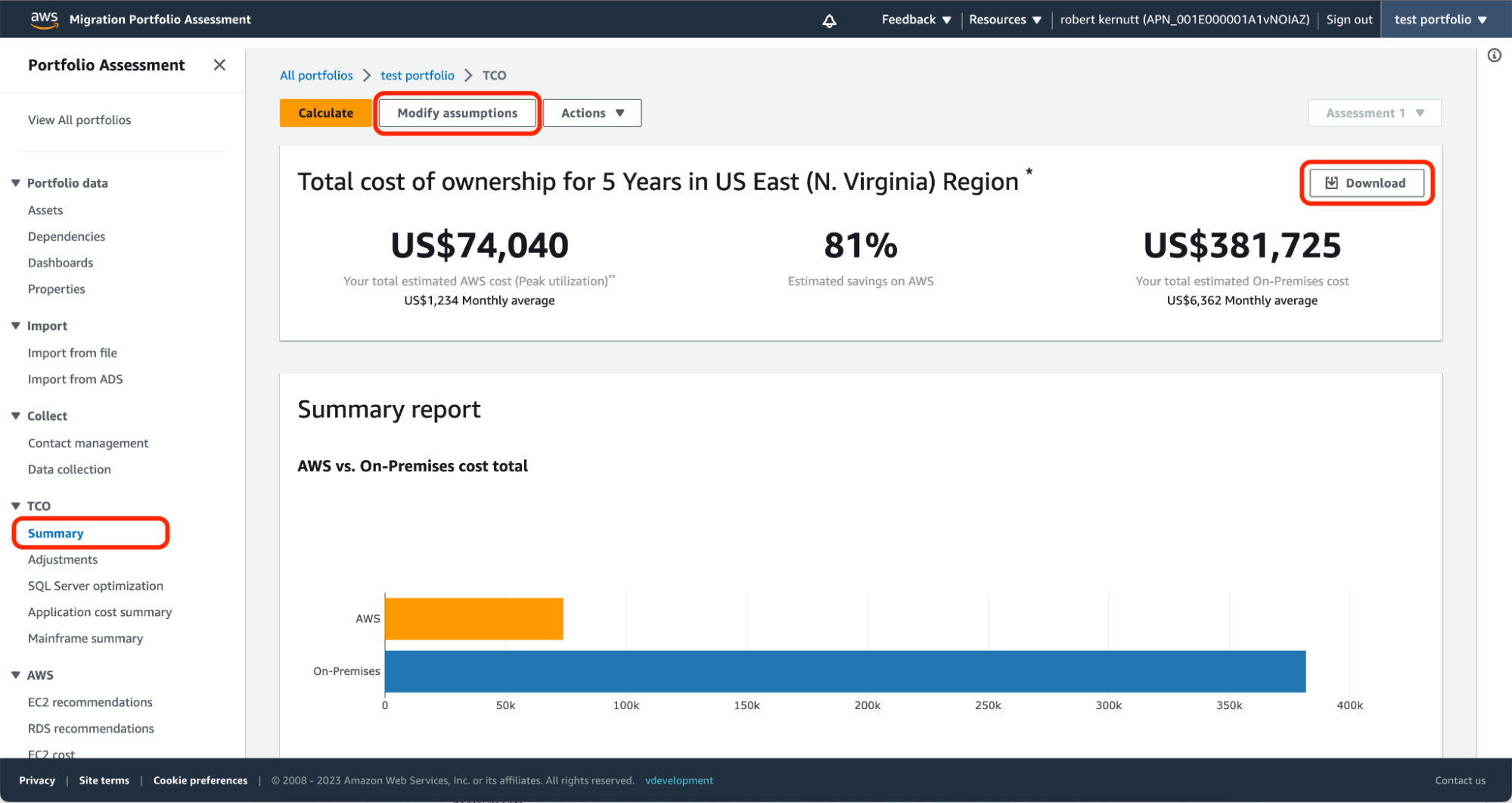

Now select TCO>Summary to see your TCO analysis. You can use the Modify Assumptions option to review the default assumptions and adjust to your own values. You can then download the TCO using the Download option on the top right.

Conclusion

With the combined power of Elastic, ElastiFlow, and Kyndryl to support them, organizations can make their migration to the cloud seamless and efficient, with an answer to the challenges that arise at each phase. They can begin their cloud journey confident that it will allow them to reap the full benefits of their new cloud environment and set them, their employees, and their customers up for even greater success.

Ready to get started? Begin a free 14-day trial of Elastic Cloud to create your own deployment.