Agent Builder is now generally available (GA). Get started with an Elastic Cloud Trial, and check out the documentation for Agent Builder here.

Introduction

This article will show you how to build an AI agent for HR using GPT-OSS and Elastic Agent Builder. The agent can answer your questions without sending data to OpenAI, Anthropic, or any external service.

We’ll use LM Studio to serve GPT-OSS locally and connect it to Elastic Agent Builder.

By the end of this article, you’ll have a custom AI agent that can answer natural language questions about your employee data while maintaining full control over your information and model.

Prerequisites

For this article, you need:

- Elastic Cloud Hosted 9.2, Serverless, or a local deployment

- A machine with 32 GB of RAM (recommended; 16 GB minimum for GPT-OSS 20B)

- LM Studio installed

- Docker Desktop installed

Why use GPT-OSS?

With a local LLM, you decide where the model is deployed and can fine-tune it to fit your needs, all while keeping control over the data you share with it. And, of course, you don’t pay a license fee to an external provider.

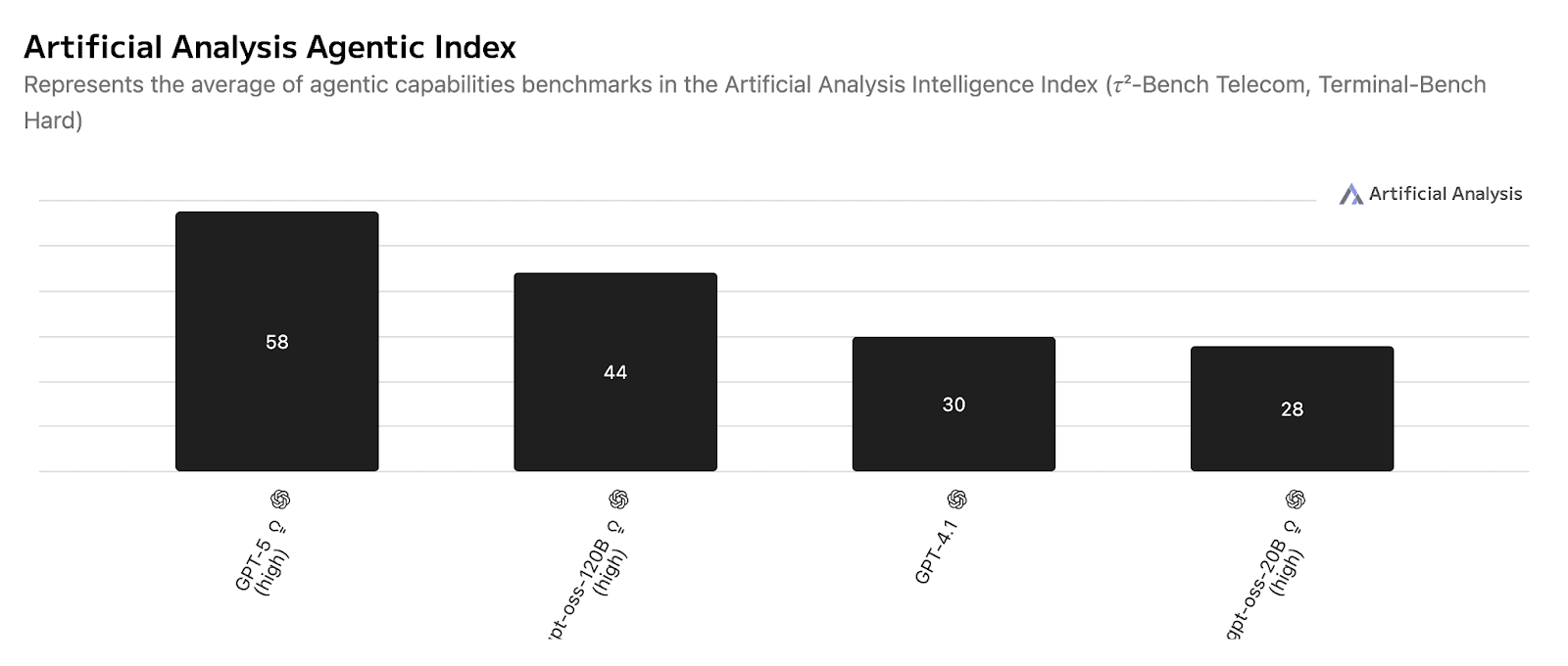

OpenAI released GPT-OSS on August 5, 2025, as part of its commitment to the open model ecosystem.

The 20B parameter model offers:

- Tool use capabilities

- Efficient inference

- OpenAI SDK compatibility

- Compatibility with agentic workflows

Benchmark comparison:

Benchmark source.

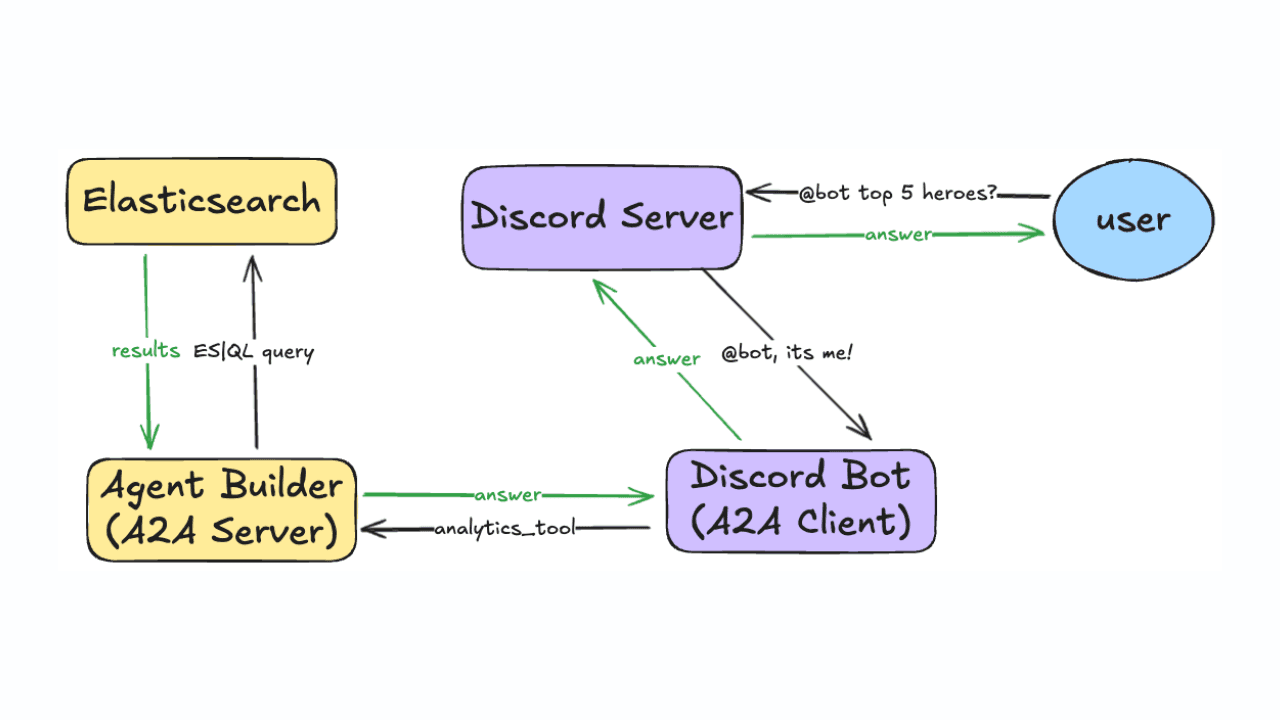

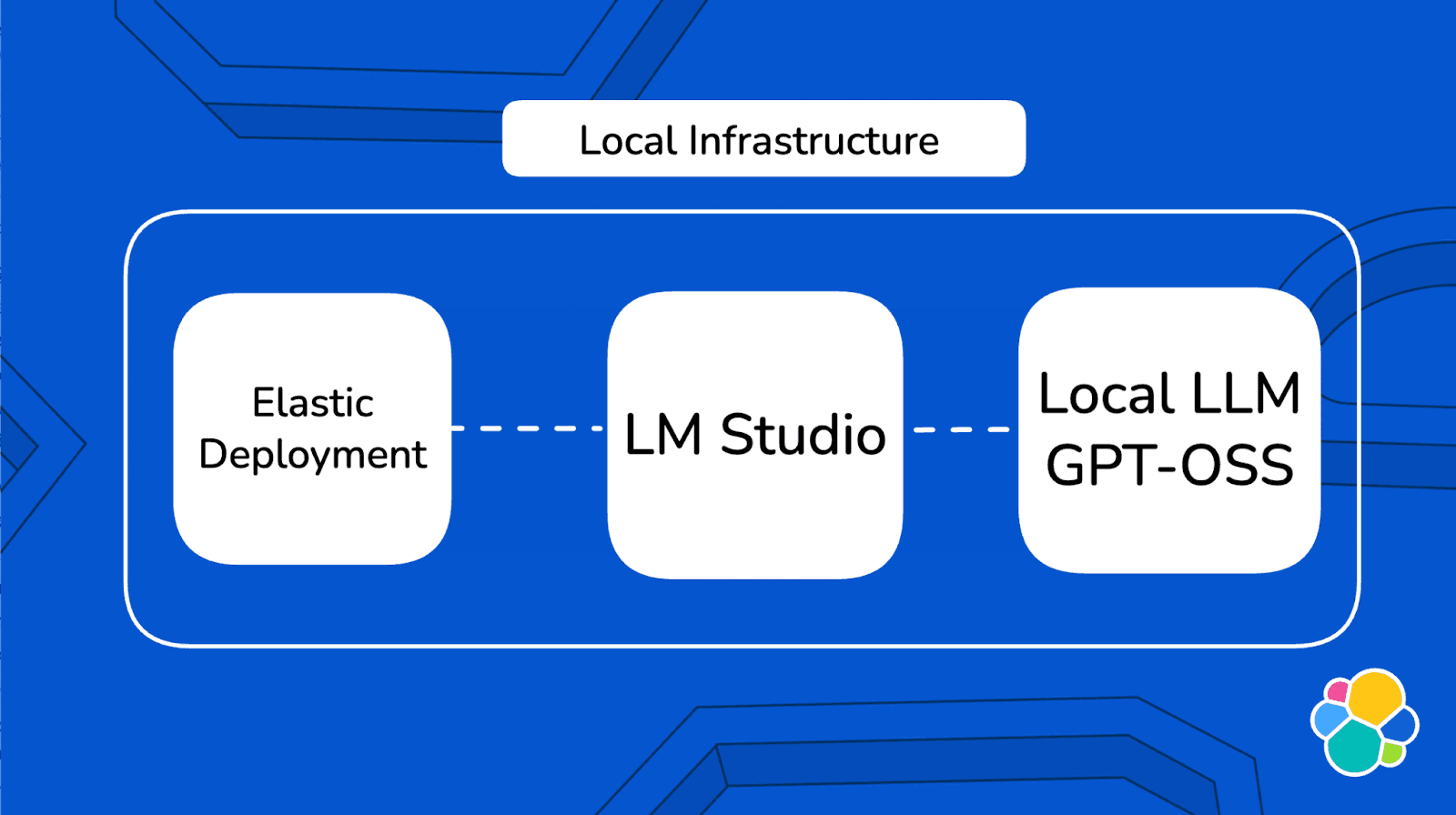

Solution architecture

The architecture runs entirely on your local machine. Elastic (running in Docker) communicates directly with your local LLM through LM Studio, and the Elastic Agent Builder uses this connection to create custom AI agents that can query your employee data.

For more details, refer to this documentation.
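To make the network path concrete, here is a small sketch of which address each component uses to reach LM Studio. It assumes LM Studio's default port (1234) and Docker Desktop's host.docker.internal hostname; the helper name lm_studio_chat_url is hypothetical.

```python
# Minimal sketch: which URL reaches LM Studio's OpenAI-compatible server.
# Assumptions: LM Studio's default port (1234) and Docker Desktop's
# host.docker.internal hostname. The helper name is hypothetical.

LM_STUDIO_PORT = 1234


def lm_studio_chat_url(from_docker_container: bool) -> str:
    """Return the chat-completions URL for LM Studio as seen by the caller."""
    # Inside a container, "localhost" refers to the container itself, so
    # Kibana (running in Docker) must use host.docker.internal to reach
    # services on the host machine, such as LM Studio.
    host = "host.docker.internal" if from_docker_container else "localhost"
    return f"http://{host}:{LM_STUDIO_PORT}/v1/chat/completions"


if __name__ == "__main__":
    print("From a terminal on the host:", lm_studio_chat_url(False))
    print("From Kibana inside Docker:  ", lm_studio_chat_url(True))
```

This distinction is why the connector configured later points at host.docker.internal instead of localhost.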

Building an AI agent for HR: Steps

We’ll divide the implementation into five steps:

- Configure LM Studio with a local model

- Deploy local Elastic with Docker

- Create the OpenAI connector in Elastic

- Upload employee data to Elasticsearch

- Build and test your AI agent

Step 1: Configure LM Studio with GPT-OSS 20B

LM Studio is a user-friendly application that allows you to run large language models locally on your computer. It provides an OpenAI-compatible API server, making it easy to integrate with tools like Elastic without a complex setup process. For more details, refer to the LM Studio Docs.

First, download and install LM Studio from the official website. Once installed, open the application.

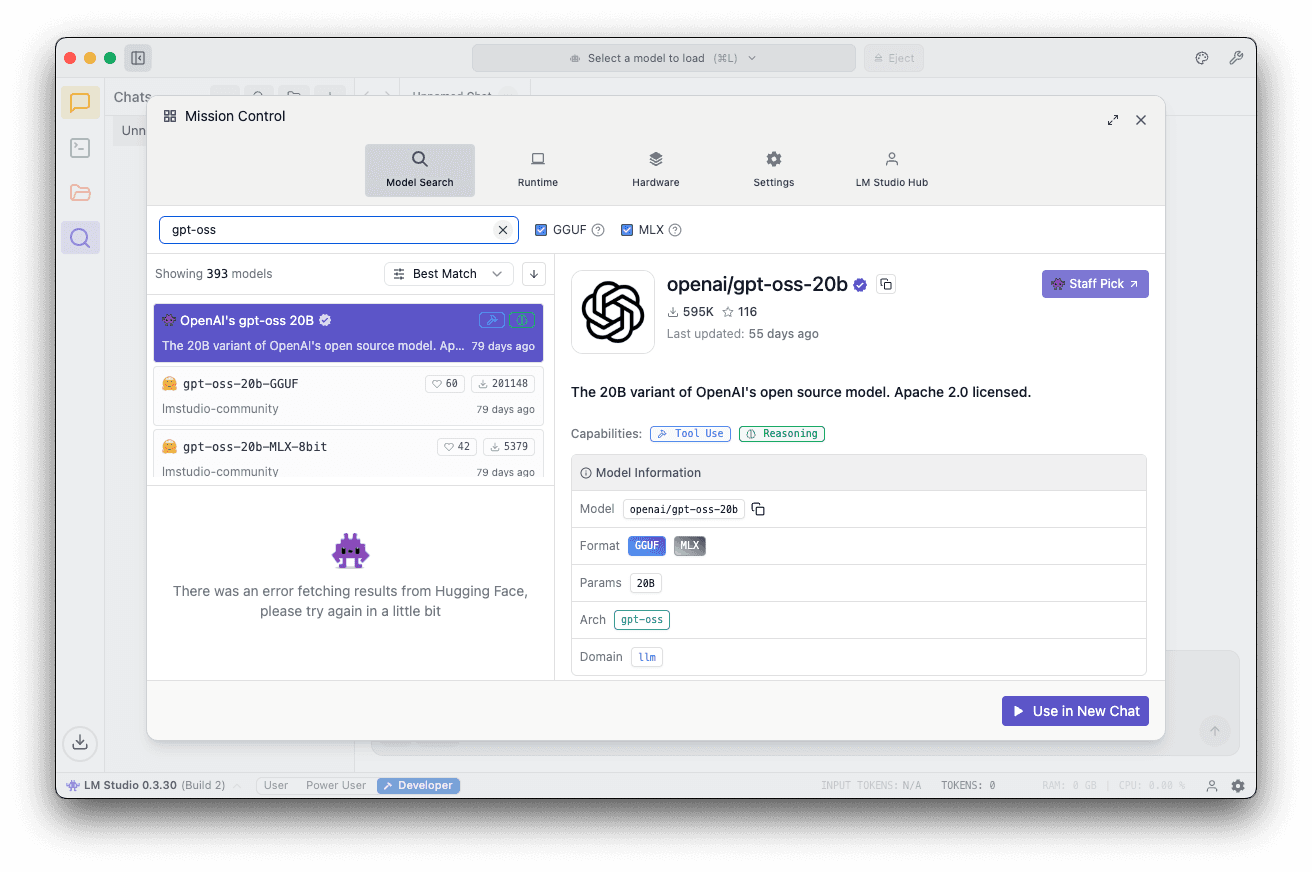

In the LM Studio interface:

- Go to the search tab and search for “GPT-OSS”

- Select openai/gpt-oss-20b from OpenAI

- Click Download

The model is approximately 12.10 GB, so the download may take a few minutes, depending on your internet connection.

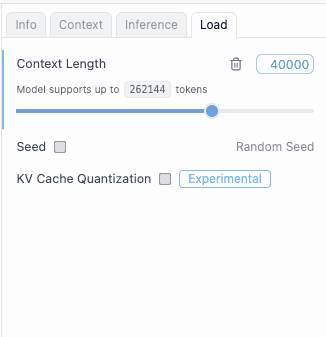

Once the model is downloaded:

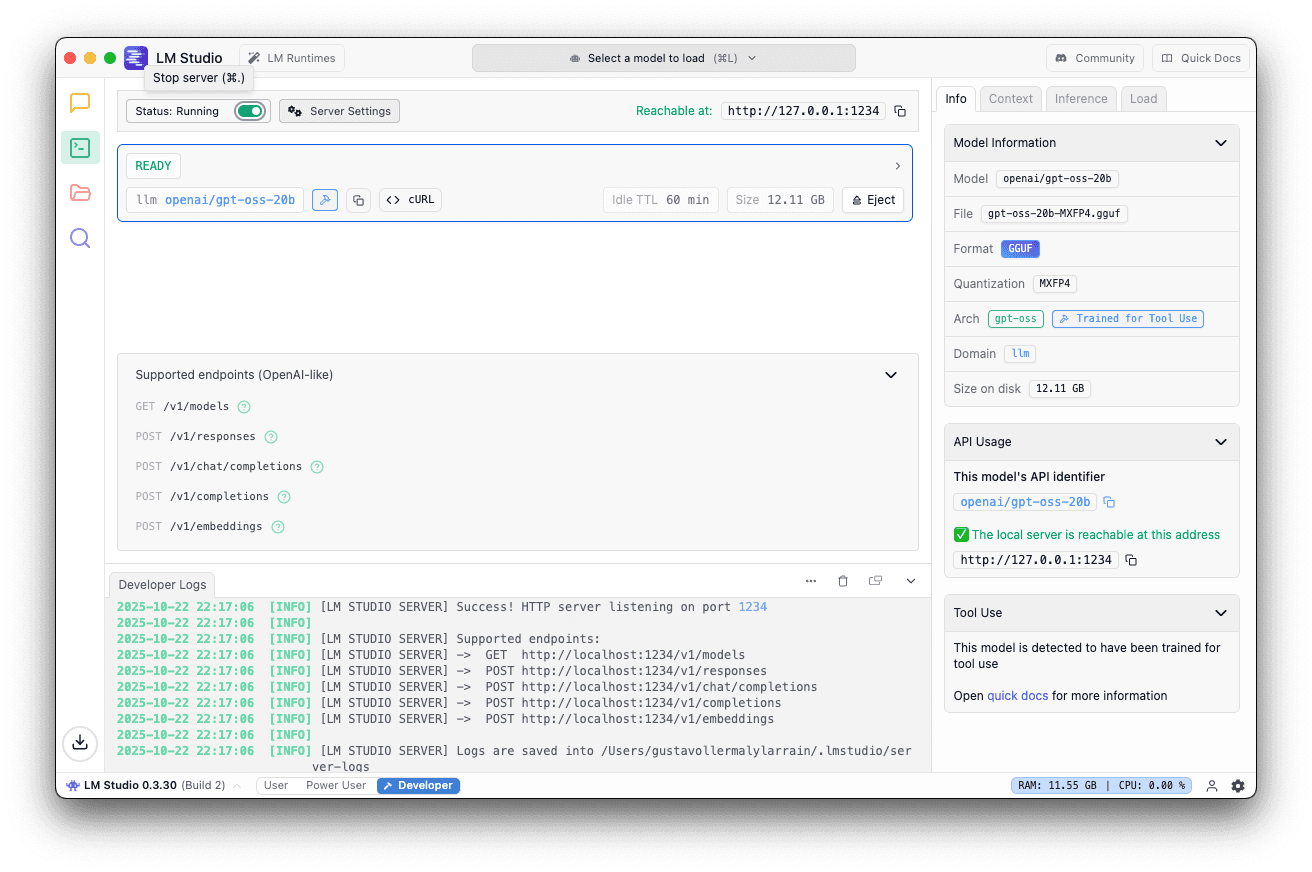

1. Go to the local server tab

2. Select openai/gpt-oss-20b

3. Use the default port 1234

4. On the right panel, go to Load and set the Context Length to 40K or higher

5. Click Start Server

Once the server is running, LM Studio exposes its OpenAI-compatible API on port 1234.
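Before wiring LM Studio into Elastic, you can check the server from your own machine. The following is a minimal sketch using only Python's standard library; it assumes the default address http://localhost:1234 and the model ID openai/gpt-oss-20b, and the helper names are hypothetical.

```python
# Minimal sketch: send one chat request to LM Studio's local server.
# Assumes the server runs on localhost:1234 with openai/gpt-oss-20b loaded.
import json
import urllib.request
from typing import Any

LM_STUDIO_URL = "http://localhost:1234/v1/chat/completions"
MODEL = "openai/gpt-oss-20b"


def build_chat_request(prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat-completions request."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        LM_STUDIO_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response: dict[str, Any]) -> str:
    """Pull the assistant's text out of a chat-completions response body."""
    return response["choices"][0]["message"]["content"]


def ask(prompt: str) -> str:
    """Send the request and return the reply (requires the server to be running)."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=120) as resp:
        return extract_reply(json.load(resp))
```

With the server started, calling ask("Say hello in one sentence.") should print the model's reply; building the request separately from sending it keeps the payload easy to inspect.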

Step 2: Deploy Local Elastic with Docker

Now we’ll set up Elasticsearch and Kibana locally using Docker. Elastic provides a convenient script that handles the entire setup process. For more details, refer to the official documentation.

Run the start-local script

Execute the following command in your terminal:

curl -fsSL https://elastic.co/start-local | sh

This script will:

- Download and configure Elasticsearch and Kibana

- Start both services using Docker Compose

- Automatically activate a 30-day Platinum trial license

Expected output

Wait for the script to finish, then save the password and API key it prints; you’ll need them to access Kibana.
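If you want to confirm Elasticsearch is reachable before opening Kibana, the sketch below builds an authenticated request with the API key from the terminal output. It assumes start-local's default Elasticsearch address, http://localhost:9200; the helper names are hypothetical.

```python
# Minimal sketch: call the local Elasticsearch API with the key printed by start-local.
# Assumption: Elasticsearch listens on http://localhost:9200 (start-local's default).
import json
import urllib.request
from typing import Any, Optional

ES_URL = "http://localhost:9200"


def build_es_request(path: str, api_key: str, method: str = "GET",
                     body: Optional[dict[str, Any]] = None) -> urllib.request.Request:
    """Build (but do not send) a request authenticated with an encoded API key."""
    headers = {"Authorization": f"ApiKey {api_key}"}
    data = None
    if body is not None:
        headers["Content-Type"] = "application/json"
        data = json.dumps(body).encode("utf-8")
    return urllib.request.Request(f"{ES_URL}{path}", data=data,
                                  headers=headers, method=method)


def cluster_version(api_key: str) -> str:
    """GET / returns cluster info, including the version number."""
    with urllib.request.urlopen(build_es_request("/", api_key), timeout=10) as resp:
        return json.load(resp)["version"]["number"]
```

Calling cluster_version with the saved key should return the version string of the cluster that start-local installed.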

Access Kibana

Open your browser and navigate to:

http://localhost:5601

Log in with the username elastic and the password shown in the terminal output.

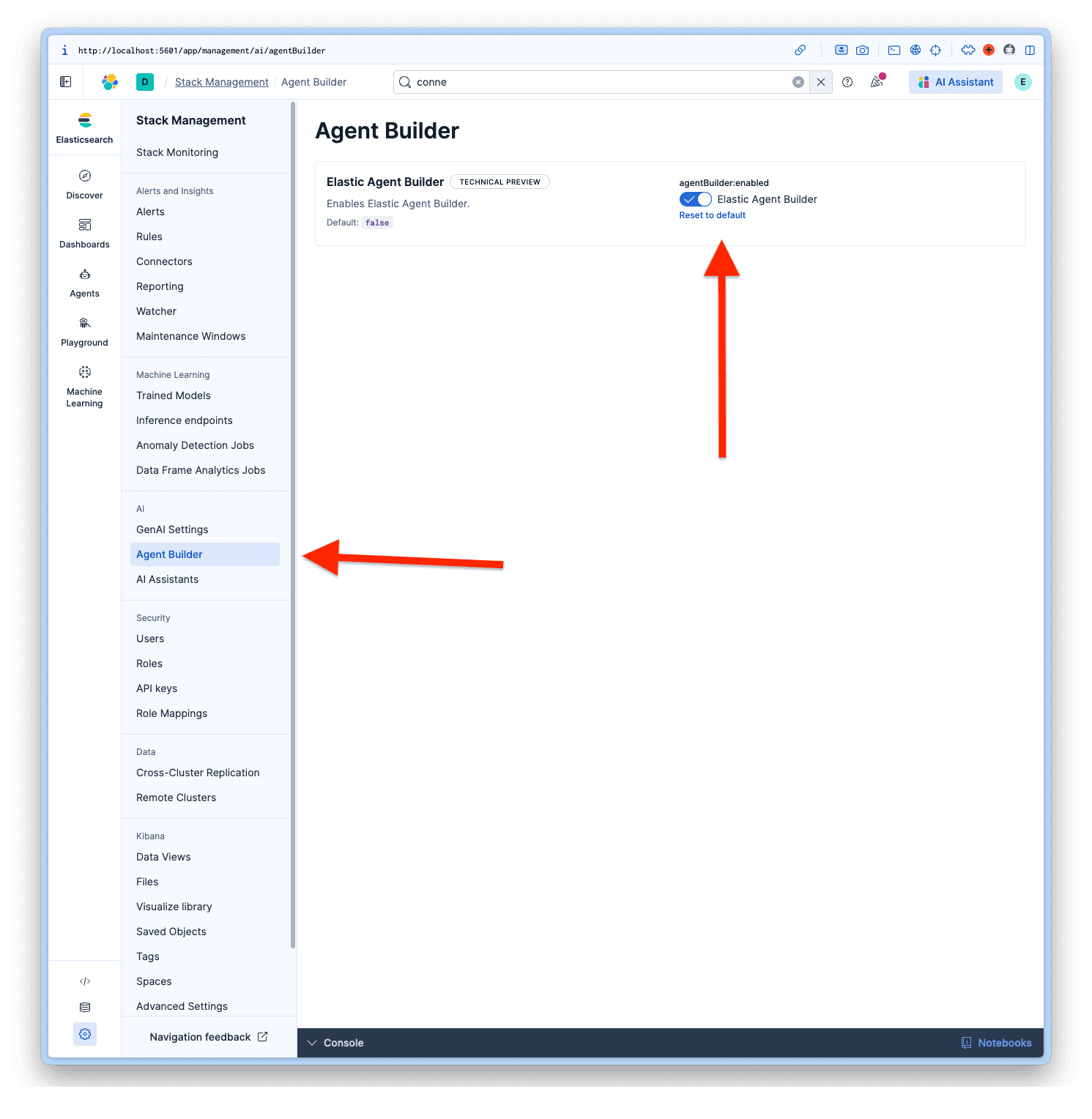

Enable Agent Builder

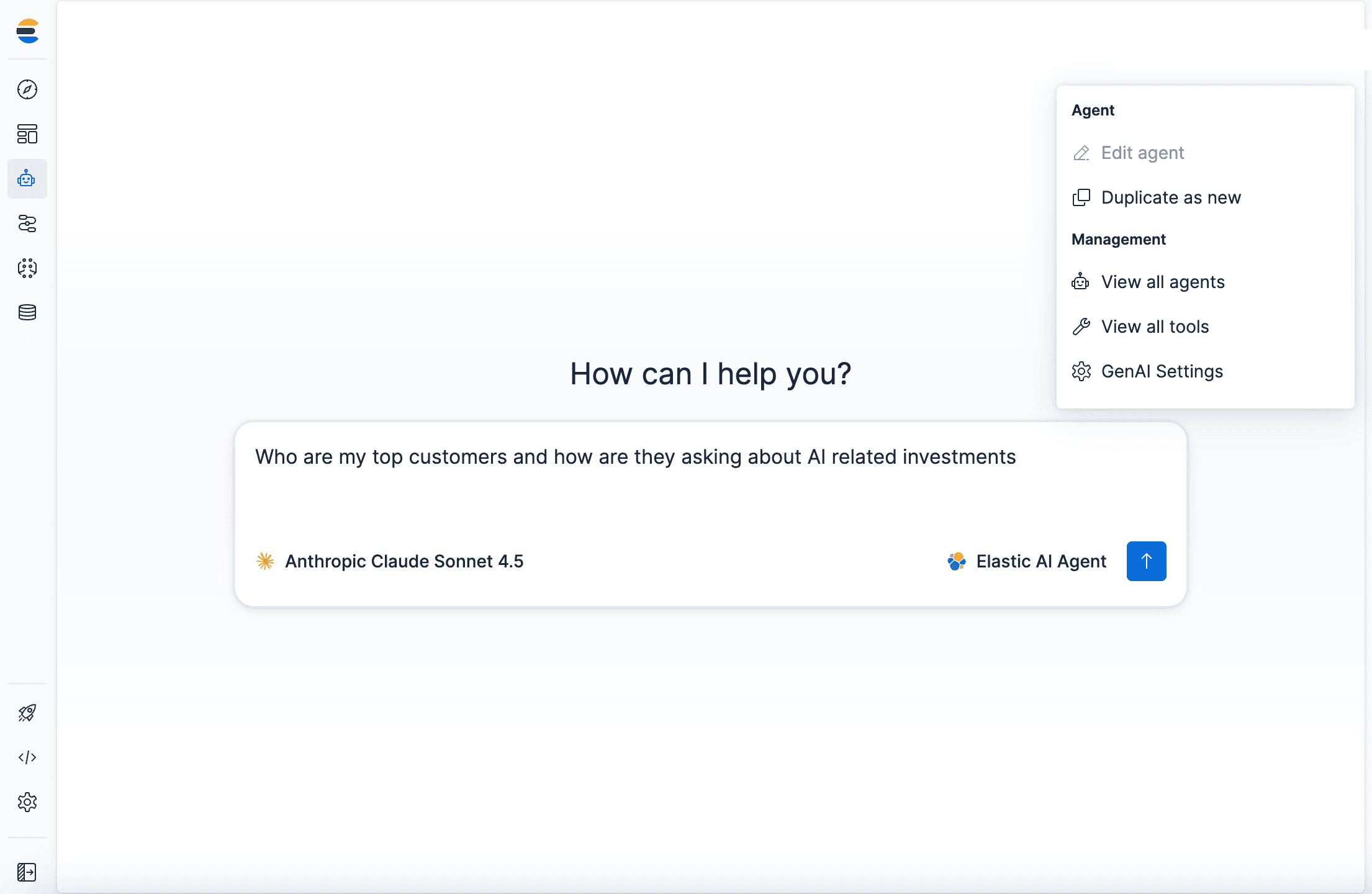

Once logged in to Kibana, navigate to Management > AI > Agent Builder and activate the Agent Builder.

Step 3: Create the OpenAI connector in Elastic

Now we’ll configure Elastic to use your local LLM.

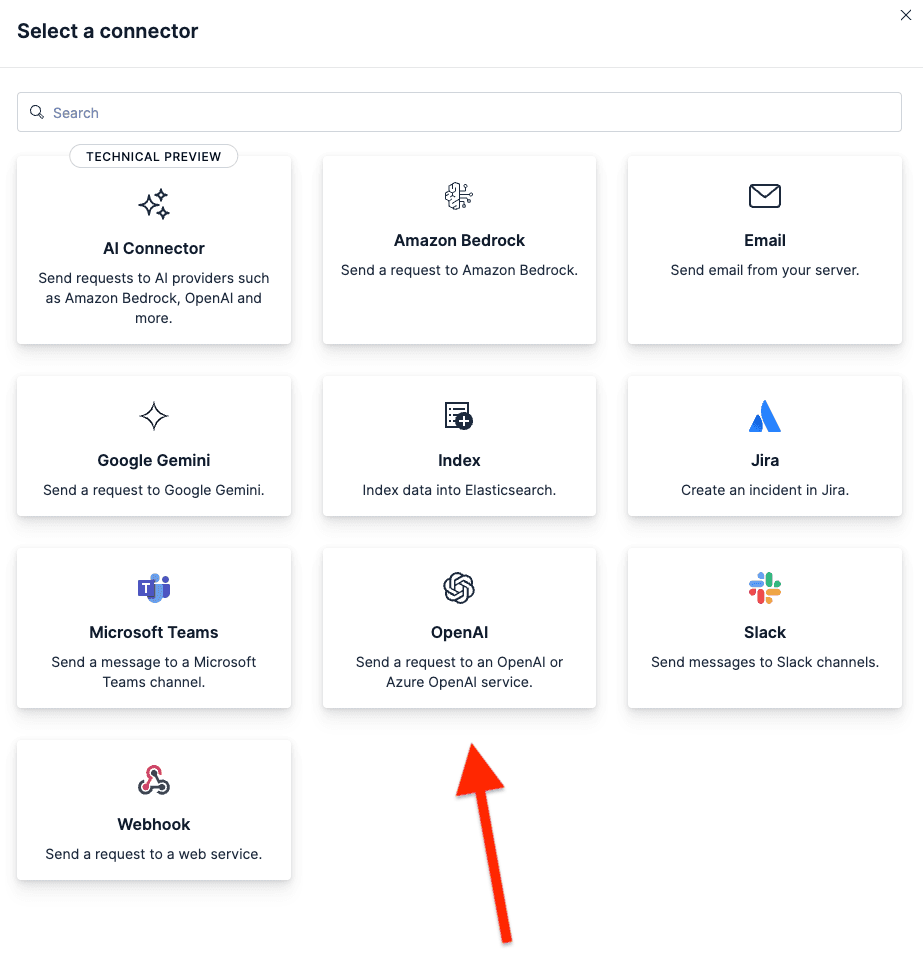

Access Connectors

- In Kibana, go to Project Settings > Management

- Under Alerts and Insights, select Connectors

- Click Create Connector

Configure the connector

Select OpenAI from the list of connectors. LM Studio exposes an OpenAI-compatible API, so this connector works with it.

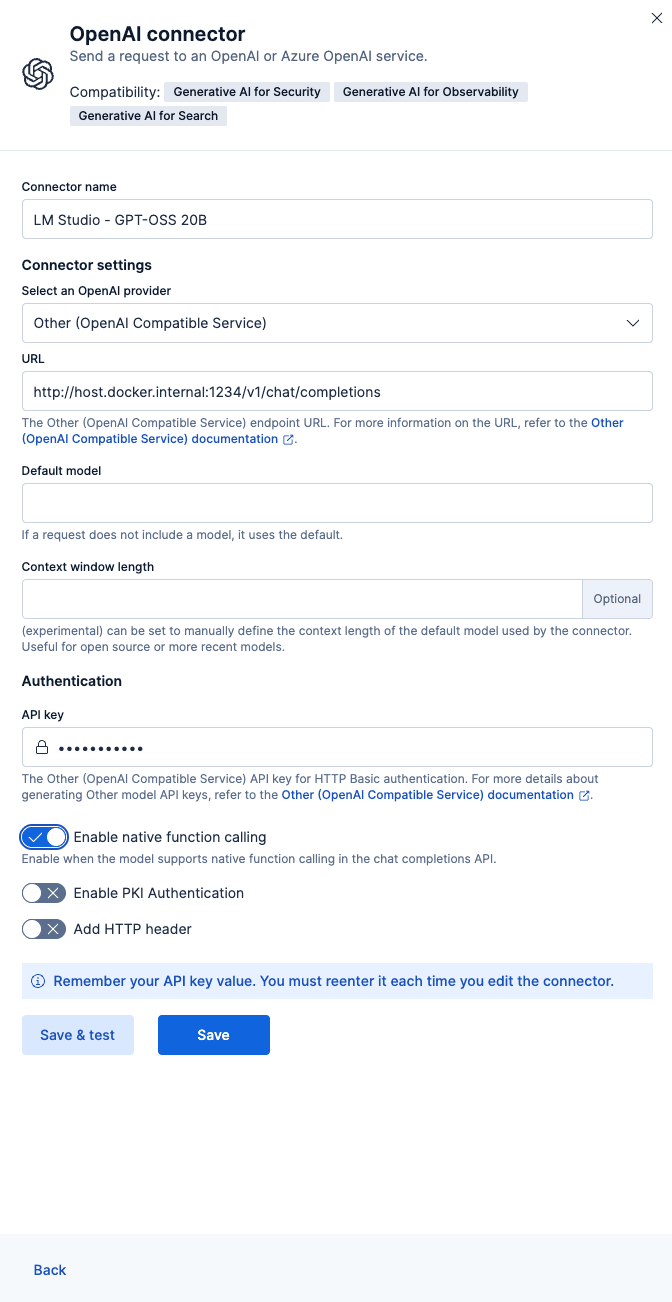

Fill in the fields with these values:

- Connector name: LM Studio - GPT-OSS 20B

- Select an OpenAI provider: Other (OpenAI Compatible Service)

- URL: http://host.docker.internal:1234/v1/chat/completions (Kibana runs inside Docker, so it reaches LM Studio on your host machine through host.docker.internal rather than localhost)

- Default model: openai/gpt-oss-20b

- API Key: testkey-123 (any value works, because the LM Studio server doesn't require authentication)

Important: Toggle ON “Enable native function calling”; Agent Builder requires it to work properly. If it is off, you’ll get a No tool calls found in the response error.

To finish the configuration, click Save & test.

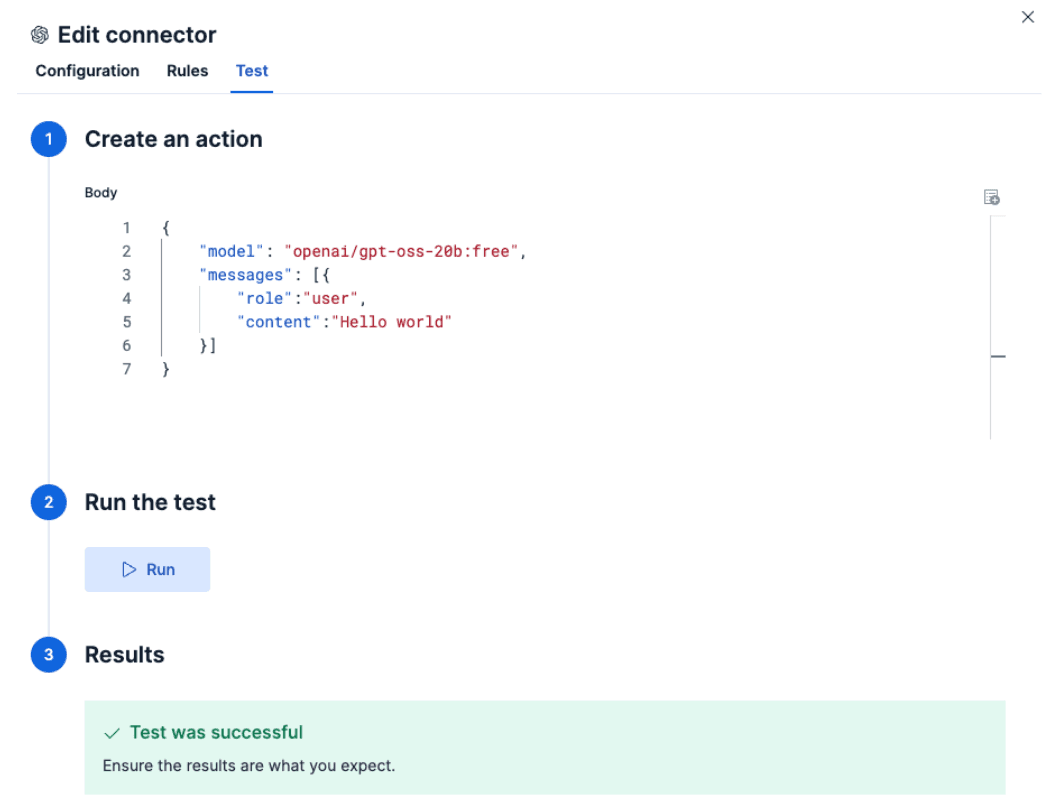

Test the connection

Elastic should automatically test the connection. If everything is configured correctly, you’ll see a success message along with the model’s response.

Step 4: Upload employee data to Elasticsearch

Now we’ll upload the HR employee dataset to demonstrate how the agent works with sensitive data. The dataset is fictional; I generated it for this article.

Dataset structure
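As an illustration only, here is a hypothetical shape for one employee record. The field names and the example values are made up for this sketch and may not match the article's dataset.

```python
# Hypothetical shape of one employee document; the real dataset's fields may differ.
from typing import TypedDict


class Employee(TypedDict):
    employee_id: str
    name: str
    department: str
    job_title: str
    location: str
    hire_date: str            # ISO 8601 date, e.g. "2021-03-15"
    salary: int
    performance_review: str   # free text, a natural fit for semantic search


# A fictional example record, made up for illustration.
EXAMPLE: Employee = {
    "employee_id": "EMP-0001",
    "name": "Jane Doe",
    "department": "Engineering",
    "job_title": "Senior Backend Developer",
    "location": "Madrid",
    "hire_date": "2021-03-15",
    "salary": 72000,
    "performance_review": "Consistently delivers reliable services and mentors new hires.",
}
```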

Create the index with mappings

First, create the index with explicit mappings. Some key fields use the semantic_text type, which enables semantic search on those fields.
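A minimal sketch of such mappings, using hypothetical field names and a hypothetical index name (hr-employees). With no inference_id set, semantic_text fields fall back to the deployment's default inference endpoint; check the semantic_text documentation for your version.

```python
# Minimal sketch of index mappings for hypothetical employee fields.
import json

INDEX_NAME = "hr-employees"  # hypothetical index name

INDEX_BODY = {
    "mappings": {
        "properties": {
            "employee_id": {"type": "keyword"},
            "name": {"type": "text"},
            "department": {"type": "keyword"},
            "job_title": {"type": "semantic_text"},
            "location": {"type": "keyword"},
            "hire_date": {"type": "date"},
            "salary": {"type": "integer"},
            "performance_review": {"type": "semantic_text"},
        }
    }
}


def semantic_fields(body: dict) -> list[str]:
    """List the fields mapped as semantic_text."""
    props = body["mappings"]["properties"]
    return sorted(name for name, spec in props.items() if spec["type"] == "semantic_text")


def as_dev_tools_request() -> str:
    """Render the create-index request in the Kibana Dev Tools console format."""
    return f"PUT {INDEX_NAME}\n{json.dumps(INDEX_BODY, indent=2)}"


if __name__ == "__main__":
    print(as_dev_tools_request())
```

Keyword fields such as department stay exact-match for filters and aggregations, while the semantic_text fields handle meaning-based queries.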

Index with Bulk API

Copy the dataset’s bulk request into Dev Tools in Kibana and run it.
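If your records live in Python rather than in a ready-made request, the sketch below builds the newline-delimited body that the _bulk API expects: one action line followed by one source line per document. The helper name is hypothetical.

```python
# Minimal sketch: build an NDJSON body for the _bulk API from Python dicts.
import json
from typing import Any, Iterable, Optional


def build_bulk_body(index: str, docs: Iterable[dict[str, Any]],
                    id_field: Optional[str] = None) -> str:
    lines = []
    for doc in docs:
        meta: dict[str, Any] = {"_index": index}
        if id_field is not None:
            # Reuse a business key as the document _id so re-runs overwrite, not duplicate.
            meta["_id"] = str(doc[id_field])
        lines.append(json.dumps({"index": meta}))  # action line
        lines.append(json.dumps(doc))              # document source line
    # The bulk API requires the body to end with a newline.
    return "\n".join(lines) + "\n"
```

In Dev Tools, paste the output under POST _bulk; over HTTP, send it to /_bulk with Content-Type: application/x-ndjson.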

Verify the data

Run a query to verify that the documents were indexed.
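For example, you could check the document count and then try a semantic query. The sketch below only builds the request path and body; the index name, field name, and helper names are hypothetical, and it uses the semantic query type for semantic_text fields.

```python
# Minimal sketch: request pieces for checking the indexed data.
from typing import Any

INDEX_NAME = "hr-employees"  # hypothetical


def count_path(index: str = INDEX_NAME) -> str:
    """Path for GET <index>/_count."""
    return f"/{index}/_count"


def semantic_search_body(field: str, text: str, size: int = 5) -> dict[str, Any]:
    """Search body using a semantic query against a semantic_text field."""
    return {"size": size, "query": {"semantic": {"field": field, "query": text}}}


def hit_sources(response: dict[str, Any]) -> list[dict[str, Any]]:
    """Extract the stored documents from a search response."""
    return [hit["_source"] for hit in response["hits"]["hits"]]
```

A request such as GET hr-employees/_search with semantic_search_body("performance_review", "mentors new colleagues") should return records whose reviews are related in meaning, not just in wording.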

Step 5: Build and test your AI agent

With everything configured, it’s time to build a custom AI agent using Elastic Agent Builder. For more details, refer to the Elastic documentation.

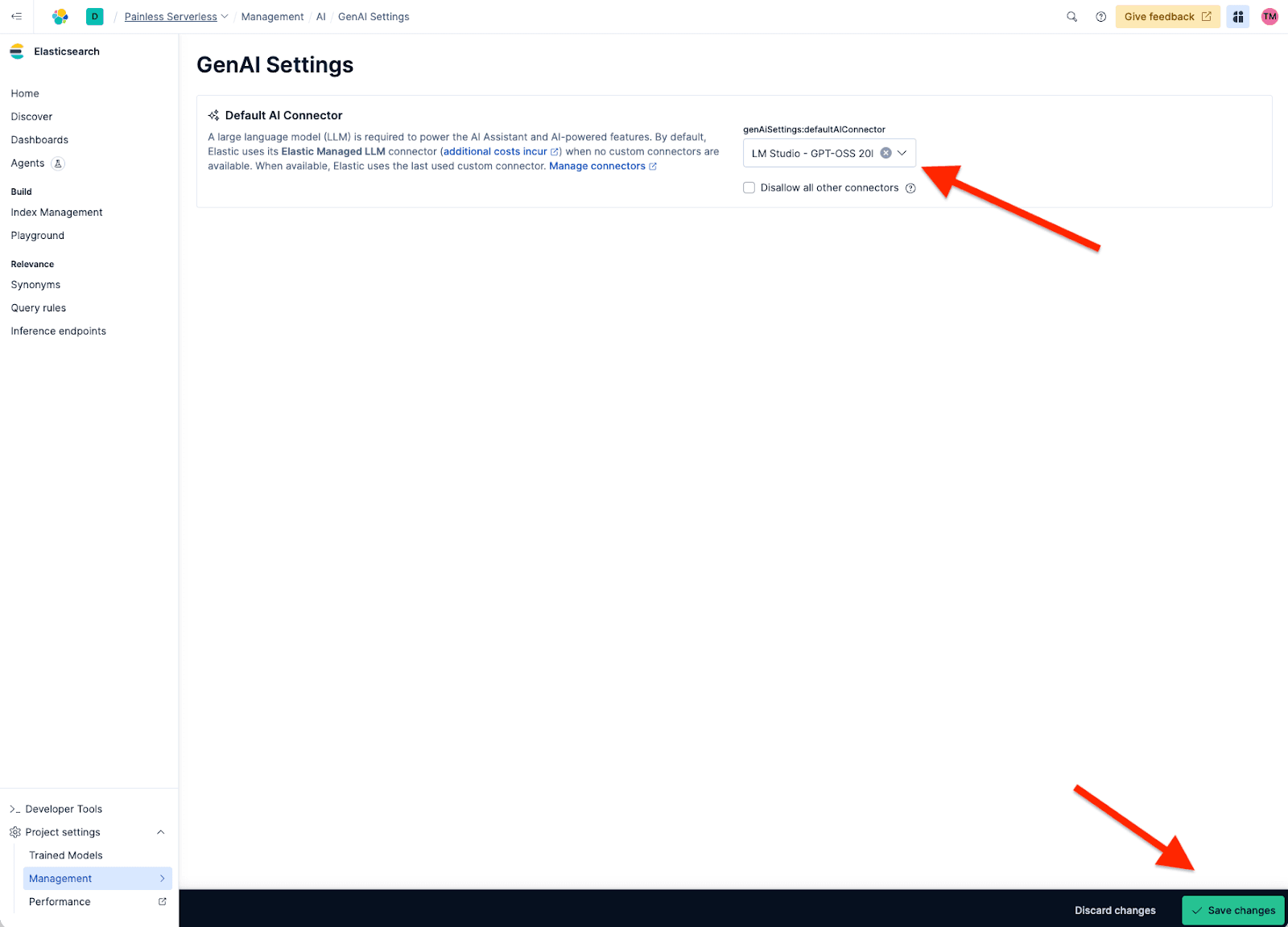

Add the connector

Before creating the agent, set Agent Builder to use the LM Studio - GPT-OSS 20B connector, because the default is the Elastic Managed LLM. Go to Project Settings > Management > GenAI Settings, select the connector you created, and click Save.

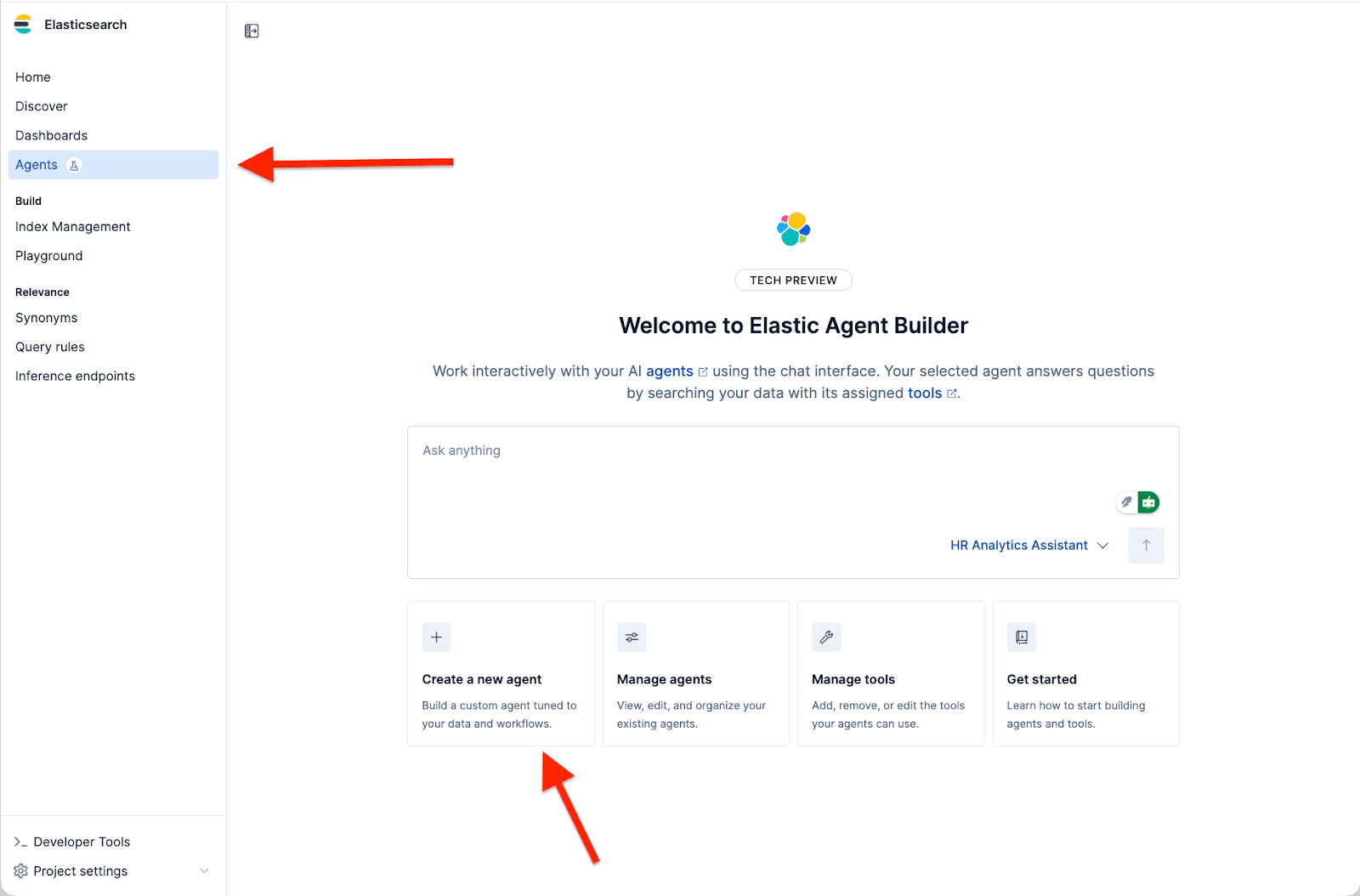

Access Agent Builder

- Go to Agents

- Click on Create a new agent

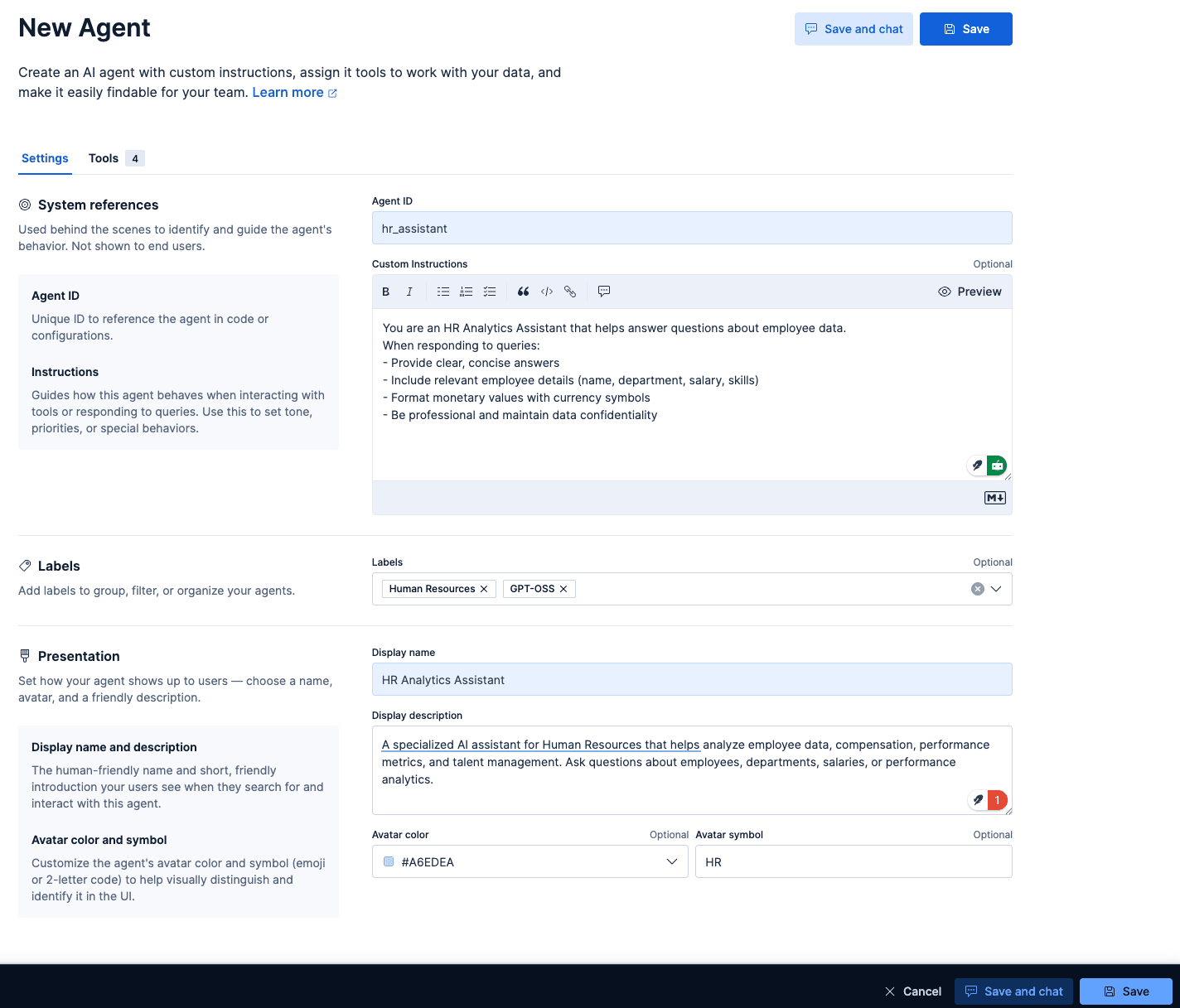

Configure the agent

To create a new agent, the required fields are the Agent ID, Display Name, and Display Description.

There are also more customization options. Custom Instructions guide how the agent behaves and interacts with its tools, similar to a system prompt for this specific agent. Labels help organize your agents, and you can also set an avatar color and avatar symbol.

Based on the dataset, I chose these values for the agent:

Agent ID: hr_assistant

Custom instructions:

Labels: Human Resources and GPT-OSS

Display name: HR Analytics Assistant

Display description:

With all the fields filled in, click Save to create the agent.

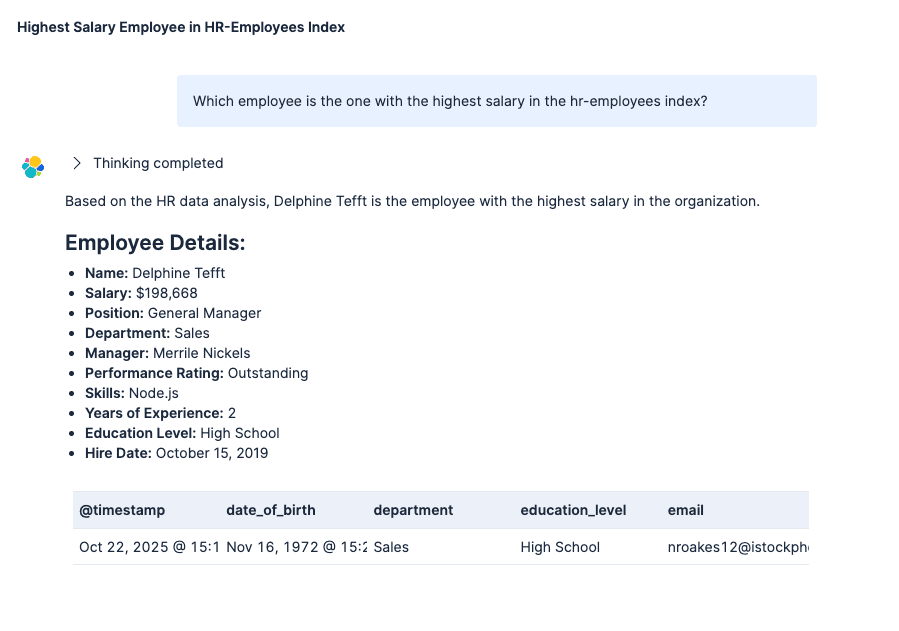

Test the agent

Now you can ask natural language questions about your employee data, and GPT-OSS 20B will understand the intent and generate an appropriate response.

Prompt:

Answer:

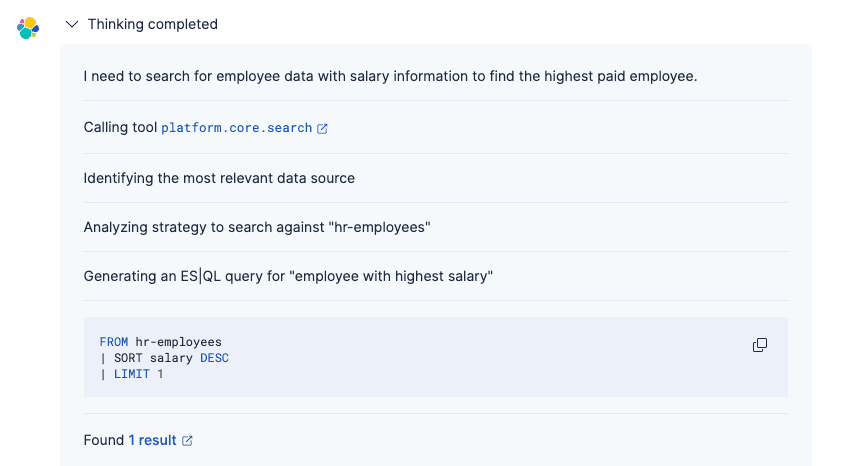

The agent’s process was:

1. Understand your question using the GPT-OSS connector

2. Generate the appropriate Elasticsearch query (using the built-in tools or custom ES|QL)

3. Retrieve matching employee records

4. Present results in natural language with proper formatting
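To illustrate steps 2 and 3, here is the kind of ES|QL query an agent might generate for a question like "How many people work in each department, and what do they earn on average?", plus a helper that turns the response of the ES|QL endpoint (POST /_query) into records. The index and field names are hypothetical, and the actual queries depend on the tools the agent chooses.

```python
# Minimal sketch: an ES|QL query of the kind an agent might generate, plus a
# helper that converts the _query response into records.
# Index and field names are hypothetical.
from typing import Any

ESQL_QUERY = """
FROM hr-employees
| STATS headcount = COUNT(*), avg_salary = AVG(salary) BY department
| SORT headcount DESC
| LIMIT 10
"""


def esql_request_body(query: str) -> dict[str, str]:
    """Body for POST /_query."""
    return {"query": query.strip()}


def esql_rows(response: dict[str, Any]) -> list[dict[str, Any]]:
    """ES|QL returns column metadata and row values separately; zip them together."""
    names = [column["name"] for column in response["columns"]]
    return [dict(zip(names, row)) for row in response["values"]]
```

Records in this shape are straightforward for the model to summarize in natural language, which is the final step above.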

Unlike traditional lexical search, the agent powered by GPT-OSS understands intent and context, making it easier to find information without knowing exact field names or query syntax. For more details on the agent's thinking process, refer to this article.

Conclusion

In this article, we built a custom AI agent using Elastic’s Agent Builder to connect to the OpenAI GPT-OSS model running locally. By deploying both Elastic and the LLM on your local machine, this architecture allows you to leverage generative AI capabilities while maintaining full control over your data, all without sending information to external services.

We used GPT-OSS 20B as an experiment, but the officially recommended models for Elastic Agent Builder are referenced here. If you need more advanced reasoning capabilities, there's also the 120B parameter variant that performs better for complex scenarios, though it requires a higher-spec machine to run locally. For more details, refer to the official OpenAI documentation.