Observability AI Assistant


This functionality is in technical preview and may be changed or removed in a future release. Elastic will work to fix any issues, but features in technical preview are not subject to the support SLA of official GA features.

The Observability AI Assistant is a large language model (LLM) integration that helps Elastic Observability users by adding context, explaining errors and messages, and suggesting remediation in the Observability interface. You can connect the Observability AI Assistant to either OpenAI or Microsoft Azure OpenAI Service.


The Observability AI Assistant is in technical preview, and its capabilities are still developing. Use it with care, because the reliability of its responses might vary. Always cross-verify any advice it returns to ensure accurate threat detection and response, insights, and query generation.

Also, the data you provide to the Observability AI Assistant is not anonymized and is stored and processed by the third-party AI provider. This includes any data used in conversations for analysis or context, such as alert or event data, detection rule configurations, and queries. Therefore, be careful about sharing any confidential or sensitive details while using this feature.

Requirements

You need the following to use the Observability AI Assistant:

- Elastic Stack version 8.9 or later.

- An account with a third-party generative AI provider, which the Observability AI Assistant uses to generate responses. The Observability AI Assistant supports the `gpt-3.5-turbo` and `gpt-4` models of OpenAI and Azure OpenAI Service.
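You can check the 8.9 minimum version with a small script before you enable the feature. The following Python sketch is illustrative only. The function names are hypothetical, and it assumes you have already fetched the version string, for example from the `version.number` field of the Kibana status API (`GET /api/status`). It compares versions as integer tuples rather than strings, because a plain string comparison would rank "8.10" below "8.9".

```python
from __future__ import annotations

import re

# Minimum Elastic Stack version for the Observability AI Assistant (from Requirements).
MINIMUM_VERSION = (8, 9)


def parse_version(version: str) -> tuple[int, int, int]:
    """Parse 'major.minor[.patch]', ignoring suffixes such as '-SNAPSHOT'."""
    match = re.match(r"(\d+)\.(\d+)(?:\.(\d+))?", version.strip())
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    major, minor, patch = match.groups()
    return int(major), int(minor), int(patch or 0)


def supports_ai_assistant(version: str) -> bool:
    """Compare numerically so that 8.10 is correctly treated as newer than 8.9."""
    major, minor, _ = parse_version(version)
    return (major, minor) >= MINIMUM_VERSION


def version_from_status(status: dict) -> str:
    # ASSUMPTION: `status` is the parsed JSON body of Kibana's status API
    # (GET /api/status), which reports the version under version.number.
    return status["version"]["number"]


if __name__ == "__main__":
    for v in ["8.8.2", "8.9.0", "8.10.1", "9.0.0-SNAPSHOT"]:
        print(v, supports_ai_assistant(v))
```

For example, `supports_ai_assistant("8.10.1")` returns `True` and `supports_ai_assistant("8.8.2")` returns `False`.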

In Elastic Cloud or Elastic Cloud Enterprise, if you have Machine Learning autoscaling enabled, Machine Learning nodes are started when you use the knowledge base and AI Assistant. Using these features therefore incurs additional costs.

Your data and the AI Assistant

Elastic does not use customer data for model training. This includes anything you send the model, such as alert or event data, detection rule configurations, queries, and prompts. However, any data you provide to the AI Assistant will be processed by the third-party provider you chose when setting up the OpenAI connector as part of the assistant setup.

Elastic does not control third-party tools, and assumes no responsibility or liability for their content, operation, or use, nor for any loss or damage that may arise from your using such tools. Please exercise caution when using AI tools with personal, sensitive, or confidential information. Any data you submit may be used by the provider for AI training or other purposes. There is no guarantee that the provider will keep any information you provide secure or confidential. You should familiarize yourself with the privacy practices and terms of use of any generative AI tools prior to use.

Set up the Observability AI Assistant

Complete the following steps to use the Observability AI Assistant:

1. Create an API key with your AI provider to authenticate requests from the Observability AI Assistant. You’ll use this key in the next step. Refer to your provider’s documentation (OpenAI or Azure OpenAI Service) for generating API keys.

2. Update Kibana’s configuration settings to include the Observability AI Assistant feature flag and additional information about your AI setup.

   1. Navigate to the Kibana configuration settings according to your deployment:

      - Self-managed (on-premises) deployments: Add the configuration to the `kibana.yml` file, which is used to configure Kibana.
      - Elastic Cloud deployments: Use the YAML editor in the Elastic Cloud console to add the configuration to Kibana user settings.

   2. Add one of the following configurations that corresponds to the AI service you’re using:

      - OpenAI: In the following example, `xpack.observability.aiAssistant.provider.openAI.apiKey` is the API key you generated in the first step.

        ```yaml
        xpack.observability.aiAssistant.enabled: true
        xpack.observability.aiAssistant.provider.openAI.apiKey: <insert API key>
        xpack.observability.aiAssistant.provider.openAI.model: <insert model name, e.g. gpt-4>
        ```

      - Azure OpenAI Service: In the following example, `xpack.observability.aiAssistant.provider.azureOpenAI.apiKey` is the API key you generated in the first step, and `xpack.observability.aiAssistant.provider.azureOpenAI.resourceName` is the name of the Azure OpenAI resource used to deploy your model.

        ```yaml
        xpack.observability.aiAssistant.enabled: true
        xpack.observability.aiAssistant.provider.azureOpenAI.deploymentId: <insert deployment ID>
        xpack.observability.aiAssistant.provider.azureOpenAI.resourceName: <insert resource name>
        xpack.observability.aiAssistant.provider.azureOpenAI.apiKey: <insert API key>
        ```

3. If you’re using a self-managed deployment, restart Kibana.
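The OpenAI and Azure OpenAI Service configurations differ only in their provider-specific keys. If you want to generate these settings from a script (for example, for several deployments), the following Python helpers render either block as `kibana.yml` lines. This is a minimal sketch. The helper names are hypothetical and not part of Kibana. The check that limits OpenAI models to `gpt-3.5-turbo` and `gpt-4` is an assumption drawn from the Requirements list.

```python
from __future__ import annotations

import json

PREFIX = "xpack.observability.aiAssistant"
# ASSUMPTION: restrict OpenAI model names to the two listed under Requirements.
SUPPORTED_OPENAI_MODELS = {"gpt-3.5-turbo", "gpt-4"}


def _require(name: str, value: str) -> None:
    """Reject missing or blank values before they reach the Kibana config."""
    if not value or not value.strip():
        raise ValueError(f"{name} must not be empty")


def _render(settings: dict[str, object]) -> str:
    """Render flat Kibana settings as YAML lines (one 'key: value' per line)."""
    lines = []
    for key, value in settings.items():
        if isinstance(value, bool):
            rendered = "true" if value else "false"
        else:
            # A JSON string literal is also a valid YAML double-quoted scalar.
            rendered = json.dumps(str(value))
        lines.append(f"{key}: {rendered}")
    return "\n".join(lines) + "\n"


def render_openai_settings(api_key: str, model: str) -> str:
    """Hypothetical helper: settings for the OpenAI provider."""
    _require("api_key", api_key)
    if model not in SUPPORTED_OPENAI_MODELS:
        raise ValueError(
            f"unsupported model {model!r}; expected one of {sorted(SUPPORTED_OPENAI_MODELS)}"
        )
    return _render({
        f"{PREFIX}.enabled": True,
        f"{PREFIX}.provider.openAI.apiKey": api_key,
        f"{PREFIX}.provider.openAI.model": model,
    })


def render_azure_settings(api_key: str, resource_name: str, deployment_id: str) -> str:
    """Hypothetical helper: settings for Azure OpenAI Service, in the documented key order."""
    for name, value in [
        ("api_key", api_key),
        ("resource_name", resource_name),
        ("deployment_id", deployment_id),
    ]:
        _require(name, value)
    return _render({
        f"{PREFIX}.enabled": True,
        f"{PREFIX}.provider.azureOpenAI.deploymentId": deployment_id,
        f"{PREFIX}.provider.azureOpenAI.resourceName": resource_name,
        f"{PREFIX}.provider.azureOpenAI.apiKey": api_key,
    })


if __name__ == "__main__":
    # Placeholder key for illustration only; never commit a real API key.
    print(render_openai_settings(api_key="sk-example", model="gpt-4"), end="")
```

Running the script prints the three OpenAI settings with the key and model written as double-quoted YAML strings. YAML parses these to the same string values as unquoted text, but quoting avoids surprises if a key happens to look like a number or boolean. You can paste the output into `kibana.yml` or into the Kibana user settings in Elastic Cloud.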

Interact with the Observability AI Assistant

You can find Observability AI Assistant prompts throughout Observability that provide the following information:

- Universal Profiling – explains the most expensive libraries and functions in your fleet and provides optimization suggestions.

- Application performance monitoring (APM) – explains APM errors and provides remediation suggestions.

- Infrastructure Observability – explains the processes running on a host.

- Logs – explains log messages and generates search patterns to find similar issues.

- Alerting – provides possible log spike causes and remediation suggestions.

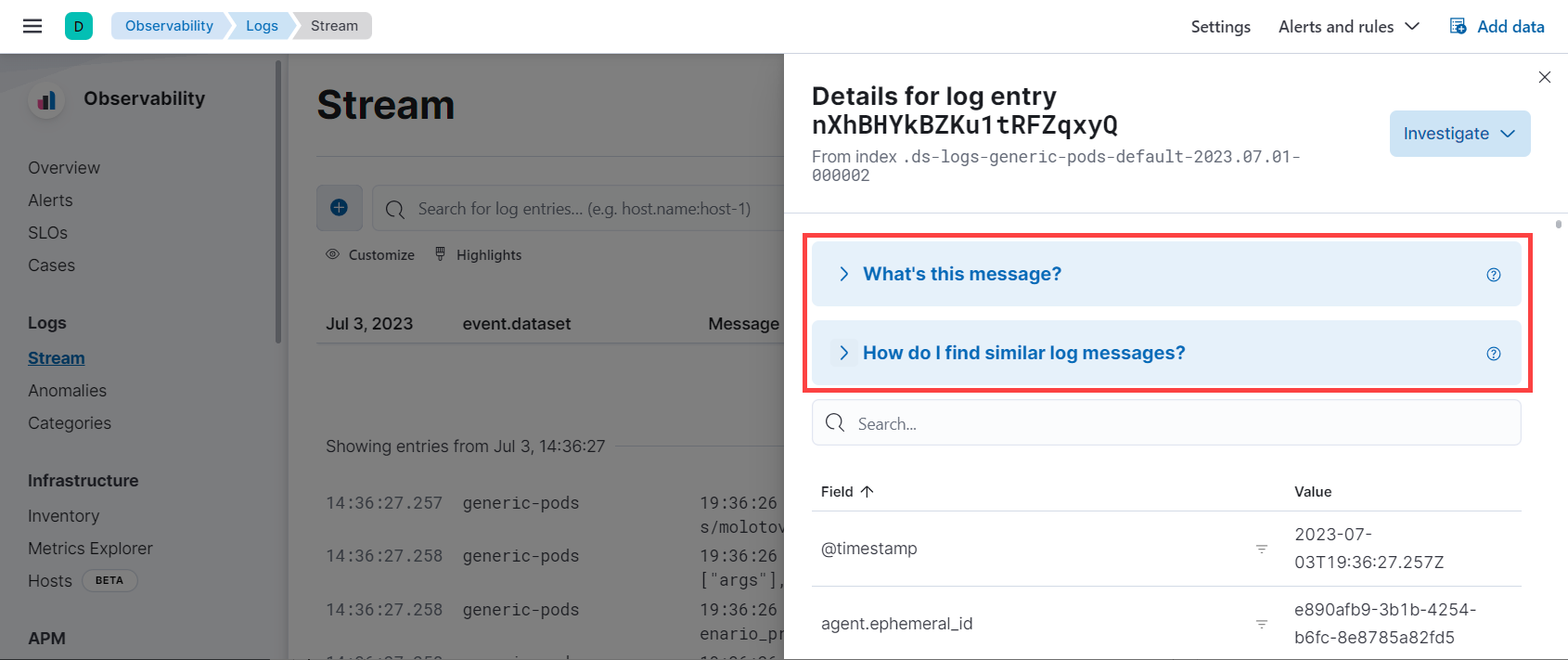

For example, the log details show two prompts: “What’s this message?” and “How do I find similar log messages?”

Clicking a prompt generates a message specific to that log entry.