Quick start


This guide helps you learn how to:

- install and run Elasticsearch and Kibana (using Elastic Cloud or Docker),

- add a simple (non-timestamped) dataset to Elasticsearch,

- run basic searches.

If you’re interested in using Elasticsearch with Python, check out Elastic Search Labs. This is the best place to explore AI-powered search use cases, such as working with embeddings, vector search, and retrieval augmented generation (RAG).

- Tutorial: this walks you through building a complete search solution with Elasticsearch, from the ground up.

- elasticsearch-labs repository: it contains a range of Python notebooks and example apps.

Run Elasticsearch

The simplest way to set up Elasticsearch is to create a managed deployment with Elasticsearch Service on Elastic Cloud. If you prefer to manage your own test environment, install and run Elasticsearch using Docker.

- Sign up for a free trial.

- Follow the on-screen steps to create your first project.

- Click Continue to open Kibana, the user interface for Elastic Cloud.

- Click Explore on my own.

Start a single-node cluster

We’ll use a single-node Elasticsearch cluster in this quick start, which makes sense for testing and development. Refer to Install Elasticsearch with Docker for advanced Docker documentation.

- Run the following Docker commands:

docker network create elastic
docker pull docker.elastic.co/elasticsearch/elasticsearch:8.11.4
docker run --name es01 --net elastic -p 9200:9200 -p 9300:9300 -e "discovery.type=single-node" -t docker.elastic.co/elasticsearch/elasticsearch:8.11.4

- Copy the generated elastic password and enrollment token, which are output to your terminal. You’ll use these to enroll Kibana with your Elasticsearch cluster and log in. These credentials are only shown when you start Elasticsearch for the first time.

We recommend storing the elastic password as an environment variable in your shell. Example:

export ELASTIC_PASSWORD="your_password"

- Copy the http_ca.crt SSL certificate from the container to your local machine.

docker cp es01:/usr/share/elasticsearch/config/certs/http_ca.crt .

- Make a REST API call to Elasticsearch to ensure the Elasticsearch container is running.

curl --cacert http_ca.crt -u elastic:$ELASTIC_PASSWORD https://localhost:9200

Run Kibana

Kibana is the user interface for Elastic. It’s great for getting started with Elasticsearch and exploring your data. We’ll be using the Dev Tools Console in Kibana to make REST API calls to Elasticsearch.

In a new terminal session, start Kibana and connect it to your Elasticsearch container:

docker pull docker.elastic.co/kibana/kibana:8.11.4
docker run --name kibana --net elastic -p 5601:5601 docker.elastic.co/kibana/kibana:8.11.4

When you start Kibana, a unique URL is output to your terminal. To access Kibana:

- Open the generated URL in your browser.

- Paste the enrollment token that you copied earlier, to connect your Kibana instance with Elasticsearch.

- Log in to Kibana as the elastic user with the password that was generated when you started Elasticsearch.

Send requests to Elasticsearch

You send data and other requests to Elasticsearch using REST APIs. This lets you interact with Elasticsearch using any client that sends HTTP requests, such as curl. You can also use Kibana’s Console to send requests to Elasticsearch.

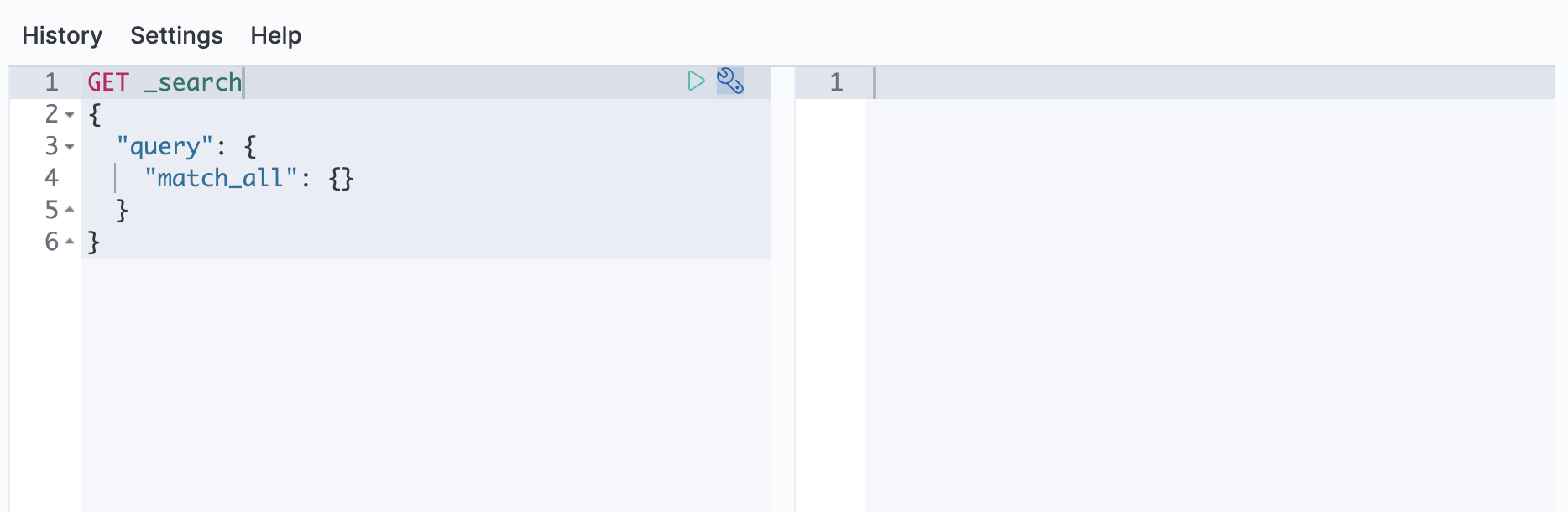

Use Kibana

- Open Kibana’s main menu ("☰" near the Elastic logo) and go to Dev Tools > Console.

- Run the following test API request in Console:

GET /

The equivalent calls with the PHP, Python, Ruby, Go, and JavaScript clients are:

$response = $client->info();

resp = es.info()
print(resp)

response = client.info
puts response

res, err := es.Info()
fmt.Println(res, err)

const response = await client.info()
console.log(response)

Use curl

To communicate with Elasticsearch using curl or another client, you need your cluster’s endpoint.

- Open Kibana’s main menu and click Manage this deployment.

- From your deployment menu, go to the Elasticsearch page. Click Copy endpoint.

- To submit an example API request, run the following curl command in a new terminal session. Replace <password> with the password for the elastic user. Replace <elasticsearch_endpoint> with your endpoint.

curl -u elastic:<password> <elasticsearch_endpoint>/

Use curl

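If you’re running Elasticsearch locally with Docker, run the following curl command in a new terminal session to submit an example API request. It uses the http_ca.crt certificate and the ELASTIC_PASSWORD environment variable from the setup steps.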

curl --cacert http_ca.crt -u elastic:$ELASTIC_PASSWORD https://localhost:9200
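The same authenticated request can be made from any HTTP client. As a minimal sketch using only the Python standard library, the helpers below mirror the curl command for the local Docker setup. The names build_request and get_cluster_info are illustrative and not part of any Elastic client. The script assumes that http_ca.crt is in the current directory and that ELASTIC_PASSWORD is set as described earlier, and it skips the network call otherwise.

```python
import base64
import json
import os
import ssl
import urllib.request


def build_request(endpoint, username, password):
    """Build a GET request carrying HTTP basic auth, like `curl -u user:password`."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(endpoint, headers={"Authorization": f"Basic {token}"})


def get_cluster_info(endpoint, username, password, ca_cert_path):
    """Send the request, trusting only the given CA certificate, like `curl --cacert`."""
    context = ssl.create_default_context(cafile=ca_cert_path)
    request = build_request(endpoint, username, password)
    with urllib.request.urlopen(request, context=context) as response:
        return json.loads(response.read().decode("utf-8"))


# Assumed setup: ELASTIC_PASSWORD is exported and http_ca.crt was copied locally.
password = os.environ.get("ELASTIC_PASSWORD")
if password and os.path.exists("http_ca.crt"):
    info = get_cluster_info("https://localhost:9200", "elastic", password, "http_ca.crt")
    print(info)
else:
    print("Set ELASTIC_PASSWORD and copy http_ca.crt to run the request.")
```

Keeping the request construction separate from the network call makes it possible to inspect the headers without a running cluster.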

Add data

You add data to Elasticsearch as JSON objects called documents. Elasticsearch stores these documents in searchable indices.

Add a single document

Submit the following indexing request to add a single document to the books index.

The request automatically creates the index.

resp = client.index(

index="books",

body={

"name": "Snow Crash",

"author": "Neal Stephenson",

"release_date": "1992-06-01",

"page_count": 470,

},

)

print(resp)

response = client.index(

index: 'books',

body: {

name: 'Snow Crash',

author: 'Neal Stephenson',

release_date: '1992-06-01',

page_count: 470

}

)

puts response

const response = await client.index({

index: 'books',

document: {

name: 'Snow Crash',

author: 'Neal Stephenson',

release_date: '1992-06-01',

page_count: 470,

}

})

console.log(response)

POST books/_doc

{"name": "Snow Crash", "author": "Neal Stephenson", "release_date": "1992-06-01", "page_count": 470}

The response includes metadata that Elasticsearch generates for the document, including a unique _id for the document within the index.


Add multiple documents

Use the _bulk endpoint to add multiple documents in one request. Bulk data must be newline-delimited JSON (NDJSON). Each line must end in a newline character (\n), including the last line.
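To make the NDJSON requirement concrete, here is a small sketch that serializes a list of documents into a bulk request body by hand. The helper name build_bulk_body is hypothetical. The official clients build this payload for you, so this is only meant to show the line layout: one action line followed by one document line, each terminated by a newline.

```python
import json


def build_bulk_body(index_name, documents):
    """Return an NDJSON string: one action line plus one source line per document."""
    lines = []
    for doc in documents:
        # Action line: index the document on the next line into index_name.
        lines.append(json.dumps({"index": {"_index": index_name}}))
        # Source line: the document itself, serialized on a single line.
        lines.append(json.dumps(doc))
    # Every line, including the last one, ends with a newline character.
    return "".join(line + "\n" for line in lines)


books = [
    {"name": "Revelation Space", "author": "Alastair Reynolds",
     "release_date": "2000-03-15", "page_count": 585},
    {"name": "Fahrenheit 451", "author": "Ray Bradbury",
     "release_date": "1953-10-15", "page_count": 227},
]

payload = build_bulk_body("books", books)
print(payload, end="")
```

Because json.dumps does not emit raw newlines by default, each document is guaranteed to stay on a single line.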

resp = client.bulk(

body=[

{"index": {"_index": "books"}},

{

"name": "Revelation Space",

"author": "Alastair Reynolds",

"release_date": "2000-03-15",

"page_count": 585,

},

{"index": {"_index": "books"}},

{

"name": "1984",

"author": "George Orwell",

"release_date": "1985-06-01",

"page_count": 328,

},

{"index": {"_index": "books"}},

{

"name": "Fahrenheit 451",

"author": "Ray Bradbury",

"release_date": "1953-10-15",

"page_count": 227,

},

{"index": {"_index": "books"}},

{

"name": "Brave New World",

"author": "Aldous Huxley",

"release_date": "1932-06-01",

"page_count": 268,

},

{"index": {"_index": "books"}},

{

"name": "The Handmaids Tale",

"author": "Margaret Atwood",

"release_date": "1985-06-01",

"page_count": 311,

},

],

)

print(resp)

response = client.bulk(

body: [

{ index: { _index: 'books' } },

{ name: 'Revelation Space', author: 'Alastair Reynolds', release_date: '2000-03-15', page_count: 585},

{ index: { _index: 'books' } },

{ name: '1984', author: 'George Orwell', release_date: '1985-06-01', page_count: 328},

{ index: { _index: 'books' } },

{ name: 'Fahrenheit 451', author: 'Ray Bradbury', release_date: '1953-10-15', page_count: 227},

{ index: { _index: 'books' } },

{ name: 'Brave New World', author: 'Aldous Huxley', release_date: '1932-06-01', page_count: 268},

{ index: { _index: 'books' } },

{ name: 'The Handmaids Tale', author: 'Margaret Atwood', release_date: '1985-06-01', page_count: 311}

]

)

puts response

const response = await client.bulk({

operations: [

{ index: { _index: 'books' } },

{

name: 'Revelation Space',

author: 'Alastair Reynolds',

release_date: '2000-03-15',

page_count: 585,

},

{ index: { _index: 'books' } },

{

name: '1984',

author: 'George Orwell',

release_date: '1985-06-01',

page_count: 328,

},

{ index: { _index: 'books' } },

{

name: 'Fahrenheit 451',

author: 'Ray Bradbury',

release_date: '1953-10-15',

page_count: 227,

},

{ index: { _index: 'books' } },

{

name: 'Brave New World',

author: 'Aldous Huxley',

release_date: '1932-06-01',

page_count: 268,

},

{ index: { _index: 'books' } },

{

name: 'The Handmaids Tale',

author: 'Margaret Atwood',

release_date: '1985-06-01',

page_count: 311,

}

]

})

console.log(response)

POST /_bulk

{ "index" : { "_index" : "books" } }

{"name": "Revelation Space", "author": "Alastair Reynolds", "release_date": "2000-03-15", "page_count": 585}

{ "index" : { "_index" : "books" } }

{"name": "1984", "author": "George Orwell", "release_date": "1985-06-01", "page_count": 328}

{ "index" : { "_index" : "books" } }

{"name": "Fahrenheit 451", "author": "Ray Bradbury", "release_date": "1953-10-15", "page_count": 227}

{ "index" : { "_index" : "books" } }

{"name": "Brave New World", "author": "Aldous Huxley", "release_date": "1932-06-01", "page_count": 268}

{ "index" : { "_index" : "books" } }

{"name": "The Handmaids Tale", "author": "Margaret Atwood", "release_date": "1985-06-01", "page_count": 311}

You should receive a response indicating there were no errors.

Example response:

{

"errors": false,

"took": 29,

"items": [

{

"index": {

"_index": "books",

"_id": "QklI2IsBaSa7VYx_Qkh-",

"_version": 1,

"result": "created",

"_shards": {

"total": 2,

"successful": 2,

"failed": 0

},

"_seq_no": 1,

"_primary_term": 1,

"status": 201

}

},

{

"index": {

"_index": "books",

"_id": "Q0lI2IsBaSa7VYx_Qkh-",

"_version": 1,

"result": "created",

"_shards": {

"total": 2,

"successful": 2,

"failed": 0

},

"_seq_no": 2,

"_primary_term": 1,

"status": 201

}

},

{

"index": {

"_index": "books",

"_id": "RElI2IsBaSa7VYx_Qkh-",

"_version": 1,

"result": "created",

"_shards": {

"total": 2,

"successful": 2,

"failed": 0

},

"_seq_no": 3,

"_primary_term": 1,

"status": 201

}

},

{

"index": {

"_index": "books",

"_id": "RUlI2IsBaSa7VYx_Qkh-",

"_version": 1,

"result": "created",

"_shards": {

"total": 2,

"successful": 2,

"failed": 0

},

"_seq_no": 4,

"_primary_term": 1,

"status": 201

}

},

{

"index": {

"_index": "books",

"_id": "RklI2IsBaSa7VYx_Qkh-",

"_version": 1,

"result": "created",

"_shards": {

"total": 2,

"successful": 2,

"failed": 0

},

"_seq_no": 5,

"_primary_term": 1,

"status": 201

}

}

]

}
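A bulk request can partially fail, so it helps to check the response programmatically rather than by eye. The sketch below works on a plain dictionary shaped like the response above. The helper name failed_bulk_items is hypothetical, and the failing item is invented for illustration rather than taken from a real run.

```python
def failed_bulk_items(bulk_response):
    """Return a summary of every bulk item whose HTTP status is not 2xx."""
    # The top-level "errors" flag is false when every item succeeded.
    if not bulk_response.get("errors"):
        return []
    failures = []
    for item in bulk_response.get("items", []):
        # Each item has a single key naming the action (here "index").
        for action, result in item.items():
            status = result.get("status", 0)
            if not 200 <= status < 300:
                failures.append({
                    "action": action,
                    "_id": result.get("_id"),
                    "status": status,
                    "error": result.get("error"),
                })
    return failures


# Trimmed from the example response above.
ok_response = {
    "errors": False,
    "took": 29,
    "items": [
        {"index": {"_index": "books", "_id": "QklI2IsBaSa7VYx_Qkh-",
                   "result": "created", "status": 201}},
    ],
}

# Hypothetical failure, invented for illustration only.
failed_response = {
    "errors": True,
    "took": 12,
    "items": [
        {"index": {"_index": "books", "_id": "example-ok",
                   "result": "created", "status": 201}},
        {"index": {"_index": "books", "_id": "example-bad", "status": 400,
                   "error": {"type": "mapper_parsing_exception",
                             "reason": "illustrative failure"}}},
    ],
}

print(failed_bulk_items(ok_response))
print(failed_bulk_items(failed_response))
```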

Search data

Indexed documents are available for search in near real-time.

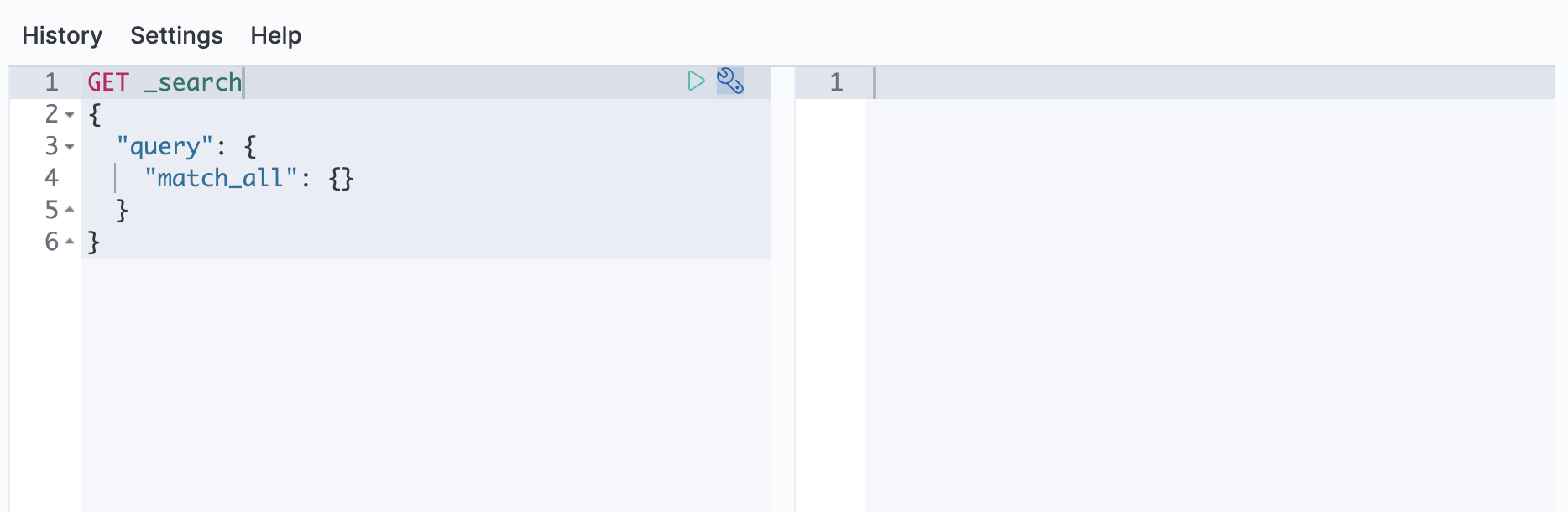

Search all documents

Run the following command to search the books index for all documents:

resp = client.search(

index="books",

)

print(resp)

response = client.search(
  index: 'books'
)
puts response

const response = await client.search({

index: 'books'

})

console.log(response)

GET books/_search

The _source of each hit contains the original JSON object submitted during indexing.
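As a rough sketch of how to get the original documents back out of a search response, the helper below walks the hits.hits array and collects each _source. The function name hit_sources is hypothetical, and the sample response is hand-written with placeholder values for _id and _score. It assumes the response can be indexed like a dictionary.

```python
def hit_sources(search_response):
    """Return the original documents (_source) from a search response."""
    return [hit["_source"] for hit in search_response["hits"]["hits"]]


# Hand-written sample; the _id and _score values are placeholders.
sample_response = {
    "hits": {
        "total": {"value": 1, "relation": "eq"},
        "hits": [
            {
                "_index": "books",
                "_id": "placeholder-id",
                "_score": 1.0,
                "_source": {
                    "name": "Snow Crash",
                    "author": "Neal Stephenson",
                    "release_date": "1992-06-01",
                    "page_count": 470,
                },
            },
        ],
    },
}

for book in hit_sources(sample_response):
    print(book["name"], "by", book["author"])
```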

match query

You can use the match query to search for documents that contain a specific value in a specific field.

This is the standard query for performing full-text search, including fuzzy matching and phrase searches.

Run the following command to search the books index for documents containing brave in the name field:

resp = client.search(

index="books",

body={"query": {"match": {"name": "brave"}}},

)

print(resp)

response = client.search(

index: 'books',

body: { query: { match: { name: 'brave' } } }

)

puts response

const response = await client.search({

index: 'books',

query: {

match: {

name: 'brave'

}

}

})

console.log(response)

GET books/_search

{

"query": {

"match": {

"name": "brave"

}

}

}
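The request body for a match query is plain JSON, so it can be assembled and inspected before it is sent. The sketch below builds the body used above and, optionally, a fuzzy variant using the match query's fuzziness parameter. The helper name match_query is hypothetical, and the misspelled search term is only an illustration.

```python
import json


def match_query(field, text, fuzziness=None):
    """Build a search body with a match query on one field."""
    if fuzziness is None:
        # Short form: {"match": {"<field>": "<text>"}}
        return {"query": {"match": {field: text}}}
    # Expanded form, needed to pass extra parameters such as fuzziness.
    return {"query": {"match": {field: {"query": text, "fuzziness": fuzziness}}}}


# Same body as the request above.
print(json.dumps(match_query("name", "brave"), indent=2))

# Fuzzy variant: tolerates small typos in the search term.
print(json.dumps(match_query("name", "brav", fuzziness="AUTO"), indent=2))
```

The resulting dictionary can be sent as the body of GET books/_search or passed to a language client's search call.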

Next steps

Now that Elasticsearch is up and running and you’ve learned the basics, you’ll probably want to test out larger datasets, or index your own data.

Learn more about search queries

- Search your data: learn about exact value search, full-text search, vector search, and more, using the search API.

Add more data

- Learn how to install sample data using Kibana. This is a quick way to test out Elasticsearch on larger workloads.

- Learn how to use the upload data UI in Kibana to add your own CSV, TSV, or JSON files.

- Use the bulk API to ingest your own datasets to Elasticsearch.

Elasticsearch programming language clients

- Check out our client library to work with your Elasticsearch instance in your preferred programming language.

- If you’re using Python, check out Elastic Search Labs for a range of examples that use the Elasticsearch Python client. This is the best place to explore AI-powered search use cases, such as working with embeddings, vector search, and retrieval augmented generation (RAG).

- This extensive, hands-on tutorial walks you through building a complete search solution with Elasticsearch, from the ground up.

- elasticsearch-labs contains a range of executable Python notebooks and example apps.