Introduction


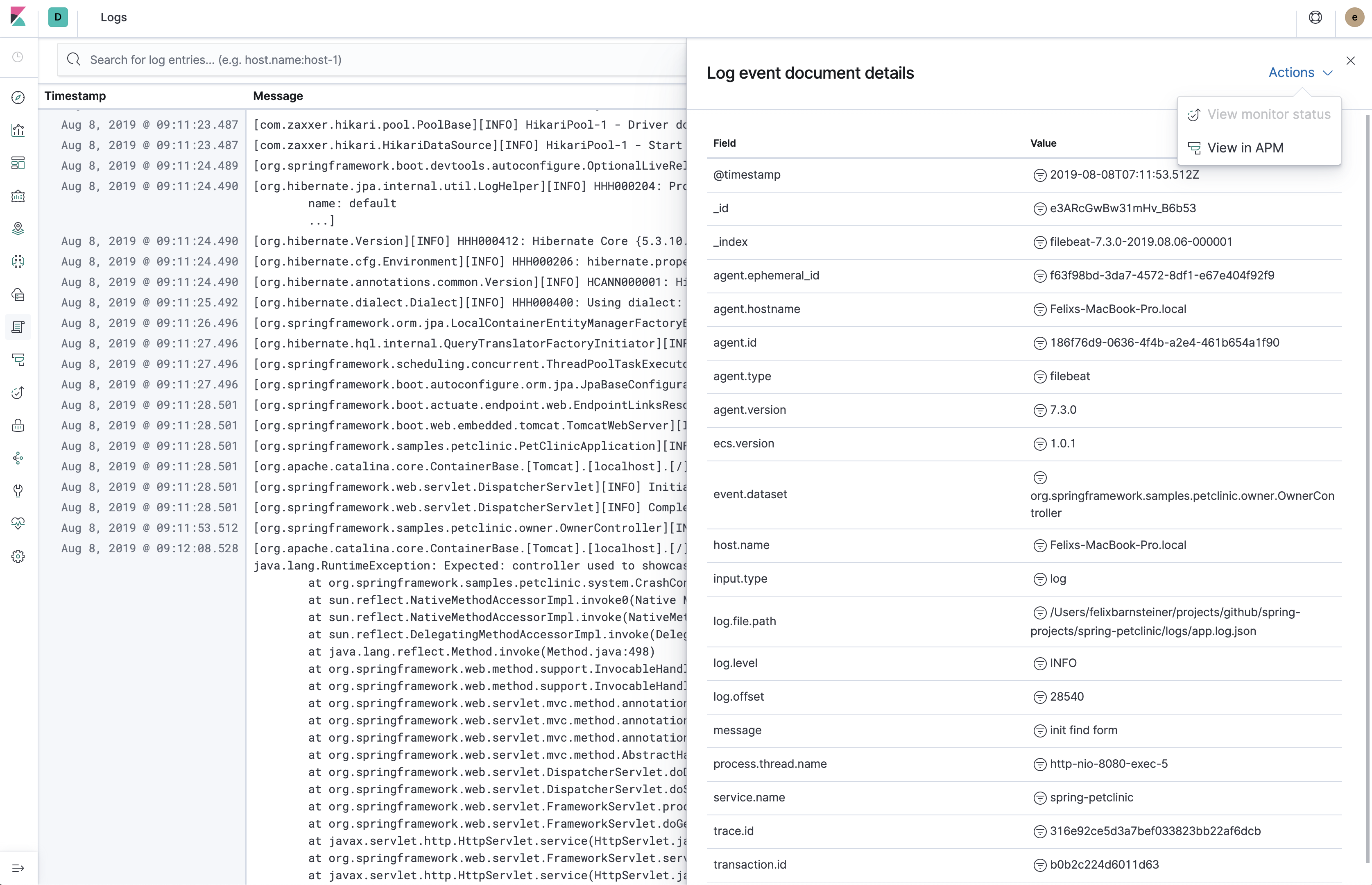

Centralized application logging with the Elastic Stack made easy.

What is ECS?

Elastic Common Schema (ECS) defines a common set of fields for ingesting data into Elasticsearch. For more information about ECS, visit the ECS Reference Documentation.

What is ECS logging?

ECS loggers are plugins for your favorite logging library. They make it easy to format your logs into ECS-compatible JSON. For example:

```json
{"@timestamp":"2019-08-06T12:09:12.375Z", "log.level": "INFO", "message":"Tomcat started on port(s): 8080 (http) with context path ''", "service.name":"spring-petclinic","process.thread.name":"restartedMain","log.logger":"org.springframework.boot.web.embedded.tomcat.TomcatWebServer"}
{"@timestamp":"2019-08-06T12:09:12.379Z", "log.level": "INFO", "message":"Started PetClinicApplication in 7.095 seconds (JVM running for 9.082)", "service.name":"spring-petclinic","process.thread.name":"restartedMain","log.logger":"org.springframework.samples.petclinic.PetClinicApplication"}
{"@timestamp":"2019-08-06T14:08:40.199Z", "log.level":"DEBUG", "message":"init find form", "service.name":"spring-petclinic","process.thread.name":"http-nio-8080-exec-8","log.logger":"org.springframework.samples.petclinic.owner.OwnerController","transaction.id":"28b7fb8d5aba51f1","trace.id":"2869b25b5469590610fea49ac04af7da"}
```
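To illustrate what an ECS logger does under the hood, here is a minimal sketch built only on Python's standard `logging` module. `EcsJsonFormatter` is a hypothetical name, not the API of any official ECS logger, and it covers only a handful of fields. It writes `@timestamp`, `log.level` and `message` first, as in the example output above, and emits one JSON object per line.

```python
import json
import logging
from datetime import datetime, timezone


class EcsJsonFormatter(logging.Formatter):
    """Hypothetical minimal formatter emitting one ECS-style JSON object per line."""

    def __init__(self, service_name: str):
        super().__init__()
        self.service_name = service_name

    def format(self, record: logging.LogRecord) -> str:
        # record.created is a POSIX timestamp; render it as UTC ISO 8601 with milliseconds.
        ts = datetime.fromtimestamp(record.created, tz=timezone.utc)
        doc = {
            # Dicts keep insertion order, so these three fields are serialized first.
            "@timestamp": ts.isoformat(timespec="milliseconds").replace("+00:00", "Z"),
            "log.level": record.levelname,
            "message": record.getMessage(),
            "service.name": self.service_name,
            "process.thread.name": record.threadName,
            "log.logger": record.name,
        }
        return json.dumps(doc)


if __name__ == "__main__":
    handler = logging.StreamHandler()
    handler.setFormatter(EcsJsonFormatter(service_name="my-service"))
    logger = logging.getLogger("com.example.App")
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    logger.info("Application started on port %d", 8080)
```

Keeping the field order fixed is what makes the output readable in a terminal; everything after `message` is context that a tool such as Kibana can filter on.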

Get started

Refer to the installation instructions of the individual loggers.

Why ECS logging?

- Simplicity: no manual parsing

  Logs arrive pre-formatted, pre-enriched and ready to add value, making problems quicker and easier to identify. No more tedious grok parsing that has to be customized for every application.

- Decently human-readable JSON structure

  The first three fields are `@timestamp`, `log.level` and `message`. This lets you easily read the logs in a terminal without needing a tool that converts the logs to plain text.

- Enjoy the benefits of a common schema

  Use the Kibana Logs app without additional configuration.

  Using a common schema across different services and teams makes it possible to create reusable dashboards and avoids mapping explosions.

- APM log correlation

  If you are using an Elastic APM agent, you can leverage the log correlation feature without any additional configuration. This lets you jump from the span timeline in the APM UI to the Logs app, showing only the logs that belong to the corresponding request. Conversely, you can also jump from a log line in the Logs UI to the span timeline of the APM UI.
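Log correlation works because every log line written while a traced request is active carries the same `trace.id` (and `transaction.id`) as the APM data, as in the third example line at the top of this page. As a rough sketch of the idea only, not of how Kibana implements it, the hypothetical helper `logs_for_trace` below selects the ECS JSON lines that belong to one trace. It assumes dotted top-level keys such as `"trace.id"`, matching the example output.

```python
import json
from typing import Iterable, List


def logs_for_trace(lines: Iterable[str], trace_id: str) -> List[dict]:
    """Return parsed ECS log events whose trace.id equals trace_id (hypothetical helper)."""
    matches = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines between events
        event = json.loads(line)
        # Assumes dotted top-level keys ("trace.id"), as in the example output.
        if event.get("trace.id") == trace_id:
            matches.append(event)
    return matches


sample = [
    '{"@timestamp":"2019-08-06T12:09:12.379Z","log.level":"INFO","message":"Started PetClinicApplication"}',
    '{"@timestamp":"2019-08-06T14:08:40.199Z","log.level":"DEBUG","message":"init find form",'
    '"transaction.id":"28b7fb8d5aba51f1","trace.id":"2869b25b5469590610fea49ac04af7da"}',
]
for event in logs_for_trace(sample, "2869b25b5469590610fea49ac04af7da"):
    print(event["message"])  # prints "init find form"
```

The startup line has no `trace.id` because it was not logged inside a traced request, so it is excluded.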

Additional advantages when using in combination with Filebeat

We recommend shipping the logs with Filebeat. Depending on the way the application is deployed, you may log to a log file or to stdout (for example, in Kubernetes).

Here are a few benefits of this approach over sending logs directly from the application to Elasticsearch:

- Resilient in case of outages

  Guaranteed at-least-once delivery without buffering within the application, thus no risk of out-of-memory errors or lost events. There's also the option to use either the JSON logs or plain-text logs as a fallback.

- Loose coupling

  The application does not need to know the details of the logging backend (URI, credentials, etc.). You can also leverage alternative Filebeat outputs, like Logstash, Kafka or Redis.

- Index lifecycle management

  Leverage Filebeat's default index lifecycle management settings. This is much more efficient than using daily indices.

- Efficient Elasticsearch mappings

  Leverage Filebeat's default ECS-compatible index template.

Field mapping

Default fields

These fields are populated by the ECS loggers by default. Some of them, such as the `log.origin.*` fields, may have to be explicitly enabled. Others, such as `process.thread.name`, are not applicable to all languages. Refer to the documentation of the individual loggers for more information.

| ECS field | Description |
|---|---|
| `@timestamp` | The timestamp of the log event. |
| `log.level` | The level or severity of the log event. |
| `log.logger` | The name of the logger inside an application. |
| `log.origin.file.name` | The name of the file containing the source code which originated the log event. |
| `log.origin.file.line` | The line number of the file containing the source code which originated the log event. |
| `log.origin.function` | The name of the function or method which originated the log event. |
| `message` | The log message. |
| `error.type` | Only present for logs that contain an exception or error. The type or class of the error if this log event contains an exception. |
| `error.message` | Only present for logs that contain an exception or error. The message of the exception or error. |
| `error.stack_trace` | Only present for logs that contain an exception or error. The full stack trace of the exception or error as a raw string. |
| `process.thread.name` | The name of the thread the event has been logged from. |
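As a sketch of how a logger might fill the error and origin fields described above, the hypothetical function `ecs_extra_fields` below maps a Python `logging.LogRecord` onto `error.*` and `log.origin.*`. Origin fields are opt-in here to mirror loggers that require them to be explicitly enabled; the exact field set and defaults of each real logger may differ.

```python
import logging
import traceback
from typing import Any, Dict


def ecs_extra_fields(record: logging.LogRecord, include_origin: bool = False) -> Dict[str, Any]:
    """Map a LogRecord to ECS error.* and, optionally, log.origin.* fields (hypothetical helper)."""
    fields: Dict[str, Any] = {}
    if include_origin:
        # Source location of the logging call, taken from the LogRecord.
        fields["log.origin.file.name"] = record.filename
        fields["log.origin.file.line"] = record.lineno
        fields["log.origin.function"] = record.funcName
    if record.exc_info and record.exc_info[0] is not None:
        exc_type, exc_value, exc_tb = record.exc_info
        # Error fields are only added when the event carries an exception.
        fields["error.type"] = exc_type.__name__
        fields["error.message"] = str(exc_value)
        fields["error.stack_trace"] = "".join(
            traceback.format_exception(exc_type, exc_value, exc_tb)
        )
    return fields
```

A formatter would merge the returned dict into each event before serializing it, so events without an exception simply carry no `error.*` keys.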

Configurable fields

Refer to the documentation of the individual loggers on how to set these fields.

| ECS field | Description |
|---|---|
| `service.name` | Helps to filter the logs by service. |
| `service.version` | Helps to filter the logs by service version. |
| `service.environment` | Helps to filter the logs by environment. |
| `service.node.name` | Allows two nodes of the same service on the same host to be differentiated. |
| `event.dataset` | Enables log rate anomaly detection. |
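Each official logger has its own way to set these fields, so check its documentation. Purely as an illustration with Python's standard `logging` module, a formatter can attach static service metadata to every event. `ServiceFieldsFormatter` and its parameters are hypothetical, timestamps are omitted for brevity, and defaulting `event.dataset` to the service name is an assumption of this sketch.

```python
import json
import logging
from typing import Dict, Optional


class ServiceFieldsFormatter(logging.Formatter):
    """Hypothetical formatter that adds static service.* and event.dataset fields."""

    def __init__(self, name: str, version: Optional[str] = None,
                 environment: Optional[str] = None, node_name: Optional[str] = None,
                 dataset: Optional[str] = None):
        super().__init__()
        static = {
            "service.name": name,
            "service.version": version,
            "service.environment": environment,
            "service.node.name": node_name,
            # Assumption for this sketch: fall back to the service name.
            "event.dataset": dataset if dataset is not None else name,
        }
        # Drop unset values so they do not appear as nulls in the output.
        self.static: Dict[str, str] = {k: v for k, v in static.items() if v is not None}

    def format(self, record: logging.LogRecord) -> str:
        doc = {"log.level": record.levelname, "message": record.getMessage()}
        doc.update(self.static)  # the same static fields on every event
        return json.dumps(doc)
```

Because these values do not change at runtime, computing them once in the constructor keeps per-event formatting cheap.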

Custom fields

Most loggers allow you to add custom fields. This includes both static and dynamic ones. Examples of dynamic fields are logged structured objects, or fields from a thread-local context, such as MDC or ThreadContext.

When adding custom fields, we recommend using existing ECS fields for these custom values. If there is no appropriate ECS field, consider prefixing your fields with `labels.`, as in `labels.foo`, for simple key/value pairs. For nested structures, consider prefixing with `custom.`. This approach protects against conflicts in case ECS later adds the same fields but with a different mapping.
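The naming recommendation above can be expressed as a small routing rule. The hypothetical helper `place_custom_fields` below is a sketch, not part of any ECS logger: it keeps keys that are known ECS fields (the caller supplies that set), puts simple values under `labels.`, and puts nested structures under `custom.`. Stringifying label values is an assumption based on ECS mapping `labels` values as keywords.

```python
from typing import Any, Dict, Set


def place_custom_fields(custom: Dict[str, Any], ecs_fields: Set[str]) -> Dict[str, Any]:
    """Route custom values to an ECS field, labels.*, or custom.* (hypothetical helper)."""
    out: Dict[str, Any] = {}
    for key, value in custom.items():
        if key in ecs_fields:
            out[key] = value  # an appropriate ECS field exists, so use it directly
        elif isinstance(value, (dict, list)):
            out[f"custom.{key}"] = value  # nested structure goes under custom.
        else:
            # Simple key/value pair; stringified because labels are mapped as keywords.
            out[f"labels.{key}"] = str(value)
    return out


known = {"user.id"}  # in practice, the ECS field names relevant to your application
print(place_custom_fields({"user.id": "42", "tenant": "acme", "cart": {"items": 3}}, known))
```

Checking against existing ECS fields first means a value such as a user ID lands where dashboards and the Logs app already expect it.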