It is time to say goodbye: This version of Elastic Cloud Enterprise has reached end-of-life (EOL) and is no longer supported.

The documentation for this version is no longer being maintained. If you are running this version, we strongly advise you to upgrade. For the latest information, see the current release documentation.

Configure Beats and Logstash with Cloud ID

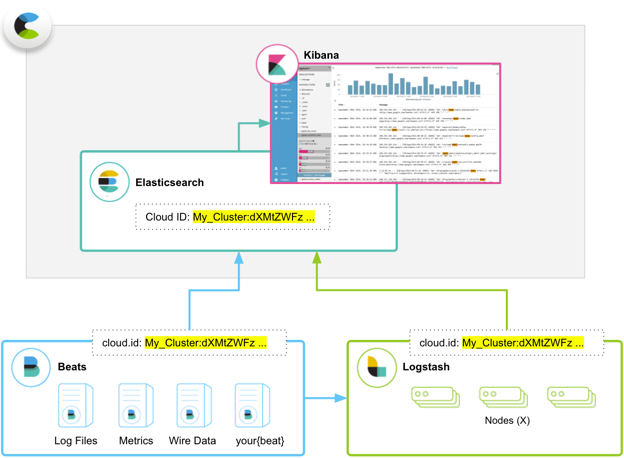

The Cloud ID reduces the number of steps required to start sending data from Beats or Logstash to your hosted cluster on Elastic Cloud Enterprise. Because we made it easier to send data, you can start exploring visualizations in Kibana on Elastic Cloud Enterprise that much more quickly.

The Cloud ID works by assigning a unique ID to your hosted Elasticsearch cluster on Elastic Cloud Enterprise. All clusters that support the Cloud ID automatically get one. Clusters running version 5.x and later are all supported, including clusters that existed before we introduced the Cloud ID.

You include your Cloud ID along with your user credentials when you run Beats or Logstash locally. Elastic Cloud Enterprise then handles the remaining connection details and sends the data securely to your hosted cluster.
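To make the "remaining connection details" more concrete, the following sketch splits a Cloud ID into its parts. It assumes the commonly described layout, a label followed by a colon and a base64 string that decodes to a host name and two cluster identifiers separated by `$`. The function name `decode_cloud_id` and the sample values are made up for illustration; a real Cloud ID from your cluster may carry additional details, such as a port.

```shell
#!/usr/bin/env bash
# Sketch: split a Cloud ID into its parts.
# ASSUMPTION: the format is "<label>:<base64>", where the base64 payload
# decodes to "<host>$<elasticsearch-id>$<kibana-id>".

decode_cloud_id() {
  local cloud_id="$1"
  local label="${cloud_id%%:*}"     # text before the first colon
  local payload="${cloud_id#*:}"    # text after the first colon
  local decoded host es_id kibana_id
  decoded="$(printf '%s' "$payload" | base64 --decode)"
  # Split the decoded payload on "$" into its three fields.
  IFS='$' read -r host es_id kibana_id <<< "$decoded"
  printf 'label=%s\nhost=%s\nelasticsearch_id=%s\nkibana_id=%s\n' \
    "$label" "$host" "$es_id" "$kibana_id"
}

# Made-up sample, built at run time so the encoding is always consistent.
SAMPLE_PAYLOAD="$(printf '%s' 'ece.example.com$abc123$def456' | base64)"
SAMPLE_CLOUD_ID="My_Cluster:${SAMPLE_PAYLOAD}"

decode_cloud_id "$SAMPLE_CLOUD_ID"
```

For the made-up sample, this prints the label `My_Cluster`, the host `ece.example.com`, and the two placeholder identifiers. Beats and Logstash perform this kind of decoding for you, so you never need to do it by hand.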

What are Beats and Logstash?

Not sure why you need Beats or Logstash? Here’s what they do:

- Beats is our open source platform for single-purpose data shippers. The purpose of Beats is to help you gather data from different sources and to centralize the data by shipping it to Elasticsearch. Beats install as lightweight agents and ship data from hundreds or thousands of machines to your hosted Elasticsearch cluster on Elastic Cloud Enterprise. If you want more processing muscle, Beats can also ship to Logstash for transformation and parsing before the data gets stored in Elasticsearch.

- Logstash is an open source, server-side data processing pipeline that ingests data from a multitude of sources simultaneously, transforms it, and then sends it to your favorite place where you stash things, here your hosted Elasticsearch cluster on Elastic Cloud Enterprise. Logstash supports a variety of inputs that pull in events from a multitude of common sources, all at the same time. You can easily ingest from your logs, metrics, web applications, data stores, and various AWS services, all in continuous, streaming fashion.

Before You Begin

To use the Cloud ID, you need:

- Beats or Logstash version 6.0 or later, installed locally wherever you want to send data from.

- An Elasticsearch cluster on version 5.x or later to send data to.

-

To configure Beats or Logstash, you need:

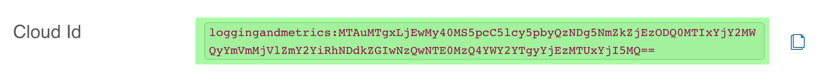

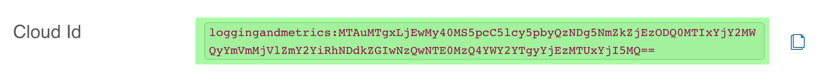

- The unique Cloud ID for your cluster, shown when you create your cluster or available from the cluster Manage page.

-

A user ID and password that has permission to send data to your cluster.

In our examples, we use the

elasticsuperuser that every version 5.x cluster comes with. The password for theelasticuser is provided when you create a cluster (and can also be reset if you forget it). On a production system, you should adapt these examples by creating a user that can write to and access only the minimally required indices.
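As a rough illustration of the production advice above, the following sketch prepares a write-only role and user for Metricbeat indices. Everything named here is an assumption for illustration: the role name `metricbeat_writer`, the user name `metricbeat_writer_user`, the `metricbeat-*` index pattern, and the `ES_URL` placeholder. The `_xpack/security` paths are the X-Pack security API paths used by version 6.x clusters. The script only prints the requests, so nothing changes on your cluster until you review and run them yourself.

```shell
#!/usr/bin/env bash
# Sketch: a write-only role and user instead of the elastic superuser.
# ASSUMPTIONS: role name, user name, index pattern, and ES_URL are
# placeholders chosen for this example.

ES_URL="${ES_URL:-https://YOUR_CLUSTER_ENDPOINT}"   # hypothetical endpoint

role_body() {
  # Privileges for indexing events and managing the index template only.
  cat <<'JSON'
{
  "cluster": ["monitor", "manage_index_templates"],
  "indices": [
    { "names": ["metricbeat-*"], "privileges": ["write", "create_index"] }
  ]
}
JSON
}

user_body() {
  # $1: password for the new user (must not contain double quotes).
  printf '{ "password": "%s", "roles": ["metricbeat_writer"] }\n' "$1"
}

# Print the requests for review instead of sending them.
echo "curl -u elastic -H 'Content-Type: application/json' -X POST '${ES_URL}/_xpack/security/role/metricbeat_writer' -d '$(role_body)'"
echo "curl -u elastic -H 'Content-Type: application/json' -X POST '${ES_URL}/_xpack/security/user/metricbeat_writer_user' -d '$(user_body 'CHANGE_ME')'"
```

This role covers shipping data only. Loading dashboards and index patterns during setup most likely needs additional Kibana-related privileges, so you may still want to run the initial setup with a more privileged user.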

Configure Beats with Your Cloud ID

The following example shows how you can send operational data with the Cloud ID from Metricbeat to Elastic Cloud Enterprise. Any of the available Beats will work, but we had to pick one for this example.

To get started with Metricbeat and Elastic Cloud Enterprise:

- Log in to the Cloud UI.

- Create a new cluster and copy down the password for the elastic user and the Cloud ID information.

  Alternatively, you can use an existing cluster. The unique Cloud ID is shown on the cluster Manage page.

- Set up Metricbeat version 6.4.0:

  - Download and unpack Metricbeat.

  - Modify the metricbeat.yml configuration file to add your user name and password:

    cloud.auth: "elastic:MY_PASSWORD"

    You can also include the cloud.id parameter right in the configuration file instead of specifying it on the command line when you run Metricbeat in the next step.

  - In the Metricbeat install directory, run:

    ./metricbeat --setup -e -E 'cloud.id=My_Cluster:MY_CLOUD_ID'

    To adapt these configuration examples, make sure you replace the values for cloud.id and cloud.auth with your own information.

- Open Kibana from the cluster Overview page and explore!

Metricbeat creates an index pattern in Kibana with defined fields, searches, visualizations, and dashboards that you can start exploring. Look for information related to system metrics, such as CPU usage, utilization rates for memory and disk, and details for processes.

If you want to learn more about how Metricbeat works with a Cloud ID, see Configure the output for the Elastic Cloud.
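If you prefer to keep both settings in the configuration file, a small helper along these lines can append them. This is a minimal sketch: the function name `append_cloud_settings` is made up, the values are the same placeholders used in the steps above, and the demo writes to a temporary file rather than to a real metricbeat.yml.

```shell
#!/usr/bin/env bash
# Sketch: append the Cloud ID settings to a Metricbeat configuration file.
# ASSUMPTION: the values are placeholders; replace them with your own.
# Values containing double quotes would need escaping, which is not handled.

append_cloud_settings() {
  local config_file="$1" cloud_id="$2" cloud_auth="$3"
  cat >> "$config_file" <<EOF
cloud.id: "$cloud_id"
cloud.auth: "$cloud_auth"
EOF
}

# Demo against a temporary file so no real configuration is touched.
DEMO_CONFIG="$(mktemp)"
append_cloud_settings "$DEMO_CONFIG" 'My_Cluster:MY_CLOUD_ID' 'elastic:MY_PASSWORD'
cat "$DEMO_CONFIG"

# With both settings in the file, the run command needs no -E override:
#   ./metricbeat --setup -e
```

Because the file then contains a password, consider restricting its permissions to the user that runs Metricbeat.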

Configure Logstash with Your Cloud ID

The following example shows how you can send operational data with the Cloud ID from Logstash to an Elasticsearch cluster hosted on Elastic Cloud Enterprise.

Cloud ID applies only when a Logstash module is enabled; otherwise, specifying Cloud ID has no effect. Cloud ID applies to data that gets sent via the module, to runtime metrics sent via X-Pack monitoring, and to the endpoint used by X-Pack central management features of Logstash, unless explicit overrides to X-Pack settings are specified in logstash.yml.

To get started with Logstash and Elastic Cloud Enterprise:

- Log in to the Cloud UI.

- Create a new cluster and copy down the password for the elastic user and the Cloud ID information.

  Alternatively, you can use an existing cluster. The unique Cloud ID is shown on the cluster Manage page.

- Set up Logstash version 6.4.0:

  - Download and unpack Logstash.

  - Modify the logstash.yml configuration file to add your user name and password:

    cloud.auth: "elastic:YOUR_PASSWORD"

    You can also include the cloud.id parameter right in the configuration file instead of specifying it on the command line when you run Logstash in the next step.

  - In the Logstash install directory, run the module. For example:

    bin/logstash --modules netflow -M "netflow.var.input.udp.port=3555" --cloud.id My_Cluster:MY_CLOUD_ID

    To adapt these configuration examples, make sure you replace the values for cloud.id and cloud.auth with your own information.

- Open Kibana from the cluster Overview page and explore!

Logstash creates an index pattern in Kibana with defined fields, searches, visualizations, and dashboards for events that you can explore.

If you want to learn more about how Logstash works with a Cloud ID, see Using Elastic Cloud.
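When you adapt the module command for your own cluster, it can help to assemble it from variables and review it before running it. The following is a sketch only: the variable names `CLOUD_ID` and `NETFLOW_UDP_PORT` are made up for this example, the defaults are the placeholders from the steps above, and the command is printed rather than executed.

```shell
#!/usr/bin/env bash
# Sketch: assemble the Logstash module command from variables.
# ASSUMPTION: variable names and defaults are placeholders for illustration.

CLOUD_ID="${CLOUD_ID:-My_Cluster:MY_CLOUD_ID}"
NETFLOW_UDP_PORT="${NETFLOW_UDP_PORT:-3555}"

# Keep each argument as a separate array element so quoting stays intact.
logstash_cmd=(
  bin/logstash
  --modules netflow
  -M "netflow.var.input.udp.port=${NETFLOW_UDP_PORT}"
  --cloud.id "$CLOUD_ID"
)

# Print the command for review. To run it, call "${logstash_cmd[@]}"
# from the Logstash install directory once the values are correct.
printf '%s ' "${logstash_cmd[@]}"
printf '\n'
```

Keeping the password in logstash.yml, as shown in the steps above, means it does not appear in your shell history or in the process list.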